How to Write LoRA Code from Scratch: A Tutorial

LoRA (Low-Rank Adaptation) is a popular technique for fine-tuning large language models (LLMs). It was originally proposed by Microsoft researchers in the paper "LoRA: Low-Rank Adaptation of Large Language Models". Instead of adjusting all parameters of the neural network, LoRA updates only a small number of low-rank matrices, which significantly reduces the amount of computation required to train the model.

Because LoRA's fine-tuning quality is comparable to full-model fine-tuning, many people regard it as a go-to fine-tuning tool. Since its release, many people have been curious about the technique and have wanted to write the code themselves to better understand the research. A lack of proper documentation used to make this difficult, but tutorials are now available to help.

This tutorial is by Sebastian Raschka, a well-known machine learning and AI researcher. He says that among the various effective LLM fine-tuning methods, LoRA is still his first choice. He therefore wrote the blog post "Code LoRA From Scratch", which builds LoRA from scratch; in his view, this is a good way to learn.

The post introduces low-rank adaptation (LoRA) by writing the code from scratch. In the experiment, Sebastian fine-tunes a DistilBERT model and applies it to a classification task.

In the comparison between LoRA and traditional fine-tuning, the LoRA method reached a test accuracy of 92.39%, better than fine-tuning only the last few layers of the model (86.22% test accuracy). In this experiment, LoRA clearly outperformed training only the final layers. Let's look at how Sebastian achieved this.

Writing LoRA from scratch

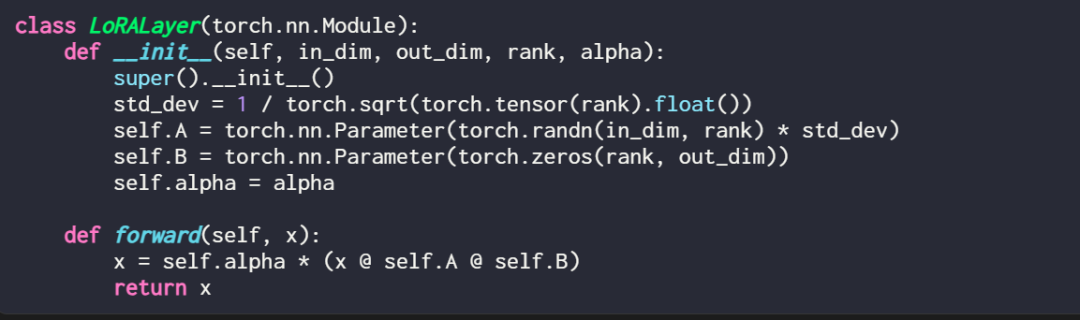

A LoRA layer can be expressed in code as follows:

Here, in_dim is the input dimension of the layer you want to modify with LoRA, and out_dim is the corresponding output dimension. The code also adds a hyperparameter, the scaling factor alpha. Higher alpha values mean larger adjustments to the model's behavior, and lower values mean smaller ones. In addition, the article initializes matrix A with small values drawn from a random distribution and initializes matrix B with zeros.
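The description above can be sketched in PyTorch roughly as follows. This is a minimal sketch rather than the article's exact code: the `rank` argument, the `1/sqrt(rank)` scaling used for the random initialization of A, and the example sizes (768, rank 8, alpha 16) are assumptions made for illustration.

```python
import math

import torch


class LoRALayer(torch.nn.Module):
    """Low-rank update that computes alpha * (x @ A @ B)."""

    def __init__(self, in_dim: int, out_dim: int, rank: int, alpha: float):
        super().__init__()
        # A starts with small random values (the 1/sqrt(rank) scale is an assumption).
        std_dev = 1.0 / math.sqrt(rank)
        self.A = torch.nn.Parameter(torch.randn(in_dim, rank) * std_dev)
        # B starts at zero, so the LoRA update is exactly zero before training.
        self.B = torch.nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.alpha * (x @ self.A @ self.B)


torch.manual_seed(0)
layer = LoRALayer(in_dim=768, out_dim=768, rank=8, alpha=16)
x = torch.randn(4, 768)
out = layer(x)
print(out.shape)  # torch.Size([4, 768])
```

Because B is initialized to zeros, the layer's output is all zeros until training changes B, so adding it to an existing layer does not alter the model's initial behavior.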

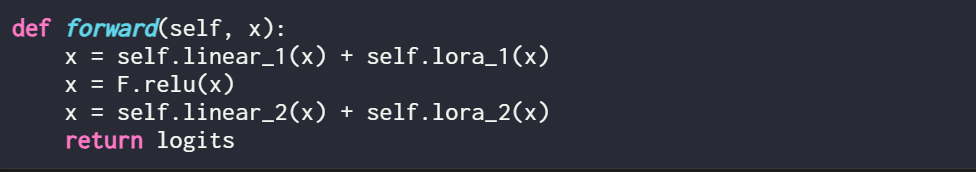

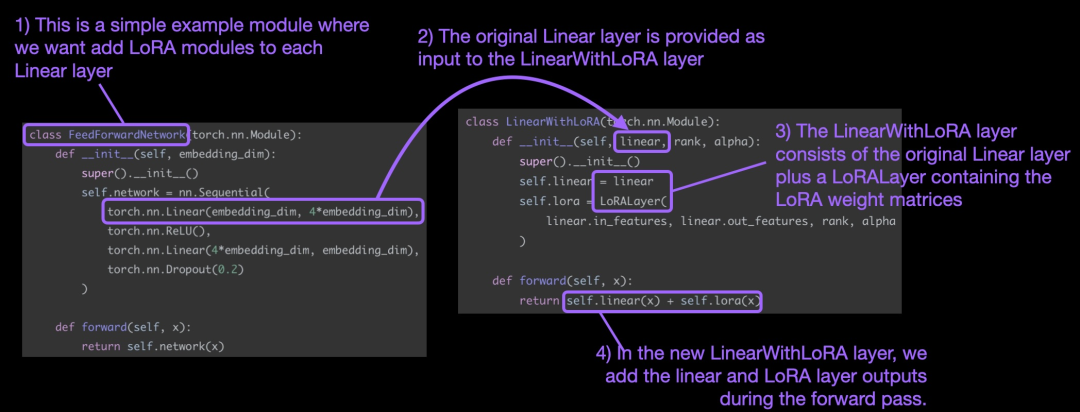

It is worth mentioning that LoRA is usually applied to the linear (feedforward) layers of a neural network. For example, for a simple PyTorch model or module with two linear layers (such as the feedforward module of a Transformer block), the forward method can be expressed as:
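A module of this kind might look like the sketch below. The class name `FeedForward`, the layer names, the ReLU activation, and the dimensions are all hypothetical choices for illustration.

```python
import torch


class FeedForward(torch.nn.Module):
    """Toy two-layer feedforward module (names and sizes are illustrative)."""

    def __init__(self, in_dim: int = 16, hidden_dim: int = 32, out_dim: int = 4):
        super().__init__()
        self.linear_1 = torch.nn.Linear(in_dim, hidden_dim)
        self.linear_2 = torch.nn.Linear(hidden_dim, out_dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.linear_1(x)
        x = torch.nn.functional.relu(x)
        x = self.linear_2(x)
        return x


model = FeedForward()
logits = model(torch.randn(2, 16))
print(logits.shape)  # torch.Size([2, 4])
```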

When using LoRA, the LoRA updates are usually added to the outputs of these linear layers, with code as follows:
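One way to picture this is the sketch below, in which each linear layer's output has a LoRA update added to it. The module and attribute names, the ReLU activation, and the sizes are assumptions; the `LoRALayer` here is a compact illustrative version, not necessarily the article's.

```python
import torch


class LoRALayer(torch.nn.Module):
    """Compact illustrative LoRA layer: alpha * (x @ A @ B)."""

    def __init__(self, in_dim, out_dim, rank, alpha):
        super().__init__()
        self.A = torch.nn.Parameter(torch.randn(in_dim, rank) / rank**0.5)
        self.B = torch.nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x):
        return self.alpha * (x @ self.A @ self.B)


class FeedForwardWithLoRA(torch.nn.Module):
    """Toy two-layer module whose linear outputs each receive a LoRA update."""

    def __init__(self, in_dim=16, hidden_dim=32, out_dim=4, rank=4, alpha=8):
        super().__init__()
        self.linear_1 = torch.nn.Linear(in_dim, hidden_dim)
        self.linear_2 = torch.nn.Linear(hidden_dim, out_dim)
        self.lora_1 = LoRALayer(in_dim, hidden_dim, rank, alpha)
        self.lora_2 = LoRALayer(hidden_dim, out_dim, rank, alpha)

    def forward(self, x):
        x = self.linear_1(x) + self.lora_1(x)  # original output + LoRA update
        x = torch.nn.functional.relu(x)
        x = self.linear_2(x) + self.lora_2(x)
        return x


torch.manual_seed(0)
model = FeedForwardWithLoRA()
x = torch.randn(2, 16)
out = model(x)

# With B initialized to zeros, the LoRA path contributes nothing yet.
plain = model.linear_2(torch.nn.functional.relu(model.linear_1(x)))
print(torch.allclose(out, plain))  # True
```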

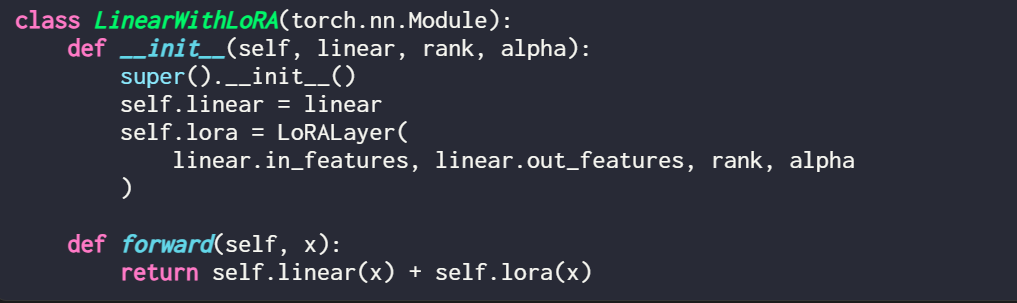

If you want to implement LoRA by modifying an existing PyTorch model, a simple way is to replace each linear layer with a LinearWithLoRA layer:
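A wrapper along these lines could be sketched as follows. Only the names `LinearWithLoRA` and `LoRALayer` come from the article; the constructor arguments, the compact `LoRALayer` definition, and the example sizes are assumptions.

```python
import torch


class LoRALayer(torch.nn.Module):
    """Compact illustrative LoRA layer: alpha * (x @ A @ B)."""

    def __init__(self, in_dim, out_dim, rank, alpha):
        super().__init__()
        self.A = torch.nn.Parameter(torch.randn(in_dim, rank) / rank**0.5)
        self.B = torch.nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x):
        return self.alpha * (x @ self.A @ self.B)


class LinearWithLoRA(torch.nn.Module):
    """Wraps an existing torch.nn.Linear and adds a LoRA update to its output."""

    def __init__(self, linear: torch.nn.Linear, rank: int, alpha: float):
        super().__init__()
        self.linear = linear
        # Read the dimensions from the wrapped layer so they always match.
        self.lora = LoRALayer(linear.in_features, linear.out_features, rank, alpha)

    def forward(self, x):
        return self.linear(x) + self.lora(x)


torch.manual_seed(0)
linear = torch.nn.Linear(16, 8)
wrapped = LinearWithLoRA(linear, rank=4, alpha=8)
x = torch.randn(3, 16)
print(torch.allclose(wrapped(x), linear(x)))  # True
```

Taking the existing layer as an argument keeps its pretrained weights in place, so the wrapper can be dropped into a model without copying parameters.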

A summary of the above concepts is shown in the figure below:

To apply LoRA, the article replaces the existing linear layers in the neural network with LinearWithLoRA layers, each of which combines the original linear layer with a LoRALayer.

How to get started using LoRA for fine-tuning

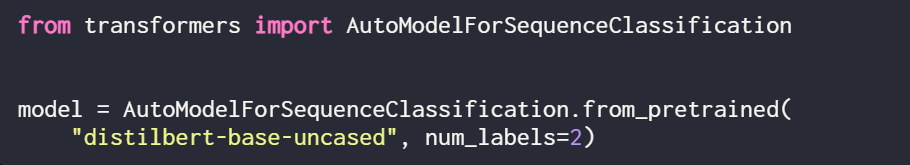

LoRA can be applied to models such as GPT or image-generation models. For simplicity, the article uses a small BERT model (DistilBERT) for text classification.

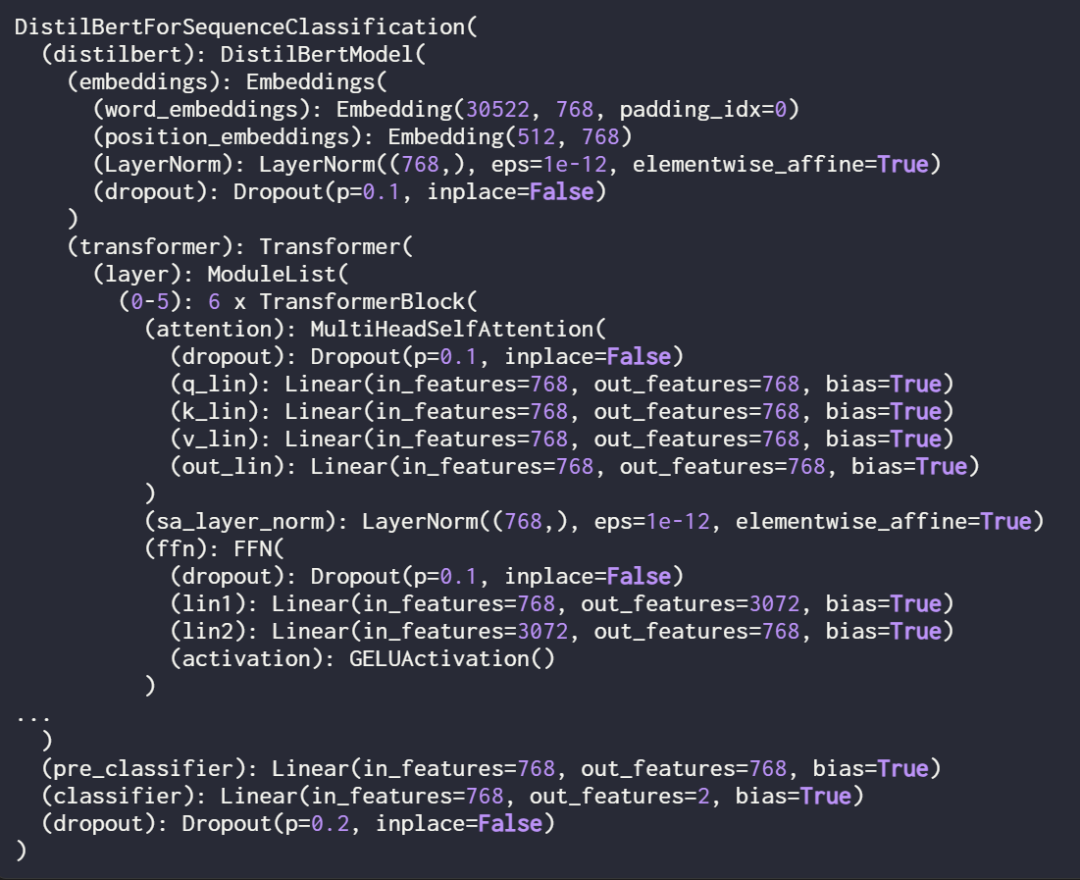

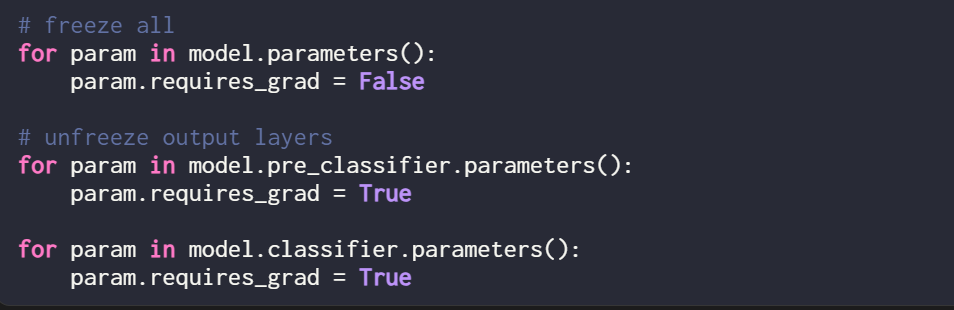

Since the article trains only the new LoRA weights, requires_grad must be set to False for all existing trainable parameters, which freezes the whole model:
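The freezing step can be sketched on a small stand-in model as shown below. The `torch.nn.Sequential` model here is hypothetical and only illustrates the pattern; the article applies the same idea to DistilBERT.

```python
import torch

# Hypothetical stand-in model; the article freezes DistilBERT in the same way.
model = torch.nn.Sequential(
    torch.nn.Linear(16, 32),
    torch.nn.ReLU(),
    torch.nn.Linear(32, 2),
)

# Freeze every existing parameter so the optimizer will not update it.
for param in model.parameters():
    param.requires_grad = False

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
total = sum(p.numel() for p in model.parameters())
print(trainable, total)  # 0 610
```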

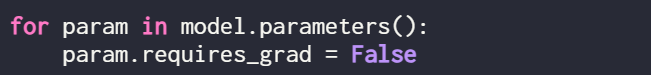

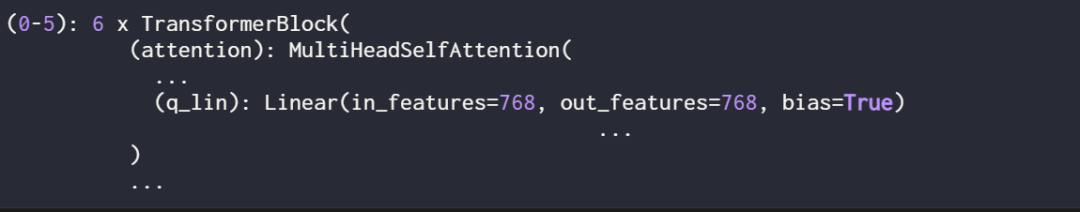

Next, use print(model) to check the structure of the model:

It can be seen from the output that the model consists of 6 transformer layers, including linear layers:

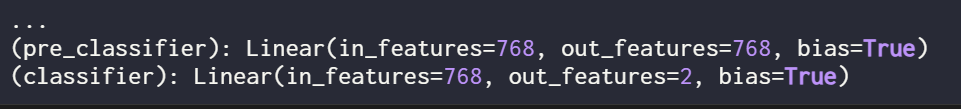

In addition, the model has two linear output layers:

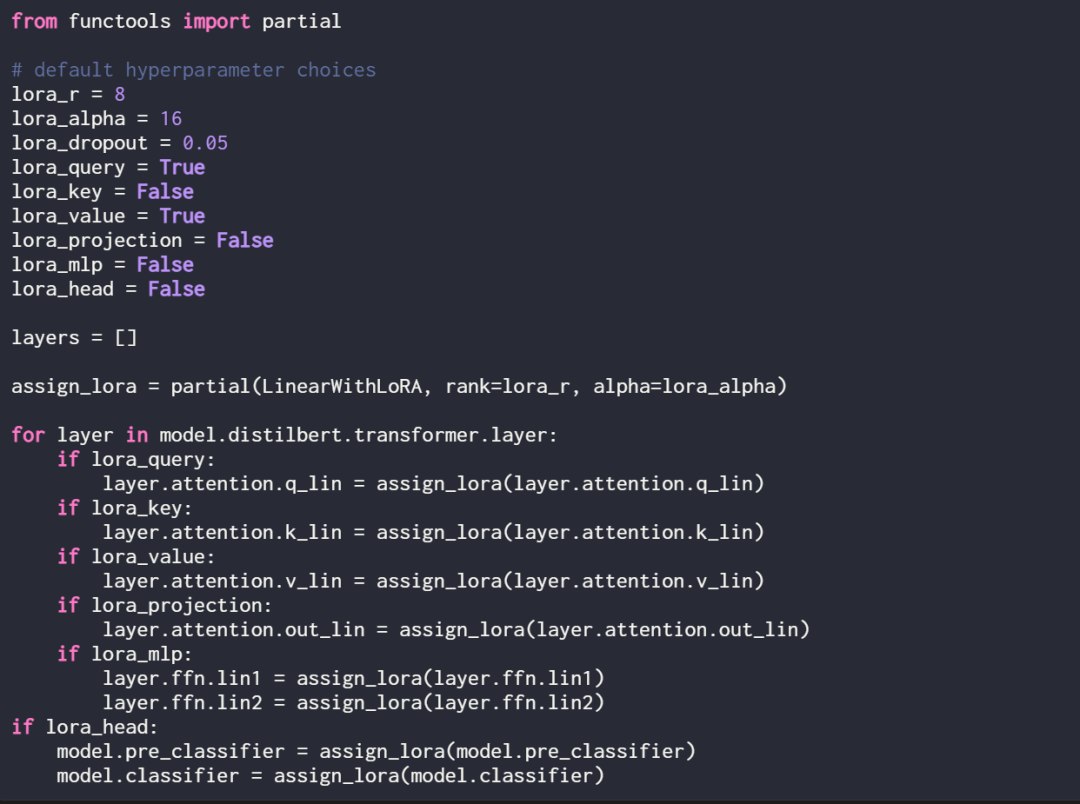

LoRA can be selectively enabled for these linear layers by defining the following assignment function and loop:
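The pattern of an assignment function plus a loop can be sketched on a toy model as follows. Everything specific here is an assumption for illustration: the toy block with `q_lin`, `k_lin`, and `v_lin` attributes, the `lora_query`/`lora_key`/`lora_value` flags, and the rank and alpha values. It is not the article's exact code.

```python
from functools import partial

import torch


class LoRALayer(torch.nn.Module):
    def __init__(self, in_dim, out_dim, rank, alpha):
        super().__init__()
        self.A = torch.nn.Parameter(torch.randn(in_dim, rank) / rank**0.5)
        self.B = torch.nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x):
        return self.alpha * (x @ self.A @ self.B)


class LinearWithLoRA(torch.nn.Module):
    def __init__(self, linear, rank, alpha):
        super().__init__()
        self.linear = linear
        self.lora = LoRALayer(linear.in_features, linear.out_features, rank, alpha)

    def forward(self, x):
        return self.linear(x) + self.lora(x)


class ToyBlock(torch.nn.Module):
    """Hypothetical stand-in for a transformer layer (not real attention)."""

    def __init__(self, dim):
        super().__init__()
        self.q_lin = torch.nn.Linear(dim, dim)
        self.k_lin = torch.nn.Linear(dim, dim)
        self.v_lin = torch.nn.Linear(dim, dim)

    def forward(self, x):
        return self.q_lin(x) + self.k_lin(x) + self.v_lin(x)


class ToyModel(torch.nn.Module):
    def __init__(self, dim=16, num_blocks=2):
        super().__init__()
        self.blocks = torch.nn.ModuleList([ToyBlock(dim) for _ in range(num_blocks)])

    def forward(self, x):
        for block in self.blocks:
            x = block(x)
        return x


model = ToyModel()
for param in model.parameters():  # freeze the original weights first
    param.requires_grad = False

# Illustrative switches: enable LoRA for queries and values only.
lora_query, lora_key, lora_value = True, False, True
assign_lora = partial(LinearWithLoRA, rank=4, alpha=8)

for block in model.blocks:
    if lora_query:
        block.q_lin = assign_lora(block.q_lin)
    if lora_key:
        block.k_lin = assign_lora(block.k_lin)
    if lora_value:
        block.v_lin = assign_lora(block.v_lin)

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(trainable)  # 512
```

Only the newly created A and B matrices are trainable here: 2 blocks, 2 wrapped layers per block, and 4 × (16 + 16) = 128 LoRA parameters per wrapped layer.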

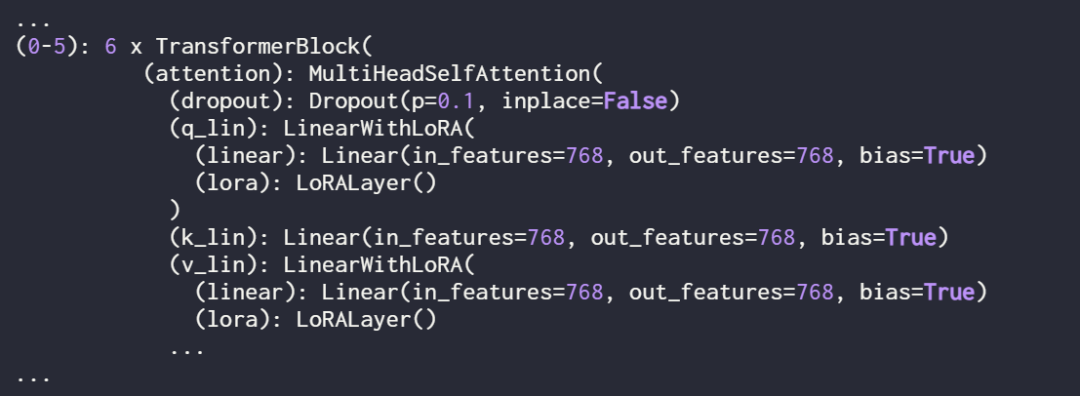

Use print(model) again to check the updated structure:

As you can see above, the Linear layer has been successfully replaced by the LinearWithLoRA layer.

If you train the model using the default hyperparameters shown above, it results in the following performance on the IMDb movie review classification dataset:

- Training accuracy: 92.15%

- Validation accuracy: 89.98%

- Test accuracy: 89.44%

The next section compares these LoRA fine-tuning results with traditional fine-tuning results.

Comparison with traditional fine-tuning methods

In the previous section, LoRA achieved a test accuracy of 89.44% under the default settings. How does this compare to traditional fine-tuning methods?

For comparison, the article ran another experiment that again trains the DistilBERT model but updates only the last 2 layers during training. This is done by freezing all model weights and then unfreezing the two linear output layers:
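The freeze-then-unfreeze pattern can be sketched on a toy classifier as shown below. The model and the attribute names `encoder`, `pre_classifier`, and `classifier` are hypothetical stand-ins for the real model's layers.

```python
import torch


class ToyClassifier(torch.nn.Module):
    """Hypothetical stand-in: an encoder followed by two linear output layers."""

    def __init__(self, dim: int = 16, num_classes: int = 2):
        super().__init__()
        self.encoder = torch.nn.Linear(dim, dim)
        self.pre_classifier = torch.nn.Linear(dim, dim)
        self.classifier = torch.nn.Linear(dim, num_classes)

    def forward(self, x):
        x = torch.relu(self.encoder(x))
        x = torch.relu(self.pre_classifier(x))
        return self.classifier(x)


model = ToyClassifier()

# Step 1: freeze everything.
for param in model.parameters():
    param.requires_grad = False

# Step 2: unfreeze only the two linear output layers.
for param in model.pre_classifier.parameters():
    param.requires_grad = True
for param in model.classifier.parameters():
    param.requires_grad = True

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(trainable)  # 306
```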

The classification performance obtained by training only the last two layers is as follows:

- Training accuracy: 86.68%

- Validation accuracy: 87.26%

- Test accuracy: 86.22%

The results show that LoRA performs better than the traditional approach of fine-tuning only the last two layers while using 4 times fewer parameters. Fine-tuning all layers required updating 450 times more parameters than the LoRA setup but improved test accuracy by only 2%.
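To see where the parameter savings come from, the arithmetic for a single linear layer can be sketched as below. The layer size (768 × 768) and the rank (8) are illustrative assumptions, so the resulting ratio is not meant to reproduce the 4× or 450× figures above, which depend on the full model and on which layers receive LoRA.

```python
def linear_params(in_dim: int, out_dim: int, bias: bool = True) -> int:
    """Number of parameters in a fully trained linear layer."""
    return in_dim * out_dim + (out_dim if bias else 0)


def lora_params(in_dim: int, out_dim: int, rank: int) -> int:
    """Number of parameters in the LoRA matrices A (in_dim x rank) and B (rank x out_dim)."""
    return rank * (in_dim + out_dim)


full = linear_params(768, 768)        # 590592
lora = lora_params(768, 768, rank=8)  # 12288
print(full, lora, full / lora)        # 590592 12288 48.0625
```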

Optimize LoRA configuration

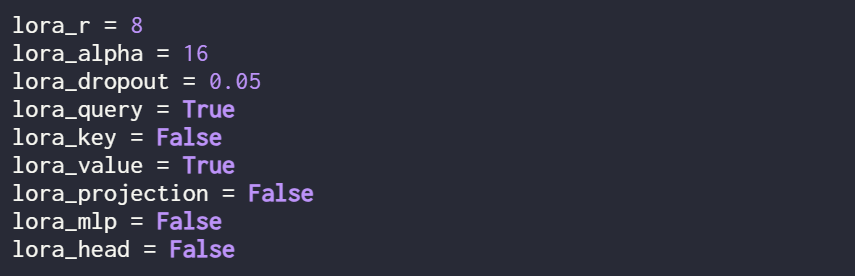

All the LoRA results above were obtained with the default settings, using the following hyperparameters:

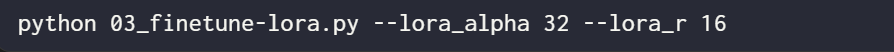

To try different hyperparameter configurations, you can use the following command:

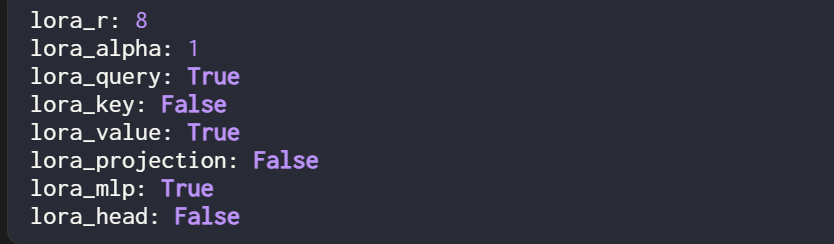

The best hyperparameter configuration found is as follows:

Under this configuration, the result is:

- Validation accuracy: 92.96%

- Test accuracy: 92.39%

Notably, even though the LoRA setup has only a small set of trainable parameters (500k vs. 66M), its accuracy is slightly higher than that obtained with full fine-tuning.

Original link: https://lightning.ai/lightning-ai/studios/code-lora-from-scratch?cnotallow=f5fc72b1f6eeeaf74b648b2aa8aaf8b6
