Accelerating enterprise GenAI innovation end-to-end, NVIDIA NIM microservices have become a highlight for software companies!

Software company Cloudera recently announced a strategic partnership with NVIDIA to accelerate the deployment of generative AI applications. The collaboration integrates NVIDIA's AI microservices into the Cloudera Data Platform (CDP) and is designed to help enterprises build and scale custom large language models (LLMs) on their own data more quickly. The two companies expect the integration to give enterprises better tools for using their data, shorten the development and deployment cycle for AI applications, support more efficient data-driven decisions, and broaden the adoption of AI across industries.

As part of this collaboration, Cloudera plans to use NVIDIA AI Enterprise technology, including NVIDIA NIM (NVIDIA Inference Microservices), to unlock insights from the more than 25 exabytes of data held in CDP. This enterprise data will feed into Cloudera Machine Learning, the company's end-to-end AI workflow service, to drive a new round of generative AI innovation.

Priyank Patel, vice president of AI/ML products at Cloudera, pointed out that a full-stack platform that brings together enterprise data and is optimized for large language models is essential for taking organizations' generative AI applications from pilot to production. Cloudera is currently integrating NVIDIA NIM and CUDA-X microservices into its machine learning platform to help customers turn the potential of AI into business reality.

The collaboration highlights the technical strengths of Cloudera and NVIDIA and reflects the rapidly growing market demand for generative AI applications. By combining their resources and technology, the two companies aim to advance the practical use of AI in enterprises and to offer more efficient, more intelligent solutions.

In addition, by combining the massive data held in CDP with the capabilities of the Cloudera machine learning platform, enterprises can extract more value from their data and achieve more accurate decisions and more efficient business operations. The companies expect this cooperation to bring more intelligence and automation to enterprises and to advance the industry as a whole.

1. Connecting models and data

A key challenge for enterprise AI is connecting foundation models with relevant business data so that they produce accurate, contextual output. NVIDIA's NIM and NeMo Retriever microservices aim to bridge this gap by enabling developers to connect large language models (LLMs) with structured and unstructured enterprise data, ranging from text documents to images and visualizations.

Specifically, Cloudera Machine Learning will provide integrated NIM model-serving capabilities to improve inference performance and enable fault tolerance, low latency, and automatic scaling in hybrid and multi-cloud environments. The addition of NeMo Retriever will simplify the development of retrieval-augmented generation (RAG) applications, which improve the accuracy of generative AI by retrieving relevant data in real time and supplying it to the model as context.

NVIDIA NeMo Retriever is a new service in NVIDIA NeMo, a family of frameworks and tools for building, customizing, and deploying generative AI models. As a semantic retrieval microservice, NeMo Retriever uses NVIDIA-optimized algorithms to help generative AI applications give more accurate answers. Developers using this microservice can connect their AI applications to business data located in various clouds and data centers. This connection not only improves the accuracy of AI applications, but also lets developers process and use enterprise data more flexibly.
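To make the retrieval step concrete, here is a minimal sketch of the general RAG pattern described above. It does not use NeMo Retriever or any NVIDIA API: the documents, function names, and prompt template are all hypothetical, and a simple bag-of-words cosine similarity stands in for the learned embeddings a real semantic retriever would use.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "enterprise" documents; a real deployment would
# query an indexed document store instead.
DOCUMENTS = [
    "Quarterly revenue grew 12 percent, driven by cloud subscriptions.",
    "The support team resolved 95 percent of tickets within 24 hours.",
    "GPU clusters are scheduled for maintenance every second Sunday.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def vectorize(text):
    """Bag-of-words term counts (an assumed stand-in for an embedding model)."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vec = vectorize(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, vectorize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble the augmented prompt that would be sent to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only this context:\n"
        f"{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("How fast are support tickets resolved?", DOCUMENTS))
```

The prompt produced by `build_prompt` is what a generation model would receive; grounding the answer in retrieved text is how RAG aims to reduce inaccurate output. In production the toy similarity function would be replaced by an embedding model and a vector index.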

In summary, microservices such as NVIDIA's NIM and NeMo Retriever give enterprises an effective way to integrate AI models closely with business data and so generate more accurate and useful output.

2. Shortening the path from data to generative AI deployment

The cooperation between NVIDIA and Cloudera lets enterprises use their massive data more efficiently to build customized collaborative assistants and productivity tools. Justin Boitano, vice president of enterprise products at NVIDIA, said: "The integration of NVIDIA NIM microservices with the Cloudera Data Platform provides developers with a more flexible and easier way to deploy large language models, thereby promoting enterprise business transformation."

By simplifying the path from data to generative AI deployment, Cloudera and NVIDIA aim to accelerate enterprise adoption of transformative applications such as coding assistants, chatbots, document summarization tools, and semantic search tools. This collaboration builds on the two companies' previous work on GPU acceleration, which integrated NVIDIA RAPIDS into CDP.

Patel highlighted the business benefits of the expanded collaboration, stating: "In addition to providing customers with powerful generative AI capabilities and performance, the results of this integration will enable enterprises to make more accurate, timely decisions while reducing inaccuracies, hallucinations and errors in forecasts, all critical factors in navigating today's data environment."

Cloudera will demonstrate its new generative AI capabilities at NVIDIA GTC March 18-21 in San Jose, CA. As leading enterprises explore the potential of foundational models to transform their operations, Cloudera and NVIDIA believe their collaboration will position customers at the forefront of the emerging era of enterprise AI.



NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

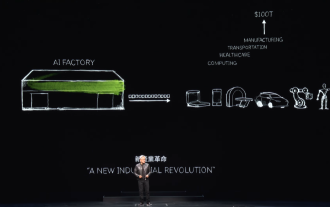

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

According to news from this site on June 2, at the ongoing Huang Renxun 2024 Taipei Computex keynote speech, Huang Renxun introduced that generative artificial intelligence will promote the reshaping of the full stack of software and demonstrated its NIM (Nvidia Inference Microservices) cloud-native microservices. Nvidia believes that the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang Renxun believes that generative artificial intelligence will promote its full-stack reshaping. To facilitate the deployment of AI services by enterprises of all sizes, NVIDIA launched NIM (Nvidia Inference Microservices) cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

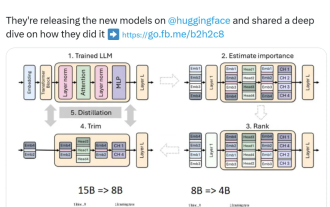

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama3.1 series of models, which includes Meta’s largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama3.1 is considered to usher in a new era of open source. However, although the new generation models are powerful in performance, they still require a large amount of computing resources when deployed. Therefore, another trend has emerged in the industry, which is to develop small language models (SLM) that perform well enough in many language tasks and are also very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can gradually obtain smaller language models from an initially larger model. Turing Award Winner, Meta Chief A

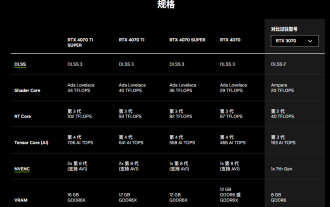

Nvidia releases GDDR6 memory version of GeForce RTX 4070 graphics card, available from September

Aug 21, 2024 am 07:31 AM

Nvidia releases GDDR6 memory version of GeForce RTX 4070 graphics card, available from September

Aug 21, 2024 am 07:31 AM

According to news from this site on August 20, multiple sources reported in July that Nvidia RTX4070 and above graphics cards will be in tight supply in August due to the shortage of GDDR6X video memory. Subsequently, speculation spread on the Internet about launching a GDDR6 memory version of the RTX4070 graphics card. As previously reported by this site, Nvidia today released the GameReady driver for "Black Myth: Wukong" and "Star Wars: Outlaws". At the same time, the press release also mentioned the release of the GDDR6 video memory version of GeForce RTX4070. Nvidia stated that the new RTX4070's specifications other than the video memory will remain unchanged (of course, it will also continue to maintain the price of 4,799 yuan), providing similar performance to the original version in games and applications, and related products will be launched from

NVIDIA launches SFF-Ready small size chassis specification: 15 graphics card and chassis manufacturers participate to ensure graphics card and chassis compatibility

Jun 07, 2024 am 11:51 AM

NVIDIA launches SFF-Ready small size chassis specification: 15 graphics card and chassis manufacturers participate to ensure graphics card and chassis compatibility

Jun 07, 2024 am 11:51 AM

According to news from this site on June 2, Nvidia has cooperated with graphics card and chassis manufacturers to officially introduce the SFF-Ready specification for GeForce RTX gaming graphics cards and chassis, simplifying the accessory selection process for small-sized chassis. According to reports, there are currently 15 graphics card and chassis manufacturers participating in the SFF-Ready project, including ASUS, Cooler Master, and Parting Technology. SFF-Ready GeForce gaming graphics cards are for RTX4070 and above models. The size requirements are as follows: Maximum height: 151mm, including power cord bending radius. Maximum length: 304mm. Maximum thickness: 50mm or 2.5 slots. As of June 2, 2024, there are 36 GeForce RTX40 series graphics cards. Compliant with specifications, more graphics cards will be available in the future

Compliant with NVIDIA SFF-Ready specification, ASUS launches Prime GeForce RTX 40 series graphics cards

Jun 15, 2024 pm 04:38 PM

Compliant with NVIDIA SFF-Ready specification, ASUS launches Prime GeForce RTX 40 series graphics cards

Jun 15, 2024 pm 04:38 PM

According to news from this site on June 15, Asus has recently launched the Prime series GeForce RTX40 series "Ada" graphics card. Its size complies with Nvidia's latest SFF-Ready specification. This specification requires that the size of the graphics card does not exceed 304 mm x 151 mm x 50 mm (length x height x thickness). ). The Prime series GeForceRTX40 series launched by ASUS this time includes RTX4060Ti, RTX4070 and RTX4070SUPER, but it currently does not include RTX4070TiSUPER or RTX4080SUPER. This series of RTX40 graphics cards adopts a common circuit board design with dimensions of 269 mm x 120 mm x 50 mm. The main differences between the three graphics cards are

NVIDIA's most powerful open source universal model Nemotron-4 340B

Jun 16, 2024 pm 10:32 PM

NVIDIA's most powerful open source universal model Nemotron-4 340B

Jun 16, 2024 pm 10:32 PM

The performance exceeds Llama-3 and is mainly used for synthetic data. NVIDIA's general-purpose large model Nemotron has open sourced the latest 340 billion parameter version. On Friday, NVIDIA announced the launch of Nemotron-4340B. It contains a series of open models that developers can use to generate synthetic data for training large language models (LLM), which can be used for commercial applications in all industries such as healthcare, finance, manufacturing, and retail. High-quality training data plays a critical role in the responsiveness, accuracy, and quality of custom LLMs—but robust data sets are often expensive and inaccessible. Through a unique open model license, Nemotron-4340B provides developers with

PHP Frameworks and Microservices: Cloud Native Deployment and Containerization

Jun 04, 2024 pm 12:48 PM

PHP Frameworks and Microservices: Cloud Native Deployment and Containerization

Jun 04, 2024 pm 12:48 PM

Benefits of combining PHP framework with microservices: Scalability: Easily extend the application, add new features or handle more load. Flexibility: Microservices are deployed and maintained independently, making it easier to make changes and updates. High availability: The failure of one microservice does not affect other parts, ensuring higher availability. Practical case: Deploying microservices using Laravel and Kubernetes Steps: Create a Laravel project. Define microservice controllers. Create Dockerfile. Create a Kubernetes manifest. Deploy microservices. Test microservices.