In addition to CNN, Transformer, and Uniformer, we finally have more efficient video understanding technology

The core goal of video understanding is to learn accurate spatiotemporal representations, but it faces two main challenges: the large amount of spatiotemporal redundancy in short video clips, and complex spatiotemporal dependencies. Three-dimensional convolutional neural networks (3D CNNs) and video transformers each handle one of these challenges well, but fall short of addressing both at once. UniFormer attempts to combine the advantages of both approaches, but struggles to model long videos.

The emergence of low-cost solutions such as S4, RWKV and RetNet in natural language processing has opened up new avenues for vision models. Mamba stands out with its selective state space model (SSM), which keeps linear complexity while still supporting long-term dynamic modeling. This has driven its adoption in vision tasks, as demonstrated by Vision Mamba and VMamba, which exploit multi-directional SSMs to enhance 2D image processing. These models are comparable in performance to attention-based architectures while significantly reducing memory usage.

Given that the sequences produced by videos are inherently longer, a natural question is: does Mamba work well for video understanding?

Inspired by Mamba, the paper introduces VideoMamba, a model built on the selective state space model (SSM) and tailored for video understanding. VideoMamba follows the design philosophy of vanilla ViT while combining the strengths of convolution and attention. It offers a linear-complexity method for dynamic spatiotemporal context modeling, which makes it especially suitable for high-resolution long videos. The evaluation focuses on four key capabilities of VideoMamba:

Scalability in the visual domain: The paper tests VideoMamba's scalability and finds that a pure Mamba model tends to overfit as it is scaled up. It introduces a simple and effective self-distillation strategy, so that as the model and input size increase, VideoMamba achieves significant performance gains without pre-training on large-scale datasets.

Sensitivity to short-term action recognition: The analysis extends to VideoMamba's ability to accurately distinguish short-term actions, especially those with subtle motion differences, such as opening and closing. The results show that VideoMamba outperforms existing attention-based models. More importantly, it is also suited to masked modeling, which further enhances its temporal sensitivity.

Superiority in long video understanding: The paper evaluates VideoMamba's ability to interpret long videos. With end-to-end training, it shows significant advantages over traditional feature-based methods. Notably, VideoMamba runs 6x faster than TimeSformer on 64-frame video and requires 40x less GPU memory (shown in Figure 1).

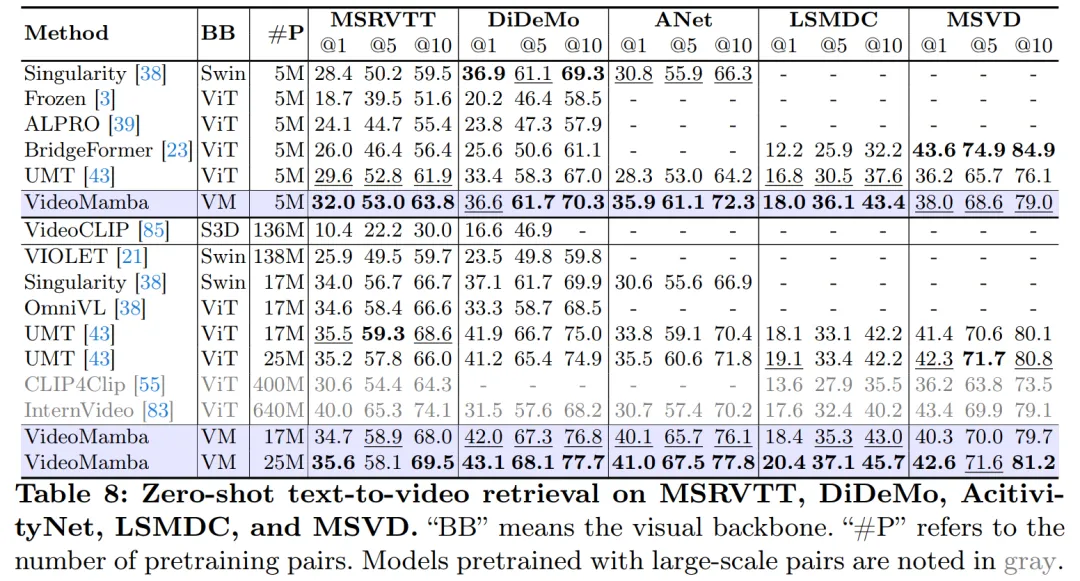

Compatibility with other modalities: Finally, the paper evaluates how well VideoMamba adapts to other modalities. Results in video-text retrieval show improved performance compared to ViT, especially on long videos with complex scenarios. This highlights its robustness and multimodal integration capabilities.

In-depth experiments in the paper reveal VideoMamba's great potential for short-term (K400 and SthSthV2) and long-term (Breakfast, COIN and LVU) video understanding. VideoMamba demonstrates high efficiency and accuracy, suggesting that it could become a key component in long video understanding. To facilitate future research, all code and models have been open-sourced.

- Paper address: https://arxiv.org/pdf/2403.06977.pdf

- Project address: https://github.com/OpenGVLab/VideoMamba

- Paper title: VideoMamba: State Space Model for Efficient Video Understanding

Method introduction

Figure 2a below shows the details of the Mamba module.

Figure 3 illustrates the overall framework of VideoMamba. The paper first uses a 3D convolution (with a 1×16×16 kernel) to project the input video $X^v \in \mathbb{R}^{3 \times T \times H \times W}$ into $L$ non-overlapping spatiotemporal patches $X^p \in \mathbb{R}^{L \times C}$, where $L = t \times h \times w$ ($t = T$, $h = H/16$, and $w = W/16$). The token sequence fed to the subsequent VideoMamba encoder is built from these patches.
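The patch arithmetic above can be checked with a few lines of Python. This is only a sketch of the shape bookkeeping, not the model's code: the function name `patch_grid` is hypothetical, and the 224×224 frame size used in the loop is an assumption that does not come from the article.

```python
def patch_grid(num_frames, height, width, patch_size=16):
    """Return (t, h, w, L) for a 1 x patch_size x patch_size patch embedding.

    Hypothetical helper for illustration; it only reproduces the
    arithmetic L = t * h * w with t = T, h = H / 16, w = W / 16.
    """
    if height % patch_size or width % patch_size:
        raise ValueError("height and width must be divisible by patch_size")
    t = num_frames            # temporal kernel size is 1, so t = T
    h = height // patch_size  # patches along the height
    w = width // patch_size   # patches along the width
    return t, h, w, t * h * w


# Assumed 224x224 frames: the sequence length grows linearly with the frame count.
for frames in (8, 16, 32, 64):
    t, h, w, length = patch_grid(frames, 224, 224)
    print(f"{frames:>2} frames -> t={t}, h={h}, w={w}, L={length}")
```

Because the temporal kernel size is 1, every extra frame adds a full grid of h×w tokens, which is why long videos quickly produce very long sequences.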

Spatiotemporal scanning: To apply the B-Mamba layer to spatiotemporal input, the paper extends the original 2D scan into different bidirectional 3D scans, shown in Figure 4:

(a) Spatial-first: organize spatial tokens by position, then stack them frame by frame;

(b) Temporal-first: arrange temporal tokens by frame, then stack them along the spatial dimension;

(c) Spatiotemporal: a hybrid of spatial-first and temporal-first, where v1 performs half of each and v2 performs both in full (twice the computation).
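The difference between scan orders (a) and (b) comes down to which index varies fastest when tokens are flattened into a sequence. The sketch below illustrates this with plain index pairs. The function names are hypothetical and the actual implementation presumably rearranges tensors instead; only the ordering idea is taken from the description above.

```python
def spatial_first(t, hw):
    """Order tokens position by position within a frame, then frame by frame."""
    return [(frame, pos) for frame in range(t) for pos in range(hw)]


def temporal_first(t, hw):
    """Order tokens frame by frame at one position, then position by position."""
    return [(frame, pos) for pos in range(hw) for frame in range(t)]


def bidirectional(order):
    """A bidirectional scan reads the same sequence forward and backward."""
    return order, order[::-1]


# Tiny example: 2 frames with 3 spatial positions each, as (frame, position) pairs.
forward, backward = bidirectional(spatial_first(2, 3))
print("spatial-first forward: ", forward)
print("spatial-first backward:", backward)
print("temporal-first forward:", temporal_first(2, 3))
```

In the spatial-first order, tokens from the same frame stay adjacent in the sequence, whereas the temporal-first order keeps the same spatial position across frames adjacent.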

The experiments in Figure 7a show that the spatial-first bidirectional scan is the most effective yet simplest option. Thanks to Mamba's linear complexity, VideoMamba can efficiently process high-resolution long videos.
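To see why linear complexity matters for long videos, it helps to compare how a quadratic and a linear cost grow with sequence length. The sketch below counts abstract operations only. It assumes 196 tokens per frame (a 14×14 grid), uses hypothetical function names, and does not model any specific architecture or reproduce the measured speed and memory figures quoted earlier.

```python
def tokens_per_clip(num_frames, tokens_per_frame=196):
    """Sequence length for a clip, assuming 196 tokens per frame."""
    return num_frames * tokens_per_frame


def pairwise_interactions(length):
    """Full self-attention compares every token with every token: L * L."""
    return length * length


def scan_steps(length, directions=2):
    """A bidirectional scan visits each token once per direction: 2 * L."""
    return directions * length


for frames in (8, 16, 32, 64):
    length = tokens_per_clip(frames)
    print(
        f"{frames:>2} frames: L={length:>6}, "
        f"quadratic={pairwise_interactions(length):>11}, "
        f"linear={scan_steps(length):>6}"
    )
```

Doubling the number of frames doubles the linear count but quadruples the quadratic one, so the gap widens as clips get longer.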

For the SSM in the B-Mamba layer, the paper uses the same default hyperparameters as Mamba, setting the state dimension and expansion ratio to 16 and 2 respectively. Following ViT, it adjusts the depth and embedding dimension to create models of comparable size (see Table 1): VideoMamba-Ti, VideoMamba-S and VideoMamba-M. However, the experiments show that larger VideoMamba models tend to overfit, resulting in suboptimal performance, as shown in Figure 6a. This overfitting problem is not limited to the model proposed in the paper. It also appears in VMamba, where VMamba-B reaches its best performance at three-quarters of the total training epochs. To counter overfitting in larger Mamba models, the paper introduces an effective self-distillation strategy that uses a smaller, well-trained model as the "teacher" to guide the training of a larger "student" model. The results in Figure 6a show that this strategy leads to the expected better convergence.
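The article does not spell out the distillation objective, so the following is only a minimal sketch of one common way to let a teacher guide a student: add a feature-matching penalty to the usual task loss. The mean-squared-error term, the `weight` parameter, and the assumption that student and teacher features already have the same length are all illustrative choices, not details confirmed by the source.

```python
def mean_squared_error(student_features, teacher_features):
    """Average squared difference between two equal-length feature vectors."""
    if len(student_features) != len(teacher_features):
        raise ValueError("feature vectors must have the same length")
    squared = [(s - t) ** 2 for s, t in zip(student_features, teacher_features)]
    return sum(squared) / len(squared)


def distillation_loss(task_loss, student_features, teacher_features, weight=1.0):
    """Task loss plus a weighted penalty for deviating from the teacher.

    Hypothetical objective for illustration; the teacher is treated as
    fixed, so only the student would be updated from this loss.
    """
    return task_loss + weight * mean_squared_error(student_features, teacher_features)


# Toy numbers: the student matches the teacher on the first feature only.
loss = distillation_loss(0.5, [1.0, 2.0], [1.0, 4.0])
print(f"total loss: {loss}")
```

The extra term pulls the larger student toward representations that a smaller, already well-trained model has learned, which acts as a regularizer against overfitting.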

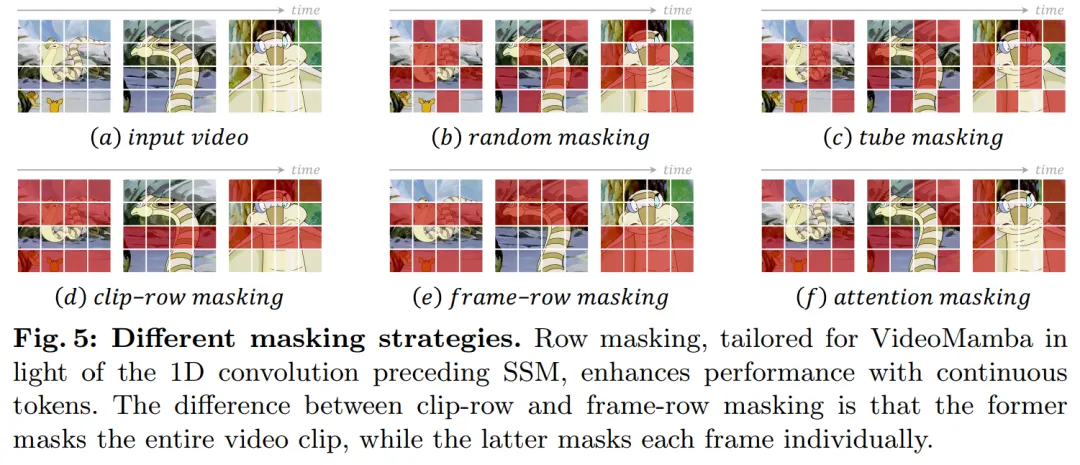

Regarding the masking strategy, the paper proposes different row masking techniques, shown in Figure 5, designed specifically for the B-Mamba block's preference for continuous tokens.
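As a rough illustration of the idea, the sketch below masks whole rows of a patch grid so that the visible tokens remain in continuous runs. It is a simplified, single-frame example with a hypothetical function name and an assumed masking ratio. The specific row masking variants in Figure 5 are not reproduced here.

```python
import random


def row_mask(h, w, mask_ratio, rng):
    """Return a row-major list of h * w booleans; True marks a masked token.

    Hypothetical helper: picks round(h * mask_ratio) rows at random and
    masks every token in those rows.
    """
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must be between 0 and 1")
    num_rows = round(h * mask_ratio)
    masked_rows = set(rng.sample(range(h), num_rows))
    return [row in masked_rows for row in range(h) for _ in range(w)]


# Assumed 4x4 grid with half of the rows masked; '#' is masked, '.' is visible.
mask = row_mask(4, 4, 0.5, random.Random(0))
for row in range(4):
    print("".join("#" if m else "." for m in mask[row * 4:(row + 1) * 4]))
```

Compared with masking tokens independently at random, masking by row leaves longer unbroken stretches of visible tokens in a row-major scan.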

Experiment

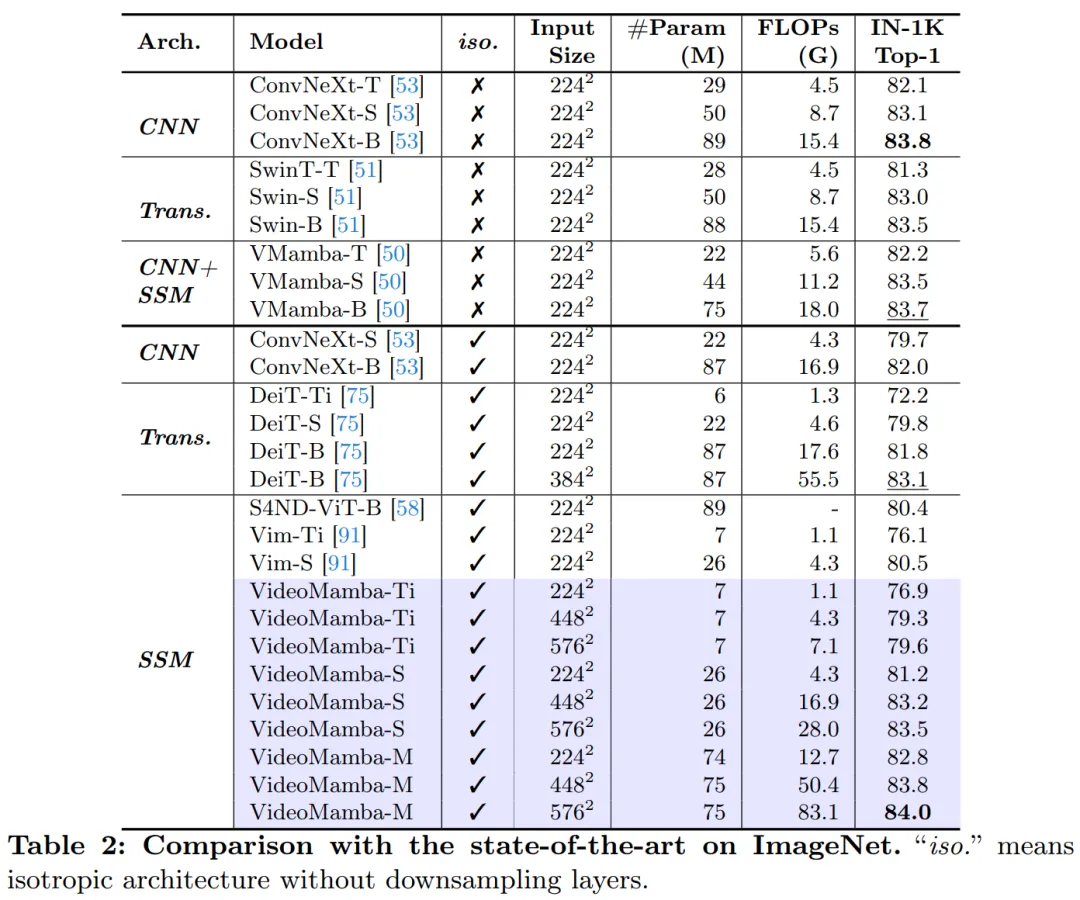

Table 2 shows the results on the ImageNet-1K dataset. Notably, VideoMamba-M significantly outperforms other isotropic architectures, improving by 0.8% over ConvNeXt-B and 2.0% over DeiT-B while using fewer parameters. VideoMamba-M also performs well against non-isotropic backbones that employ hierarchical features for enhanced performance. Given Mamba's efficiency on long sequences, the paper further improves performance by increasing the resolution, reaching 84.0% top-1 accuracy with only 74M parameters.

Table 3 and Table 4 list the results on short-term video datasets. (a) Supervised learning: Compared with pure attention methods, the SSM-based VideoMamba-M has a clear advantage, outperforming ViViT-L by 2.0% and 3.0% on the scene-related K400 and the temporal-related SthSthV2 datasets respectively. This improvement comes with significantly reduced computational requirements and less pre-training data. VideoMamba-M's results are on par with the SOTA UniFormer, which integrates convolution and attention in a non-isotropic architecture. (b) Self-supervised learning: With masked pre-training, VideoMamba outperforms VideoMAE, which is known for its fine-grained motion modeling. This result highlights the potential of a pure SSM-based model to understand short-term videos efficiently and effectively, and its suitability for both supervised and self-supervised learning paradigms.

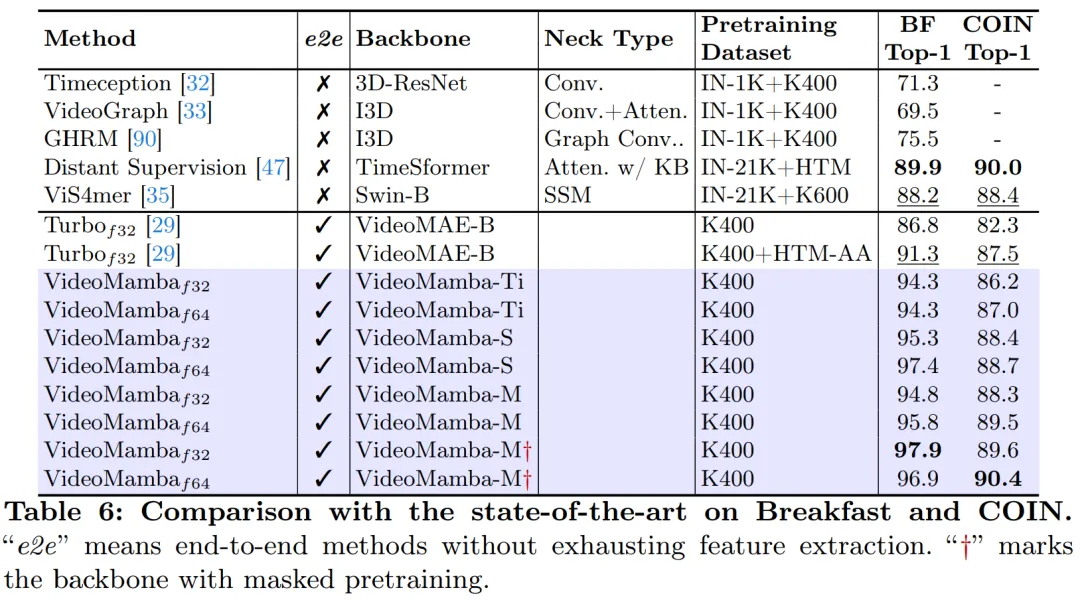

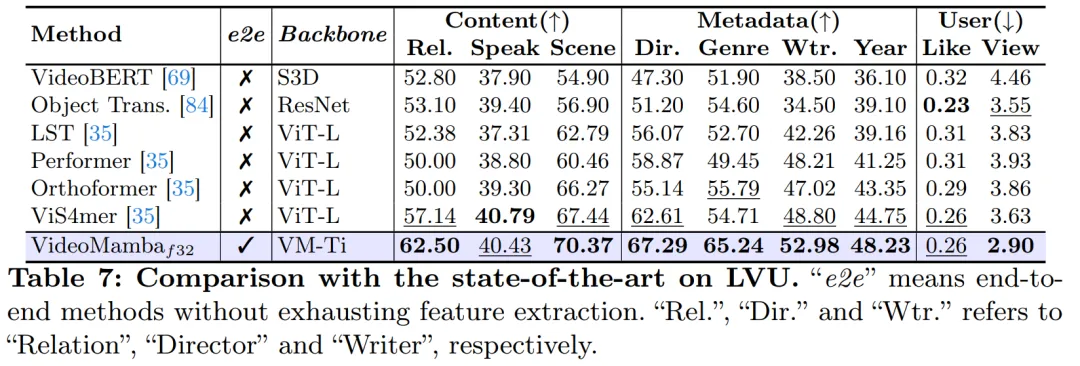

As shown in Figure 1, VideoMamba's linear complexity makes it well suited to end-to-end training on long videos. The comparison in Tables 6 and 7 highlights the simplicity and effectiveness of VideoMamba over traditional feature-based methods on these tasks. It brings significant performance improvements, enabling SOTA results even at smaller model sizes. VideoMamba-Ti shows a significant 6.1% improvement over ViS4mer using Swin-B features, and a 3.0% improvement over Turbo's multi-modal alignment method. Notably, the results highlight the positive impact of scaling up the model and the number of frames for long-term tasks. On the nine diverse and challenging tasks proposed by LVU, the paper fine-tunes VideoMamba-Ti end to end and achieves results comparable or superior to current SOTA methods. These results not only highlight the effectiveness of VideoMamba, but also demonstrate its potential for future long video understanding.

As shown in Table 8, with the same pre-training corpus and a similar training strategy, VideoMamba outperforms the ViT-based UMT in zero-shot video retrieval. This highlights Mamba's comparable efficiency and scalability relative to ViT on multimodal video tasks. Notably, VideoMamba shows significant improvements on datasets with longer videos (e.g., ANet and DiDeMo) and more complex scenarios (e.g., LSMDC). This demonstrates Mamba's capabilities in challenging multimodal settings, even where cross-modal alignment is required.

For more research details, please refer to the original paper.
