Zero-shot 6D object pose estimation framework SAM-6D, a step closer to embodied intelligence

Object pose estimation plays a key role in many real-world applications, such as embodied intelligence, dexterous robot manipulation, and augmented reality.

In this field, the first task to receive attention was instance-level 6D pose estimation, which requires annotated data of the target object for model training. This makes the deep model object-specific, so it cannot be transferred to new objects. Later, the research focus gradually shifted to category-level 6D pose estimation, which can handle unseen objects but requires them to belong to a known category of interest.

Zero-shot 6D pose estimation is a more general task setting: given a CAD model of an arbitrary object, the goal is to detect the target object in the scene and estimate its 6D pose. Despite its significance, this zero-shot setting poses substantial challenges for both object detection and pose estimation.
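For reference, a 6D pose is the rigid transform from the object's CAD-model frame to the camera frame: three rotational plus three translational degrees of freedom. In the usual convention (the notation here is ours, not the article's), a model point $x_o$ maps to the camera-frame point $x_c$ as

$$x_c = R\,x_o + t, \qquad R \in SO(3),\; t \in \mathbb{R}^3.$$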

Figure 1. Zero-shot 6D object pose estimation task

Recently, the Segment Anything Model (SAM) [1] has attracted much attention for its excellent zero-shot segmentation ability. SAM achieves high-precision segmentation from a variety of prompts, such as points, bounding boxes, text, and masks, which also provides reliable support for zero-shot 6D object pose estimation and shows its promising potential for the task.

Building on this, researchers from Cross-Dimensional Intelligence, the Chinese University of Hong Kong (Shenzhen), and South China University of Technology jointly proposed SAM-6D, an innovative zero-shot 6D object pose estimation framework. The work has been accepted to CVPR 2024.

- Paper link: https://arxiv.org/pdf/2311.15707.pdf

- Code link: https://github.com/JiehongLin/SAM-6D

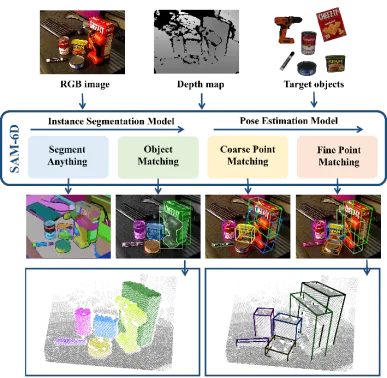

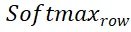

SAM-6D achieves zero-shot 6D object pose estimation in two steps: instance segmentation and pose estimation. Accordingly, given an arbitrary target object, SAM-6D uses two dedicated sub-networks, the Instance Segmentation Model (ISM) and the Pose Estimation Model (PEM), to segment the target from an RGB-D scene image and estimate its 6D pose. ISM takes SAM as a strong starting point and combines it with carefully designed object matching scores to segment instances of arbitrary objects, while PEM solves for the object pose through a two-stage, local-to-local point set matching process. An overview of SAM-6D is shown in Figure 2.

Figure 2. SAM-6D Overview

In summary, the technical contributions of SAM-6D are as follows:

- SAM-6D is an innovative zero-shot 6D pose estimation framework that, given a CAD model of any object, performs instance segmentation and pose estimation of the target object from RGB-D images, and performs excellently on the seven core datasets of BOP [2].

- SAM-6D leverages the zero-shot segmentation capability of the Segment Anything Model to generate all possible candidate objects, and designs a novel object matching score to identify the candidates that correspond to the target object.

- SAM-6D treats pose estimation as a local-to-local point set matching problem, adopts a simple but effective Background Token design, and proposes a two-stage point set matching model for arbitrary objects: the first stage performs coarse point set matching to obtain an initial object pose, and the second stage uses a novel sparse-to-dense point set transformer to perform fine point set matching and further refine the pose.

Instance Segmentation Model (ISM)

SAM-6D uses the Instance Segmentation Model (ISM) to detect and segment the masks of arbitrary objects.

Given a cluttered scene represented by an RGB image, ISM leverages the zero-shot transfer capability of the Segment Anything Model (SAM) to generate all possible candidates. For each candidate, ISM computes an object matching score that estimates how well it matches the target object in terms of semantics, appearance, and geometry. Finally, instances matching the target object are identified simply by applying a matching threshold.
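The selection step can be sketched as follows, assuming the three per-term scores have already been computed for each SAM proposal. The `Candidate` class, the equal weights, and the 0.5 threshold are illustrative assumptions, not values taken from SAM-6D; the weighted sum is normalized by the total weight so the final score stays in the same range as its terms.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One SAM proposal with its three per-term scores (assumed to lie in [0, 1])."""
    mask_id: int
    semantic: float
    appearance: float
    geometric: float


# Hypothetical weights and threshold: the article only states that the score is
# a weighted sum followed by a threshold, not which values SAM-6D uses.
WEIGHTS = (1.0, 1.0, 1.0)
THRESHOLD = 0.5


def object_matching_score(c: Candidate, weights=WEIGHTS) -> float:
    """Normalized weighted sum of the semantic, appearance and geometric terms."""
    w_sem, w_app, w_geo = weights
    total = w_sem * c.semantic + w_app * c.appearance + w_geo * c.geometric
    return total / (w_sem + w_app + w_geo)


def select_instances(candidates, threshold=THRESHOLD):
    """Keep candidates whose matching score reaches the threshold, best first."""
    scored = [(object_matching_score(c), c) for c in candidates]
    kept = [(s, c) for s, c in scored if s >= threshold]
    return sorted(kept, key=lambda sc: sc[0], reverse=True)


if __name__ == "__main__":
    cands = [Candidate(0, 0.9, 0.8, 0.7), Candidate(1, 0.3, 0.2, 0.4)]
    print([c.mask_id for _, c in select_instances(cands)])  # [0]
```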

The object matching score is computed as a weighted sum of three matching terms:

Semantic matching term: for the target object, ISM renders object templates from multiple viewpoints and uses a DINOv2 [3] pre-trained ViT model to extract semantic features of the candidate objects and of the templates, then computes correlation scores between them. The semantic matching score is the average of the top-K highest scores, and the template with the highest correlation score is taken as the best-matching template.
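A minimal NumPy sketch of the semantic term, assuming each candidate and each rendered template has already been encoded into one global descriptor (for example a ViT class token) and that "correlation" means cosine similarity. The function names and the default `k = 5` are hypothetical; DINOv2 feature extraction itself is not shown.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between rows of a (M, D) and rows of b (T, D)."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return a @ b.T


def semantic_score(cand_feat: np.ndarray, template_feats: np.ndarray, k: int = 5):
    """Return (mean of the top-K similarities, index of the best-matching template).

    cand_feat: (D,) global descriptor of one candidate.
    template_feats: (T, D) descriptors of the rendered templates.
    """
    sims = cosine_similarity(cand_feat[None, :], template_feats)[0]  # (T,)
    k = min(k, sims.shape[0])
    top_k = np.sort(sims)[-k:]             # the K highest correlations
    best_template = int(np.argmax(sims))   # template with the single highest score
    return float(top_k.mean()), best_template
```

Averaging the top K scores rather than taking only the maximum makes the semantic term less sensitive to a single spuriously similar view.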

Appearance matching term: for the best-matching template, the ViT model extracts patch features, and their correlation with the patch features of the candidate object yields an appearance matching score. This term helps distinguish objects that are semantically similar but differ in appearance.
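A sketch of one plausible patch-level aggregation, assuming patch features for the candidate crop and for the best-matching template are already available as arrays. The max-then-average rule below is an assumption chosen for illustration; the article does not specify how the patch correlations are combined.

```python
import numpy as np


def appearance_score(cand_patches: np.ndarray, template_patches: np.ndarray) -> float:
    """Patch-level appearance match between a candidate and its best template.

    cand_patches: (P, D) patch features of the candidate crop.
    template_patches: (Q, D) patch features of the best-matching template.
    Each candidate patch is scored by its highest cosine similarity to any
    template patch, and these per-patch scores are averaged (assumed rule).
    """
    a = cand_patches / np.linalg.norm(cand_patches, axis=1, keepdims=True)
    b = template_patches / np.linalg.norm(template_patches, axis=1, keepdims=True)
    sims = a @ b.T                         # (P, Q) cosine similarities
    return float(sims.max(axis=1).mean())
```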

Geometric matching term: to account for differences in shape and size between objects, ISM also uses a geometric matching score. The rotation associated with the best-matching template, together with the mean of the candidate's point cloud as the translation, gives a rough object pose; rigidly transforming the object's CAD model with this pose and projecting it into the image yields a bounding box. The geometric matching score is the intersection-over-union (IoU) between this bounding box and the candidate's bounding box.
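The geometric term can be sketched as below, assuming a pinhole camera with intrinsic matrix `K`, a candidate point cloud already expressed in the camera frame, and boxes in `(x0, y0, x1, y1)` pixel format. All function names are hypothetical.

```python
import numpy as np


def project_bbox(points: np.ndarray, K: np.ndarray):
    """Project camera-frame 3D points (N, 3) with intrinsics K; return (x0, y0, x1, y1)."""
    uvw = points @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]          # perspective division
    return (*uv.min(axis=0), *uv.max(axis=0))


def box_area(r) -> float:
    return (r[2] - r[0]) * (r[3] - r[1])


def box_iou(a, b) -> float:
    """IoU of two axis-aligned boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def geometric_score(cad_points, template_R, cand_points, cand_bbox, K) -> float:
    """Rough pose = (template rotation, centroid of candidate points); IoU of boxes."""
    t = cand_points.mean(axis=0)            # translation from the candidate's point cloud
    posed = cad_points @ template_R.T + t   # rigidly transform the CAD model points
    return box_iou(project_bbox(posed, K), cand_bbox)
```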

Pose Estimation Model (PEM)

For each candidate object that matches the target object, SAM-6D uses the Pose Estimation Model (PEM) to predict its 6D pose relative to the object CAD model.

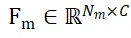

Denote the sampled point sets of the segmented candidate object and of the object CAD model as $P_m \in \mathbb{R}^{N_m \times 3}$ and $P_o \in \mathbb{R}^{N_o \times 3}$ respectively, where $N_m$ and $N_o$ are their numbers of points; likewise, denote the features of these two point sets as $F_m \in \mathbb{R}^{N_m \times C}$ and $F_o \in \mathbb{R}^{N_o \times C}$, where $C$ is the number of feature channels. The goal of PEM is to obtain an assignment matrix that represents the local-to-local correspondence from $P_m$ to $P_o$. Because of occlusion, $P_o$ only partially matches $P_m$; because of segmentation inaccuracy and sensor noise, $P_m$ also only partially matches $P_o$.

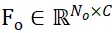

To assign the non-overlapping points of the two point sets, PEM equips each point set with a background token, denoted $f_m^{bg}$ and $f_o^{bg}$ respectively. Local-to-local correspondence can then be established effectively from feature similarity. Specifically, the attention matrix $\mathcal{A}$ is first computed as follows:

$$\mathcal{A} = [f_m^{bg}; F_m]\,[f_o^{bg}; F_o]^{\top} \in \mathbb{R}^{(N_m+1)\times(N_o+1)},$$

where $[\cdot\,;\cdot]$ stacks each background token on top of the corresponding point features $F_m$ or $F_o$, so the first row and the first column of $\mathcal{A}$ correspond to the background.

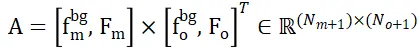

The assignment matrix is then obtained from the attention matrix $\mathcal{A}$ as

$$\tilde{\mathcal{A}} = \mathrm{Softmax}_r(\mathcal{A}/\tau) \odot \mathrm{Softmax}_c(\mathcal{A}/\tau),$$

where $\mathrm{Softmax}_r$ and $\mathrm{Softmax}_c$ denote softmax operations along rows and columns respectively, $\odot$ is the element-wise product, and $\tau$ is a constant. Each row of $\tilde{\mathcal{A}}$ except the first gives the matching probabilities between one point $p_m \in P_m$ and the background as well as every point of $P_o$. Locating the index of the maximum score in that row finds the point matched to $p_m$, which may be the background.
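A small NumPy sketch of the background-token assignment, assuming the point features and the two background tokens are given as arrays. The temperature `tau = 0.1` is a placeholder, and returning `-1` for a background match is a convention chosen here, not part of SAM-6D.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def assign_points(F_m, F_o, f_m_bg, f_o_bg, tau: float = 0.1):
    """Background-token assignment between two point sets.

    F_m: (N_m, C) and F_o: (N_o, C) point features; f_m_bg, f_o_bg: (C,) tokens.
    Returns, for every point of P_m, the index of its match in P_o
    (-1 means "background") and the corresponding assignment score.
    """
    A = np.vstack([f_m_bg, F_m]) @ np.vstack([f_o_bg, F_o]).T       # (N_m+1, N_o+1)
    A_tilde = softmax(A / tau, axis=1) * softmax(A / tau, axis=0)  # element-wise product
    rows = A_tilde[1:]                   # drop the background row of P_m
    best = rows.argmax(axis=1)           # column 0 is the background of P_o
    scores = rows[np.arange(len(rows)), best]
    return best - 1, scores
```

Because the row and column softmaxes are multiplied, a pair only scores highly when each point is the other's preferred match, which suppresses one-sided matches.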

Once the assignment matrix is computed, all matched point pairs $\{(p_m, p_o)\}$ and their matching scores are gathered, and the object pose is finally computed with weighted SVD.
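Weighted SVD here refers to the standard weighted Kabsch (Procrustes) solution for a rotation and translation. The sketch below is a generic implementation, not SAM-6D's code; to obtain the object-to-camera pose one would presumably pass the matched CAD points $p_o$ as `src` and the observed points $p_m$ as `dst`, using the assignment scores as weights.

```python
import numpy as np


def weighted_svd_pose(src: np.ndarray, dst: np.ndarray, w: np.ndarray):
    """Weighted least-squares rigid transform (R, t) such that dst ~= src @ R.T + t.

    src, dst: (N, 3) matched points; w: (N,) non-negative match scores.
    """
    w = w / w.sum()
    mu_s = (w[:, None] * src).sum(axis=0)              # weighted centroids
    mu_d = (w[:, None] * dst).sum(axis=0)
    H = (w[:, None] * (src - mu_s)).T @ (dst - mu_d)   # 3x3 weighted covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t
```

Points with a weight of zero have no influence on the result, so low-confidence matches can be down-weighted instead of being removed explicitly.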

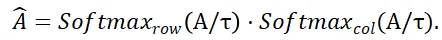

Figure 3. Schematic diagram of the Pose Estimation Model (PEM) in SAM-6D

Building on this background-token strategy, PEM is designed with two point set matching stages. As shown in Figure 3, the model consists of three modules: feature extraction, coarse point set matching, and fine point set matching.

The coarse point set matching module establishes sparse correspondences to compute an initial object pose, which is then used to transform the candidate object's point set for learning positional encodings.

The fine point set matching module combines the positional encodings of the sampled point sets of the candidate object and the target object, thereby injecting the coarse correspondences from the first stage, and then establishes dense correspondences to obtain a more precise object pose. To learn dense interactions efficiently at this stage, PEM introduces a novel sparse-to-dense point set transformer, which performs interactions on sparse versions of the dense features and uses a Linear Transformer [5] to propagate the enhanced sparse features back into the dense features.
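Linear attention, as in the Linear Transformer [5], replaces the softmax kernel with a positive feature map so that the cost grows linearly with the number of points. The sketch below shows that kernel with dense features as queries and sparse features as keys and values; this query/key split and the hypothetical residual `sparse_to_dense` wrapper with projection matrices `W_q`, `W_k`, `W_v` are assumptions about how the propagation might be wired, not the paper's exact layer.

```python
import numpy as np


def elu_plus_one(x: np.ndarray) -> np.ndarray:
    """Feature map phi(x) = elu(x) + 1; always positive."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def linear_attention(queries, keys, values):
    """Kernelized attention, linear in the number of queries and keys.

    queries: (N, C) dense features; keys: (M, C) and values: (M, C_v) sparse features.
    """
    q, k = elu_plus_one(queries), elu_plus_one(keys)
    kv = k.T @ values                  # (C, C_v) summary of all key/value pairs
    z = q @ k.sum(axis=0)              # (N,) per-query normalizer
    return (q @ kv) / z[:, None]


def sparse_to_dense(dense_feats, sparse_feats, W_q, W_k, W_v):
    """Hypothetical propagation step: dense points query the enhanced sparse points."""
    update = linear_attention(dense_feats @ W_q, sparse_feats @ W_k, sparse_feats @ W_v)
    return dense_feats + update        # residual connection
```

Computing `k.T @ values` once and reusing it for every query is what avoids building the full N-by-M attention matrix.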

Experimental results

Of SAM-6D's two sub-networks, the instance segmentation model (ISM) is built on SAM and requires no network retraining or fine-tuning, while the pose estimation model (PEM) is trained on the large-scale synthetic ShapeNet-Objects and Google-Scanned-Objects datasets provided by MegaPose [4].

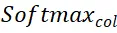

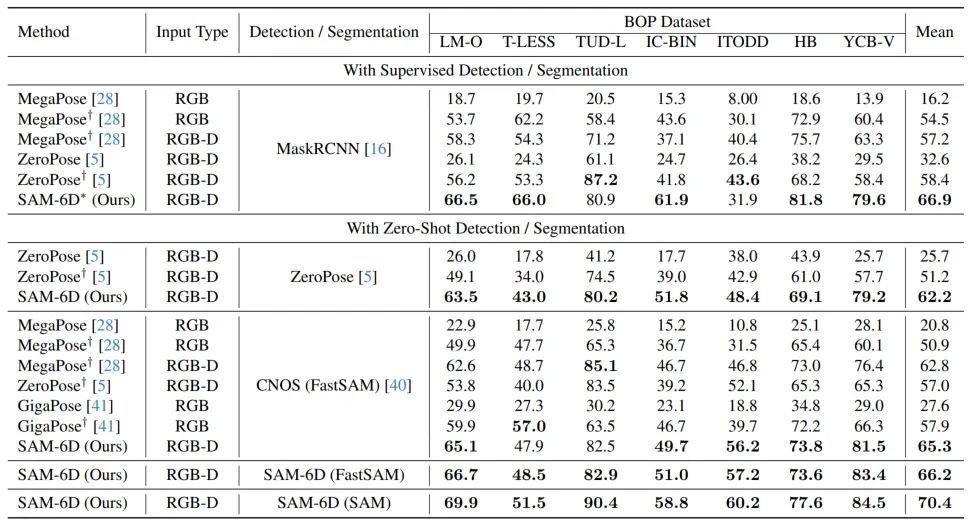

To verify its zero-shot capability, SAM-6D was evaluated on the seven core datasets of BOP [2]: LM-O, T-LESS, TUD-L, IC-BIN, ITODD, HB, and YCB-V. Tables 1 and 2 compare the instance segmentation and pose estimation results, respectively, of different methods on these seven datasets. Compared with other methods, SAM-6D performs very well on both tasks, demonstrating its strong generalization ability.

Table 1. Comparison of instance segmentation results of different methods on the seven core BOP datasets

Table 2. Comparison of pose estimation results of different methods on the seven core BOP datasets

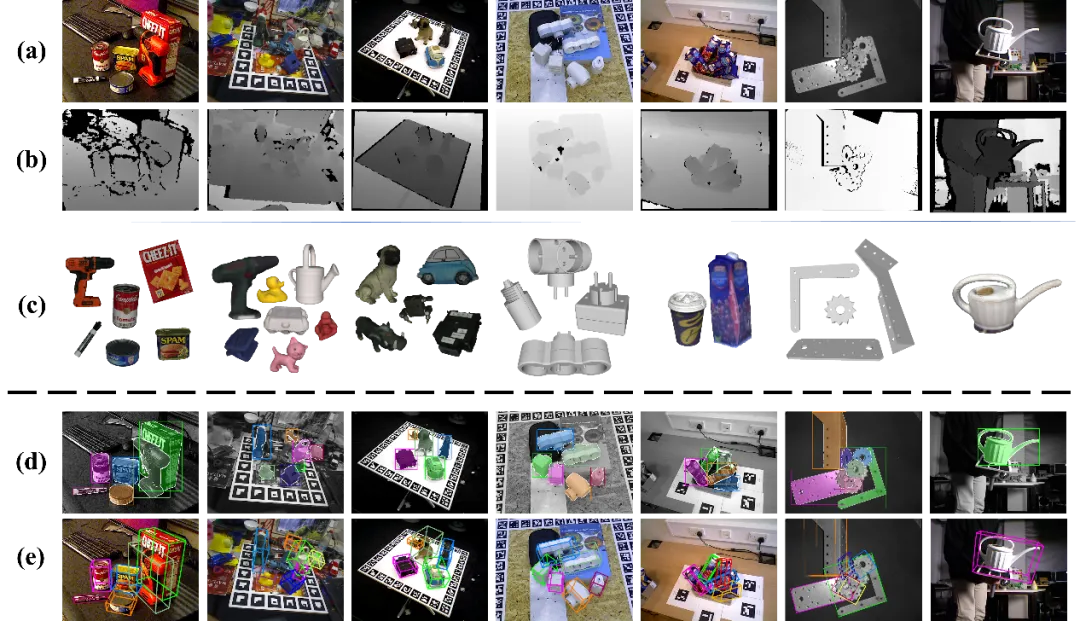

Figure 4 visualizes SAM-6D's detection/segmentation and 6D pose estimation results on the seven core BOP datasets, where (a) and (b) are the test RGB image and depth map respectively, (c) is the given target object, and (d) and (e) are the visualized detection/segmentation and 6D pose results respectively.

Figure 4. Visualization results of SAM-6D on the seven core data sets of BOP.

For more implementation details of SAM-6D, please read the original paper.
