Cambridge team open-sources PreFLMR: the first pre-trained, general-purpose multi-modal late-interaction knowledge retriever, empowering RAG applications for large multi-modal models


- Paper link: https://arxiv.org/abs/2402.08327
- DEMO link: https://u60544-b8d4-53eaa55d.westx.seetacloud.com:8443/
- Project homepage link: https://preflmr.github.io/
- Paper title: PreFLMR: Scaling Up Fine-Grained Late-Interaction Multi-modal Retrievers

## Background

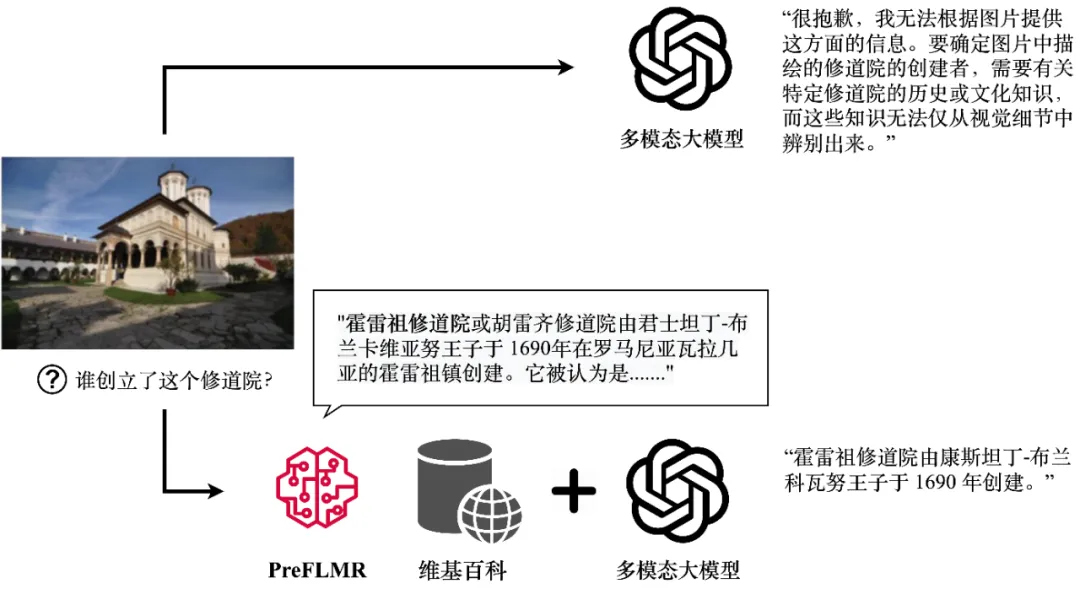

Although large multi-modal models (such as GPT4-Vision and Gemini) demonstrate powerful general image and text understanding, their performance is unsatisfactory on problems that require specialized knowledge. Even GPT4-Vision cannot effectively answer knowledge-intensive questions (as shown in Figure 1), which poses challenges for many enterprise-level applications.

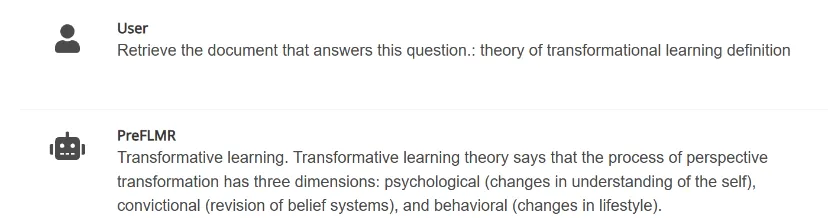

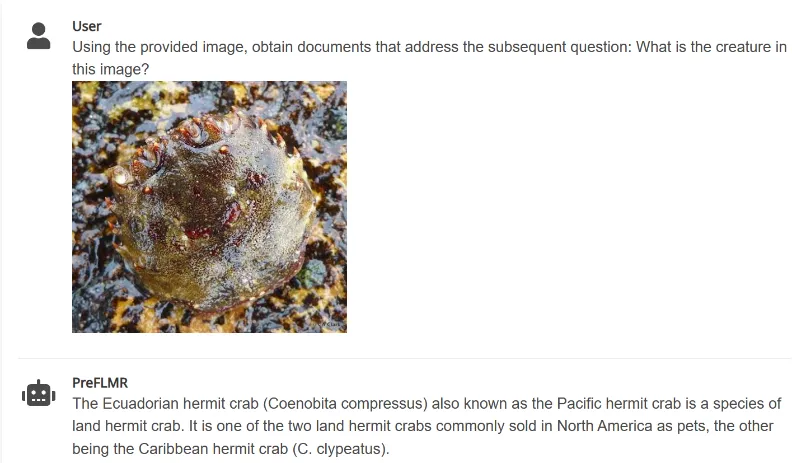

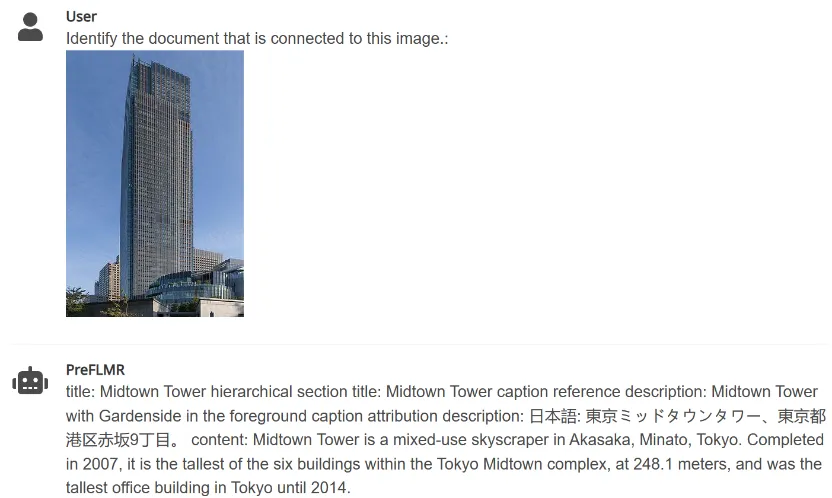

Figure 1: With the PreFLMR multi-modal knowledge retriever, GPT4-Vision can obtain relevant knowledge and generate accurate answers. The figure shows the actual output of the model.

Retrieval-Augmented Generation (RAG) provides a simple and effective way to solve this problem, turning large multi-modal models into "domain experts" in a given field. It works as follows: first, a lightweight knowledge retriever retrieves relevant specialized knowledge from a knowledge source (such as Wikipedia or an enterprise knowledge base); then, the large model takes this knowledge together with the question as input and outputs an accurate answer. The recall of the multi-modal knowledge retriever directly determines whether the large model can obtain accurate specialized knowledge when answering reasoning questions.
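The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions: the function names (`retrieve`, `build_prompt`, `answer_with_rag`) are hypothetical, the retriever is a simple word-overlap ranker standing in for a real knowledge retriever such as PreFLMR, the query is text-only rather than multi-modal, and `generate` is a placeholder for whatever large model is used.

```python
from typing import Callable, List


def retrieve(query: str, knowledge_base: List[str], top_k: int = 2) -> List[str]:
    """Toy stand-in for a knowledge retriever: rank passages by word overlap."""
    query_words = set(query.lower().split())
    ranked = sorted(
        knowledge_base,
        key=lambda passage: len(query_words & set(passage.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, passages: List[str]) -> str:
    """Combine the retrieved knowledge and the question into one model input."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Knowledge:\n{context}\n\nQuestion: {question}\nAnswer:"


def answer_with_rag(
    question: str,
    knowledge_base: List[str],
    generate: Callable[[str], str],
    top_k: int = 2,
) -> str:
    """Step 1: retrieve knowledge. Step 2: let the large model answer with it."""
    passages = retrieve(question, knowledge_base, top_k)
    return generate(build_prompt(question, passages))
```

In a real system, `retrieve` would be replaced by the actual retriever and `generate` by a call to the multi-modal model; the quality of the final answer depends on whether the right passages make it into the prompt.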

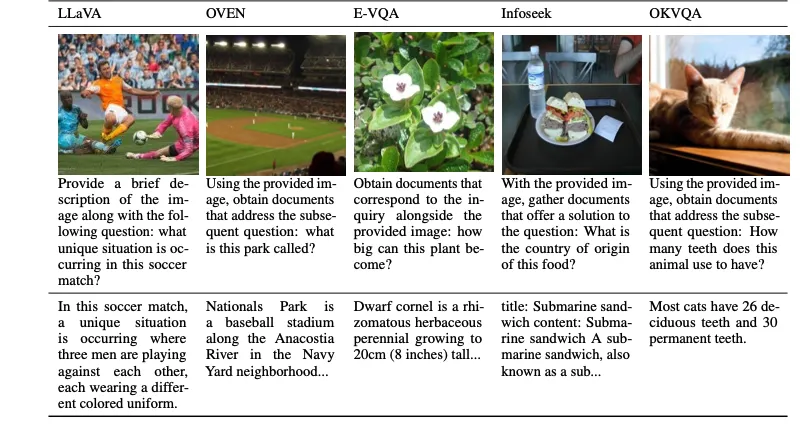

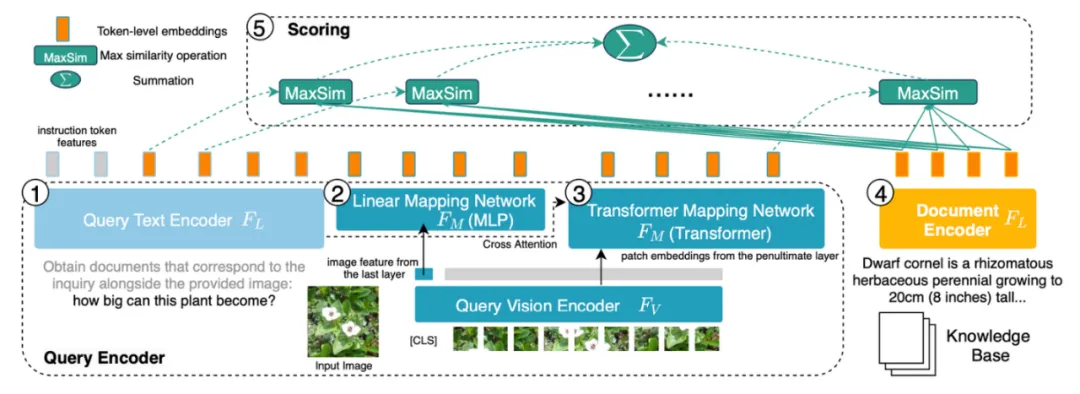

Recently,

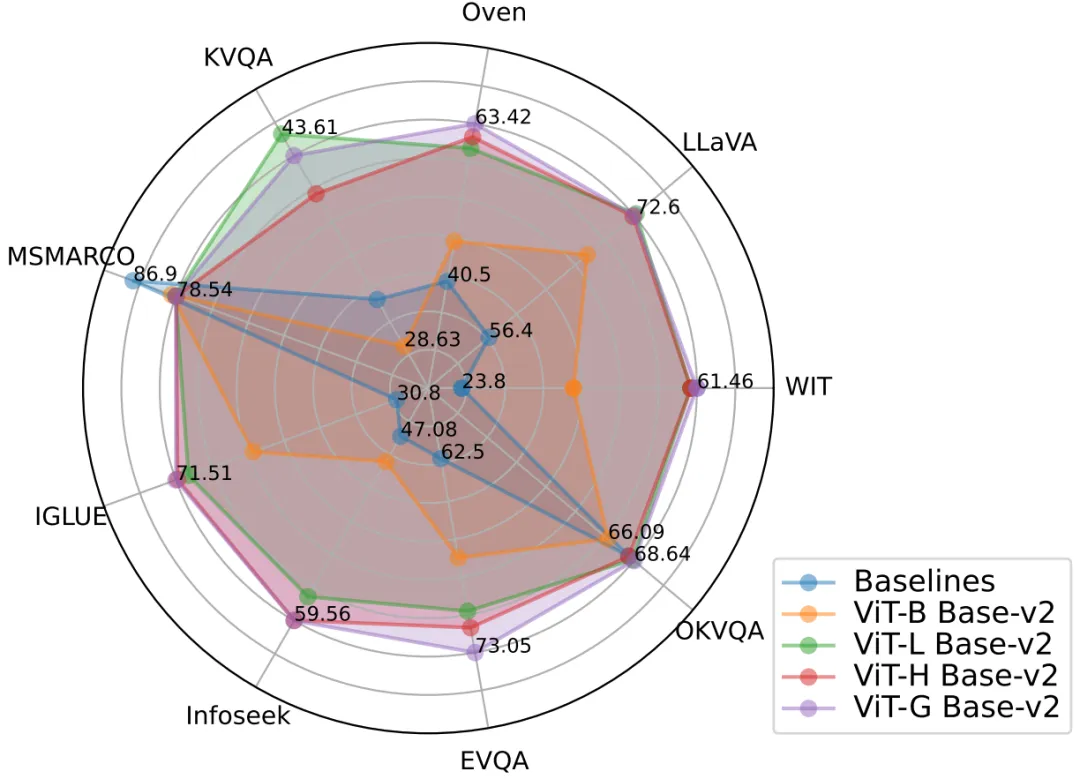

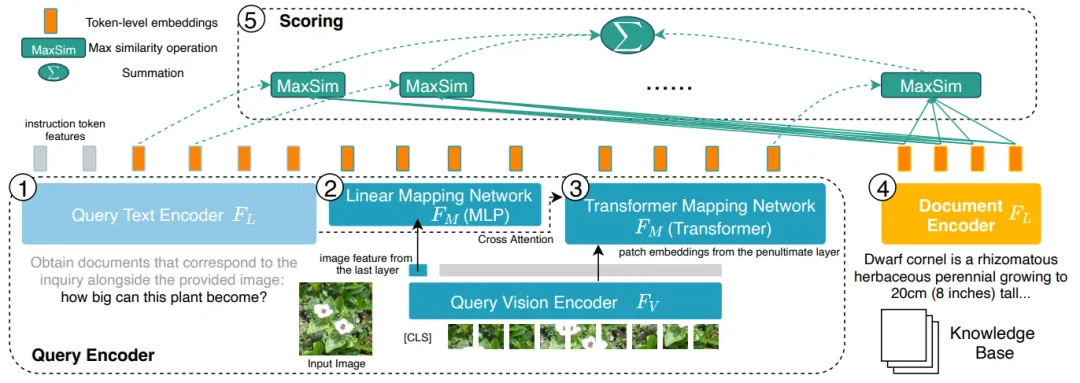

The Artificial Intelligence Laboratory of the Department of Information Engineering, University of Cambridge has completely open sourced the first pre-trained, universal multi-modal post-interactive knowledge retrieval PreFLMR (Pre- trained Fine-grained Late-interaction Multi-modal Retriever). Compared with previous common models, PreFLMR has the following characteristics: PreFLMR is a general pre-training model that can effectively solve multiple sub-tasks such as text retrieval, image retrieval and knowledge retrieval. Pre-trained on millions of levels of multi-modal data, the model performs well in multiple downstream retrieval tasks. In addition, as an excellent basic model, PreFLMR can quickly develop into an excellent domain-specific model after fine-tuning for private data. Figure 2: The PreFLMR model achieves excellent multi-modal retrieval performance on multiple tasks at the same time and is an extremely strong pre-training base. Model. 2. Traditional Dense Passage Retrieval (DPR) only uses one vector to represent the query (Query) or document (Document). The FLMR model published by the Cambridge team at NeurIPS 2023 proved that the single-vector representation design of DPR can lead to fine-grained information loss, causing DPR to perform poorly on retrieval tasks that require fine information matching. Especially in multi-modal tasks, the user's query contains complex scene information, and compressing it into a one-dimensional vector greatly inhibits the expressive ability of features. PreFLMR inherits and improves the structure of FLMR, giving it unique advantages in multi-modal knowledge retrieval. Figure 3: PreFLMR encodes the query (Query, 1, 2 on the left) at the character level (Token level) , 3) and Document (Document, 4 on the right), compared with the DPR system that compresses all information into one-dimensional vectors, it has the advantage of fine-grained information. 3. 
PreFLMR can extract documents related to the items in the picture according to the instructions entered by the user (such as "Extract documents that can be used to answer the following questions" or "Extract documents related to the items in the picture"). Relevant documents are extracted from the knowledge base to help multi-modal large models significantly improve the performance of professional knowledge question and answer tasks. Figure 4: PreFLMR can simultaneously process multi-modal queries that extract documents from pictures, extract documents based on questions, and extract documents based on questions and pictures together. Task. The Cambridge University team has open sourced three models of different sizes. The parameters of the models from small to large are: PreFLMR_ViT-B (207M), PreFLMR_ViT-L (422M) ), PreFLMR_ViT-G (2B), for users to choose according to actual conditions. In addition to the open source model PreFLMR itself, this project has also made two important contributions in this research direction: The following will briefly introduce the M2KR data set, PreFLMR model and experimental result analysis. To pretrain and evaluate general multi-modal retrieval models at scale, the authors compiled ten publicly available datasets and convert it into a unified question-document retrieval format. The original tasks of these data sets include image captioning, multi-modal dialogue, etc. The figure below shows the questions (first row) and corresponding documents (second row) for five of the tasks. Figure 5: Part of the knowledge extraction task in the M2KR dataset # Figure 6: Model structure of PreFLMR. Query is encoded as a Token-level feature. For each vector in the query matrix, PreFLMR finds the nearest vector in the document matrix and calculates the dot product, and then sums these maximum dot products to obtain the final relevance. 
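The late-interaction scoring rule (for each query token vector, take the maximum dot product over all document token vectors, then sum those maxima) can be sketched in plain Python. This is a minimal illustration, not the project's implementation: the helper names `dot`, `late_interaction_score`, and `rank_documents` are hypothetical, "nearest" is taken to mean "largest dot product", and the tiny 2-dimensional vectors stand in for real encoder outputs.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    """Dot product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))


def late_interaction_score(
    query_tokens: Sequence[Vector], doc_tokens: Sequence[Vector]
) -> float:
    """Sum, over query tokens, of the best-matching document token's dot product."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)


def rank_documents(
    query_tokens: Sequence[Vector],
    documents: Sequence[Sequence[Vector]],
    top_k: int,
) -> List[int]:
    """Return the indices of the top_k documents by late-interaction score."""
    scored = [
        (late_interaction_score(query_tokens, doc), idx)
        for idx, doc in enumerate(documents)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored[:top_k]]


# Two query tokens pointing in different directions.
query = [[1.0, 0.0], [0.0, 1.0]]
# doc_a has one token matching each query token; doc_b has a single blended token.
doc_a = [[1.0, 0.0], [0.0, 1.0]]
doc_b = [[0.5, 0.5]]
print(late_interaction_score(query, doc_a))  # 2.0
print(late_interaction_score(query, doc_b))  # 1.0
```

The toy example hints at why token-level matching helps: `doc_b` squeezes everything into one vector and can only partially match each query token, while `doc_a` lets each query token find its own best match.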
The PreFLMR model builds on the Fine-grained Late-interaction Multi-modal Retriever (FLMR) published at NeurIPS 2023, adding model improvements and large-scale pre-training on M2KR. Unlike DPR, FLMR and PreFLMR represent documents and queries with a matrix composed of all token vectors. Tokens include text tokens and image tokens projected into the text space. Late interaction is an algorithm for efficiently computing the relevance between two representation matrices: for each vector in the query matrix, find the nearest vector in the document matrix and compute their dot product, then sum these maximum dot products to obtain the final relevance score. In this way, each token's representation explicitly affects the final score, preserving token-level fine-grained information. Thanks to a dedicated late-interaction retrieval engine, PreFLMR can retrieve 100 relevant documents from 400,000 documents in just 0.2 seconds, which greatly improves usability in RAG scenarios.

The pre-training of PreFLMR consists of four stages. The authors also show that PreFLMR can be further fine-tuned on individual sub-datasets (such as OK-VQA and Infoseek) to obtain better retrieval performance on specific tasks.

## Experimental results and scaling

Best retrieval results: The best-performing PreFLMR model uses ViT-G as the image encoder and ColBERT-base-v2 as the text encoder, with two billion parameters in total. It outperforms baseline models on 7 M2KR retrieval subtasks (WIT, OVEN, Infoseek, E-VQA, OKVQA, etc.).

Scaling the vision encoder is more effective: The authors found that upgrading the ViT image encoder from ViT-B (86M) to ViT-L (307M) brought significant performance improvements, whereas scaling the ColBERT text encoder from base (110M) to large (345M) led to performance degradation and training instability.
The experimental results show that for late-interaction multi-modal retrieval systems, adding parameters to the vision encoder brings greater returns. In addition, using multiple layers of cross-attention for image-text projection performs the same as using a single layer, so the image-text projection network does not need to be complex.

PreFLMR makes RAG more effective: On knowledge-intensive visual question answering tasks, retrieval augmentation with PreFLMR greatly improves the final system, with performance gains of 94% on Infoseek and 275% on E-VQA, respectively. After simple fine-tuning, a BLIP-2-based model can beat the PALI-X model with hundreds of billions of parameters and the PaLM-Bison Lens system augmented with the Google API.

## Conclusion

The PreFLMR model proposed by the Cambridge Artificial Intelligence Laboratory is the first open-source, general-purpose late-interaction multi-modal retrieval model. After pre-training on millions of examples in M2KR, PreFLMR shows strong performance on multiple retrieval subtasks. The M2KR dataset, PreFLMR model weights, and code are available on the project homepage https://preflmr.github.io/.
