TensorFlow deep learning framework model inference pipeline for portrait cutout inference

Overview

To let ModelScope users work with the platform's models quickly and conveniently, ModelScope provides a full-featured Python library. It contains the implementations of the official ModelScope models, together with the data preprocessing, postprocessing, and evaluation code needed to use these models for inference, fine-tuning, and other tasks. It also offers a simple, easy-to-use API and a rich set of usage examples. By calling the library, users can run model inference, training, and evaluation with just a few lines of code, and can build on it to implement their own ideas.

The models currently provided by the library cover five major AI fields (image, natural language processing, speech, multi-modality, and science) and dozens of application tasks. For the specific tasks, see the Task Introduction document.

Deep Learning Framework

The ModelScope library currently supports deep learning frameworks such as PyTorch and TensorFlow, and support for more frameworks is planned. All official models can be used for inference through the ModelScope library, and some can also be trained and evaluated with it. For complete usage information, see the model card of the corresponding model.

Model Inference Pipeline

Model Inference

In deep learning, inference is the process by which a trained model makes predictions on input data. ModelScope performs inference through a pipeline that runs the necessary operations in sequence. A typical pipeline has three steps: data preprocessing, model forward inference, and data postprocessing.
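The three steps can be pictured with a toy sketch in plain Python. It is not ModelScope code: `preprocess`, `forward`, `postprocess`, and `run_pipeline` are hypothetical names, and the "model" simply splits text on spaces.

```python
from typing import Any, Callable, Dict, List


def preprocess(raw: str) -> str:
    """Step 1: normalize raw input into what the model expects."""
    return raw.strip()


def forward(features: str) -> List[str]:
    """Step 2: toy stand-in for the model's forward pass (splits on spaces)."""
    return features.split()


def postprocess(prediction: List[str]) -> Dict[str, Any]:
    """Step 3: wrap the raw prediction in a user-facing result dict."""
    return {"output": prediction}


def run_pipeline(raw: str, stages: List[Callable[[Any], Any]]) -> Any:
    """Apply each stage in order, feeding one stage's result to the next."""
    value: Any = raw
    for stage in stages:
        value = stage(value)
    return value


result = run_pipeline("  model inference pipeline ", [preprocess, forward, postprocess])
print(result)  # {'output': ['model', 'inference', 'pipeline']}
```

Keeping the stages as separate callables is what makes it possible to swap one of them (for example the preprocessor) without touching the others.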

Pipeline introduction

The pipeline() method is one of the most basic user methods in the ModelScope framework and can be used to quickly perform model inference in various fields. With the pipeline() method, users can easily complete model inference for specific tasks with just one line of code.

Usage of Pipeline

This article briefly introduces how to use the pipeline method to load a model for inference. With the pipeline method, users can pull the required model from the model repository by task type and model name and run inference with it. Its main advantage is ease of use: it provides a direct way to obtain and apply a model without requiring users to understand the model's internals, which lowers the barrier to using it and lets users focus on solving their problems.

- Environment preparation

- Important parameters

- Basic usage of Pipeline

- Specifying the preprocessor and model for inference

- Pipeline usage examples for different task scenarios

Basic usage of Pipeline

Chinese word segmentation

The pipeline function accepts a task name. Given only the task name, it loads that task's default model and creates the corresponding pipeline object.
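One way to picture "load the task's default model" is a lookup from task name to model ID. The sketch below is only an illustration: `DEFAULT_MODELS` and `resolve_model` are hypothetical and do not reflect how ModelScope stores its defaults. The two model IDs are the ones that appear in the log output shown in this article.

```python
from typing import Optional

# Task name -> default model ID, as seen in this article's log output.
# The table itself is an assumption made for illustration.
DEFAULT_MODELS = {
    "word-segmentation": "damo/nlp_structbert_word-segmentation_chinese-base",
    "portrait-matting": "damo/cv_unet_image-matting",
}


def resolve_model(task: str, model: Optional[str] = None) -> str:
    """Return the explicitly requested model, or the task's default model."""
    if model is not None:
        return model
    if task not in DEFAULT_MODELS:
        raise ValueError(f"no default model registered for task {task!r}")
    return DEFAULT_MODELS[task]


print(resolve_model("word-segmentation"))
# damo/nlp_structbert_word-segmentation_chinese-base
```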

Python code

    from modelscope.pipelines import pipeline

    word_segmentation = pipeline('word-segmentation')
    input_str = '开源技术小栈作者是Tinywan,你知道不?'
    print(word_segmentation(input_str))

PHP code

    <?php
    $operator = PyCore::import("operator");
    $builtins = PyCore::import("builtins");
    $pipeline = PyCore::import('modelscope.pipelines')->pipeline;

    $word_segmentation = $pipeline("word-segmentation");
    $input_str = "开源技术小栈作者是Tinywan,你知道不?";
    PyCore::print($word_segmentation($input_str));

Online conversion tool: https://www.swoole.com/py2php/

Output results

    /usr/local/php-8.2.14/bin/php demo.php
    2024-03-25 21:41:42,434 - modelscope - INFO - PyTorch version 2.2.1 Found.
    2024-03-25 21:41:42,434 - modelscope - INFO - Loading ast index from /home/www/.cache/modelscope/ast_indexer
    2024-03-25 21:41:42,577 - modelscope - INFO - Loading done! Current index file version is 1.13.0, with md5 f54e9d2dceb89a6c989540d66db83a65 and a total number of 972 components indexed
    2024-03-25 21:41:44,661 - modelscope - WARNING - Model revision not specified, use revision: v1.0.3
    2024-03-25 21:41:44,879 - modelscope - INFO - initiate model from /home/www/.cache/modelscope/hub/damo/nlp_structbert_word-segmentation_chinese-base
    2024-03-25 21:41:44,879 - modelscope - INFO - initiate model from location /home/www/.cache/modelscope/hub/damo/nlp_structbert_word-segmentation_chinese-base.
    2024-03-25 21:41:44,880 - modelscope - INFO - initialize model from /home/www/.cache/modelscope/hub/damo/nlp_structbert_word-segmentation_chinese-base
    You are using a model of type bert to instantiate a model of type structbert. This is not supported for all configurations of models and can yield errors.
    2024-03-25 21:41:48,633 - modelscope - WARNING - No preprocessor field found in cfg.
    2024-03-25 21:41:48,633 - modelscope - WARNING - No val key and type key found in preprocessor domain of configuration.json file.
    2024-03-25 21:41:48,633 - modelscope - WARNING - Cannot find available config to build preprocessor at mode inference, current config: {'model_dir': '/home/www/.cache/modelscope/hub/damo/nlp_structbert_word-segmentation_chinese-base'}. trying to build by task and model information.
    2024-03-25 21:41:48,639 - modelscope - INFO - cuda is not available, using cpu instead.
    2024-03-25 21:41:48,640 - modelscope - WARNING - No preprocessor field found in cfg.
    2024-03-25 21:41:48,640 - modelscope - WARNING - No val key and type key found in preprocessor domain of configuration.json file.
    2024-03-25 21:41:48,640 - modelscope - WARNING - Cannot find available config to build preprocessor at mode inference, current config: {'model_dir': '/home/www/.cache/modelscope/hub/damo/nlp_structbert_word-segmentation_chinese-base', 'sequence_length': 512}. trying to build by task and model information.
    /home/www/anaconda3/envs/tinywan-modelscope/lib/python3.10/site-packages/transformers/modeling_utils.py:962: FutureWarning: The `device` argument is deprecated and will be removed in v5 of Transformers.
      warnings.warn(
    {'output': ['开源', '技术', '小', '栈', '作者', '是', 'Tinywan', ',', '你', '知道', '不', '?']}

Input multiple samples

The pipeline object also accepts a list of samples as input and returns a list of outputs, one per input sample. Internally, the pipeline iterates over the inputs, processes each sample individually, and appends each result to the returned list.

Python code

    from modelscope.pipelines import pipeline

    word_segmentation = pipeline('word-segmentation')
    inputs = ['开源技术小栈作者是Tinywan,你知道不?', 'webman这个框架不错,建议你看看']
    print(word_segmentation(inputs))

PHP code

    <?php
    $operator = PyCore::import("operator");
    $builtins = PyCore::import("builtins");
    $pipeline = PyCore::import('modelscope.pipelines')->pipeline;

    $word_segmentation = $pipeline("word-segmentation");
    $inputs = new PyList(["开源技术小栈作者是Tinywan,你知道不?", "webman这个框架不错,建议你看看"]);
    PyCore::print($word_segmentation($inputs));

Output

    [{'output': ['开源', '技术', '小', '栈', '作者', '是', 'Tinywan', ',', '你', '知道', '不', '?']},
     {'output': ['webman', '这个', '框架', '不错', ',', '建议', '你', '看看']}]

Batch inference

Batch inference is similar to passing multiple samples as described above. The difference is that the model's forward pass processes the inputs in batches of the user-specified batch_size instead of one sample at a time.
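The difference between per-sample and batched processing can be sketched in plain Python. This is a toy model of the idea, not ModelScope's implementation: `chunked`, `toy_forward`, and `run` are hypothetical helpers, and the "forward pass" only splits text on spaces.

```python
from typing import Dict, Iterator, List, Sequence


def chunked(samples: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most batch_size samples."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(samples), batch_size):
        yield list(samples[start:start + batch_size])


def toy_forward(batch: List[str]) -> List[Dict[str, List[str]]]:
    """Hypothetical stand-in for one forward pass over a whole batch."""
    return [{"output": text.split()} for text in batch]


def run(samples: Sequence[str], batch_size: int = 1) -> List[Dict[str, List[str]]]:
    """Run toy_forward once per batch and flatten the results into one list."""
    results: List[Dict[str, List[str]]] = []
    forward_calls = 0
    for batch in chunked(samples, batch_size):
        results.extend(toy_forward(batch))
        forward_calls += 1
    print(f"batch_size={batch_size}: {forward_calls} forward call(s)")
    return results


samples = ["a b", "c d e", "f"]
per_sample = run(samples)             # prints "batch_size=1: 3 forward call(s)"
batched = run(samples, batch_size=2)  # prints "batch_size=2: 2 forward call(s)"
assert per_sample == batched          # same results, fewer forward calls
```

The results are the same in both modes; only the number of forward calls changes, which is where batching can save time on real models.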

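    from modelscope.pipelines import pipeline

    word_segmentation = pipeline('word-segmentation')
    inputs = ['今天天气不错,适合出去游玩', '这本书很好,建议你看看']
    # Specify the batch_size parameter to enable batch inference
    print(word_segmentation(inputs, batch_size=2))
    # Output:
    # [{'output': ['今天', '天气', '不错', ',', '适合', '出去', '游玩']}, {'output': ['这', '本', '书', '很', '好', ',', '建议', '你', '看看']}]

Input a data set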

    from modelscope.msdatasets import MsDataset
    from modelscope.pipelines import pipeline

    inputs = ['今天天气不错,适合出去游玩', '这本书很好,建议你看看']
    dataset = MsDataset.load(inputs, target='sentence')
    word_segmentation = pipeline('word-segmentation')
    outputs = word_segmentation(dataset)
    for o in outputs:
        print(o)

    # Output:
    # {'output': ['今天', '天气', '不错', ',', '适合', '出去', '游玩']}
    # {'output': ['这', '本', '书', '很', '好', ',', '建议', '你', '看看']}

Specify preprocessing and model for inference

The pipeline function also accepts already instantiated preprocessor and model objects, so users can customize the preprocessing and the model used during inference.
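The idea of handing a pipeline ready-made parts can be sketched with a toy class. `ToyPipeline`, `default_preprocessor`, and `default_model` are hypothetical names invented for this illustration; the real pipeline function is shown in the sections that follow.

```python
from typing import Callable, Dict, List, Optional


def default_preprocessor(text: str) -> str:
    """Default preprocessing: trim surrounding whitespace."""
    return text.strip()


def default_model(text: str) -> List[str]:
    """Default toy 'model': split text on spaces."""
    return text.split()


class ToyPipeline:
    """Hypothetical pipeline that falls back to defaults for parts not supplied."""

    def __init__(
        self,
        model: Optional[Callable[[str], List[str]]] = None,
        preprocessor: Optional[Callable[[str], str]] = None,
    ) -> None:
        self.model = model if model is not None else default_model
        self.preprocessor = (
            preprocessor if preprocessor is not None else default_preprocessor
        )

    def __call__(self, text: str) -> Dict[str, List[str]]:
        return {"output": self.model(self.preprocessor(text))}


default = ToyPipeline()
custom = ToyPipeline(preprocessor=lambda text: text.strip().lower())
print(default("  Hello World "))  # {'output': ['Hello', 'World']}
print(custom("  Hello World "))   # {'output': ['hello', 'world']}
```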

Creating model objects for inference

Python code

    from modelscope.models import Model
    from modelscope.pipelines import pipeline

    model = Model.from_pretrained('damo/nlp_structbert_word-segmentation_chinese-base')
    word_segmentation = pipeline('word-segmentation', model=model)
    inputs = ['开源技术小栈作者是Tinywan,你知道不?', 'webman这个框架不错,建议你看看']
    print(word_segmentation(inputs))

PHP code

    <?php
    $operator = PyCore::import("operator");
    $builtins = PyCore::import("builtins");
    $Model = PyCore::import('modelscope.models')->Model;
    $pipeline = PyCore::import('modelscope.pipelines')->pipeline;

    $model = $Model->from_pretrained("damo/nlp_structbert_word-segmentation_chinese-base");
    $word_segmentation = $pipeline("word-segmentation", model: $model);
    $inputs = new PyList(["开源技术小栈作者是Tinywan,你知道不?", "webman这个框架不错,建议你看看"]);
    PyCore::print($word_segmentation($inputs));

Output

    [{'output': ['开源', '技术', '小', '栈', '作者', '是', 'Tinywan', ',', '你', '知道', '不', '?']},
     {'output': ['webman', '这个', '框架', '不错', ',', '建议', '你', '看看']}]

Create preprocessor and model objects for inference

    from modelscope.models import Model
    from modelscope.pipelines import pipeline
    from modelscope.preprocessors import Preprocessor, TokenClassificationTransformersPreprocessor

    model = Model.from_pretrained('damo/nlp_structbert_word-segmentation_chinese-base')
    tokenizer = Preprocessor.from_pretrained(model.model_dir)
    # Or call the constructor directly:
    # tokenizer = TokenClassificationTransformersPreprocessor(model.model_dir)
    word_segmentation = pipeline('word-segmentation', model=model, preprocessor=tokenizer)
    inputs = ['开源技术小栈作者是Tinywan,你知道不?', 'webman这个框架不错,建议你看看']
    print(word_segmentation(inputs))

Output

    [{'output': ['开源', '技术', '小', '栈', '作者', '是', 'Tinywan', ',', '你', '知道', '不', '?']},
     {'output': ['webman', '这个', '框架', '不错', ',', '建议', '你', '看看']}]

Image

Note:

- Make sure the OpenCV library is installed. If it is not, install it with pip:

pip install opencv-python

If it is not installed, PHP reports: PHP Fatal error: Uncaught PyError: No module named 'cv2' in /home/www/build /ai/demo3.php:4

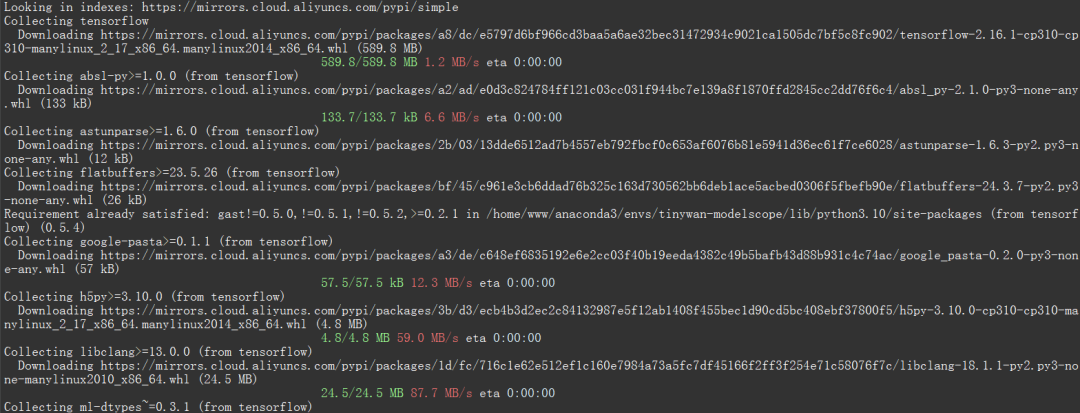

- Make sure the TensorFlow deep learning framework is installed

Otherwise ModelScope reports: modelscope.pipelines.cv.image_matting_pipeline requires the TensorFlow library but it was not found in your environment. Checkout the instructions on the installation page: https://www.tensorflow.org/install and follow the ones that match your environment.

The message means that the modelscope.pipelines.cv.image_matting_pipeline module depends on TensorFlow and cannot work because TensorFlow is not installed.

You can install the latest version of TensorFlow with the following command:

pip install tensorflow
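Both errors above come from a missing Python module, so it can help to check for the modules before running the pipeline. The following is a minimal sketch using only the standard library; `REQUIRED` and `missing_modules` are hypothetical names, and the module-to-package mapping is taken from the two pip commands in this article.

```python
import importlib.util
from typing import Iterable, List

# Import name -> pip package name, as used in this article.
REQUIRED = {"cv2": "opencv-python", "tensorflow": "tensorflow"}


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


for name in missing_modules(REQUIRED):
    print(f"{name} is missing; install it with: pip install {REQUIRED[name]}")
```

`importlib.util.find_spec` only looks the module up and does not import it, so the check stays fast even for a heavy package such as TensorFlow.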

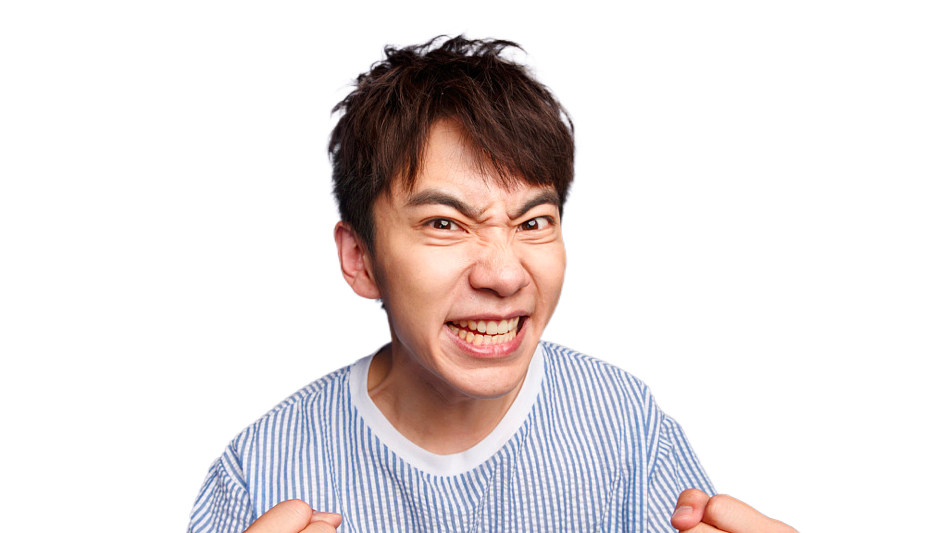

Python code

    import cv2
    from modelscope.pipelines import pipeline

    portrait_matting = pipeline('portrait-matting')
    result = portrait_matting('https://modelscope.oss-cn-beijing.aliyuncs.com/test/images/image_matting.png')
    cv2.imwrite('result.png', result['output_img'])

PHP code

    <?php
    $operator = PyCore::import("operator");
    $builtins = PyCore::import("builtins");
    $cv2 = PyCore::import('cv2');
    $pipeline = PyCore::import('modelscope.pipelines')->pipeline;

    $portrait_matting = $pipeline("portrait-matting");
    $result = $portrait_matting("https://modelscope.oss-cn-beijing.aliyuncs.com/test/images/image_matting.png");
    $cv2->imwrite("tinywan_result.png", $result->__getitem__("output_img"));

Load a local image file

    $result = $portrait_matting("./tinywan.png");

Execution result

    /usr/local/php-8.2.14/bin/php tinywan-images.php
    2024-03-25 22:17:25,630 - modelscope - INFO - PyTorch version 2.2.1 Found.
    2024-03-25 22:17:25,631 - modelscope - INFO - TensorFlow version 2.16.1 Found.
    2024-03-25 22:17:25,631 - modelscope - INFO - Loading ast index from /home/www/.cache/modelscope/ast_indexer
    2024-03-25 22:17:25,668 - modelscope - INFO - Loading done! Current index file version is 1.13.0, with md5 f54e9d2dceb89a6c989540d66db83a65 and a total number of 972 components indexed
    2024-03-25 22:17:26,990 - modelscope - WARNING - Model revision not specified, use revision: v1.0.0
    2024-03-25 22:17:27.623085: I tensorflow/core/util/port.cc:113] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.
    2024-03-25 22:17:27.678592: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.
    To enable the following instructions: AVX2 AVX512F AVX512_VNNI FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.
    2024-03-25 22:17:28.551510: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT
    2024-03-25 22:17:29,206 - modelscope - INFO - initiate model from /home/www/.cache/modelscope/hub/damo/cv_unet_image-matting
    2024-03-25 22:17:29,206 - modelscope - INFO - initiate model from location /home/www/.cache/modelscope/hub/damo/cv_unet_image-matting.
    2024-03-25 22:17:29,209 - modelscope - WARNING - No preprocessor field found in cfg.
    2024-03-25 22:17:29,210 - modelscope - WARNING - No val key and type key found in preprocessor domain of configuration.json file.
    2024-03-25 22:17:29,210 - modelscope - WARNING - Cannot find available config to build preprocessor at mode inference, current config: {'model_dir': '/home/www/.cache/modelscope/hub/damo/cv_unet_image-matting'}. trying to build by task and model information.
    2024-03-25 22:17:29,210 - modelscope - WARNING - Find task: portrait-matting, model type: None. Insufficient information to build preprocessor, skip building preprocessor
    WARNING:tensorflow:From /home/www/anaconda3/envs/tinywan-modelscope/lib/python3.10/site-packages/modelscope/utils/device.py:60: is_gpu_available (from tensorflow.python.framework.test_util) is deprecated and will be removed in a future version.
    Instructions for updating:
    Use `tf.config.list_physical_devices('GPU')` instead.
    2024-03-25 22:17:29,213 - modelscope - INFO - loading model from /home/www/.cache/modelscope/hub/damo/cv_unet_image-matting/tf_graph.pb
    WARNING:tensorflow:From /home/www/anaconda3/envs/tinywan-modelscope/lib/python3.10/site-packages/modelscope/pipelines/cv/image_matting_pipeline.py:45: FastGFile.__init__ (from tensorflow.python.platform.gfile) is deprecated and will be removed in a future version.
    Instructions for updating:
    Use tf.gfile.GFile.
    2024-03-25 22:17:29,745 - modelscope - INFO - load model done

Output image

[Image: output of the portrait matting pipeline]

The above is the detailed content of TensorFlow deep learning framework model inference pipeline for portrait cutout inference. For more information, please follow other related articles on the PHP Chinese website!
