Watch videos, draw CAD, and recognize motor imagery! This 75B multimodal industrial large model is remarkably capable

The focus of this year’s upgrade is the introduction of multi-modal large model capabilities.

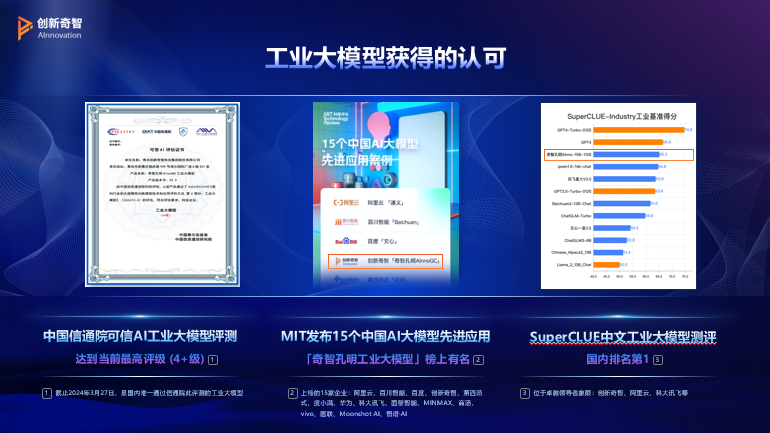

As the videos and music created by Sora and Suno spark an audiovisual revolution around the world, how will multimodal large-model applications evolve in industry? On March 27, Innovation Qizhi, a leading Chinese provider of "AI manufacturing" solutions, unveiled its forward-looking answer.

After half a year of work, Innovation Qizhi released the more powerful Qizhi Haiming Industrial Large Model 2.0 (AInno-75B) at a press conference in Beijing. Several large-model-native applications also debuted, including ChatVision and ChatCAD, and ChatRobot was upgraded to a Pro version.

Innovation Qizhi CTO Zhang Faen at the press conference

Scaling laws help researchers and engineers predict the performance gains from increasing model size, and the number of parameters needed to reach a specific performance target. There is currently some consensus in the industry that increasing parameter count improves model performance. Compared with AInno-15B, AInno-75B is substantially larger and performs significantly better. The focus of this year's upgrade is the introduction of multimodal large-model capabilities. Zhang Faen explained that the new model can handle multiple modalities, including text, images, and video, and can even integrate data types unique to industrial scenarios, such as CAD drawings and EEG signals. Its outputs are equally diverse: text, images, videos, CAD design drawings, or embodied (robot) operating behaviors.
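The scaling-law idea can be made concrete with a power law in parameter count. The sketch below assumes the functional form and published fits from Kaplan et al. (2020), L(N) = (N_c / N)^α_N with α_N ≈ 0.076 and N_c ≈ 8.8 × 10^13 non-embedding parameters. These constants are illustrative only: they were not fitted to AInno-15B or AInno-75B, whose scaling behavior the article does not report, and the function names are our own.

```python
import math

# Assumed constants: Kaplan et al. (2020) fit of loss vs. non-embedding
# parameter count. Illustrative only; not fitted to any AInno model.
ALPHA_N = 0.076
N_C = 8.8e13


def predicted_loss(n_params: float) -> float:
    """Power-law scaling law: L(N) = (N_c / N) ** alpha_N."""
    return (N_C / n_params) ** ALPHA_N


def params_for_loss(target_loss: float) -> float:
    """Invert the law to get the size needed for a target loss: N = N_c / L ** (1 / alpha_N)."""
    return N_C / target_loss ** (1.0 / ALPHA_N)


if __name__ == "__main__":
    for n in (15e9, 75e9):
        print(f"{n / 1e9:.0f}B params -> predicted loss {predicted_loss(n):.3f}")
    # Relative change in predicted loss from a 5x increase in parameters.
    ratio = predicted_loss(75e9) / predicted_loss(15e9)
    print(f"loss ratio 75B/15B: {ratio:.3f}")
    # Size needed to reach the 75B model's predicted loss (recovers ~75e9).
    print(f"params for that loss: {params_for_loss(predicted_loss(75e9)):.3e}")
```

Under this assumed law, a 5× increase in parameters (15B to 75B) multiplies the predicted loss by 5^-0.076 ≈ 0.885, and `params_for_loss` answers the inverse question of how large a model must be to reach a given loss.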

1. ChatCAD: the beauty of industrial "text-to-image"

The images and videos generated by consumer-facing (C-side) AIGC applications are breathtaking, and in enterprise services, AI generation capabilities are equally exciting. Industrial design is the cornerstone of production: from mobile phones to new-energy vehicle factories, design must be completed before production and construction begin. As the foundation of industrial design, CAD software holds an important position in the industrial chain. For a long time, China's CAD software market has been dominated by foreign vendors whose products have complex interfaces and high barriers to use. Wang Xian, general manager of operations at China Zhongyuan International Mechanical Engineering Co., Ltd., said that most of their design work relies on manual labor. Every building, whether a standard floor or a complex, must be drawn piece by piece by designers. The same goes for industrial drawings, which consume considerable manpower and material resources. In addition, industry specifications are numerous and frequently revised, which makes design even harder.

To change this, Innovation Qizhi took the lead in bringing industrial large-model technology into industrial design and launched a text-to-CAD application, ChatCAD. Through simple dialogue and Q&A, it quickly grasps the designer's creative intent, automatically generates industrial design drawings that meet the requirements, and exports them to traditional software for fine-tuning.

Live demonstration of industrial pulley design

Even lengthy and complex component-design requirements are within ChatCAD's reach. For example: "Help me design a turbine. The turbine consists of a motor and an engine cover. The specific requirements are as follows: the motor is cylindrical, 20 in length and 16 in diameter. The turbine consists of a cylindrical turbine shaft and 5 fan blades; the turbine shaft is 20 in length and 12 in diameter. The top of the turbine should have a cylindrical cone-shaped rotating shaft cap, 9 in length and 12 in diameter. The engine cover is 50 in diameter and 30 in length, and the distance between the turbine blades and the engine cover is 1."
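One way to see what ChatCAD has to extract from such a prompt is to write the quoted requirements as structured parameters. The sketch below is a hypothetical data model (the class and field names are ours, not ChatCAD's). It records the dimensions from the prompt and derives two values the prompt implies: the maximum blade-tip radius inside the engine cover given the clearance of 1, and the angular spacing of the 5 blades. Treating the cover diameter as its inner diameter and spacing the blades evenly are both assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurbineSpec:
    """Hypothetical structured form of the turbine prompt (units unspecified, as in the prompt)."""
    motor_length: float = 20
    motor_diameter: float = 16
    shaft_length: float = 20
    shaft_diameter: float = 12
    blade_count: int = 5
    cap_length: float = 9
    cap_diameter: float = 12
    cover_length: float = 30
    cover_diameter: float = 50
    blade_cover_clearance: float = 1

    def max_blade_tip_radius(self) -> float:
        # Blades must stop short of the cover wall by the clearance
        # (assumes cover_diameter is the cover's inner diameter).
        return self.cover_diameter / 2 - self.blade_cover_clearance

    def blade_pitch_degrees(self) -> float:
        # Assumes the blades are spaced evenly around the shaft.
        return 360 / self.blade_count

    def validate(self) -> list[str]:
        """Return human-readable problems; an empty list means no conflict was found."""
        problems = []
        if self.max_blade_tip_radius() <= self.shaft_diameter / 2:
            problems.append("blades would not extend beyond the shaft")
        if self.motor_diameter >= self.cover_diameter:
            problems.append("motor does not fit inside the engine cover")
        return problems


spec = TurbineSpec()
print(spec.max_blade_tip_radius())  # 24.0
print(spec.blade_pitch_degrees())   # 72.0
print(spec.validate())              # []
```

Checks like `validate` are one way a generator could catch contradictory requirements before producing geometry.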

ChatCAD still generated the result and continued to refine it based on feedback. Designs generated by ChatCAD also support mainstream file formats and connect seamlessly to other industrial software for subsequent integration and modification.
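Zhang Faen describes ChatCAD's approach as having the large model emit an intermediate language that is then translated into CAD. The actual intermediate language is not public, so the sketch below invents a tiny one (hypothetical `Cylinder` and `Cone` primitives stacked along the z axis) and translates it into OpenSCAD source, an open, text-based CAD format, purely to illustrate the two-stage idea. Modeling the shaft cap as a plain cone and stacking the parts bottom to top are simplifying assumptions.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Cylinder:
    """Hypothetical IR primitive: a cylinder of given height and diameter."""
    height: float
    diameter: float


@dataclass(frozen=True)
class Cone:
    """Hypothetical IR primitive: a cone tapering from base_diameter to a point."""
    height: float
    base_diameter: float


Primitive = Union[Cylinder, Cone]


def to_openscad(stack: list[Primitive]) -> str:
    """Translate a bottom-to-top stack of IR primitives into OpenSCAD source.

    Each primitive is placed directly on top of the previous one along z.
    """
    lines = []
    z = 0.0
    for prim in stack:
        if isinstance(prim, Cylinder):
            body = f"cylinder(h={prim.height}, d={prim.diameter});"
        elif isinstance(prim, Cone):
            # OpenSCAD draws a cone as a cylinder whose top diameter d2 is 0.
            body = f"cylinder(h={prim.height}, d1={prim.base_diameter}, d2=0);"
        else:
            raise TypeError(f"unsupported primitive: {prim!r}")
        lines.append(f"translate([0, 0, {z}]) {body}")
        z += prim.height
    return "\n".join(lines)


# A hypothetical fragment of the turbine demo: motor, turbine shaft, shaft cap.
ir = [Cylinder(20, 16), Cylinder(20, 12), Cone(9, 12)]
print(to_openscad(ir))
```

Keeping the model's output in a small, checkable IR rather than a full CAD format means the translator, not the model, is responsible for producing valid geometry syntax.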

Live demonstration of turbine design

The feature excited Wang Xian. He believes ChatCAD could help the industry cut repetitive labor and escape rigid specification constraints, which would in turn affect how labor is quoted across the entire industry.

So how is ChatCAD implemented? Zhang Faen explained that CAD differs from common modalities such as text, images, and video: it has to represent geometric data such as points, lines, edges, circles, and cylinders, as well as processes. "So we also call it a modality, one that the consumer side does not have. We had to invent our own intermediate language to express CAD, have the large model generate this intermediate language, or intermediate code, and then translate that intermediate code into CAD."

Simple designs can be used directly for machining, while complex designs still need refinement. ChatCAD's goal is to become a right-hand assistant for engineers at design institutes. It is expected to shorten a design process that once took ten hours to one hour, with the large model doing 90% of the work and engineers refining the remaining 10%.

Notably, Innovation Qizhi has integrated large-model technology into a range of industrial software, including CAD, MES, and BI, enabling intelligent transformation and upgrading across the full "R&D design, production control, information management" process.

Live demonstration: ChatVision finds specific targets such as "the power socket in the frame" and "the white hard hat"

These instructions look simple. Without a large model, however, a dedicated algorithm has to be developed for each narrow recognition category (such as hard hats or smoking); once tuned and deployed, such an algorithm is hard to modify, and implementation is costly and slow. Large models overturn this traditional paradigm.
A single large model can cover the functions of many small models, outperform them in performance, accuracy, and generalization, and support natural-language interaction, which greatly simplifies development and deployment.

During the live demonstration, the scene changed: one colleague took off his work cap to play with his mobile phone, and another took off his safety clothing. The demonstrator issued the instruction: "Please analyze this frame carefully. If there are any violations, send an email to the administrator." The instruction is knowledge-intensive: it requires not only judging what counts as a violation but also deciding whether to send an email and to whom. This is the typical service model of a large-model-native application. ChatVision invoked a number of safety-monitoring skills in the background, flagged three violations, and sent an email with screenshots.

A clear demonstration from the officially released ChatVision demo

The ChatVision demonstration showcases the planning and reasoning capabilities of industrial large models: the model converts the user's intent into a series of external tool calls and completes a complex video-understanding task step by step. Zhang Faen, CTO of Innovation Qizhi, said the company has accumulated more than 200 visual algorithms and model assets over the past few years, and industrial large models have opened up new horizons for applying them. Large models can act as intelligent orchestrators that improve the user experience, and their multimodal capabilities can also strengthen video understanding, which plays a significant role in enterprise safety.

The final demonstration highlighted the cutting-edge application of large models in the multimodal field.
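The violation-check demo follows a common plan-and-call-tools pattern: one instruction becomes a sequence of tool calls, and the results decide whether a follow-up action (the email) fires. In the sketch below, the plan that a model would produce is hard-coded, and the skill names, the `Outbox` email stub, and the dict-based frame representation are all hypothetical stand-ins, not ChatVision's actual interfaces.

```python
from dataclasses import dataclass, field
from typing import Callable

# A "frame" here is a stand-in: per-person attributes that a real
# detector would infer from pixels (hypothetical representation).
Frame = list[dict]


def detect_missing_hard_hat(frame: Frame) -> list[str]:
    return [f"{p['id']}: no hard hat" for p in frame if not p.get("hard_hat", True)]


def detect_phone_use(frame: Frame) -> list[str]:
    return [f"{p['id']}: using phone" for p in frame if p.get("phone", False)]


def detect_missing_safety_clothing(frame: Frame) -> list[str]:
    return [f"{p['id']}: no safety clothing" for p in frame if not p.get("safety_clothing", True)]


# Registry of "skills" the orchestrator may call (names are hypothetical).
SKILLS: dict[str, Callable[[Frame], list[str]]] = {
    "detect_missing_hard_hat": detect_missing_hard_hat,
    "detect_phone_use": detect_phone_use,
    "detect_missing_safety_clothing": detect_missing_safety_clothing,
}


@dataclass
class Outbox:
    """Stub mail sender that records messages instead of sending them."""
    sent: list[tuple[str, str]] = field(default_factory=list)

    def send_email(self, to: str, body: str) -> None:
        self.sent.append((to, body))


def run_plan(plan: list[str], frame: Frame, outbox: Outbox, admin: str) -> list[str]:
    """Execute the planned skill calls, then email the admin only if violations exist."""
    violations = [v for skill in plan for v in SKILLS[skill](frame)]
    if violations:
        outbox.send_email(admin, "Violations found:\n" + "\n".join(violations))
    return violations


# In ChatVision a model would derive this plan from the instruction;
# here it simply calls every registered skill.
plan = list(SKILLS)
frame = [
    {"id": "worker-1", "hard_hat": False, "phone": True},
    {"id": "worker-2", "safety_clothing": False},
]
outbox = Outbox()
print(run_plan(plan, frame, outbox, admin="admin@example.com"))
print(len(outbox.sent))
```

With this made-up frame, the plan flags three violations and sends one email; a frame with no violations sends nothing, which mirrors the conditional "send an email if there are violations" part of the instruction.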
Faced with a real workshop video, the demonstrator posed a difficult request: "Please analyze this video carefully, tell me whether anyone is eating, and mark when the action occurs." The task requires the large model to recognize a continuous action across a long sequence of frames and mark its start and end times. ChatVision accurately located the scene in the first 15 seconds of the video in which workers were eating. "Eating is a very common event, and large models understand events far better than traditional small algorithmic models," Zhang Faen explained.

There has long been an urgent need to safeguard production and engineering safety through video. Going forward, work built around large models is expected to deliver intelligent video understanding of production-safety conditions and production-process compliance. In Wang Xian's view, safety is always the top priority in engineering projects, yet for many years engineering safety training has rarely covered on-site hazard identification. He believes ChatVision has broad application prospects and expects it to be deployed for on-site hard-hat detection, checking that safety ropes are worn when working at height, checking that safety equipment is carried, and similar scenarios. ChatVision also has great potential in the engineering supervision industry, where many on-site safety inspections still rely heavily on manual labor.

ChatRobot, the native application built on AInno-15B, already enables voice control of industrial robots. Tell ChatRobot "Bring me a cup of coffee", and it directs an industrial robot arm to find the coffee on the shelf and plan its own route to deliver it to you. ChatRobot Pro can process a more complex information carrier: EEG signals. EEG signals are produced by brain activity, but the relationship between brain activity and EEG signals is highly complex, and decoding them has become a major problem for researchers.
Traditional approaches achieve low accuracy, whereas AInno-75B shows potential for interpreting this kind of multimodal information. Some foreign brain-computer interface technologies use invasive electrodes to acquire EEG signals, which raises a series of engineering issues, including electrode design, surgical implantation, rejection reactions, signal transmission, and signal decoding. Innovation Qizhi uses non-invasive EEG caps to collect EEG data, which greatly reduces the engineering difficulty. However, Zhang Faen also noted that the invasive approach yields more channels and clearer signals, which makes it easier to decode more complex intentions later. A vivid metaphor: collecting EEG signals invasively is like listening to a concert inside the stadium, while the non-invasive approach is like listening from outside; the clarity of the singing differs greatly.

Innovation Qizhi's current research and development aims to verify the multimodal capabilities of industrial large models and to conduct technical pre-research for possible future brain-controlled industrial automation scenarios. Zhang Faen emphasized that this, too, is an end-to-end native application: the entire process, from EEG signal input to the final result (a robotic arm delivering the item to the demonstrator), is handled by neural networks, without relying on hand-designed features or traditional data processing.

Beyond natural-language interaction and motor imagery recognition, ChatRobot Pro also draws on the reasoning capabilities of industrial large models to orchestrate long task sequences and make complex decisions. Giving strong intelligent control and decision-making capabilities to different embodiments, whether industrial robotic arms, AGVs, or others, is also a future direction for Innovation Qizhi's industrial large model.
In the era of generative AI, industrial applications have no precedent to follow, and Innovation Qizhi has been exploring what is possible in industrial scenarios. Zhang Faen describes the prospects of large models in enterprise services as "promising". But he admitted that during a window of technological change, people's understanding is often uneven, especially for large shifts; understanding takes time to catch up, and he is no exception.

Beyond the new native applications, ChatDOC, released last year, has improved in overall performance and effectiveness, and its features are more complete. ChatBI now supports Excel and CSV data, and the accuracy of its generated SQL statements and analysis reports has increased by 15%. The large-model serving engine is easier to deploy and delivers higher inference performance. "Innovation Qizhi will further polish the ChatX applications built directly on the core generation capabilities of industrial large models," Zhang Faen said.

In the on-site demonstration at the press conference, ChatVision accurately responded both to comprehensive-understanding instructions such as "Look carefully at the current frame and tell me where this might be" and to specific target-recognition tasks such as "Find the power socket in the frame" and "Find the white hard hat", demonstrating its broad application prospects.
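The eating-detection task described earlier asks for the start and end time of an action, which is a temporal-localization step on top of per-frame recognition. A minimal sketch, assuming some model has already produced a per-frame "eating" score (the scores, frame rate, threshold, and minimum-duration filter below are all hypothetical, and this is not ChatVision's published method), groups consecutive above-threshold frames into time segments:

```python
def action_segments(
    scores: list[float],
    fps: float,
    threshold: float = 0.5,
    min_duration_s: float = 0.0,
) -> list[tuple[float, float]]:
    """Merge consecutive frames whose score >= threshold into (start_s, end_s) segments.

    end_s is exclusive: the time just after the last positive frame.
    Segments shorter than min_duration_s are dropped as likely noise.
    """
    segments = []
    start = None
    for i, s in enumerate(scores + [float("-inf")]):  # sentinel closes a trailing run
        if s >= threshold and start is None:
            start = i
        elif s < threshold and start is not None:
            seg = (start / fps, i / fps)
            if seg[1] - seg[0] >= min_duration_s:
                segments.append(seg)
            start = None
    return segments


# Hypothetical per-frame "eating" scores sampled at 1 frame per second.
scores = [0.9] * 15 + [0.1] * 10 + [0.7] * 1
print(action_segments(scores, fps=1.0, min_duration_s=2.0))  # [(0.0, 15.0)]
```

The one-frame spike at the end is discarded by the minimum-duration filter, a simple way to suppress flickering detections when reporting action times.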

At the press conference, the demonstrator picked a product at random (Uniform Green Tea) and asked a man with multiple electrodes fixed to his scalp to use motor imagery to direct an industrial robot to put the drink into his hands. Wearing the EEG collector, the man imagined three commands: left, right, and select. The on-screen cursor moved left and right according to the signals decoded by the large model; when the cursor reached the target icon, he stared at the icon to click and select it.
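The cursor interaction in this demo can be modeled as a small state machine driven by decoded intents. The sketch below assumes the EEG decoder (the large model, in the demo) already emits one of three labels, `left`, `right`, or `select`, per decision window; the decoder itself is not modeled, and the item list and function name are hypothetical.

```python
from typing import Optional


def run_cursor(items: list[str], intents: list[str], start: int = 0) -> Optional[str]:
    """Move a cursor over `items` using decoded intents; return the selected item.

    'left'/'right' move the cursor one slot (clamped at the ends); 'select'
    returns the item under the cursor. Unknown labels are ignored, one simple
    way to tolerate noisy decoding. Returns None if nothing was selected.
    """
    cursor = start
    for intent in intents:
        if intent == "left":
            cursor = max(cursor - 1, 0)
        elif intent == "right":
            cursor = min(cursor + 1, len(items) - 1)
        elif intent == "select":
            return items[cursor]
    return None


# Hypothetical shelf items shown as icons on screen.
items = ["cola", "green tea", "coffee", "water"]
print(run_cursor(items, ["right", "right", "left", "select"]))  # green tea
```

Reducing the decoder's job to three discrete labels is what makes a low-channel, non-invasive signal usable here: the interface needs only coarse intents, not a continuous trajectory.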

ChatRobot Pro then independently orchestrated the task, generating executable task steps and interacting with the industrial robot's interface in real time to direct the robot to complete the task.
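The orchestration step can be pictured as turning a goal into an ordered list of robot commands and executing them with a check after each one. In the sketch below, the step names, the `MockRobot` interface, and the fixed plan are hypothetical; in ChatRobot Pro the plan is generated by the large model, and the article does not describe the real robot interface.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MockRobot:
    """Hypothetical robot interface that records each command it executes."""
    shelf: set
    log: list = field(default_factory=list)
    holding: Optional[str] = None

    def locate(self, item: str) -> bool:
        self.log.append(f"locate {item}")
        return item in self.shelf  # fails if the item is not on the shelf

    def grasp(self, item: str) -> bool:
        self.log.append(f"grasp {item}")
        self.holding = item
        return True  # the mock always succeeds

    def move_to(self, target: str) -> bool:
        self.log.append(f"move_to {target}")
        return True

    def release(self) -> bool:
        self.log.append(f"release {self.holding}")
        self.holding = None
        return True


def plan_delivery(item: str) -> list:
    """A fixed plan for 'deliver <item> to the user' (a model would generate this)."""
    return [("locate", item), ("grasp", item), ("move_to", "user"), ("release", None)]


def execute(robot: MockRobot, plan: list) -> bool:
    """Run steps in order; stop at the first step that reports failure."""
    for action, arg in plan:
        method = getattr(robot, action)
        ok = method(arg) if arg is not None else method()
        if not ok:
            robot.log.append(f"abort at {action}")
            return False
    return True


robot = MockRobot(shelf={"green tea", "coffee"})
print(execute(robot, plan_delivery("green tea")), robot.log)
```

Checking each step's result before moving on is the simplest form of the closed-loop execution the demo implies; a missing item aborts at `locate` instead of sending the arm on a pointless route.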

The above is the detailed content of Watch videos, draw CAD, and recognize motion imagery! 75B's large multi-modal industrial model is so capable. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

DeepMind robot plays table tennis, and its forehand and backhand slip into the air, completely defeating human beginners

Aug 09, 2024 pm 04:01 PM

But maybe he can’t defeat the old man in the park? The Paris Olympic Games are in full swing, and table tennis has attracted much attention. At the same time, robots have also made new breakthroughs in playing table tennis. Just now, DeepMind proposed the first learning robot agent that can reach the level of human amateur players in competitive table tennis. Paper address: https://arxiv.org/pdf/2408.03906 How good is the DeepMind robot at playing table tennis? Probably on par with human amateur players: both forehand and backhand: the opponent uses a variety of playing styles, and the robot can also withstand: receiving serves with different spins: However, the intensity of the game does not seem to be as intense as the old man in the park. For robots, table tennis

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

On August 21, the 2024 World Robot Conference was grandly held in Beijing. SenseTime's home robot brand "Yuanluobot SenseRobot" has unveiled its entire family of products, and recently released the Yuanluobot AI chess-playing robot - Chess Professional Edition (hereinafter referred to as "Yuanluobot SenseRobot"), becoming the world's first A chess robot for the home. As the third chess-playing robot product of Yuanluobo, the new Guoxiang robot has undergone a large number of special technical upgrades and innovations in AI and engineering machinery. For the first time, it has realized the ability to pick up three-dimensional chess pieces through mechanical claws on a home robot, and perform human-machine Functions such as chess playing, everyone playing chess, notation review, etc.

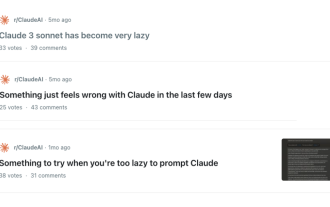

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

The start of school is about to begin, and it’s not just the students who are about to start the new semester who should take care of themselves, but also the large AI models. Some time ago, Reddit was filled with netizens complaining that Claude was getting lazy. "Its level has dropped a lot, it often pauses, and even the output becomes very short. In the first week of release, it could translate a full 4-page document at once, but now it can't even output half a page!" https:// www.reddit.com/r/ClaudeAI/comments/1by8rw8/something_just_feels_wrong_with_claude_in_the/ in a post titled "Totally disappointed with Claude", full of

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference being held in Beijing, the display of humanoid robots has become the absolute focus of the scene. At the Stardust Intelligent booth, the AI robot assistant S1 performed three major performances of dulcimer, martial arts, and calligraphy in one exhibition area, capable of both literary and martial arts. , attracted a large number of professional audiences and media. The elegant playing on the elastic strings allows the S1 to demonstrate fine operation and absolute control with speed, strength and precision. CCTV News conducted a special report on the imitation learning and intelligent control behind "Calligraphy". Company founder Lai Jie explained that behind the silky movements, the hardware side pursues the best force control and the most human-like body indicators (speed, load) etc.), but on the AI side, the real movement data of people is collected, allowing the robot to become stronger when it encounters a strong situation and learn to evolve quickly. And agile

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Deep integration of vision and robot learning. When two robot hands work together smoothly to fold clothes, pour tea, and pack shoes, coupled with the 1X humanoid robot NEO that has been making headlines recently, you may have a feeling: we seem to be entering the age of robots. In fact, these silky movements are the product of advanced robotic technology + exquisite frame design + multi-modal large models. We know that useful robots often require complex and exquisite interactions with the environment, and the environment can be represented as constraints in the spatial and temporal domains. For example, if you want a robot to pour tea, the robot first needs to grasp the handle of the teapot and keep it upright without spilling the tea, then move it smoothly until the mouth of the pot is aligned with the mouth of the cup, and then tilt the teapot at a certain angle. . this

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

At this ACL conference, contributors have gained a lot. The six-day ACL2024 is being held in Bangkok, Thailand. ACL is the top international conference in the field of computational linguistics and natural language processing. It is organized by the International Association for Computational Linguistics and is held annually. ACL has always ranked first in academic influence in the field of NLP, and it is also a CCF-A recommended conference. This year's ACL conference is the 62nd and has received more than 400 cutting-edge works in the field of NLP. Yesterday afternoon, the conference announced the best paper and other awards. This time, there are 7 Best Paper Awards (two unpublished), 1 Best Theme Paper Award, and 35 Outstanding Paper Awards. The conference also awarded 3 Resource Paper Awards (ResourceAward) and Social Impact Award (

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

This afternoon, Hongmeng Zhixing officially welcomed new brands and new cars. On August 6, Huawei held the Hongmeng Smart Xingxing S9 and Huawei full-scenario new product launch conference, bringing the panoramic smart flagship sedan Xiangjie S9, the new M7Pro and Huawei novaFlip, MatePad Pro 12.2 inches, the new MatePad Air, Huawei Bisheng With many new all-scenario smart products including the laser printer X1 series, FreeBuds6i, WATCHFIT3 and smart screen S5Pro, from smart travel, smart office to smart wear, Huawei continues to build a full-scenario smart ecosystem to bring consumers a smart experience of the Internet of Everything. Hongmeng Zhixing: In-depth empowerment to promote the upgrading of the smart car industry Huawei joins hands with Chinese automotive industry partners to provide

The first large UI model in China is released! Motiff's large model creates the best assistant for designers and optimizes UI design workflow

Aug 19, 2024 pm 04:48 PM

The first large UI model in China is released! Motiff's large model creates the best assistant for designers and optimizes UI design workflow

Aug 19, 2024 pm 04:48 PM

Artificial intelligence is developing faster than you might imagine. Since GPT-4 introduced multimodal technology into the public eye, multimodal large models have entered a stage of rapid development, gradually shifting from pure model research and development to exploration and application in vertical fields, and are deeply integrated with all walks of life. In the field of interface interaction, international technology giants such as Google and Apple have invested in the research and development of large multi-modal UI models, which is regarded as the only way forward for the mobile phone AI revolution. In this context, the first large-scale UI model in China was born. On August 17, at the IXDC2024 International Experience Design Conference, Motiff, a design tool in the AI era, launched its independently developed UI multi-modal model - Motiff Model. This is the world's first UI design tool