The first release since the boss left! Stability AI's official code model, Stable Code Instruct 3B

After the boss left, the first model is here!

Just today, Stability AI officially announced a new code model, Stable Code Instruct 3B.

Stability matters. The CEO's departure has caused some trouble for the company behind Stable Diffusion, and if something goes wrong with its investors, salaries may be at risk too.

Yet however turbulent things are outside the building, the lab stays calm: research goes on, discussions continue, models keep getting tuned, and the company has not dropped out of a single front in the large-model race.

Besides competing on every front, each line of research keeps making progress. Today's Stable Code Instruct 3B, for example, is an instruction-tuned version of the earlier Stable Code 3B.

Paper address: https://static1.squarespace.com/static/6213c340453c3f502425776e/t/6601c5713150412edcd56f8e/1711392114564/Stable_Code_TechReport_release.pdf

With natural language prompts, Stable Code Instruct 3B can handle a variety of tasks such as code generation, mathematics, and other software development-related queries.

Unbeatable in its class, and punching above its weight

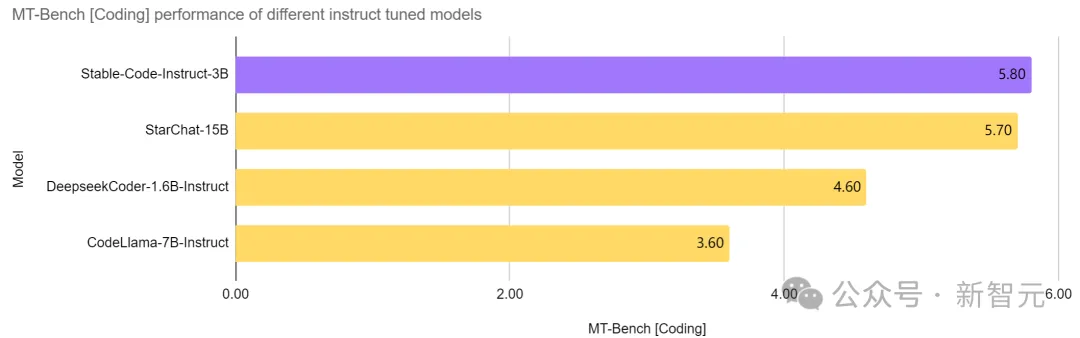

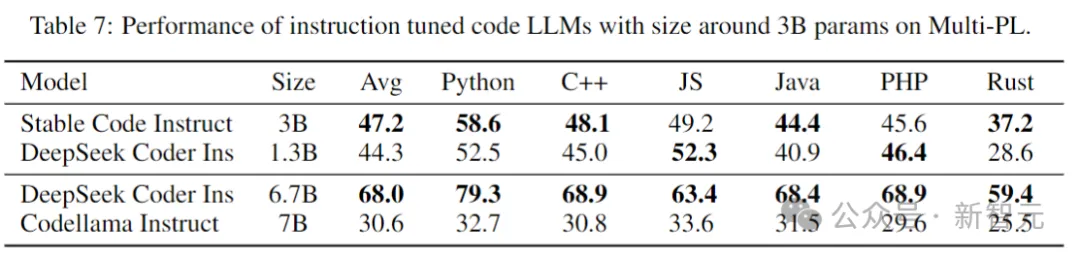

Among models with the same number of parameters, Stable Code Instruct 3B achieves the current SOTA. It even outperforms models such as CodeLlama 7B Instruct, which is more than twice its size, and its performance on software engineering tasks is comparable to that of StarChat 15B.

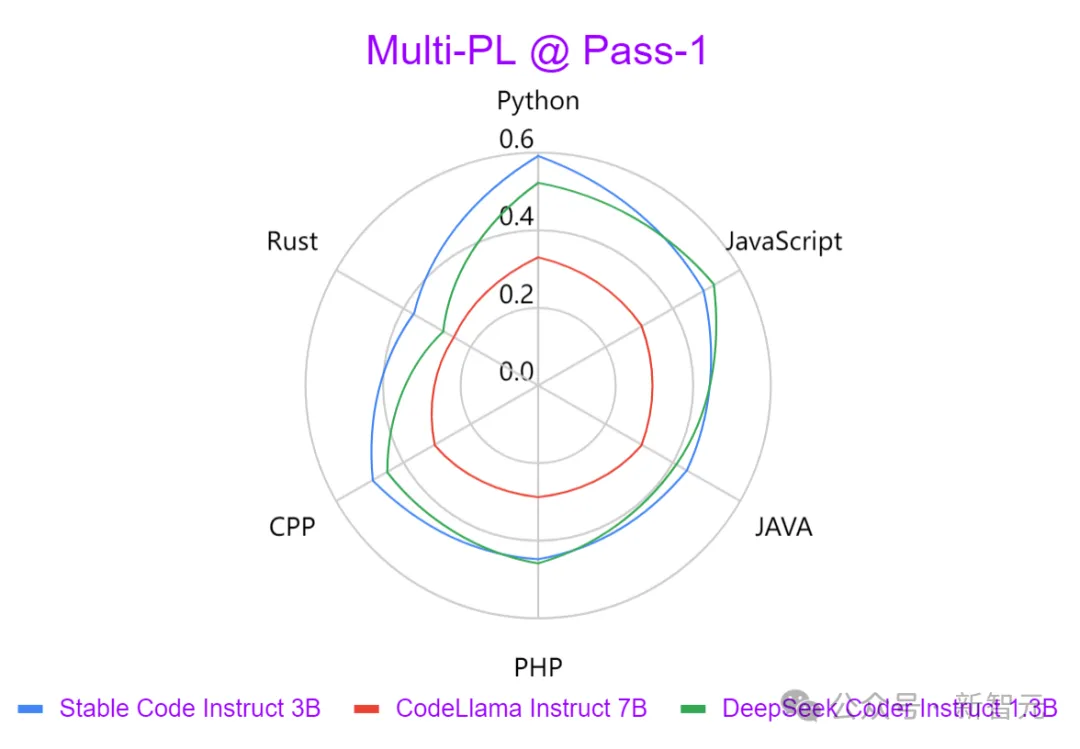

As the figure above shows, compared with leading models such as CodeLlama 7B Instruct and DeepSeek-Coder Instruct 1.3B, Stable Code Instruct 3B performs well across a range of coding tasks.

Testing shows that Stable Code Instruct 3B matches or exceeds its competitors in code completion accuracy, understanding of natural language instructions, and versatility across different programming languages.

Based on the results of the Stack Overflow 2023 Developer Survey, the training of Stable Code Instruct 3B focuses on programming languages such as Python, JavaScript, Java, C, C++ and Go.

The graph above uses the Multi-PL benchmark to compare the quality of the output generated by three models across programming languages. Stable Code Instruct 3B is significantly better than CodeLlama in every language, with fewer than half the parameters.

In addition to the popular programming languages mentioned above, Stable Code Instruct 3B was also trained on other languages (such as SQL, PHP and Rust), and it delivers strong test performance even in languages it was not trained on (such as Lua).

Stable Code Instruct 3B is proficient not only in code generation, but also in FIM (fill-in-the-middle) tasks, database queries, code translation, code explanation and code creation.

Through instruction tuning, the model can understand and act on nuanced instructions, which supports a wide range of coding tasks beyond simple code completion, such as mathematical understanding, logical reasoning, and handling complex technical material related to software development.

Model download: https://huggingface.co/stabilityai/stable-code-instruct-3b

Stable Code Instruct 3B is now available for commercial purposes through Stability AI membership. For non-commercial use, model weights and code can be downloaded on Hugging Face.

Technical details

Model architecture

Stable Code is built on Stable LM 3B and is a decoder-only Transformer with a design similar to LLaMA. The following table lists some key structural information:

The main differences from LLaMA include:

Positional embeddings: rotary position embeddings are applied to the first 25% of the head embedding dimensions to improve throughput.

Normalization: LayerNorm with learned bias terms is used instead of RMSNorm.

Bias terms: all bias terms in the feed-forward networks and multi-head self-attention layers are removed, except for the biases of the key, query and value projections.

The model uses the same BPE tokenizer as Stable LM 3B, with a vocabulary size of 50,257. It also adopts StarCoder's special tokens, including those that indicate the file name, repository stars, fill-in-the-middle (FIM), and so on.

For long context training, special tokens are used to indicate when two concatenated files belong to the same repository.
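The partial rotary embedding listed above can be illustrated with a minimal pure-Python sketch. It rotates only the first 25% of a single head vector and passes the remaining dimensions through unchanged. The function name `partial_rope`, the base of 10000, and the pairing of dimension `i` with dimension `i + half` are assumptions made for illustration; the article does not specify these details.

```python
import math

def partial_rope(vec, position, rotary_pct=0.25, base=10000.0):
    """Apply rotary position embedding to the first rotary_pct of a head vector.

    Hypothetical helper: the pairing convention and base are assumptions.
    """
    head_dim = len(vec)
    rot_dim = int(head_dim * rotary_pct)
    rot_dim -= rot_dim % 2  # rotations act on pairs, so keep the count even
    half = rot_dim // 2
    out = list(vec)  # dimensions beyond rot_dim are copied unchanged
    for i in range(half):
        theta = position * base ** (-2.0 * i / rot_dim)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x1, x2 = vec[i], vec[i + half]
        out[i] = x1 * cos_t - x2 * sin_t
        out[i + half] = x1 * sin_t + x2 * cos_t
    return out

head = [float(i + 1) for i in range(16)]  # toy 16-dimensional head vector
rotated = partial_rope(head, position=5)
print(rotated[:4])   # the rotated quarter
print(rotated[4:])   # the untouched remainder
```

Because only a quarter of each head is rotated, the remaining dimensions skip the sine and cosine arithmetic entirely, which is where the throughput benefit mentioned in the text would come from.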

Training process

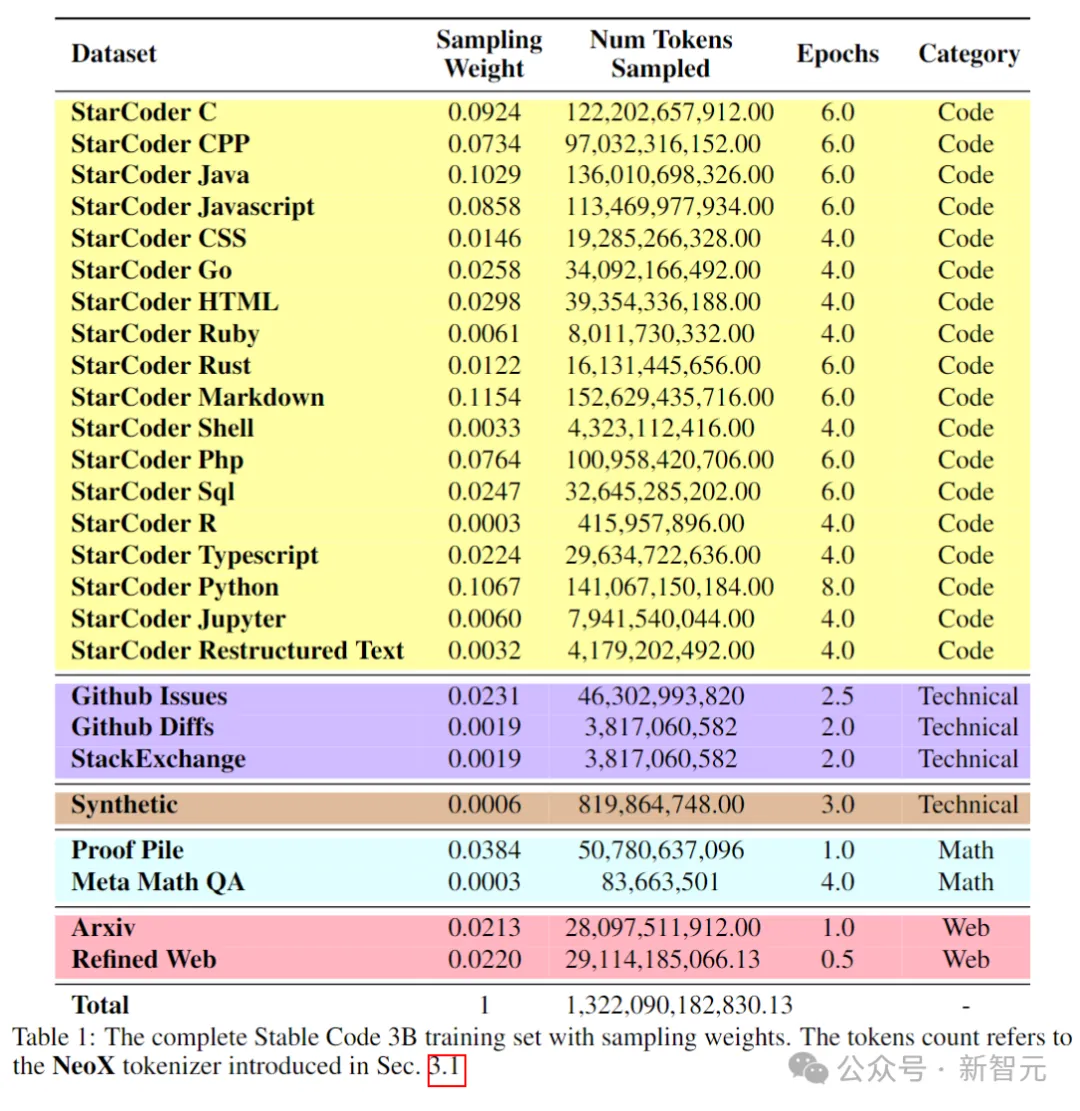

Training data

The pre-training dataset draws on a variety of publicly accessible large-scale data sources, including code repositories, technical documentation (such as readthedocs), mathematics-focused texts, and extensive web datasets.

The main goal of the initial pre-training phase is to learn rich internal representations to significantly improve the model's ability in mathematical understanding, logical reasoning, and processing complex technical texts related to software development.

Additionally, the training data includes a general text dataset to provide the model with broader language knowledge and context, ultimately enabling the model to handle a wider range of queries and tasks in a conversational manner.

The following table shows the data sources, categories and sampling weights of the pre-training corpus, where the ratio of code and natural language data is 80:20.
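To make the idea of sampling weights concrete, here is a minimal sketch that draws documents from two buckets at the 80:20 ratio mentioned above. The bucket names and the `sample_sources` helper are hypothetical; the actual corpus mixes many sources, each with its own weight.

```python
import random

# Hypothetical two-bucket mixture; only the 80:20 ratio comes from the text.
MIXTURE = {"code": 0.8, "natural_language": 0.2}

def sample_sources(n, mixture=MIXTURE, seed=0):
    """Draw n source labels, each chosen with probability equal to its weight."""
    rng = random.Random(seed)
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=n)

draws = sample_sources(10_000)
code_share = draws.count("code") / len(draws)
print(f"share of code documents: {code_share:.3f}")
```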

In addition, the researchers introduced a small synthetic dataset, synthesized from the seed prompts of the CodeAlpaca dataset, which contains 174,000 prompts.

They then followed the WizardLM method, gradually increasing the complexity of the given seed prompts, and obtained an additional 100,000 prompts.

The authors believe that introducing this synthetic data early in the pre-training stage helps the model respond better to natural language text.

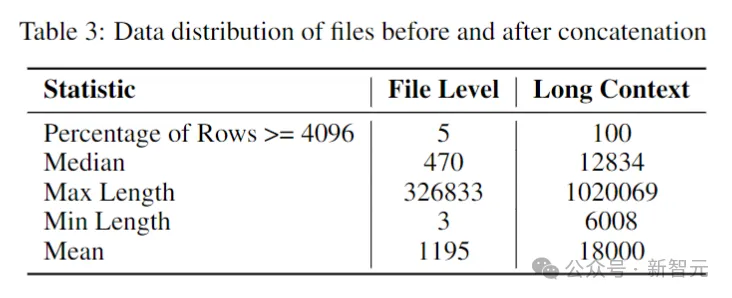

Long context dataset

Since multiple files in a repository often depend on each other, context length is important for code models.

The researchers estimated the median and mean number of tokens in a software repository to be 12k and 18k respectively, so 16,384 was chosen as the context length.

The next step was to create a long context dataset. The researchers took files written in popular languages from each repository and concatenated them, inserting a special token between files to keep them separate while preserving the flow of content.

To avoid any bias that might arise from a fixed file order, the authors employed a randomization strategy: for each repository, two different orderings of the concatenated files are generated.
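The construction described above can be sketched roughly as follows. The separator string `<FILE_SEP>` and the helper name are hypothetical placeholders, since the article only says that a special token is inserted between files; the sketch also works on raw strings rather than token IDs.

```python
import math
import random

# Hypothetical placeholder; the article does not name the actual special token.
FILE_SEP = "<FILE_SEP>"

def build_long_context_samples(repo_files, num_orderings=2, seed=0):
    """Concatenate a repository's files in several distinct random orders.

    repo_files maps a file path to that file's content.
    """
    rng = random.Random(seed)
    paths = sorted(repo_files)
    # A tiny repository may not have enough distinct permutations.
    target = min(num_orderings, math.factorial(len(paths)))
    seen, samples = set(), []
    while len(samples) < target:
        order = tuple(rng.sample(paths, len(paths)))
        if order in seen:
            continue  # skip an ordering we already produced
        seen.add(order)
        samples.append(FILE_SEP.join(repo_files[p] for p in order))
    return samples

repo = {"utils.py": "def helper(): ...", "main.py": "import utils", "README.md": "# demo"}
for sample in build_long_context_samples(repo):
    print(sample)
```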

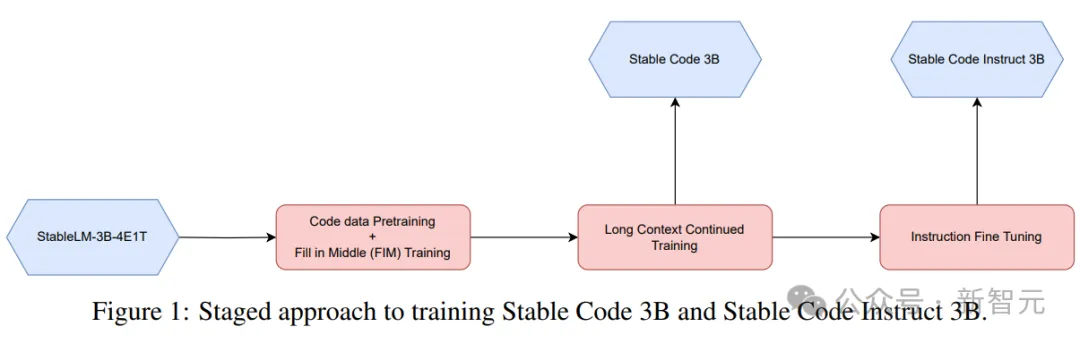

Phase-based training

Stable Code was trained on 32 Amazon P4d instances with a total of 256 NVIDIA A100 (40GB HBM2) GPUs, using ZeRO for distributed optimization.

A phased training method is used here, as shown in the picture above.

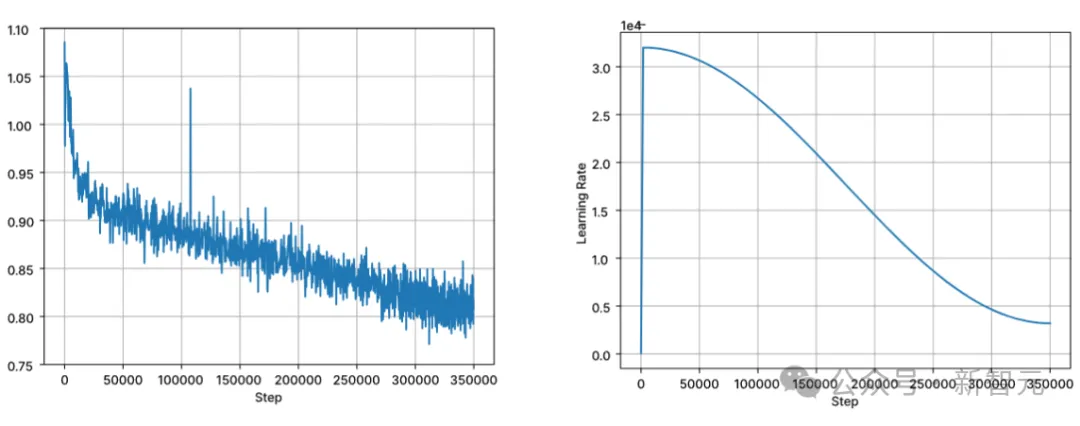

Training follows standard autoregressive sequence modeling to predict the next token. The model is initialized from the Stable LM 3B checkpoint. The first stage of training uses a context length of 4096, and is followed by continued pre-training.

Training is performed with BFloat16 mixed precision, and FP32 is used for all-reduce. The AdamW optimizer settings are: β1=0.9, β2=0.95, ε=1e−6, λ (weight decay)=0.1. The learning rate starts at 3.2e-4 and follows a cosine decay down to a minimum of 3.2e-5.
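As a rough illustration of the schedule described above, the following sketch computes a cosine decay between the two stated learning rates. The total step count is a hypothetical value, and any warmup phase is omitted because the article does not mention one.

```python
import math

PEAK_LR = 3.2e-4  # starting learning rate, from the text
MIN_LR = 3.2e-5   # minimum learning rate, from the text

def cosine_lr(step, total_steps, peak_lr=PEAK_LR, min_lr=MIN_LR):
    """Cosine decay from peak_lr at step 0 down to min_lr at total_steps."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

TOTAL_STEPS = 1000  # hypothetical; the real step count is not given in the text
for step in (0, 250, 500, 750, 1000):
    print(step, f"{cosine_lr(step, TOTAL_STEPS):.3e}")
```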

One of the core assumptions of natural language model training is a causal order from left to right, but for code this assumption does not always hold (for example, function calls and function declarations can appear in any order for many functions).

To address this, the researchers used FIM (fill-in-the-middle). Each document is randomly split into three segments (prefix, middle and suffix), and the middle segment is then moved to the end of the document. After this rearrangement, the same autoregressive training process is followed.
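The FIM rearrangement described above can be sketched at the character level as follows. The sentinel strings follow the StarCoder naming convention, but treating them as the exact tokens used by Stable Code is an assumption, and a real pipeline would operate on token IDs and apply the transform to only a fraction of documents.

```python
import random

# Sentinel strings in StarCoder style; their exact form here is an assumption.
FIM_PREFIX, FIM_SUFFIX, FIM_MIDDLE = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"

def fim_transform(document, rng):
    """Split a document at two random points and move the middle to the end."""
    i, j = sorted(rng.randrange(len(document) + 1) for _ in range(2))
    prefix, middle, suffix = document[:i], document[i:j], document[j:]
    # Order after rearrangement: prefix, suffix, then the middle to be predicted.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"

rng = random.Random(0)
source = "def add(a, b):\n    return a + b\n"
print(fim_transform(source, rng))
```

With this layout, ordinary next-token prediction on the rearranged string teaches the model to generate the middle segment while conditioning on both the code before it and the code after it.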

Instruction fine-tuning

After pre-training, the authors further improve the model's conversational skills through a fine-tuning stage that includes supervised fine-tuning (SFT) and Direct Preference Optimization (DPO).

SFT is performed first, using datasets publicly available on Hugging Face: OpenHermes, Code Feedback and CodeAlpaca.

After performing exact match deduplication, the three datasets provide a total of approximately 500,000 training samples.
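Exact match deduplication across several datasets can be sketched as below. The helper name and the toy strings are hypothetical, and the sketch compares raw text only, so two samples that differ by a single character are both kept.

```python
def merge_and_dedup(*datasets):
    """Merge datasets in order, dropping any sample identical to an earlier one."""
    seen = set()
    merged = []
    for dataset in datasets:
        for text in dataset:
            if text not in seen:
                seen.add(text)
                merged.append(text)
    return merged

# Toy stand-ins for the three SFT datasets named in the text.
openhermes = ["Write a sort function.", "Explain recursion."]
code_feedback = ["Explain recursion.", "Fix this bug."]
code_alpaca = ["Write a sort function.", "Reverse a string."]
print(merge_and_dedup(openhermes, code_feedback, code_alpaca))
```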

Training is controlled with a cosine learning rate scheduler, the global batch size is set to 512, and inputs are packed into sequences no longer than 4096 tokens.
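Packing inputs into bounded sequences can be illustrated with a simple greedy sketch. The greedy strategy and the truncation of over-long samples are assumptions made for illustration; the article does not describe the packing algorithm actually used.

```python
MAX_LEN = 4096  # maximum packed sequence length, from the text

def pack_sequences(samples, max_len=MAX_LEN):
    """Greedily concatenate tokenized samples into packs of at most max_len tokens.

    Samples longer than max_len are truncated, which is an assumption.
    """
    packs, current = [], []
    for tokens in samples:
        tokens = tokens[:max_len]
        if current and len(current) + len(tokens) > max_len:
            packs.append(current)  # the current pack is full, so start a new one
            current = []
        current = current + tokens
    if current:
        packs.append(current)
    return packs

# Toy token lists of different lengths.
toy = [[1] * 3000, [2] * 2000, [3] * 1000, [4] * 5000]
print([len(p) for p in pack_sequences(toy)])
```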

After SFT, the DPO phase begins, using data from UltraFeedback to curate a dataset of approximately 7,000 samples. In addition, to improve the safety of the model, the authors also included the Helpful and Harmless RLHF dataset.

The researchers adopted RMSProp as the optimization algorithm and raised the learning rate to a peak of 5e-7 during the initial phase of DPO training.
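For readers unfamiliar with DPO, the following sketch computes the standard DPO loss for a single preference pair from summed sequence log-probabilities. The value `beta=0.1` and the example log-probabilities are hypothetical; the article does not report the settings used for Stable Code Instruct.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair; beta is an assumed value."""
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) written in a numerically stable form
    return max(-logits, 0.0) + math.log1p(math.exp(-abs(logits)))

# When the policy equals the reference model, the loss is log(2).
print(dpo_loss(-5.0, -7.0, -5.0, -7.0))
# Raising the chosen answer's likelihood relative to the reference lowers it.
print(dpo_loss(-4.0, -8.0, -5.0, -7.0))
```

The loss only depends on how far the policy has moved from the reference model on the chosen answer versus the rejected one, which is why no separate reward model is needed.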

Performance Test

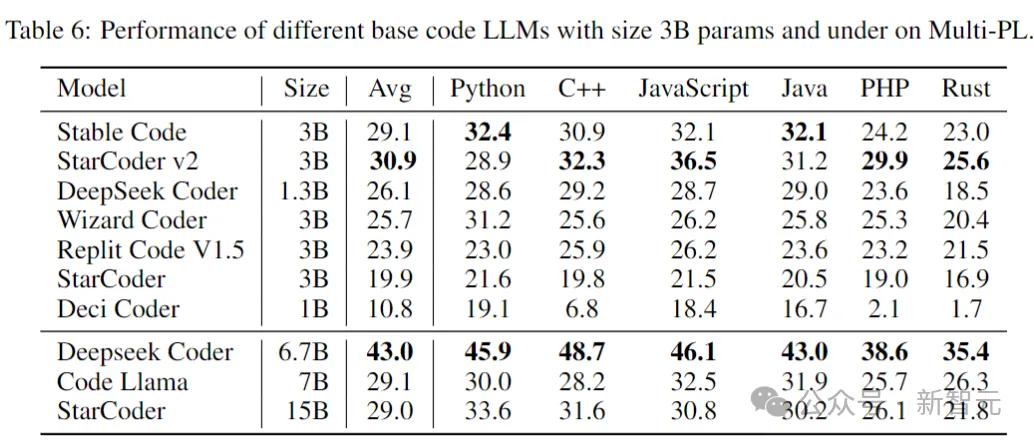

The following compares the performance of the model on the code completion task, using the Multi-PL benchmark to evaluate the model.

Stable Code Base

The following table shows the Multi-PL performance of different code models with 3B parameters or fewer.

Although Stable Code has fewer than 40% and 20% of the parameters of Code Llama and StarCoder 15B, respectively, its average performance across programming languages is on par with theirs.

Stable Code Instruct

The following table evaluates the instruction-tuned versions of several models on the Multi-PL benchmark.

SQL Performance

An important application of code language models is database query tasks. In this area, the performance of Stable Code Instruct is compared with other popular instruction-tuned models and with models trained specifically for SQL. The benchmark used here was created by Defog AI.

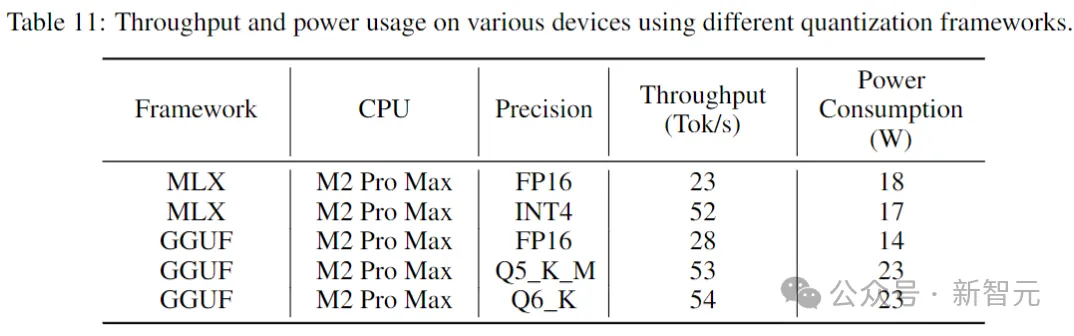

Inference performance

The table below gives the throughput and power consumption when running Stable Code on consumer-grade devices, along with the corresponding system environments.

The results show that throughput nearly doubles when lower precision is used. However, it is important to note that lower-precision quantization may cause some (potentially large) degradation in model performance.

Reference:https://www.php.cn/link/8cb3522da182ff9ea5925bbd8975b203
