Less than half a month after Grok-1 was officially open-sourced, the upgraded Grok-1.5 has been released.

Just now, Musk's xAI officially announced Grok-1.5, which has a 128K-token context window and greatly improved reasoning capabilities.

It will be available soon.

Eleven days ago, xAI open-sourced the weights and architecture of the Grok-1 model, showing the progress xAI had made up to last November.

Grok-1 has 314 billion parameters, more than 4 times the size of Llama 2 (70B), and uses a mixture-of-experts (MoE) architecture in which 2 of its 8 experts are active for each token.
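The article does not describe Grok-1's router, so what follows is only a minimal, hypothetical sketch of generic top-2 gating, the idea behind "2 of 8 experts active." A router scores every expert for a token, only the two best-scoring experts actually run, and their outputs are mixed using gate weights renormalized over those two. The names `top2_moe` and `softmax` and the toy experts are illustrative assumptions, not xAI's code.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top2_moe(token, gate_weights, experts, k=2):
    """Hypothetical top-k MoE layer for a single token vector."""
    # Router logits: one score per expert (dot product with that expert's gate row)
    logits = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    # Keep only the k highest-scoring experts
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(token)
    for g, i in zip(gates, top):
        y = experts[i](token)  # only the selected experts are evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top

# Usage: 8 toy experts, each scaling its input by its own index
n_experts = 8
experts = [lambda x, s=i: [s * v for v in x] for i in range(n_experts)]
gate_weights = [[float(i), 0.0, 0.0, 0.0] for i in range(n_experts)]
out, chosen = top2_moe([1.0, 0.0, 0.0, 0.0], gate_weights, experts)
print(chosen, out)
```

Because only 2 of the 8 expert computations run per token, the compute per token is far smaller than the total parameter count suggests; production MoE layers add details such as load-balancing losses that this sketch omits.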

xAI said that since then, the team has improved the reasoning and problem-solving capabilities of its latest model, Grok-1.5.

The former head of developer relations at OpenAI commented that the timing of xAI's major releases shows the team's pace and sense of urgency, calling it "exciting."

According to the official announcement, Grok-1.5 has improved reasoning capabilities and a context length of 128K tokens.

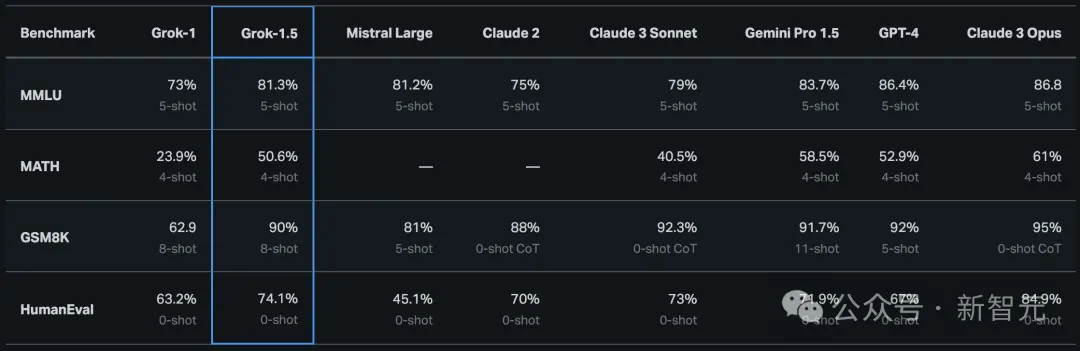

One of the most significant improvements in Grok-1.5 is its performance on coding and math-related tasks.

In testing, Grok-1.5 scored 50.6% on the MATH benchmark and 90% on the GSM8K benchmark. Together, these two math benchmarks cover a wide range of problems, from grade-school questions to high-school competition problems.

Grok-1.5 also scored 74.1% on HumanEval, a benchmark that evaluates code generation and problem-solving abilities.

As the figure below shows, Grok-1.5's math capabilities improved greatly over Grok-1: from 62.9% to 90% on GSM8K, and from 23.9% to 50.6% on MATH.
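The size of these gains can be checked with simple arithmetic on the scores quoted above: both benchmarks improve by roughly 27 percentage points, and the MATH score more than doubles. The helper name `gains` is illustrative only.

```python
# Benchmark scores (%) as quoted in the article
grok1 = {"GSM8K": 62.9, "MATH": 23.9}
grok15 = {"GSM8K": 90.0, "MATH": 50.6}

def gains(old, new):
    """Return {benchmark: (absolute gain in points, ratio new/old)}."""
    return {b: (round(new[b] - old[b], 1), round(new[b] / old[b], 2)) for b in old}

print(gains(grok1, grok15))
```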

## 128K long-context understanding: a 16x increase

Another new feature of Grok-1.5 is its ability to process up to 128K tokens within its context window. This is 16 times Grok's previous context length, allowing it to draw on information from much longer documents.

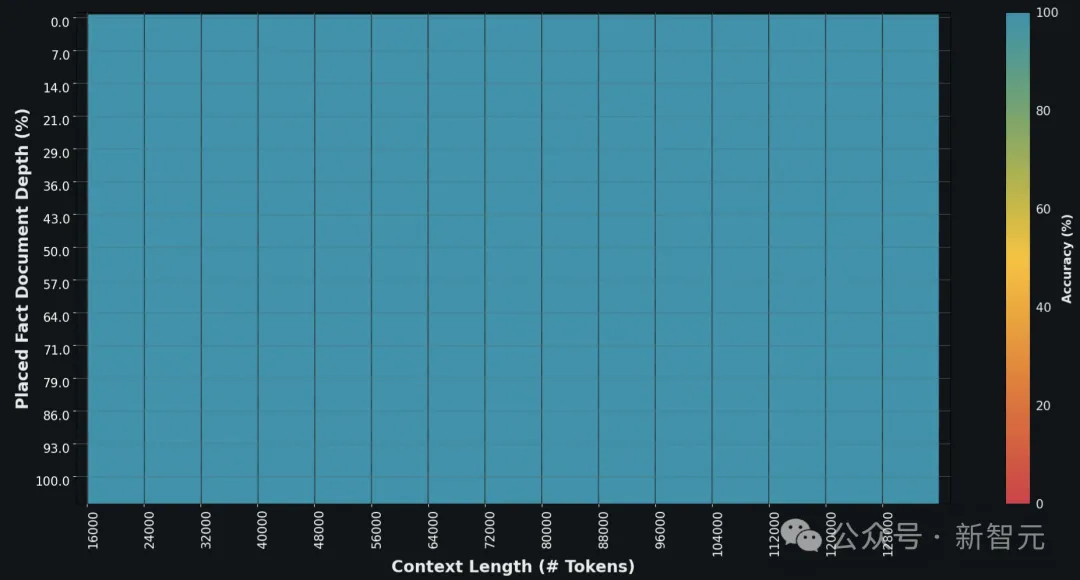

In the Needle In A Haystack (NIAH) evaluation, Grok-1.5 demonstrated strong retrieval capabilities, finding text embedded in contexts up to 128K tokens long with perfect retrieval results.
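xAI's evaluation harness is not described in the article; the following is a minimal, hypothetical sketch of how a NIAH-style test is commonly built. A short "needle" fact is inserted at several relative depths in long filler text, the model is asked about it, and the score is the fraction of depths at which the answer is recovered. `build_haystack`, `niah_score`, and `toy_model` are illustrative names; `toy_model` is a plain string search standing in for a real LLM call, and the haystack length here is counted in sentences rather than tokens.

```python
def build_haystack(filler_sentences, needle, depth):
    """Insert `needle` at relative position `depth` (0.0 = start, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    pos = round(depth * len(filler_sentences))
    return " ".join(filler_sentences[:pos] + [needle] + filler_sentences[pos:])

def niah_score(model, filler_sentences, needle, question, answer, depths):
    """Fraction of insertion depths at which the model's reply contains `answer`."""
    hits = 0
    for d in depths:
        context = build_haystack(filler_sentences, needle, d)
        reply = model(context + "\n\n" + question)
        hits += answer.lower() in reply.lower()
    return hits / len(depths)

def toy_model(prompt):
    # Stand-in for a real model: return the first sentence mentioning the key phrase
    for sentence in prompt.split(". "):
        if "magic number" in sentence:
            return sentence
    return ""

filler = ["The sky is blue."] * 200
needle = "The magic number is 42."
depths = [0.0, 0.25, 0.5, 0.75, 1.0]
print(niah_score(toy_model, filler, needle, "What is the magic number?", "42", depths))
```

A real run would also sweep the total context length (for example up to 128K tokens) and call an actual model, producing the depth-by-length grid that NIAH results are usually reported as.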

## Grok-1.5 infrastructure

Grok-1.5 is built on a custom distributed training framework based on JAX, Rust, and Kubernetes.

This training stack lets the xAI team prototype ideas and train new architectures at scale with minimal effort.

A major challenge in training LLMs on large compute clusters is maximizing the reliability and uptime of training jobs.

xAI's custom training orchestrator automatically detects problematic nodes and ejects them from training jobs.

The team also optimized checkpointing, data loading, and training-job restarts to minimize downtime when a failure occurs.
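xAI has not published its orchestrator, so the following is only a hypothetical, single-process simulation of the general pattern described above: save a checkpoint periodically, and when a node fails, evict it and resume from the last checkpoint instead of starting over. `NodeFailure`, `train`, and `flaky_step` are illustrative names, and the integer `state` stands in for real model and optimizer state.

```python
class NodeFailure(Exception):
    """Raised when a (simulated) node fails mid-step."""
    def __init__(self, node):
        super().__init__(f"node {node} failed")
        self.node = node

def train(total_steps, nodes, run_step, checkpoint_every=10):
    """Run `total_steps` steps with periodic checkpoints; on a node failure,
    drop the failed node and roll back to the last checkpoint."""
    nodes = list(nodes)
    checkpoint = {"step": 0, "state": 0}  # last saved progress (toy state is an int)
    state, step = checkpoint["state"], checkpoint["step"]
    restarts = 0
    while step < total_steps:
        try:
            state = run_step(step, state, nodes)
            step += 1
            if step % checkpoint_every == 0:
                checkpoint = {"step": step, "state": state}
        except NodeFailure as err:
            # Evict the faulty node, then restore from the last checkpoint
            nodes.remove(err.node)
            if not nodes:
                raise RuntimeError("no healthy nodes left") from err
            state, step = checkpoint["state"], checkpoint["step"]
            restarts += 1
    return state, nodes, restarts

def flaky_step(step, state, nodes):
    # Simulate node "n2" crashing at step 15 while it is still part of the job
    if step == 15 and "n2" in nodes:
        raise NodeFailure("n2")
    return state + 1  # toy "training" update

final_state, healthy, restarts = train(30, ["n0", "n1", "n2", "n3"], flaky_step)
print(final_state, healthy, restarts)
```

The trade-off this illustrates is checkpoint frequency: checkpointing more often loses less work per failure but spends more time writing state, which is why fast checkpointing and restarts matter at cluster scale.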

xAI stated that Grok-1.5 will soon be available to early testers to help improve the model.

The blog also previewed several new features that will roll out with Grok-1.5 over the coming days.

Finally, as usual, xAI included a call for new hires.

The above is the detailed content of After 11 days of open source, Musk releases Grok-1.5 again! 128K code defeats GPT-4. For more information, please follow other related articles on the PHP Chinese website!
