Google is ecstatic: JAX performance surpasses PyTorch and TensorFlow! It may become the fastest choice for GPU training and inference

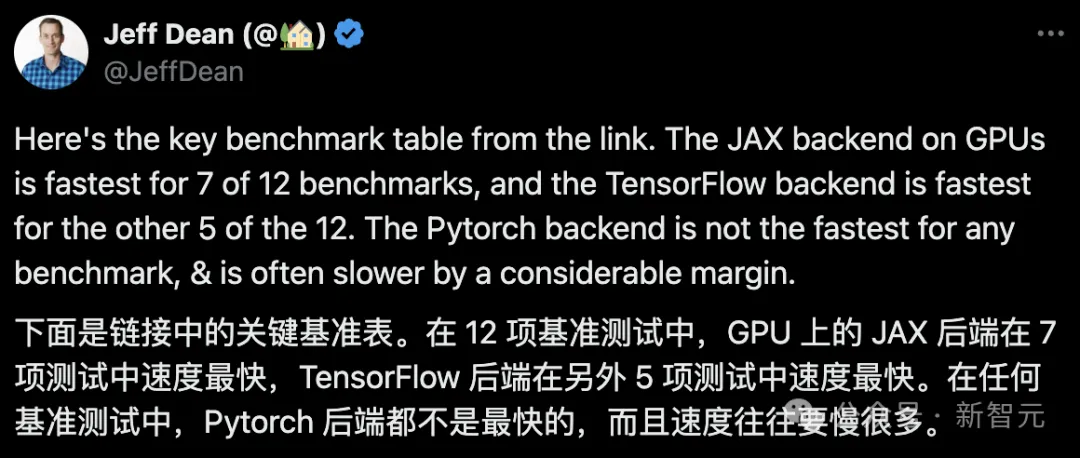

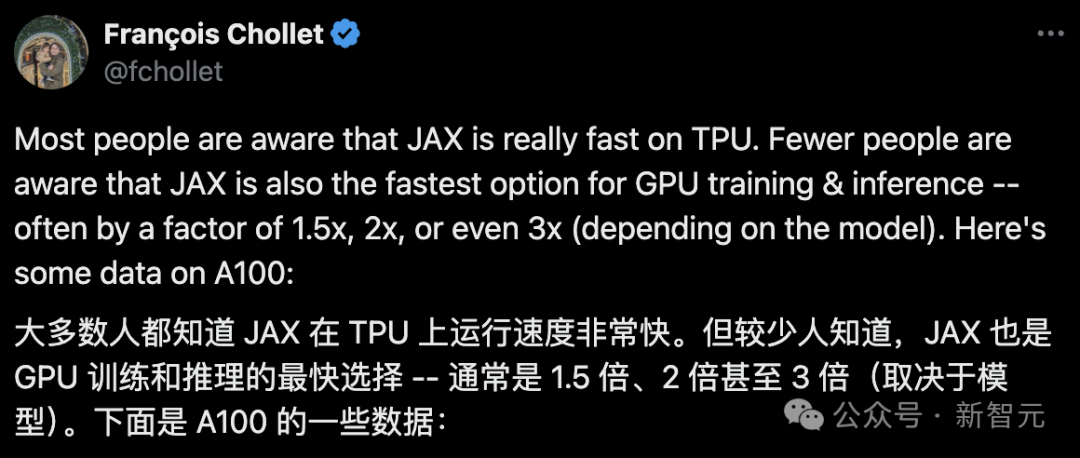

In recent benchmark tests, JAX, the framework promoted by Google, outperformed PyTorch and TensorFlow, ranking first on 7 metrics.

Notably, the tests were not even run on TPUs, the hardware on which JAX performs best.

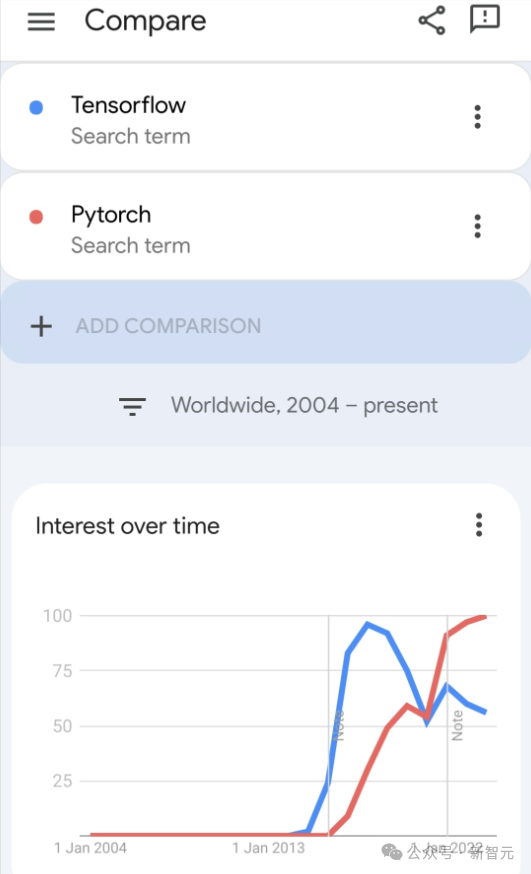

For now, PyTorch remains more popular than TensorFlow among developers.

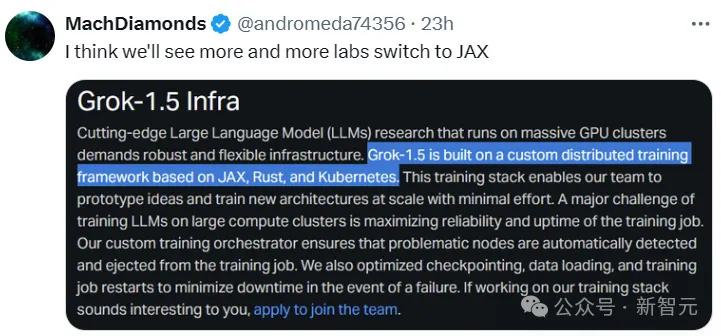

In the future, however, more large models may well be trained and run on JAX.

Model

Recently, the Keras team benchmarked Keras 3 on its three backends (TensorFlow, JAX, and PyTorch) against native PyTorch implementations and against Keras 2 with TensorFlow.

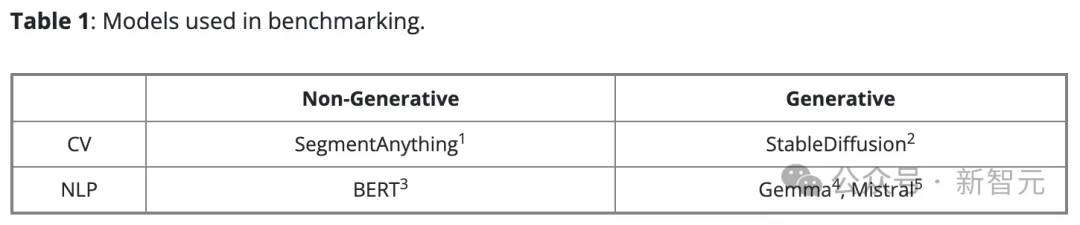

First, they selected a set of mainstream computer vision and natural language processing models covering both generative and non-generative AI tasks.

The Keras versions of the models were built from the existing implementations in KerasCV and KerasNLP. For the native PyTorch versions, they chose the most popular implementations available online:

- BERT, Gemma, Mistral from HuggingFace Transformers

- StableDiffusion from HuggingFace Diffusers

- SegmentAnything from Meta

They call this set of models "Native PyTorch" to distinguish it from the Keras 3 version that uses the PyTorch backend.

They used synthetic data for all benchmarks, bfloat16 precision for all LLM training and inference, and LoRA fine-tuning for all LLM training.
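
The text names LoRA without explaining why it keeps LLM fine-tuning cheap. As a rough sketch: LoRA freezes a pretrained weight matrix W of shape d × k and trains only a low-rank update B·A, where B is d × r and A is r × k, so the trainable parameter count drops from d·k to r·(d + k). The layer size (4096 × 4096) and rank (r = 8) below are illustrative assumptions, not settings reported by the Keras team.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update B @ A (B: d x r, A: r x k)."""
    return r * (d + k)


if __name__ == "__main__":
    # Illustrative sizes only; not taken from the benchmark configuration.
    d, k, r = 4096, 4096, 8
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full fine-tuning: {full:,} trainable parameters")
    print(f"LoRA (r={r}): {lora:,} trainable parameters ({lora / full:.2%})")
```

For this assumed layer, LoRA trains 65,536 parameters instead of 16,777,216, roughly 0.39% of the full matrix.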

Following the PyTorch team's recommendation, they applied `torch.compile(model, mode="reduce-overhead")` to the native PyTorch implementations, except for Gemma and Mistral training, where it was incompatible.

To measure out-of-the-box performance, they used high-level APIs (such as HuggingFace's `Trainer()`, standard PyTorch training loops, and Keras `model.fit()`) with as little configuration as possible.

Hardware configuration

All benchmarks were run on Google Cloud Compute Engine with one NVIDIA A100 GPU (40 GB of GPU memory), 12 virtual CPUs, and 85 GB of host memory.

Benchmark Results

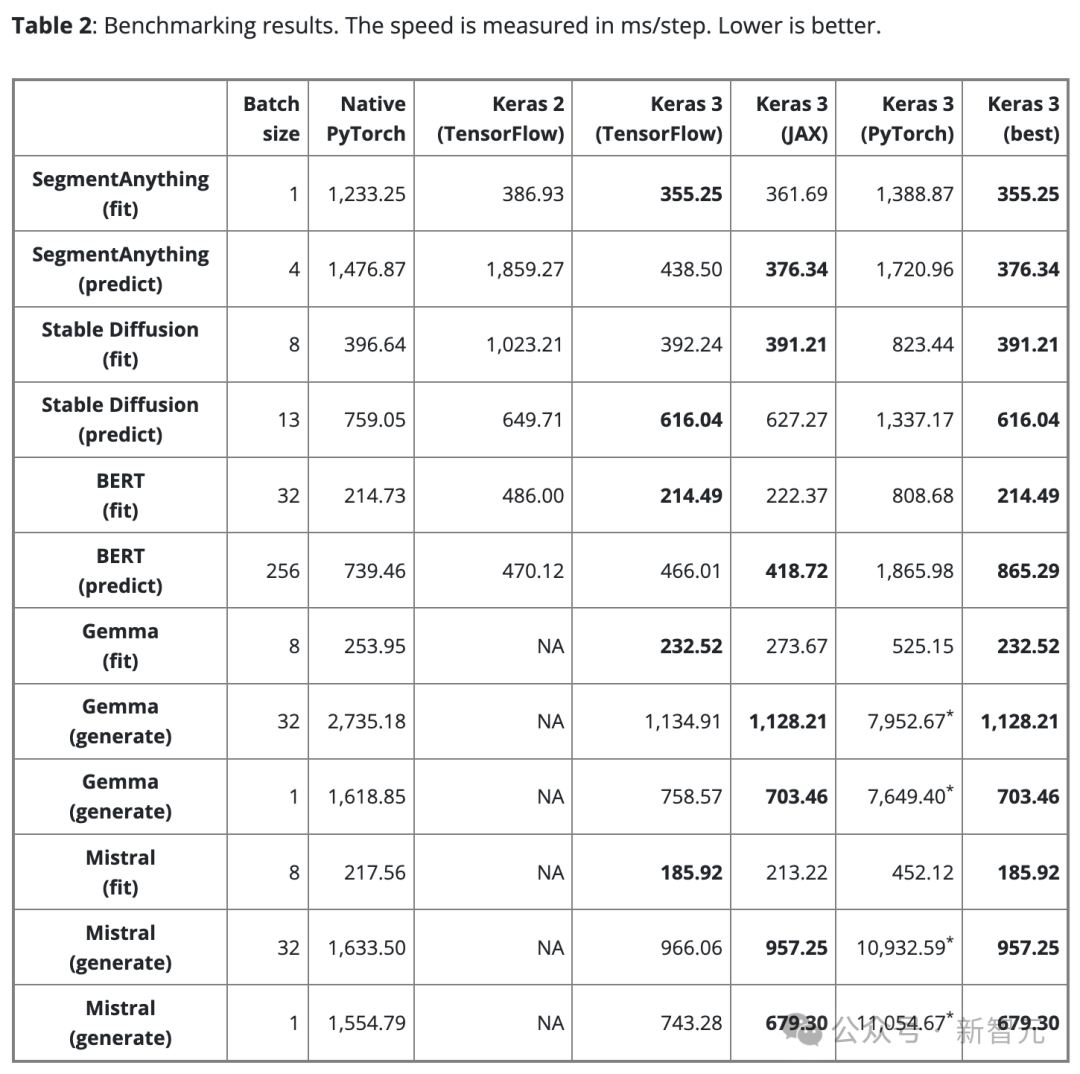

Table 2 shows the benchmark results in steps/ms. Each step involves training or prediction on a single batch of data.

Each result is averaged over 100 steps, excluding the first step, which includes model creation and compilation and therefore takes extra time.
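
The measurement protocol described here can be sketched in plain Python as below. `benchmark_step` and `dummy_step` are hypothetical names, and the sketch assumes one warm-up call followed by 100 timed calls (the text does not say whether the excluded step counts toward the 100). A real GPU benchmark would also have to wait for asynchronous device work to finish before reading the clock, which this CPU-only sketch omits.

```python
import time
from typing import Callable


def benchmark_step(step_fn: Callable[[], object], num_steps: int = 100) -> tuple[float, float]:
    """Time step_fn and return (ms_per_step, steps_per_ms).

    One extra warm-up call is made first and excluded from the average,
    mirroring how the benchmark discards the step that includes model
    creation and compilation.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")

    step_fn()  # warm-up: model creation / compilation would happen here

    start = time.perf_counter()
    for _ in range(num_steps):
        step_fn()
    elapsed_ms = (time.perf_counter() - start) * 1000.0

    ms_per_step = elapsed_ms / num_steps
    steps_per_ms = 1.0 / ms_per_step if ms_per_step > 0 else float("inf")
    return ms_per_step, steps_per_ms


if __name__ == "__main__":
    # Hypothetical stand-in for training or predicting on one batch.
    def dummy_step() -> int:
        return sum(i * i for i in range(10_000))

    ms, steps_per_ms = benchmark_step(dummy_step, num_steps=100)
    print(f"{ms:.3f} ms/step ({steps_per_ms:.4f} steps/ms)")
```

Returning both ms/step and its reciprocal keeps the sketch usable whichever unit a results table reports.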

To ensure a fair comparison, the same batch size is used for the same model and task (whether training or inference).

Across different models and tasks, however, batch sizes were adjusted to suit each model's scale and architecture: a batch that is too large causes out-of-memory errors, while one that is too small leaves the GPU underutilized.

A batch size that is too small can also make PyTorch appear slower because it increases Python overhead.

For the large language models (Gemma and Mistral), the same batch size was also used when testing because they are the same type of model with a similar number of parameters (7B).

Because users often need to generate text one request at a time, text generation was also benchmarked with a batch size of 1.

Key findings

Finding 1

There is no single "best" backend.

Each of Keras's three backends has its own strengths, and, importantly, no single backend wins on performance across the board.

Which backend is fastest often depends on the model's architecture.

This highlights the value of trying different frameworks when chasing peak performance. Keras 3 makes it easy to switch backends and find the best fit for a given model.
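
Keras 3 selects its backend from the `KERAS_BACKEND` environment variable, which has to be set before `keras` is first imported. The sketch below pairs that with a hypothetical helper, `fastest_backend`, that picks the backend with the lowest measured ms/step; the timings in the usage lines are made-up placeholders, not benchmark results.

```python
import os

# The three backends compared in the benchmark.
# Keras 3 reads KERAS_BACKEND when it is first imported.
SUPPORTED_BACKENDS = ("jax", "tensorflow", "torch")


def fastest_backend(ms_per_step: dict[str, float]) -> str:
    """Hypothetical helper: return the backend with the lowest ms/step."""
    if not ms_per_step:
        raise ValueError("no measurements given")
    unknown = set(ms_per_step) - set(SUPPORTED_BACKENDS)
    if unknown:
        raise ValueError(f"unknown backends: {sorted(unknown)}")
    return min(ms_per_step, key=ms_per_step.__getitem__)


def use_backend(name: str) -> None:
    """Select the Keras 3 backend; must run before `import keras`."""
    if name not in SUPPORTED_BACKENDS:
        raise ValueError(f"unsupported backend: {name!r}")
    os.environ["KERAS_BACKEND"] = name


if __name__ == "__main__":
    # Placeholder timings for illustration only (not measured results).
    timings = {"jax": 10.0, "tensorflow": 12.0, "torch": 15.0}
    use_backend(fastest_backend(timings))
    print(os.environ["KERAS_BACKEND"])  # jax
    # import keras  # Keras imported after this point would use the chosen backend
```

Because the variable is read when Keras is first imported, the simplest way to compare backends is to run the same script once per backend, each in its own process.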

Finding 2

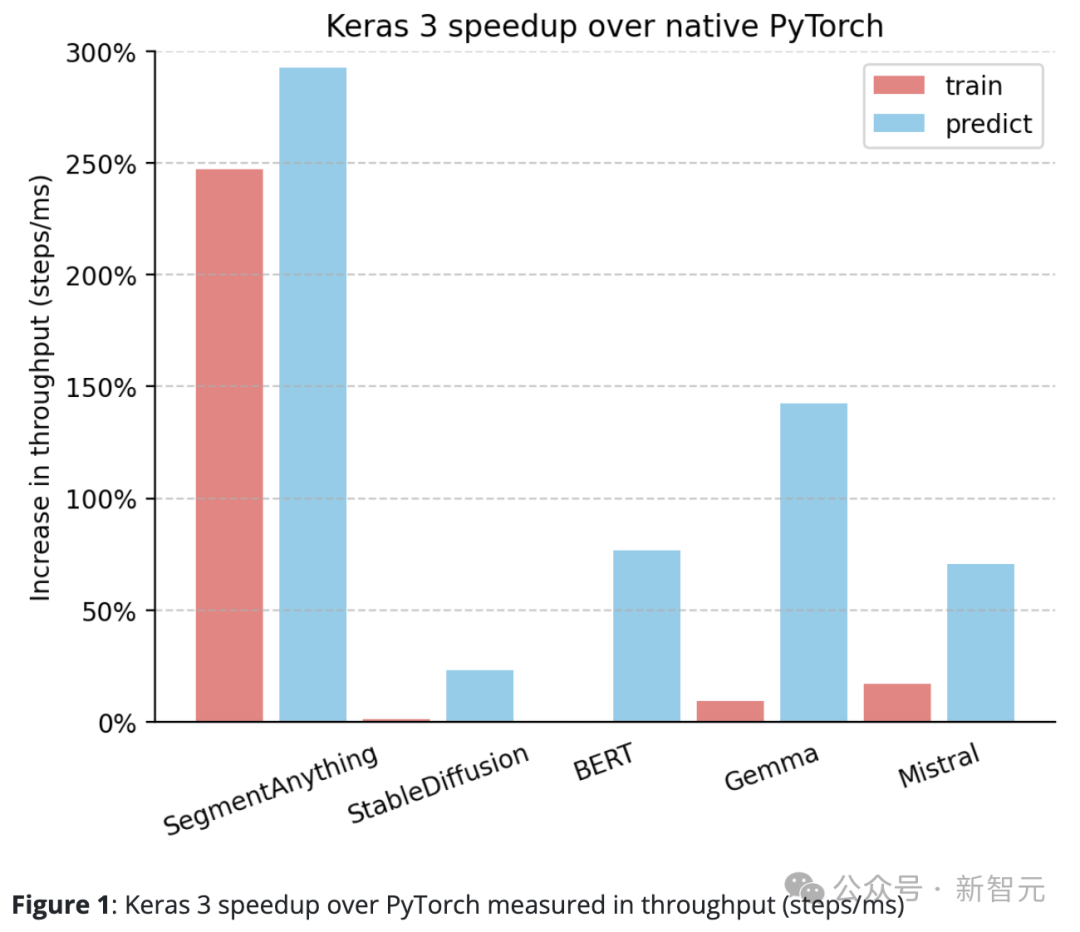

Keras 3 generally outperforms the standard PyTorch implementations.

Compared with native PyTorch, Keras 3 delivers significantly higher throughput (steps/ms).

In 5 of the 10 benchmarked tasks, the speedup exceeded 50%, peaking at 290%.

A speedup of 100% means Keras 3 is twice as fast as native PyTorch; 0% means the two perform the same.
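
The percentages in this finding follow a simple convention, sketched below under the assumption that speedup is computed from throughput: divide Keras 3's steps/ms by native PyTorch's steps/ms and subtract one. The function names are hypothetical.

```python
def speedup_percent(keras_steps_per_ms: float, torch_steps_per_ms: float) -> float:
    """Throughput speedup of Keras 3 over native PyTorch, in percent.

    100% means Keras 3 completes twice as many steps per ms; 0% means parity.
    """
    if torch_steps_per_ms <= 0:
        raise ValueError("baseline throughput must be positive")
    return (keras_steps_per_ms / torch_steps_per_ms - 1.0) * 100.0


def speedup_from_ms_per_step(keras_ms: float, torch_ms: float) -> float:
    """Same quantity computed from per-step latencies (ms/step)."""
    if keras_ms <= 0 or torch_ms <= 0:
        raise ValueError("latencies must be positive")
    return speedup_percent(1.0 / keras_ms, 1.0 / torch_ms)
```

Under this convention, the 290% peak corresponds to Keras 3 completing about 3.9 times as many steps per millisecond as native PyTorch, and a 50% speedup to 1.5 times as many.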

Finding 3

Keras 3 delivers best-in-class performance “out of the box”.

In other words, none of the Keras models in the benchmark received any special optimization. With native PyTorch implementations, by contrast, users usually have to do more performance tuning themselves.

Beyond the results above, the team also noticed during testing that upgrading HuggingFace Diffusers from version 0.25.0 to 0.3.0 improved StableDiffusion inference performance by more than 100%.

Similarly, upgrading HuggingFace Transformers from version 4.38.1 to 4.38.2 significantly improved Gemma's performance.

These gains reflect HuggingFace's focus on, and investment in, performance optimization.

For models that have received less manual optimization, such as SegmentAnything, the benchmark used the implementation released by the original research authors. Here the performance gap relative to Keras is larger than for most other models.

This suggests that Keras delivers strong out-of-the-box performance: users get fast models without having to master every optimization technique.

Finding 4

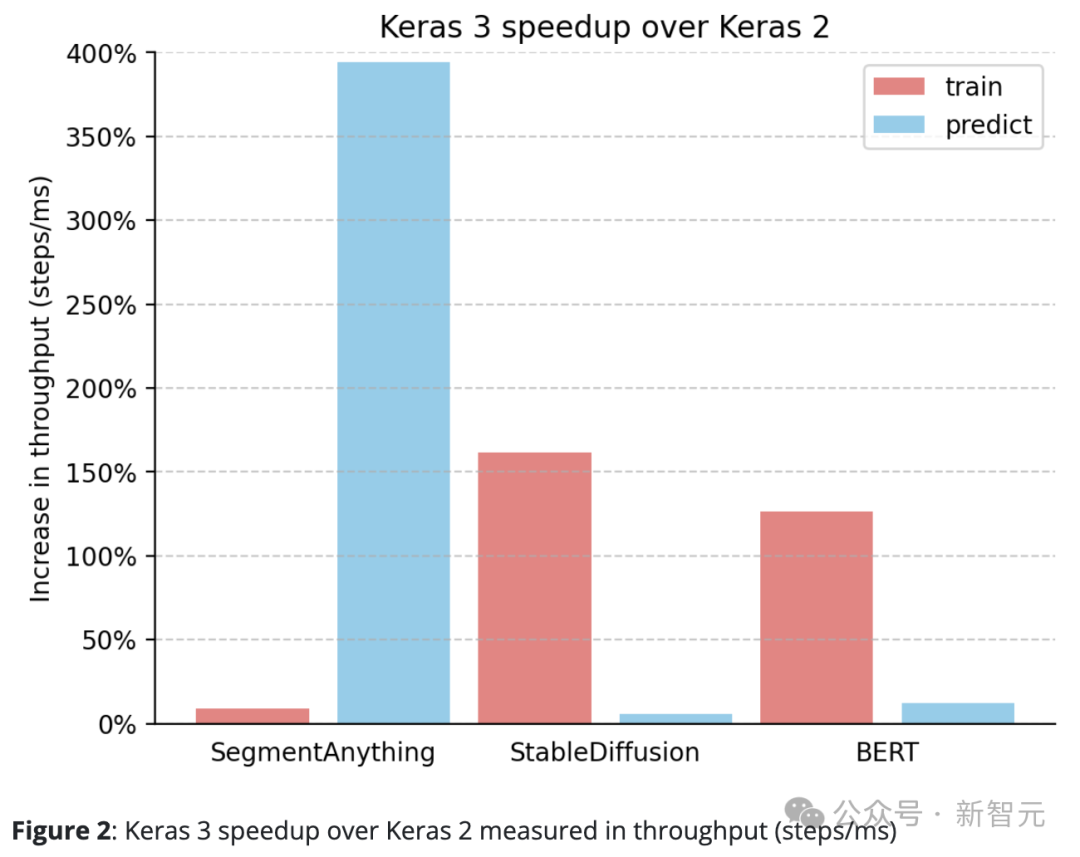

Keras 3 consistently outperforms Keras 2.

For example, SegmentAnything inference sped up by an astonishing 380%, StableDiffusion training throughput rose by more than 150%, and BERT training throughput rose by more than 100%.

This is mainly because Keras 2 in some cases calls more TensorFlow fused operations directly, which may not be optimal for XLA compilation.

It’s worth noting that even just upgrading to Keras 3 and continuing to use the TensorFlow backend can result in significant performance improvements.

Conclusion

The performance of the framework depends largely on the specific model used.

Keras 3 makes it easy to pick the fastest framework for a given task, and that choice almost always outperforms both Keras 2 and native PyTorch implementations.

More importantly, Keras 3 models provide excellent out-of-the-box performance without complex underlying optimizations.
