Transformer leads the flourishing of AI: from algorithm innovation to industrial application, understand the future of artificial intelligence in one article


1. Introduction

In recent years, artificial intelligence has made remarkable progress, most visibly in natural language processing (NLP) and computer vision. In both fields, a model called the Transformer has become a research focus, and new results built around it keep emerging. This article looks at how the Transformer is driving the growth of AI, covering its principles, applications, and industrial practice.

2. Brief analysis of Transformer principle

Background knowledge

Before introducing the Transformer, it helps to understand its background: the recurrent neural network (RNN) and the long short-term memory network (LSTM). RNNs suffer from vanishing and exploding gradients when processing sequence data, which makes them perform poorly on long-sequence tasks. LSTM was introduced to address this problem, and its gating mechanism effectively alleviates vanishing and exploding gradients.
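The gradient problem described above can be illustrated with a deliberately simplified sketch. It assumes a scalar, linear recurrence h_t = w * h_(t-1), which is not a real RNN; the function name gradient_through_time is hypothetical. In this toy setting the gradient of the last state with respect to the first is just w multiplied by itself once per time step, so it shrinks toward zero when |w| < 1 and grows rapidly when |w| > 1.

```python
def gradient_through_time(recurrent_weight, steps):
    """Gradient of h_T with respect to h_0 for the toy recurrence
    h_t = recurrent_weight * h_(t-1) (an illustrative assumption)."""
    grad = 1.0
    for _ in range(steps):
        # Backpropagating through one time step multiplies by the weight.
        grad *= recurrent_weight
    return grad


if __name__ == "__main__":
    for w in (0.9, 1.0, 1.1):
        print(f"w={w}: gradient after 100 steps = {gradient_through_time(w, 100):.3e}")
```

Real RNNs multiply by Jacobian matrices rather than a single scalar, but the same compounding effect is what makes long sequences hard to train on.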

Proposal of Transformer

In 2017, a Google team introduced a new model, the Transformer. Its core idea is to replace the recurrence of traditional recurrent neural networks with a self-attention mechanism. The Transformer achieved remarkable results in NLP, especially in machine translation, where it outperformed LSTM-based models, and it has since been widely used in tasks such as machine translation and question answering.

Transformer architecture

The Transformer consists of two parts: an encoder and a decoder. The encoder maps the input sequence into a series of vectors, and the decoder uses the encoder's output together with the partial output already generated to predict the next output. In sequence-to-sequence tasks such as machine translation, the encoder maps the source-language sentence into a series of vectors, and the decoder generates the target-language sentence from the encoder's output and the partial output so far.

(1) Encoder: The encoder consists of multiple identical layers, each with two sub-layers: a multi-head self-attention mechanism and a position-wise fully connected feed-forward network.

(2) Decoder: The decoder also consists of multiple identical layers, each with three sub-layers: a multi-head self-attention mechanism, an encoder-decoder attention mechanism, and a position-wise fully connected feed-forward network. Positional encoding is added to the inputs so that the model can use token order, and each sub-layer is wrapped in a residual connection that helps information flow through the whole network.
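Because the decoder may only rely on the partial output produced so far, the original Transformer restricts its self-attention with a causal mask: position i can attend to positions 0..i but not to later ones. The following is a minimal sketch of that idea in plain Python; the helper names causal_mask and masked_softmax are hypothetical, and the uniform scores are made-up illustration data.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax that gives zero weight to masked-out positions."""
    weights = []
    for row, allowed in zip(scores, mask):
        # Masked positions get -inf so that exp() maps them to exactly 0.
        masked = [s if ok else -math.inf for s, ok in zip(row, allowed)]
        peak = max(masked)
        exps = [math.exp(s - peak) for s in masked]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


if __name__ == "__main__":
    scores = [[0.0] * 3 for _ in range(3)]  # uniform toy scores
    for row in masked_softmax(scores, causal_mask(3)):
        print(row)
```

With uniform scores, each row spreads its weight evenly over the positions it is allowed to see, so the first position attends only to itself.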

Self-attention mechanism

The self-attention mechanism is the core of the Transformer. It is computed as follows:

(1) Compute the Query, Key, and Value matrices. All three are obtained by linear transformations of the input vectors.

(2) Calculate the attention score, which is the dot product of Query and Key.

(3) Divide the attention scores by a constant (the square root of the Key dimension) and apply softmax to obtain the attention weights.

(4) Multiply the attention weight and Value to obtain the weighted output.

(5) Perform linear transformation on the weighted output to obtain the final output.
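The five steps above can be sketched for a single attention head in plain Python. This is a minimal illustration, not a production implementation: the helper names (matmul, transpose, softmax, self_attention) and the toy identity weights are assumptions chosen for readability, the scaling constant is taken to be the square root of the Key dimension, and softmax is used to turn scaled scores into weights.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v, w_o):
    # (1) Linear transformations of the input give Query, Key, and Value.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    # (2) Attention scores are dot products of queries and keys.
    scores = matmul(q, transpose(k))
    # (3) Scale by sqrt(d_k), then softmax each row to get attention weights.
    d_k = len(q[0])
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # (4) Weight the values.
    context = matmul(weights, v)
    # (5) Final linear transformation of the weighted output.
    return matmul(context, w_o), weights


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 tokens, 2 features (toy data)
    identity = [[1.0, 0.0], [0.0, 1.0]]       # toy projection weights
    output, weights = self_attention(x, identity, identity, identity, identity)
    print(output)
    print(weights)
```

Each row of the returned weights sums to 1, so every output vector is a weighted average of the Value vectors. Multi-head attention runs several such heads in parallel with different projection matrices and concatenates their results before the final linear transformation.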

3. Application of Transformer

Natural Language Processing

Transformer has achieved remarkable results in the field of NLP, mainly including the following aspects:

(1) Machine translation: The Transformer achieved the best results at the time on the WMT 2014 English-German translation task.

(2) Text classification: The Transformer performs well on text classification tasks, especially on long texts, where it outperforms LSTM.

(3) Sentiment analysis: The Transformer can capture long-distance dependencies and therefore achieves high accuracy on sentiment analysis tasks.

Computer Vision

With the success of Transformer in the field of NLP, researchers began to apply it to the field of computer vision and achieved the following results:

(1) Image Classification: Transformer-based models have achieved good results in the ImageNet image classification task.

(2) Object detection: Transformer-based models such as DETR (Detection Transformer) perform well on object detection tasks.

(3) Image generation: Transformer-based models such as Image GPT and DALL-E have achieved impressive results in image generation tasks.

4. China's research progress in the field of Transformer

Academic research

Chinese scholars have achieved fruitful results in the field of Transformer, such as:

(1) The ERNIE model proposed by Tsinghua University improves the performance of pre-trained language models through knowledge enhancement.

(2) The BERT-wwm model proposed by the Joint Laboratory of Harbin Institute of Technology and iFLYTEK Research improves performance on Chinese tasks by changing the pre-training objective to whole word masking.

Industrial Application

Chinese enterprises have also achieved remarkable results in the application of Transformer, such as:

(1) The ERNIE model proposed by Baidu is used in search engines, speech recognition and other fields.

(2) The M6 model proposed by Alibaba is applied to e-commerce recommendation, advertising prediction and other businesses.

5. The application status and future development trend of Transformer in the industry

Application status

Transformer is increasingly widely used in the industry, mainly including the following aspects:

(1) Search engine: Use Transformer for semantic understanding and improve search quality.

(2) Speech recognition: Through the Transformer model, more accurate speech recognition is achieved.

(3) Recommendation system: Transformer-based recommendation model improves recommendation accuracy and user experience.

Future development trends

(1) Model compression and optimization: As the scale of the model continues to expand, how to compress and optimize the Transformer model has become a research hotspot.

(2) Cross-modal learning: Transformer has advantages in processing multi-modal data and is expected to make breakthroughs in the field of cross-modal learning in the future.

(3) Development of pre-training models: As computing power increases, pre-training models will continue to develop.

The above is the detailed content of Transformer leads the flourishing of AI: from algorithm innovation to industrial application, understand the future of artificial intelligence in one article. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1392

1392

52

52

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

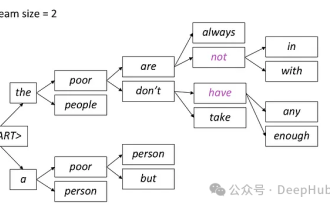

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

In natural language generation tasks, sampling method is a technique to obtain text output from a generative model. This article will discuss 5 common methods and implement them using PyTorch. 1. GreedyDecoding In greedy decoding, the generative model predicts the words of the output sequence based on the input sequence time step by time. At each time step, the model calculates the conditional probability distribution of each word, and then selects the word with the highest conditional probability as the output of the current time step. This word becomes the input to the next time step, and the generation process continues until some termination condition is met, such as a sequence of a specified length or a special end marker. The characteristic of GreedyDecoding is that each time the current conditional probability is the best

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Explore the underlying principles and algorithm selection of the C++sort function

Apr 02, 2024 pm 05:36 PM

Explore the underlying principles and algorithm selection of the C++sort function

Apr 02, 2024 pm 05:36 PM

The bottom layer of the C++sort function uses merge sort, its complexity is O(nlogn), and provides different sorting algorithm choices, including quick sort, heap sort and stable sort.

Can artificial intelligence predict crime? Explore CrimeGPT's capabilities

Mar 22, 2024 pm 10:10 PM

Can artificial intelligence predict crime? Explore CrimeGPT's capabilities

Mar 22, 2024 pm 10:10 PM

The convergence of artificial intelligence (AI) and law enforcement opens up new possibilities for crime prevention and detection. The predictive capabilities of artificial intelligence are widely used in systems such as CrimeGPT (Crime Prediction Technology) to predict criminal activities. This article explores the potential of artificial intelligence in crime prediction, its current applications, the challenges it faces, and the possible ethical implications of the technology. Artificial Intelligence and Crime Prediction: The Basics CrimeGPT uses machine learning algorithms to analyze large data sets, identifying patterns that can predict where and when crimes are likely to occur. These data sets include historical crime statistics, demographic information, economic indicators, weather patterns, and more. By identifying trends that human analysts might miss, artificial intelligence can empower law enforcement agencies

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

01 Outlook Summary Currently, it is difficult to achieve an appropriate balance between detection efficiency and detection results. We have developed an enhanced YOLOv5 algorithm for target detection in high-resolution optical remote sensing images, using multi-layer feature pyramids, multi-detection head strategies and hybrid attention modules to improve the effect of the target detection network in optical remote sensing images. According to the SIMD data set, the mAP of the new algorithm is 2.2% better than YOLOv5 and 8.48% better than YOLOX, achieving a better balance between detection results and speed. 02 Background & Motivation With the rapid development of remote sensing technology, high-resolution optical remote sensing images have been used to describe many objects on the earth’s surface, including aircraft, cars, buildings, etc. Object detection in the interpretation of remote sensing images

Practice and reflections on Jiuzhang Yunji DataCanvas multi-modal large model platform

Oct 20, 2023 am 08:45 AM

Practice and reflections on Jiuzhang Yunji DataCanvas multi-modal large model platform

Oct 20, 2023 am 08:45 AM

1. The historical development of multi-modal large models. The photo above is the first artificial intelligence workshop held at Dartmouth College in the United States in 1956. This conference is also considered to have kicked off the development of artificial intelligence. Participants Mainly the pioneers of symbolic logic (except for the neurobiologist Peter Milner in the middle of the front row). However, this symbolic logic theory could not be realized for a long time, and even ushered in the first AI winter in the 1980s and 1990s. It was not until the recent implementation of large language models that we discovered that neural networks really carry this logical thinking. The work of neurobiologist Peter Milner inspired the subsequent development of artificial neural networks, and it was for this reason that he was invited to participate in this project.

Application of algorithms in the construction of 58 portrait platform

May 09, 2024 am 09:01 AM

Application of algorithms in the construction of 58 portrait platform

May 09, 2024 am 09:01 AM

1. Background of the Construction of 58 Portraits Platform First of all, I would like to share with you the background of the construction of the 58 Portrait Platform. 1. The traditional thinking of the traditional profiling platform is no longer enough. Building a user profiling platform relies on data warehouse modeling capabilities to integrate data from multiple business lines to build accurate user portraits; it also requires data mining to understand user behavior, interests and needs, and provide algorithms. side capabilities; finally, it also needs to have data platform capabilities to efficiently store, query and share user profile data and provide profile services. The main difference between a self-built business profiling platform and a middle-office profiling platform is that the self-built profiling platform serves a single business line and can be customized on demand; the mid-office platform serves multiple business lines, has complex modeling, and provides more general capabilities. 2.58 User portraits of the background of Zhongtai portrait construction