Open source large-model AI agent operating system: control AI agents like Windows

This article is reprinted with the authorization of AIGC Open Community. Please contact the original source for permission to reprint.

To learn more about AIGC, please visit: 51CTO AI.x Community

https://www.51cto.com/aigc/

Last year, the emergence of AutoGPT showed the powerful automation capabilities of AI agents and opened up a new AI agent track. However, many problems remain to be solved in sub-task scheduling, resource allocation, and collaboration between agents.

So researchers at Rutgers University developed AIOS, an AI agent operating system with large models at its core. It addresses the low resource utilization that appears as the number of AI agents grows. It also facilitates context switching between agents, enables agents to run concurrently, and maintains agent access control.

Open source address: https://github.com/agiresearch/AIOS

Paper address: https://arxiv.org/abs/2403.16971

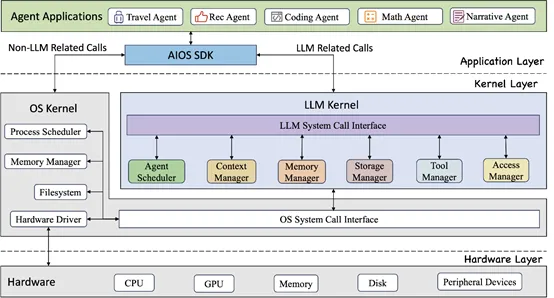

The architecture of AIOS is similar to that of the PC operating systems we use. It is divided into three layers: the application layer, the kernel layer, and the hardware layer. The main difference is that AIOS builds a dedicated manager into the kernel layer that handles tasks related to large models.

The application layer mainly consists of agent applications (e.g., a travel agent, a math agent, a code agent). The kernel layer combines a traditional OS kernel with a large-model kernel: the OS part is mainly used for file management, while the large-model part is used for the scheduling and management of AI agents.

The hardware layer consists of devices such as the CPU, GPU, memory, and peripherals. The large-model kernel cannot interact with the hardware directly. Instead, it manages hardware resources indirectly through the calls provided by the kernel layer, which preserves system integrity and efficiency.

AI Agent Scheduler

The AI agent scheduler is responsible for scheduling and optimizing the agents' requests to the large model so that the model's computational resources are fully used. When multiple agents send requests to the large model at the same time, the scheduler orders the requests according to a specific scheduling algorithm, so that no single agent occupies the model for a long time and leaves the other agents waiting.

In addition, the design of AIOS supports more complex scheduling strategies, for example taking the dependencies between agent requests into account to achieve better resource allocation.

Without scheduling, agents have to execute their tasks one by one in order, and later agents wait a long time. With a scheduling algorithm, the requests of the agents can be interleaved and executed in parallel, significantly reducing overall wait time and response latency.
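The difference between one-by-one execution and interleaved execution can be illustrated with a small simulation. This is only a sketch under stated assumptions: round-robin is used as one common time-slicing policy, the agent names and the "units of model time" per request are made up for illustration, and none of the function names come from the AIOS code base.

```python
from collections import deque


def run_sequential(jobs):
    """Run each request to completion in arrival order.

    Returns {agent: clock value at which the agent first gets the model}.
    """
    clock, first_served = 0, {}
    for agent, units in jobs:
        first_served[agent] = clock
        clock += units
    return first_served


def run_round_robin(jobs, time_slice=1):
    """Give each pending request at most `time_slice` units, then requeue it.

    Returns two dicts: first-service time and completion time per agent.
    """
    queue = deque(jobs)
    clock, first_served, finished = 0, {}, {}
    while queue:
        agent, remaining = queue.popleft()
        first_served.setdefault(agent, clock)
        used = min(time_slice, remaining)
        clock += used
        if remaining > used:
            # Request is not done yet: it goes to the back of the queue.
            queue.append((agent, remaining - used))
        else:
            finished[agent] = clock
    return first_served, finished


# Hypothetical workload: (agent name, units of model time the request needs).
jobs = [("travel_agent", 5), ("math_agent", 2), ("code_agent", 3)]

seq_first = run_sequential(jobs)
rr_first, rr_done = run_round_robin(jobs, time_slice=1)

print("sequential first service:", seq_first)
print("round-robin first service:", rr_first)
print("round-robin completion:", rr_done)
```

In this toy workload, the short math request no longer has to sit behind the long travel request before it gets any model time. The trade-off is that the longest request finishes later than it would if it ran alone, which is the usual cost of time slicing.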

Context Manager

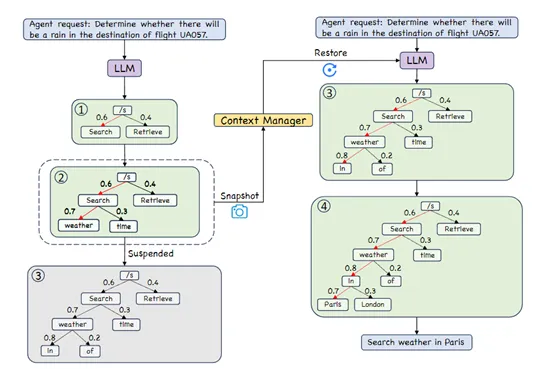

Because large-model generation typically uses heuristic search such as beam search, a search tree is built up step by step and different paths are evaluated before the final result is produced.

If the scheduler suspends a large model in the middle of generation, the context manager saves a snapshot of the current beam search tree state (including the probability of each path), so that intermediate states are not lost and earlier computation is not wasted.

When the large model regains execution resources, the context manager restores the beam search state from the point of interruption and generation continues with the remaining part, ensuring the completeness and accuracy of the final result.

In addition, most large models have a context length limit, and the input context in real scenarios often exceeds it. To address this, the context manager integrates functions such as text summarization that can compress or chunk long contexts, so that large models can understand and process long context information efficiently.
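The snapshot-and-restore idea can be sketched with a toy beam search. Everything here is an assumption made for illustration: `toy_next_token_logprobs` is a deterministic stand-in for a real model, and `BeamState` and `ContextManager` are hypothetical names, not the classes used in AIOS.

```python
import copy
import math
from dataclasses import dataclass, field

VOCAB = ["a", "b", "c"]


def toy_next_token_logprobs(prefix):
    """Hypothetical stand-in for an LLM: deterministic scores from prefix length."""
    weights = [((len(prefix) + i) % 3) + 1 for i in range(len(VOCAB))]
    total = sum(weights)
    return {tok: math.log(w / total) for tok, w in zip(VOCAB, weights)}


@dataclass
class BeamState:
    # Each beam is (tokens so far, cumulative log-probability).
    beams: list = field(default_factory=lambda: [((), 0.0)])
    step: int = 0


def advance(state, beam_width=2):
    """Expand every beam by one token and keep the best `beam_width` paths."""
    candidates = []
    for tokens, score in state.beams:
        for tok, logprob in toy_next_token_logprobs(tokens).items():
            candidates.append((tokens + (tok,), score + logprob))
    candidates.sort(key=lambda c: c[1], reverse=True)
    state.beams = candidates[:beam_width]
    state.step += 1


def generate(state, max_steps, budget=None):
    """Advance until `max_steps`; stop early after `budget` steps (simulated preemption)."""
    used = 0
    while state.step < max_steps and (budget is None or used < budget):
        advance(state)
        used += 1
    return state


class ContextManager:
    """Keeps one snapshot of the search state per suspended agent."""

    def __init__(self):
        self._snapshots = {}

    def snapshot(self, agent_id, state):
        self._snapshots[agent_id] = copy.deepcopy(state)

    def restore(self, agent_id):
        return self._snapshots.pop(agent_id)


# Reference run without interruption.
uninterrupted = generate(BeamState(), max_steps=4)

# Same request, preempted after two steps and resumed later.
ctx = ContextManager()
partial = generate(BeamState(), max_steps=4, budget=2)
ctx.snapshot("math_agent", partial)
resumed = generate(ctx.restore("math_agent"), max_steps=4)

print(resumed.beams == uninterrupted.beams)
```

Because the snapshot stores both the surviving paths and their cumulative scores, the resumed run continues from step 2 instead of restarting, and it should end with the same beams as the uninterrupted run.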
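A rough sketch of the compress-or-chunk step follows. It makes several simplifying assumptions: words stand in for tokens, `naive_summary` is a placeholder that just keeps the first few words of a chunk (a real system would call a summarization model), and the function names are hypothetical rather than taken from AIOS.

```python
def chunk_words(text, chunk_size):
    """Split text into consecutive chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def naive_summary(chunk, keep_words):
    """Placeholder summarizer: keeps only the first `keep_words` words."""
    return " ".join(chunk.split()[:keep_words])


def compress_context(text, limit_words, chunk_size):
    """Return text unchanged if it fits, else a per-chunk summary within the limit."""
    if len(text.split()) <= limit_words:
        return text
    chunks = chunk_words(text, chunk_size)
    # Share the available budget evenly between chunks.
    keep = max(1, limit_words // len(chunks))
    compressed = " ".join(naive_summary(c, keep) for c in chunks)
    # Hard cap in case there are more chunks than the limit allows words.
    return " ".join(compressed.split()[:limit_words])


# Hypothetical 100-word context squeezed into a 20-word budget.
long_context = " ".join(f"w{i}" for i in range(100))
short_context = compress_context(long_context, limit_words=20, chunk_size=25)
print(short_context)
```

Summarizing each chunk separately keeps some material from every part of the input, whereas plain truncation would drop everything after the limit.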

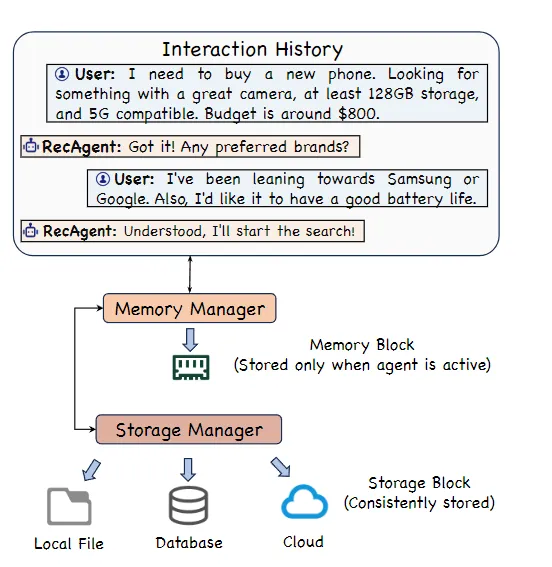

Memory Manager

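The memory manager is mainly responsible for short-term memory resources, providing each AI agent with efficient temporary storage for interaction logs and intermediate data.

While an AI agent is waiting to execute or is running, the data it needs is kept in a memory block allocated by the memory manager. Once the agent's task ends, the corresponding memory block is reclaimed by the system to ensure efficient use of memory resources.

AIOS allocates independent memory to each AI agent and uses the access manager to isolate memory between different agents. In the future, AIOS will introduce more sophisticated memory sharing mechanisms and hierarchical caching strategies to further optimize the overall performance of AI agents.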
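The allocate, isolate, and reclaim cycle described above can be sketched as follows. This is an illustrative simplification, not the AIOS implementation: the class and method names are hypothetical, and the access check that the article attributes to a separate access manager is folded into the same class to keep the example short.

```python
class MemoryManager:
    """Per-agent short-term memory with a simple ownership check."""

    def __init__(self):
        self._blocks = {}  # agent_id -> list of stored records

    def allocate(self, agent_id):
        """Create an empty block when an agent is queued or starts running."""
        self._blocks.setdefault(agent_id, [])

    def write(self, caller_id, owner_id, record):
        self._check_access(caller_id, owner_id)
        self._blocks[owner_id].append(record)

    def read(self, caller_id, owner_id):
        self._check_access(caller_id, owner_id)
        return list(self._blocks[owner_id])

    def free(self, agent_id):
        """Reclaim the block once the agent's task has finished."""
        self._blocks.pop(agent_id, None)

    def is_allocated(self, agent_id):
        return agent_id in self._blocks

    def _check_access(self, caller_id, owner_id):
        # Assumed policy: an agent may only touch its own block.
        if caller_id != owner_id:
            raise PermissionError(f"{caller_id} may not access memory of {owner_id}")
        if owner_id not in self._blocks:
            raise KeyError(f"no memory block allocated for {owner_id}")


memory = MemoryManager()
memory.allocate("travel_agent")
memory.allocate("code_agent")
memory.write("travel_agent", "travel_agent", {"step": 1, "note": "searched flights"})

try:
    memory.read("code_agent", "travel_agent")
except PermissionError as err:
    print("blocked:", err)

memory.free("travel_agent")
print(memory.is_allocated("travel_agent"))
```

Tying the block's lifetime to the agent's task means nothing has to be cleaned up later by a separate pass, at the cost of losing the data as soon as the task ends. That matches the short-term role the article describes for this component.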
