The AIxiv column is a column where this site publishes academic and technical content. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com.

From the international top GPT-4 128K, Claude 200K to the domestic "Red Fried Chicken" Kimi Chat that supports more than 2 million words of text, large language model (LLM) in long context technology Rolled up involuntarily. When the smartest minds in the world are working on something, the importance and difficulty of the matter are self-evident.

Extremely long contexts can greatly expand the productivity value of large models. With the popularity of AI, users are no longer satisfied with playing with large models and doing a few brain teasers. Users are beginning to desire to use large models to truly improve productivity. After all, the PPT that used to take a week to create can now be generated in minutes by just feeding the large model a string of prompt words and a few reference documents. Who wouldn't love it as a working person?

Recently, some new efficient sequence modeling methods have emerged, such as Lightning Attention (TransNormerLLM), State Space Modeling (Mamba), Linear RNN (RWKV, HGRN, Griffin), etc., which have become a hot research direction. Researchers are eager to transform the already mature 7-year-old Transformer architecture to obtain a new architecture with comparable performance but only linear complexity. This type of approach focuses on model architecture design and provides hardware-friendly implementation based on CUDA or Triton, allowing it to be efficiently calculated inside a single-card GPU like FlashAttention.

At the same time, another controller of long sequence training has also adopted a different strategy: sequence parallelism has gained more and more attention. By dividing the long sequence into multiple equally divided short sequences in the sequence dimension, and dispersing the short sequences to different GPU cards for parallel training, and supplemented by inter-card communication, the effect of sequence parallel training is achieved. From the earliest Colossal-AI sequence parallelism, to Megatron sequence parallelism, to DeepSpeed Ulysses, and more recently, Ring Attention, researchers have continued to design more elegant and efficient communication mechanisms to improve the training efficiency of sequence parallelism. Of course, these known methods are all designed for traditional attention mechanisms, which we call Softmax Attention in this article. These methods have already been analyzed by various experts, so this article will not discuss them in detail.

It should be noted that the name of the natural language processing method includes Linear Attention, but it is not limited to the Linear Attention method, but can be widely used including Lightning Attention (TransNormerLLM), State Space Modeling (Mamba ), Linear RNN (RWKV, HGRN, Griffin), etc. Linear sequence modeling methods.

Introduction to LASP method

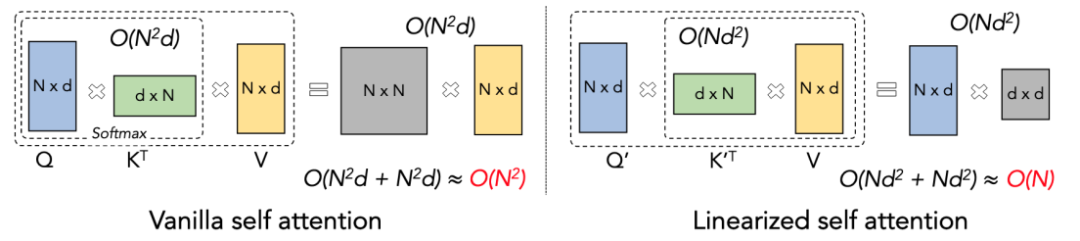

In order to better understand the idea of LASP, let us first review the calculation formula of traditional Softmax Attention: O=softmax((QK^T)⊙M)V, where Q, K, V, M, and O are respectively Query, Key, Value, Mask and Output matrices, M here is a lower triangular all-1 matrix in one-way tasks (such as GPT), and can be ignored in two-way tasks (such as BERT), that is, there is no Mask matrix for bi-directional tasks . We will split LASP into four points for explanation below:

Linear Attention Principle

Linear Attention can be regarded as a variant of Softmax Attention. Linear Attention removes the computationally expensive Softmax operator, and the calculation formula of Attention can be written as a concise form of O=((QK^T)⊙M) V. However, due to the existence of the Mask matrix M in the one-way task, this form can still only perform left multiplication calculation (that is, calculate QK^T first), so the linear complexity of O (N) cannot be obtained. But for bidirectional tasks, since there is no Mask matrix, the calculation formula can be further simplified to O=(QK^T) V. The clever thing about Linear Attention is that by simply using the associative law of matrix multiplication, its calculation formula can be further transformed into: O=Q (K^T V). This calculation form is called right multiplication. It can be seen that Linear Attention is Tempting O (N) complexity can be achieved in this bidirectional task!

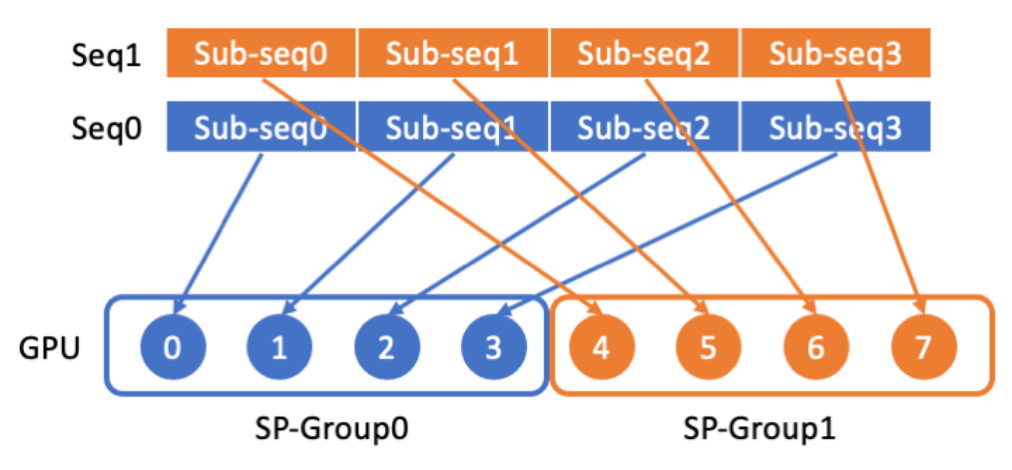

LASP data distribution

LASP first divides the long sequence data into multiple equally divided subsequences from the sequence dimension. The subsequence is then distributed to all GPUs in the sequence parallel communication group, so that each GPU has a subsequence for subsequent sequence parallel calculations.

LASP core mechanism

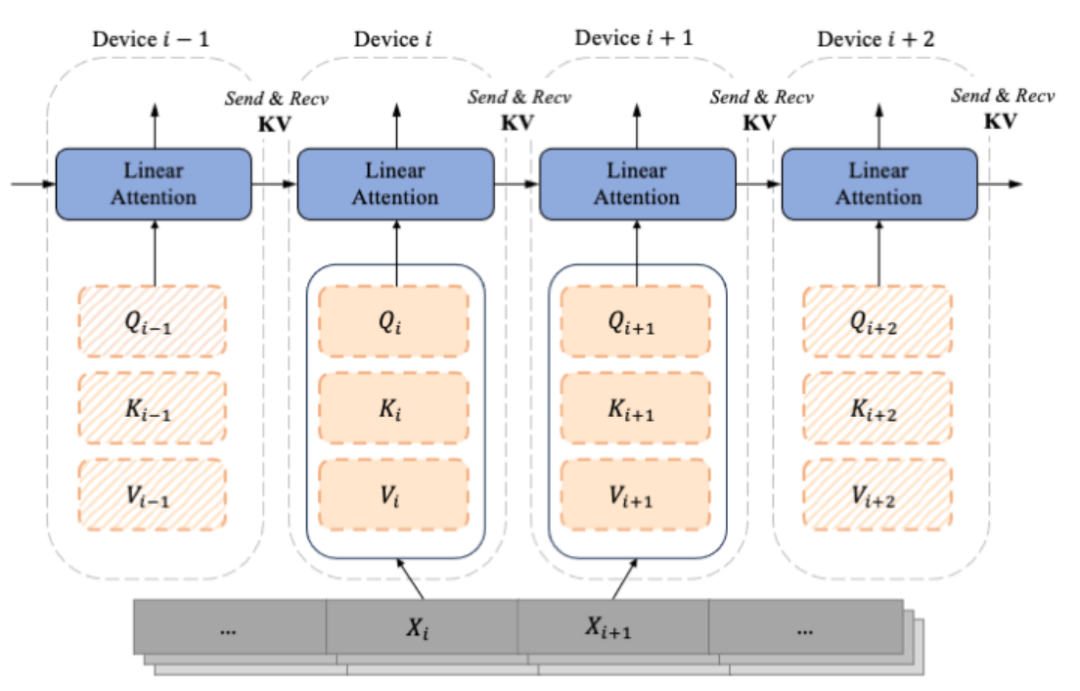

As the decoder-only GPT-like model gradually becomes the de facto standard of LLM, LASP The design fully considers the scenario of one-way Casual tasks. Calculated from the segmented subsequence Xi are Qi, Ki, and Vi segmented according to the sequence dimensions. Each index i corresponds to a Chunk and a Device (i.e., a GPU). Due to the existence of the Mask matrix, the LASP author cleverly distinguishes the Qi, Ki, and Vi corresponding to each Chunk into two types, namely: Intra-Chunk and Inter-Chunk. Among them, Intra-Chunk is the Chunk on the diagonal after the Mask matrix is divided into blocks. It can be considered that the Mask matrix still exists, and left multiplication still needs to be used; Inter-Chunk is the Chunk on the off-diagonal line of the Mask matrix, which can be considered not With the existence of the Mask matrix, right multiplication can be used; obviously, when the more Chunks are divided, the proportion of Chunks on the diagonal will be smaller, and the proportion of Chunks on the off-diagonal will be larger. Right multiplication can be used to achieve linear complexity. The more chunks the Attention calculates. Among them, for the calculation of the right multiplied Inter-Chunk, during forward calculation, each device needs to use point-to-point communication to Recive the KV of the previous device and send its own updated KV to the next device. When calculating in reverse, it is just the opposite, except that the objects of Send and Recive become the gradient dKV of KV. The forward calculation process is shown in the figure below:

LASP code implementation

In order to improve the computing efficiency of LASP on the GPU, The author performed Kernel Fusion for the calculations of Intra-Chunk and Inter-Chunk respectively, and also integrated the update calculations of KV and dKV into the calculations of Intra-Chunk and Inter-Chunk. Additionally, in order to avoid recomputing the activation KV during backpropagation, the authors chose to store it in the GPU's HBM immediately after the forward propagation calculation. During the subsequent backpropagation, LASP directly accesses the KV for use. It should be noted that the KV size stored in HBM is d x d and is completely unaffected by the sequence length N. When the input sequence length N is large, the memory footprint of KV becomes insignificant. Inside a single GPU, the author implemented Lightning Attention implemented by Triton to reduce the IO overhead between HBM and SRAM, thereby accelerating single-card Linear Attention calculations.

Readers who want to know more details can read Algorithm 2 (LASP forward process) and Algorithm 3 (LASP reverse process) in the paper, as well as the detailed derivation process in the paper.

Traffic Analysis

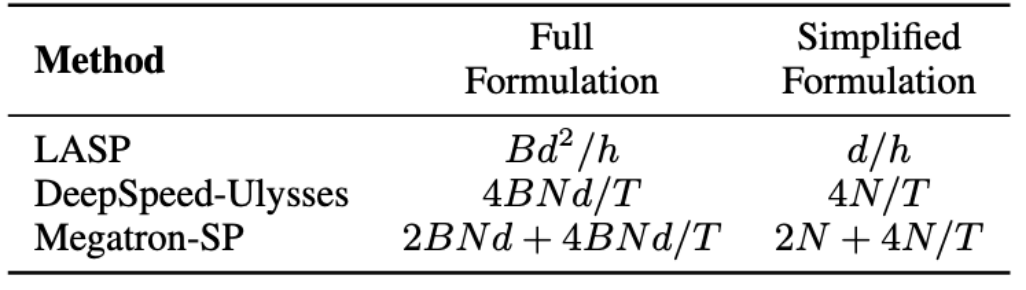

In the LASP algorithm, it should be noted that forward propagation requires KV activation communication at each Linear Attention module layer. The traffic is Bd^2/h, where B is the batch size and h is the number of heads. In contrast, Megatron-SP uses an All-Gather operation after the two Layer Norm layers in each Transformer layer, and a Reduce-Scatter operation after the Attention and FFN layers, which causes its communication The quantity is 2BNd 4BNd/T, where T is the sequence parallel dimension. DeepSpeed-Ulysses uses an All-to-All set communication operation to process the input Q, K, V and output O of each Attention module layer, resulting in a communication volume of 4BNd/T. The communication volume comparison between the three is shown in the table below. where d/h is the head dimension, usually set to 128. In practical applications, LASP can achieve the lowest theoretical communication volume when N/T>=32. Furthermore, LASP's communication volume is not affected by sequence length N or subsequence length C, which is a huge advantage for parallel computing of extremely long sequences across large GPU clusters.

Data-Sequence Hybrid Parallel

Data parallelism (i.e. Batch-level data segmentation) is already distributed training For regular operations, based on original data parallelism (PyTorch DDP), sliced data parallelism has evolved to save more graphics memory. From the original DeepSpeed ZeRO series to the FSDP officially supported by PyTorch, sliced data parallelism has been mature enough and has been More and more users are using it. As a sequence-level data segmentation method, LASP is compatible with various data parallel methods including PyTorch DDP, Zero-1/2/3, and FSDP. This is undoubtedly good news for LASP users.

Accuracy Experiment

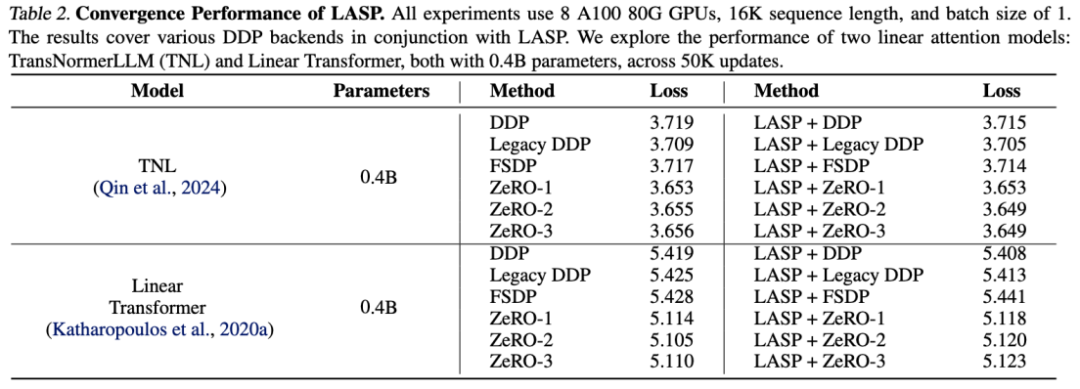

The experimental results on TransNormerLLM (TNL) and Linear Transformer show that LASP, as a system optimization method, can be combined with various DDP backends, and Both can achieve the same performance as Baseline.

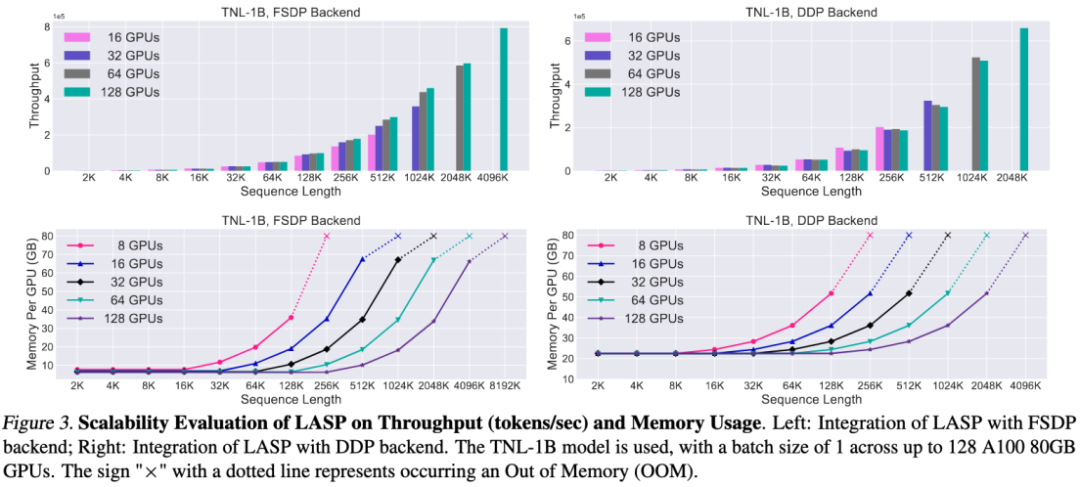

Scalability Experiment

Thanks to the efficient communication mechanism design, LASP can be easily expanded to hundreds of GPU cards, and Maintains great scalability.

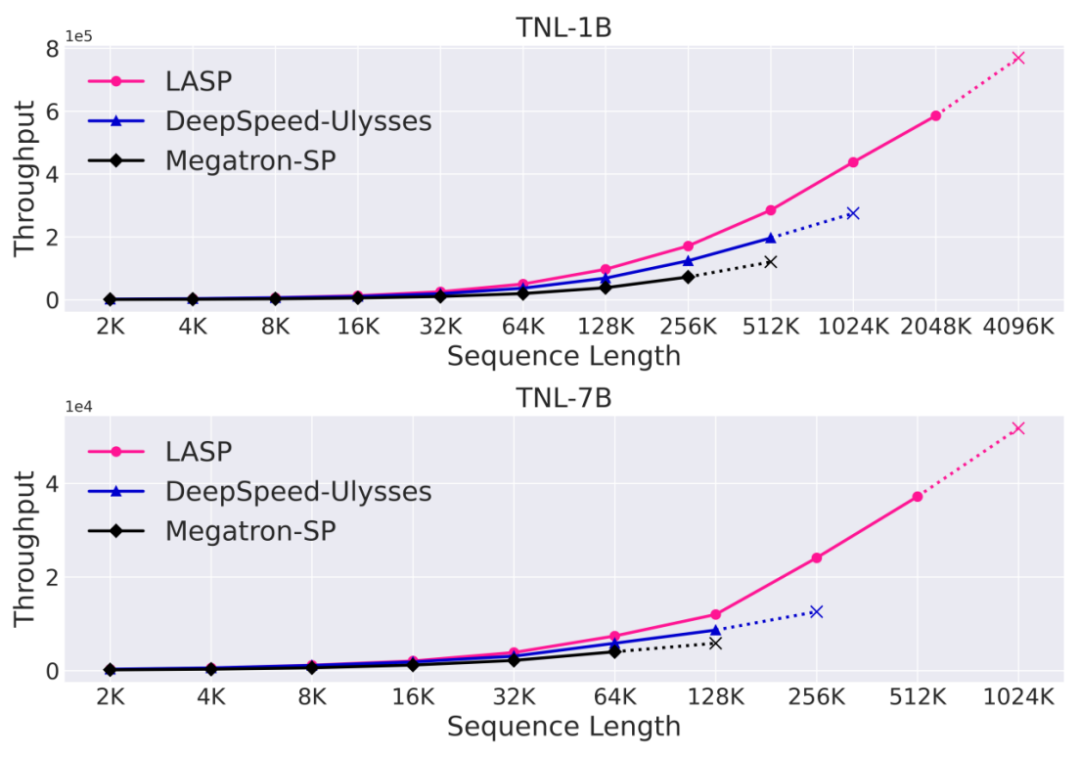

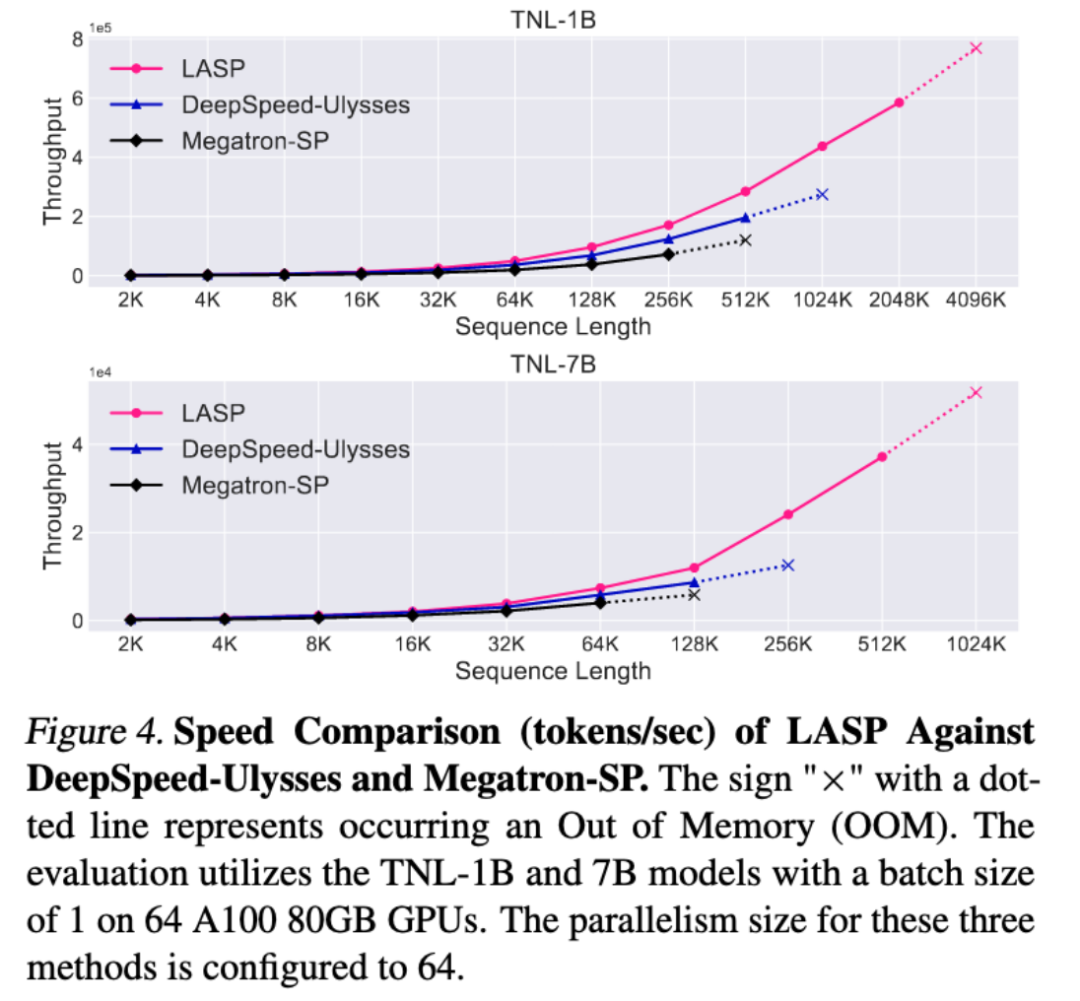

Speed comparison experiment

Compared with the mature sequence parallel methods Megatron-SP and DeepSpeed-Ulysses, LASP is the most trainable The long sequence length is 8 times that of Megatron-SP and 4 times that of DeepSpeed-Ulysses, and the speed is 136% and 38% faster respectively.

Conclusion

In order to facilitate your trial, the author has provided a ready-to-use LASP code implementation without downloading data. Set and model, just use PyTorch to experience the extremely long and extremely fast sequence parallel capabilities of LASP in minutes.

Code portal: https://github.com/OpenNLPLab/LASP

The above is the detailed content of Extremely long sequences, extremely fast: LASP sequence parallelism for a new generation of efficient large language models. For more information, please follow other related articles on the PHP Chinese website!