Tsinghua's latest! RoadBEV: How to achieve road surface reconstruction under BEV?

Original title: RoadBEV: Road Surface Reconstruction in Bird's Eye View

Paper link: https://arxiv.org/pdf/2404.06605.pdf

Code link: https://github.com/ztsrxh/RoadBEV

Author affiliation: Tsinghua University, University of California, Berkeley

## Paper idea:

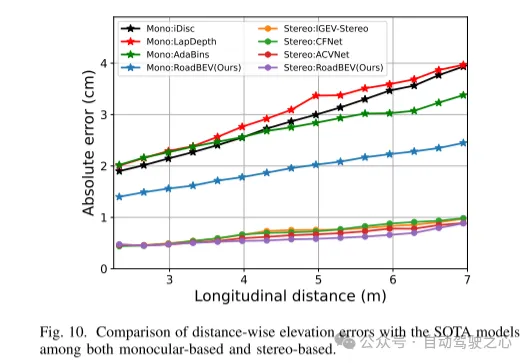

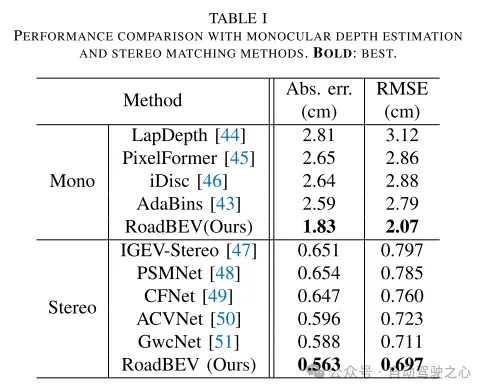

Road surface conditions, especially geometric profiles, strongly affect the driving performance of autonomous vehicles. Vision-based online road reconstruction promises to capture road information in advance. Existing solutions such as monocular depth estimation and stereo matching have their limitations. The recent bird's-eye view (BEV) perception technique offers strong motivation for more reliable and accurate reconstruction. This paper proposes two effective models for road elevation reconstruction in BEV, named RoadBEV-mono and RoadBEV-stereo, which estimate road elevation from monocular and stereo images, respectively. The former estimates elevation directly from voxel features queried from a single image, while the latter recognizes elevation patterns from a BEV volume built from the left and right voxel features. In-depth analysis reveals how the two models are consistent with, and differ from, their perspective-view counterparts. Experiments on a real-world dataset demonstrate the effectiveness and superiority of the models. The elevation errors of RoadBEV-mono and RoadBEV-stereo are 1.83 cm and 0.56 cm, respectively, and for monocular images, estimating in BEV improves performance by 50%. The models are expected to provide a valuable reference for vision-based autonomous driving technology.

## Main contributions:

1. This paper demonstrates, for the first time, the necessity and superiority of road surface reconstruction in bird's-eye view, both theoretically and experimentally.
2. It introduces two models, RoadBEV-mono and RoadBEV-stereo, and explains in detail the mechanisms of the monocular and stereo schemes.
3. It comprehensively tests and analyzes the performance of the proposed models, providing valuable insights and directions for future research.

## Network design:

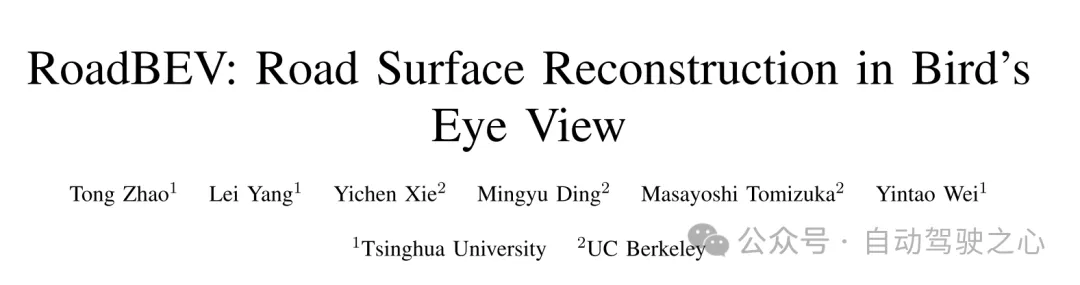

In recent years, the rapid development of unmanned ground vehicles (UGVs) has placed higher demands on onboard perception systems. Real-time understanding of the driving environment and conditions is crucial for accurate motion planning and control [1]-[3]. For a vehicle, the road is the only medium of contact with the physical world, and road surface conditions determine many vehicle characteristics and drivability [4]. As shown in Figure 1(a), road irregularities such as bumps and potholes noticeably degrade ride quality. Real-time perception of road surface conditions, especially geometric elevation, greatly helps improve ride comfort [5], [6].

Compared with other UGV perception tasks such as segmentation and detection, road surface reconstruction (RSR) is an emerging technology that has recently received increasing attention. Like existing perception pipelines, RSR typically uses onboard LiDAR and cameras to capture road surface information. LiDAR directly scans the road profile and produces point clouds [7], [8], from which road elevation along the vehicle trajectory can be extracted without complex algorithms. However, the high cost of LiDAR sensors limits their use in economical mass-produced vehicles. Moreover, unlike larger traffic objects such as vehicles and pedestrians, road irregularities are typically small in magnitude, so point cloud accuracy is critical. Real-time road scanning requires motion compensation and filtering, which in turn demands centimeter-level positioning.

Image-based RSR, as a three-dimensional vision task, is more promising than LiDAR in terms of accuracy and resolution. It also preserves road surface texture, making road perception more comprehensive. Vision-based road elevation reconstruction is essentially a depth estimation problem.
For monocular cameras, depth can be estimated directly, either from a single image via monocular depth estimation or from image sequences via multi-view stereo (MVS) [9]. For stereo cameras, stereo matching regresses disparity maps, which can be converted to depth [10], [11]. Given the camera parameters, the road point cloud in the camera coordinate system can then be recovered, and simple post-processing yields the road structure and elevation. Under the supervision of ground-truth (GT) labels, high-precision and reliable RSR can be achieved.

However, RSR in the image view has inherent shortcomings. Estimating the depth of a given pixel amounts to finding the optimal bin along the direction perpendicular to the image plane (shown as the orange point in Figure 1(b)). This depth direction deviates from the road surface by some angle, so changes and trends in the road profile do not align with the search direction, and cues about elevation change are sparse in the depth view. Furthermore, the depth search range is the same for every pixel, so the model captures the global geometric hierarchy rather than local surface structure; this global but coarse search loses fine road elevation information. Since this paper focuses on elevation in the vertical direction, much of the effort spent along the depth direction is wasted. In perspective views, texture details at long distances are also lost, which further hinders efficient depth regression unless additional prior constraints are introduced [12].
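The conventional image-view pipeline described above (stereo disparity converted to depth, then lifted to a camera-frame point cloud) can be sketched in a few lines of NumPy. This is a generic illustration, not code from the RoadBEV repository: it assumes a rectified stereo pair and an ideal pinhole camera with intrinsic matrix `K` in the OpenCV convention (x right, y down, z forward), and `disparity_to_depth` and `backproject` are hypothetical helper names. Depth follows the standard stereo relation Z = f * B / d, with focal length f in pixels and baseline B in meters.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m, eps=1e-6):
    """Convert a disparity map (pixels) to metric depth with Z = f * B / d.

    Pixels with (near-)zero disparity have no valid depth and are set to NaN.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.full(disparity.shape, np.nan)
    valid = disparity > eps
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def backproject(depth, K):
    """Lift each pixel (u, v) with depth Z to a 3D point in the camera frame.

    Assumes an OpenCV-style pinhole camera: x right, y down, z forward.
    Returns an array of shape (H, W, 3).
    """
    h, w = depth.shape
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # both have shape (H, W)
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)
```

For example, a 100-pixel disparity with f = 1000 px and B = 0.12 m gives a depth of 1.2 m. Road elevation would then be read off the resulting point cloud, which is exactly the indirect, depth-first route the paper argues against.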

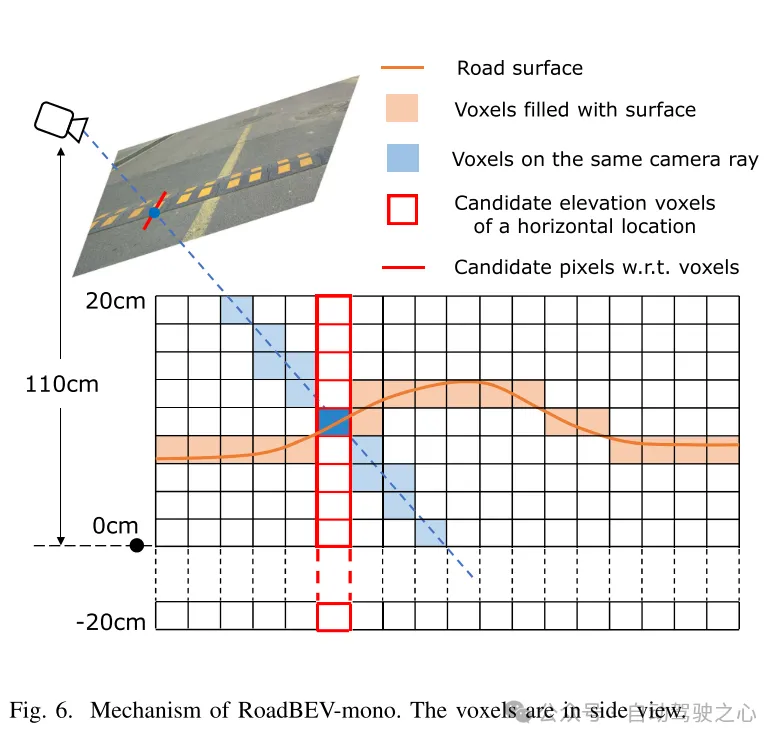

Estimating road elevation from a top view (i.e., bird's-eye view, BEV) is a natural idea, because elevation essentially describes fluctuations in the vertical direction. BEV is an effective paradigm for representing multi-modal and multi-view data in unified coordinates [13], [14]. Recent state-of-the-art results in 3D object detection and segmentation have been achieved by BEV-based approaches [15] rather than perspective-view ones; these approaches attach task heads to view-transformed image features. Figure 1 illustrates the motivation of this paper. Instead of focusing on the global structure in the image view, reconstruction in BEV directly identifies road features within a small, specific range in the vertical direction. Road features projected into BEV densely reflect structural and contour changes, enabling an efficient and refined search. Perspective effects are also suppressed, because the road is represented uniformly on a plane perpendicular to the viewing direction. Road reconstruction based on BEV features is therefore expected to achieve higher performance.

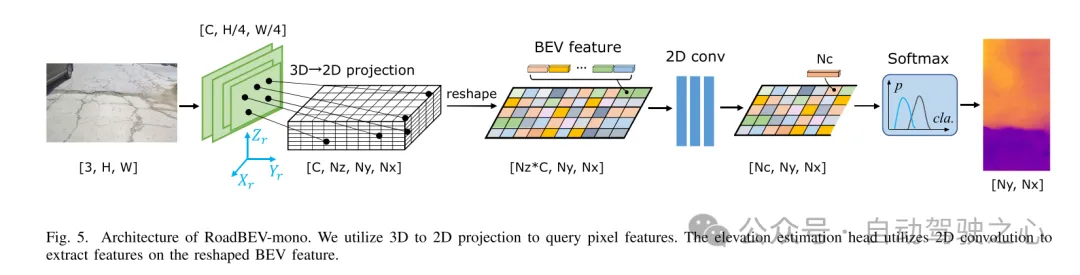

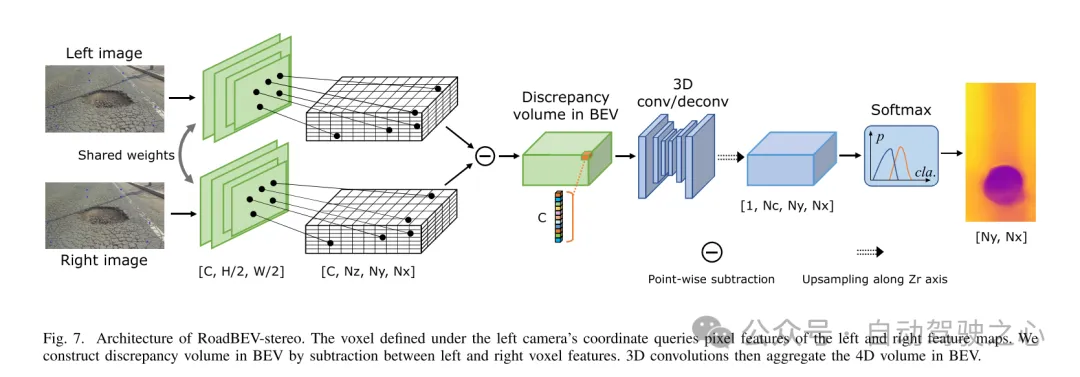

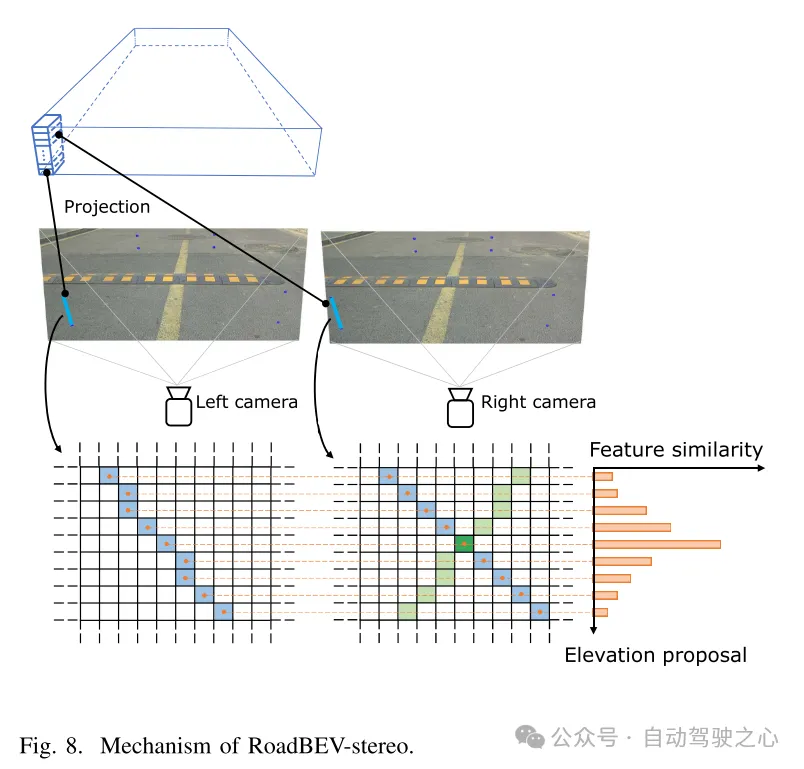

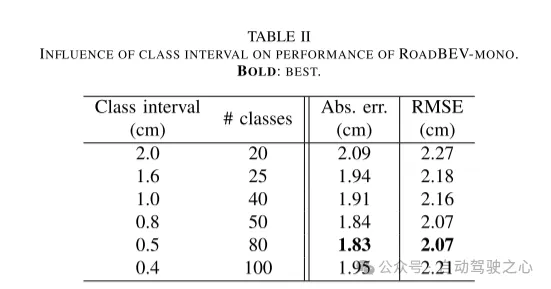

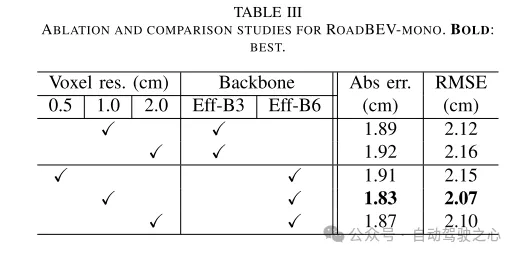

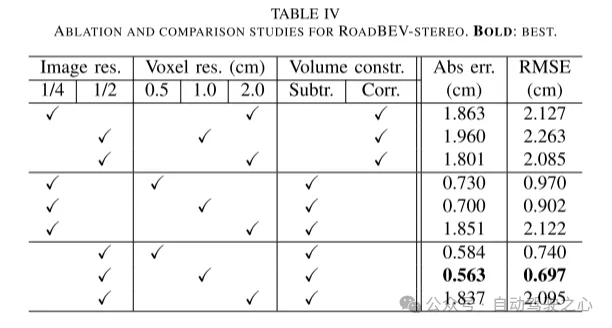

This paper reconstructs the road surface in BEV to address the problems identified above, focusing specifically on road geometry, namely elevation. To exploit both monocular and stereo images and to demonstrate the broad feasibility of BEV perception, it proposes two sub-models, RoadBEV-mono and RoadBEV-stereo. Following the BEV paradigm, voxels of interest are defined to cover potential road relief, and these voxels query pixel features through 3D-to-2D projection. RoadBEV-mono applies an elevation estimation head to the reshaped voxel features. RoadBEV-stereo mirrors stereo matching in the image view: a 4D cost volume is constructed in BEV from the left and right voxel features and aggregated with 3D convolutions. Elevation regression is formulated as classification over predefined bins to make learning more efficient. The models are validated on a real-world dataset previously published by the authors, where they show large advantages over conventional monocular depth estimation and stereo matching methods.
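Two RoadBEV-mono ingredients named above, reshaping voxel features into a 2D BEV map and treating elevation regression as classification over predefined bins, might look like the NumPy sketch below. It is an illustration under assumptions, not the authors' implementation: the (C, Z, Y, X) tensor layout, the bin values, and decoding by a softmax-weighted average of bin centers (a common "soft-argmax" choice) are not confirmed by the article, the function names are hypothetical, and the 2D convolution head that would produce the logits is omitted.

```python
import numpy as np


def reshape_voxels_to_bev(voxel_feat):
    """Fold the vertical axis into channels: (C, Z, Y, X) -> (C * Z, Y, X).

    After this, ordinary 2D convolutions can run on the BEV grid (Y, X).
    """
    c, z, y, x = voxel_feat.shape
    return voxel_feat.reshape(c * z, y, x)


def elevation_from_bin_logits(logits, bin_centers):
    """Decode per-cell elevation from classification scores.

    logits: (K, H, W) scores for K predefined elevation bins per BEV cell.
    bin_centers: (K,) elevation value represented by each bin.
    Returns the softmax-weighted (expected) elevation, shape (H, W).
    """
    shifted = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=0, keepdims=True)
    return np.tensordot(bin_centers, probs, axes=(0, 0))
```

Decoding with an expectation rather than a hard argmax lets the prediction fall between bin centers, so a coarse set of bins can still produce finely resolved elevation values.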

Figure 1. Motivation of this paper. (a) In both monocular and stereo configurations, our reconstruction method in bird's-eye view (BEV) outperforms the image-view method. (b) In image-view depth estimation, the search direction deviates from the road elevation direction. Road profile features are sparse in the depth view, and potholes are hard to identify. (c) In BEV, contour variations such as potholes, curb steps, and even ruts can be captured accurately; elevation features in the vertical direction are denser and easier to identify.

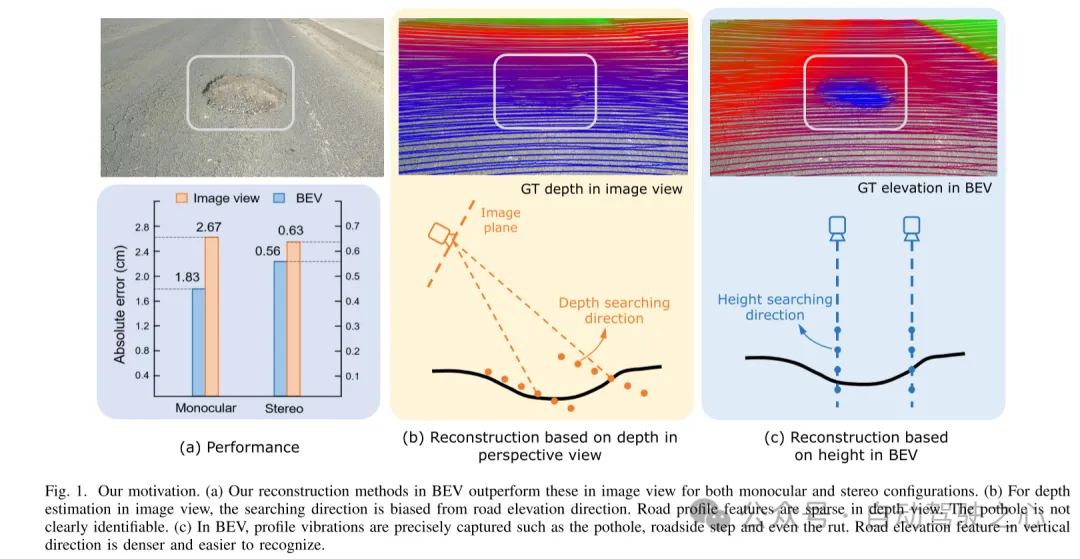

Figure 2. Coordinate representation and generation of ground-truth (GT) elevation labels. (a) Coordinates. (b) Region of interest (ROI) in the image view. (c) ROI in bird's-eye view. (d) Generating GT labels in the grid.

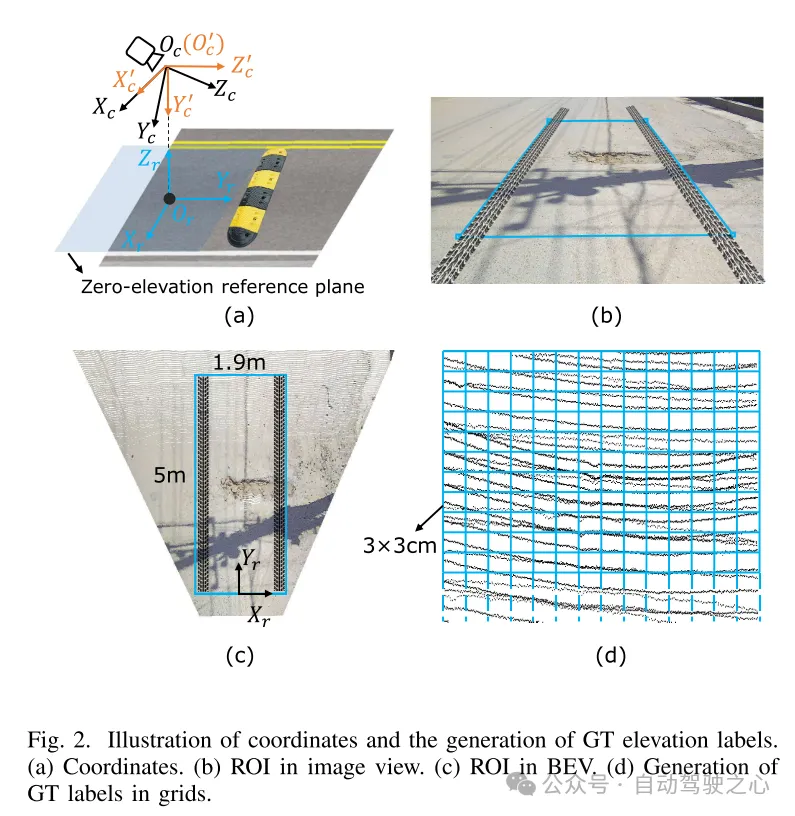

Figure 3. Example road image and its ground-truth (GT) elevation map.
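Figure 2(d) only states that GT labels are generated in a grid. One simple way to do this, assumed here rather than taken from the paper, is to average the heights of all points that fall into each BEV cell and mark empty cells as NaN so they can be masked out of the loss. The input layout (columns x lateral, z forward, h height, already in a road-aligned frame), the ranges, and the function name are all hypothetical.

```python
import numpy as np


def grid_elevation_labels(points, x_range, z_range, cell):
    """Average point heights per BEV grid cell.

    points: (N, 3) array of (x, z, h). Points outside the ROI are ignored.
    Returns an (nz, nx) label map; cells without any point are NaN.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell))
    nz = int(round((z_range[1] - z_range[0]) / cell))
    ix = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    iz = np.floor((points[:, 1] - z_range[0]) / cell).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iz >= 0) & (iz < nz)
    ix, iz, h = ix[keep], iz[keep], points[keep, 2]

    sums = np.zeros((nz, nx))
    counts = np.zeros((nz, nx))
    np.add.at(sums, (iz, ix), h)      # unbuffered add handles repeated cells
    np.add.at(counts, (iz, ix), 1)

    labels = np.full((nz, nx), np.nan)
    filled = counts > 0
    labels[filled] = sums[filled] / counts[filled]
    return labels
```

`np.add.at` is used instead of fancy-index assignment because several points usually land in the same cell, and plain `sums[iz, ix] += h` would keep only one of them.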

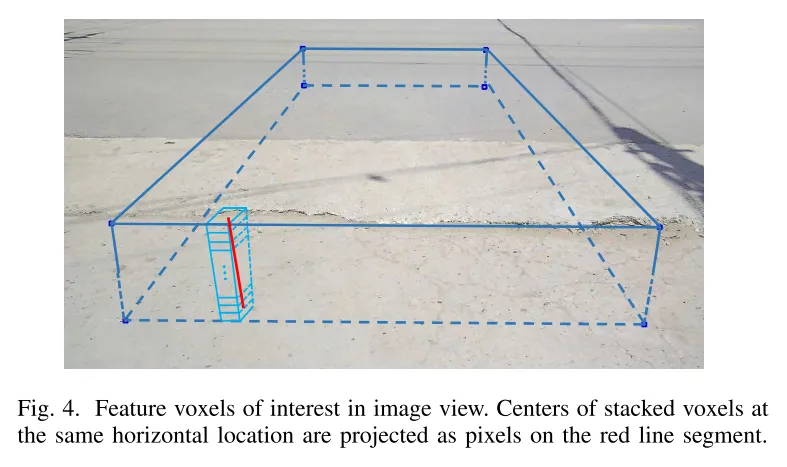

Figure 4. Voxels of interest in the image view. The centers of stacked voxels at the same horizontal position project to pixels on the red line segment.
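The 3D-to-2D feature query behind Figure 4 can be sketched as a pinhole projection followed by a feature lookup. This is a simplified illustration, not the RoadBEV implementation: it assumes voxel centers are given in the camera frame (x right, y down, z forward), uses the feature cell containing each projected point where a real model would more likely interpolate bilinearly, and the function names are hypothetical. Because collinear 3D points project onto a straight line, a column of stacked voxel centers always traces out a line segment in the image; in this camera-aligned sketch the segment is vertical, since the stacked voxels share the same u coordinate.

```python
import numpy as np


def project_to_pixels(points_cam, K):
    """Pinhole projection of (N, 3) camera-frame points to (N, 2) pixel coords.

    Also returns a mask of points that lie in front of the camera (z > 0).
    """
    uvw = points_cam @ K.T                      # rows: [fx*x + cx*z, fy*y + cy*z, z]
    in_front = uvw[:, 2] > 1e-6
    z = np.where(in_front, uvw[:, 2], 1.0)      # avoid dividing by z <= 0
    uv = uvw[:, :2] / z[:, None]
    return uv, in_front


def query_voxel_features(feat, points_cam, K, stride):
    """Look up (C, H, W) image features at projected voxel centers.

    stride: downsampling factor between image pixels and the feature map.
    Uses the feature cell containing each projected point (no interpolation).
    Voxels behind the camera or outside the map receive zero features.
    """
    C, H, W = feat.shape
    uv, valid = project_to_pixels(points_cam, K)
    cols = np.floor(uv[:, 0] / stride).astype(int)
    rows = np.floor(uv[:, 1] / stride).astype(int)
    valid &= (cols >= 0) & (cols < W) & (rows >= 0) & (rows < H)
    out = np.zeros((points_cam.shape[0], C), dtype=feat.dtype)
    out[valid] = feat[:, rows[valid], cols[valid]].T
    return out
```

The returned (N, C) array can be reshaped back to the voxel grid, giving the voxel features that the BEV heads operate on.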

Figure 5. Architecture of RoadBEV-mono. Pixel features are queried via 3D-to-2D projection, and the elevation estimation head applies 2D convolutions to the reshaped bird's-eye view (BEV) features.

Figure 6. Mechanism of RoadBEV-mono. Voxels are shown in side view.

Figure 7. Architecture of RoadBEV-stereo. Voxels defined in the left camera coordinate system query pixel features from the left and right feature maps. A difference volume is constructed in bird's-eye view (BEV) by subtracting the left and right voxel features, and 3D convolutions then aggregate this 4D volume in BEV.
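The difference volume in Figure 7 is an element-wise subtraction of the two voxel feature tensors. The sketch below builds it and then, purely for illustration, replaces the learned 3D convolution aggregation with a hand-crafted rule: for each BEV cell, pick the vertical bin where left and right features agree best. That rule is a stand-in chosen here, not the paper's method; the (C, Z, Y, X) layout and the function names are assumptions.

```python
import numpy as np


def difference_volume(left_vox, right_vox):
    """Element-wise difference of left and right voxel features.

    Both inputs have shape (C, Z, Y, X); so does the resulting 4D volume.
    """
    if left_vox.shape != right_vox.shape:
        raise ValueError("left and right voxel features must have the same shape")
    return left_vox - right_vox


def naive_elevation(diff_vol, z_centers):
    """Illustrative stand-in for learned aggregation.

    Per BEV cell, choose the vertical bin whose left/right features agree best,
    i.e. whose difference vector has the smallest L2 norm over channels.
    """
    cost = np.linalg.norm(diff_vol, axis=0)   # (Z, Y, X)
    best = np.argmin(cost, axis=0)            # (Y, X) index of best vertical bin
    return z_centers[best]
```

The intuition matches stereo matching in the image view: a voxel lying on the true road surface projects to corresponding points in both images, so its left and right features should be similar and their difference small.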

Figure 8. Mechanism of RoadBEV-stereo.

## Experimental results:

Figure 9. Training loss of (a) RoadBEV-mono and (b) RoadBEV-stereo.

Figure 10. Elevation error versus distance, compared with state-of-the-art monocular and stereo models.

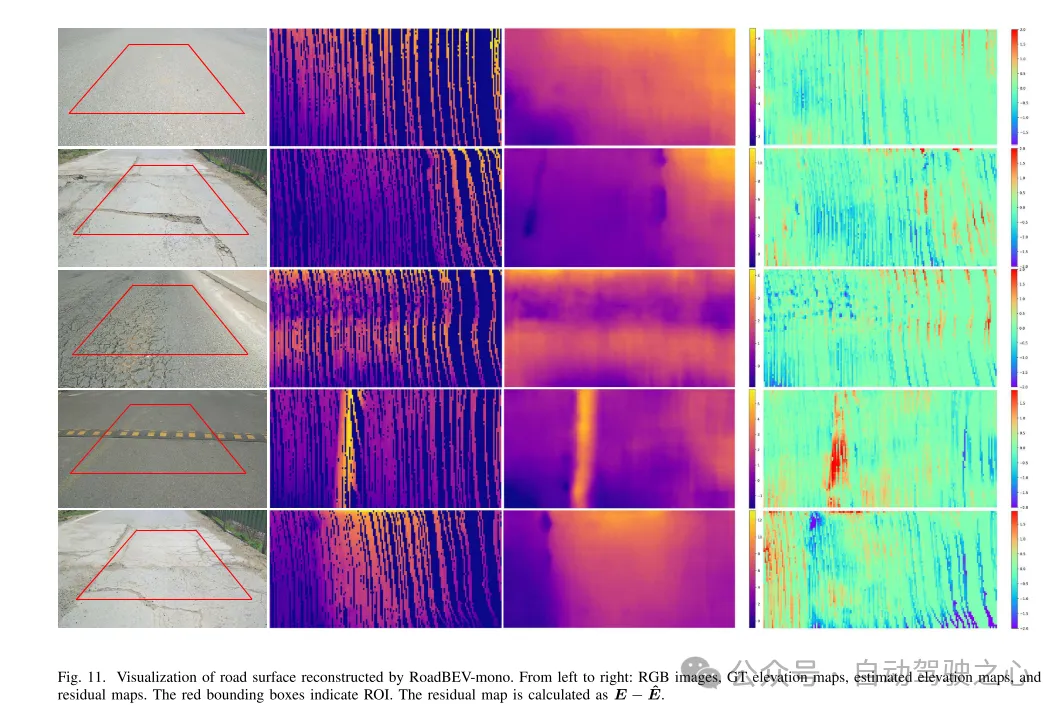

Figure 11. Visualization of road surface reconstructed by RoadBEV-mono.

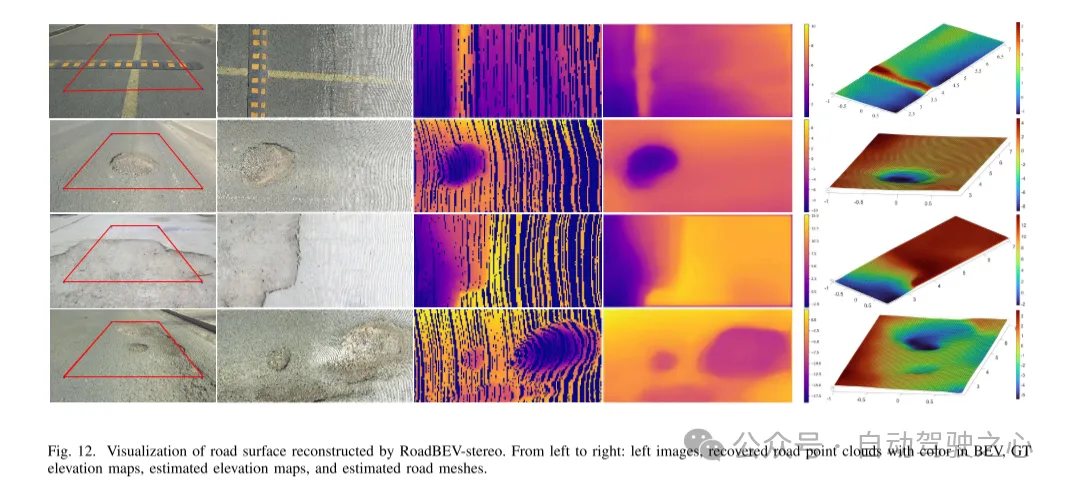

Figure 12. Visualization of road surface reconstructed by RoadBEV-stereo.

## Summary:

This article is the first to reconstruct road surface elevation in bird's-eye view. It proposes and analyzes two models based on monocular and stereo images, named RoadBEV-mono and RoadBEV-stereo, respectively. It finds that monocular estimation and stereo matching in BEV share the same mechanisms as in the perspective view, and that they improve by narrowing the search range and mining features directly along the elevation direction. Comprehensive experiments on a real-world dataset verify the feasibility and superiority of the proposed BEV volume, estimation head, and parameter settings. For monocular cameras, reconstruction in BEV improves performance by 50% over the perspective view. In BEV, stereo cameras in turn reduce the elevation error to roughly one third of that of a monocular camera. The article also provides in-depth analysis of, and guidance on, the models. This pioneering exploration offers a valuable reference for further research and applications in BEV perception, 3D reconstruction, and 3D detection.
