TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

According to news from this site on April 17, TrendForce recently released a report projecting that strong demand for Nvidia's new Blackwell platform products will drive TSMC's total CoWoS packaging capacity up by more than 150% in 2024.

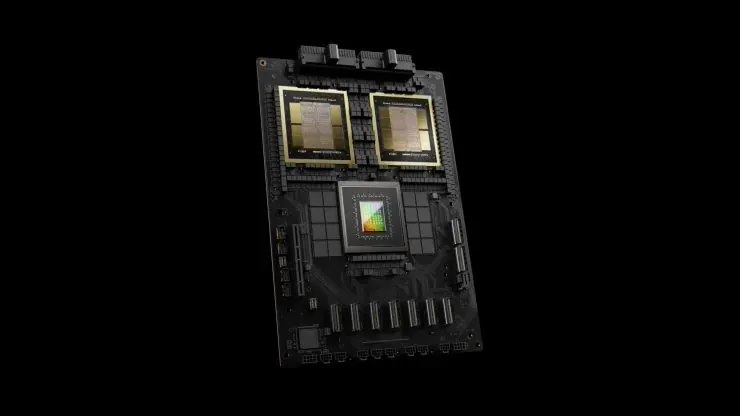

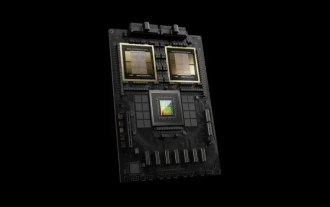

Nvidia's new Blackwell platform products include the B-series GPUs and the GB200 accelerator, which integrates Nvidia's own Arm-based Grace CPU.

TrendForce notes that the supply chain is currently very optimistic about GB200. Shipments in 2025 are estimated to exceed one million units, accounting for 40-50% of Nvidia's high-end GPUs.

Nvidia plans to deliver products such as GB200 and B100 in the second half of the year. However, upstream wafer packaging must adopt CoWoS-L technology, which is more complex and demands higher precision, and verification and testing will take longer. TrendForce therefore believes that these products will not begin to ramp up in volume until the fourth quarter of this year or early next year.

Because Nvidia's B series, including GB200, B100, and B200, will consume more CoWoS capacity, TSMC has also raised its CoWoS capacity target for full-year 2024. Monthly capacity is estimated to approach 40,000 wafers by the end of the year, an increase of more than 150% compared with total capacity in 2023.

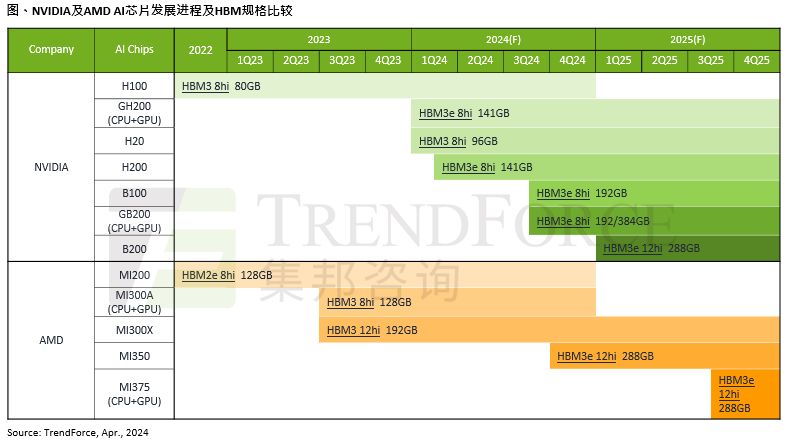

In addition, the report expects that, driven by Nvidia and AI development, HBM3e will become the market mainstream in the second half of the year. TrendForce predicts that Nvidia will begin to expand shipments of the HBM3e-equipped H200 in the second half of this year, replacing the H100 as the mainstream product. GB200 and B100 will later adopt HBM3e as well.

Reference

TrendForce: Demand for Nvidia's new Blackwell platform products is expected to rise, driving TSMC's total CoWoS capacity up by more than 150% in 2024


Innolux plans to mass-produce fan-out panel-level semiconductor packaging technology by the end of the year

Aug 07, 2024 pm 06:18 PM

Innolux plans to mass-produce fan-out panel-level semiconductor packaging technology by the end of the year

Aug 07, 2024 pm 06:18 PM

According to news from this site on August 6, Yang Zhuxiang, general manager of Innolux Corporation, said yesterday (August 5) that the company is actively deploying and promoting semiconductor fan-out panel-level packaging (FOPLP) and is expected to mass-produce ChipFirst before the end of this year. The contribution of process technology to revenue will be apparent in the first quarter of next year. Fenye Innolux stated that it is expected to mass-produce the redistribution layer (RDLFirst) process technology for mid-to-high-end products in the next 1-2 years, and will work with partners to develop the most technically difficult glass drilling (TGV) process, which will take another 2-3 years. It can be put into mass production within a year. Yang Zhuxiang said that Innolux’s FOPLP technology is “ready for mass production” and will enter the market with low-end and mid-range products.

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

According to news from this site on June 2, at the ongoing Huang Renxun 2024 Taipei Computex keynote speech, Huang Renxun introduced that generative artificial intelligence will promote the reshaping of the full stack of software and demonstrated its NIM (Nvidia Inference Microservices) cloud-native microservices. Nvidia believes that the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang Renxun believes that generative artificial intelligence will promote its full-stack reshaping. To facilitate the deployment of AI services by enterprises of all sizes, NVIDIA launched NIM (Nvidia Inference Microservices) cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

According to news from this site on April 17, TrendForce recently released a report, believing that demand for Nvidia's new Blackwell platform products is bullish, and is expected to drive TSMC's total CoWoS packaging production capacity to increase by more than 150% in 2024. NVIDIA Blackwell's new platform products include B-series GPUs and GB200 accelerator cards integrating NVIDIA's own GraceArm CPU. TrendForce confirms that the supply chain is currently very optimistic about GB200. It is estimated that shipments in 2025 are expected to exceed one million units, accounting for 40-50% of Nvidia's high-end GPUs. Nvidia plans to deliver products such as GB200 and B100 in the second half of the year, but upstream wafer packaging must further adopt more complex products.

It is reported that Zhuang Zishou will be stationed in the United States next month to promote the construction of TSMC's US factory: sprinting to put into production in 2025

Apr 10, 2024 pm 04:52 PM

It is reported that Zhuang Zishou will be stationed in the United States next month to promote the construction of TSMC's US factory: sprinting to put into production in 2025

Apr 10, 2024 pm 04:52 PM

According to news from this website on April 10, according to the Liberty Times, TSMC plans to send Dr. Zhuang Zishou to the United States in May this year to cooperate with Wang Yinglang to jointly promote the construction of TSMC’s US factory. The U.S. Department of Commerce has currently finalized the amount of subsidies for TSMC, Intel, and Samsung, but TSMC's factories in the United States still have many problems. TSMC’s leadership hopes to promote the implementation of advanced processes as soon as possible by sending Dr. Zhuang Zishou to work with Wang Yinglang, who specializes in production and manufacturing. Check out the official TSMC leadership team on this site: Dr. Zhuang Zishou is currently the deputy general manager of factory affairs at TSMC, responsible for the planning, design, construction and maintenance of new factories, as well as the operation and upgrade of existing factory facilities. Dr. Zhuang joined TSMC in 1989 as a

The computer I spent 300 yuan to assemble successfully ran through the local large model

Apr 12, 2024 am 08:07 AM

The computer I spent 300 yuan to assemble successfully ran through the local large model

Apr 12, 2024 am 08:07 AM

If 2023 is recognized as the first year of AI, then 2024 is likely to be a key year for the popularization of large AI models. In the past year, a large number of large AI models and a large number of AI applications have emerged. Manufacturers such as Meta and Google have also begun to launch their own online/local large models to the public, similar to "AI artificial intelligence" that is out of reach. The concept suddenly came to people. Nowadays, people are increasingly exposed to artificial intelligence in their lives. If you look carefully, you will find that almost all of the various AI applications you have access to are deployed on the "cloud". If you want to build a device that can run large models locally, then the hardware is a brand-new AIPC priced at more than 5,000 yuan. For ordinary people,

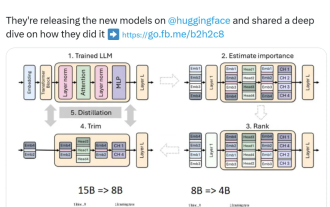

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama3.1 series of models, which includes Meta’s largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama3.1 is considered to usher in a new era of open source. However, although the new generation models are powerful in performance, they still require a large amount of computing resources when deployed. Therefore, another trend has emerged in the industry, which is to develop small language models (SLM) that perform well enough in many language tasks and are also very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can gradually obtain smaller language models from an initially larger model. Turing Award Winner, Meta Chief A

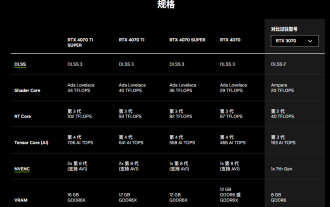

Nvidia releases GDDR6 memory version of GeForce RTX 4070 graphics card, available from September

Aug 21, 2024 am 07:31 AM

Nvidia releases GDDR6 memory version of GeForce RTX 4070 graphics card, available from September

Aug 21, 2024 am 07:31 AM

According to news from this site on August 20, multiple sources reported in July that Nvidia RTX4070 and above graphics cards will be in tight supply in August due to the shortage of GDDR6X video memory. Subsequently, speculation spread on the Internet about launching a GDDR6 memory version of the RTX4070 graphics card. As previously reported by this site, Nvidia today released the GameReady driver for "Black Myth: Wukong" and "Star Wars: Outlaws". At the same time, the press release also mentioned the release of the GDDR6 video memory version of GeForce RTX4070. Nvidia stated that the new RTX4070's specifications other than the video memory will remain unchanged (of course, it will also continue to maintain the price of 4,799 yuan), providing similar performance to the original version in games and applications, and related products will be launched from