The journey to building large-scale language models in 2024

2024 is set to bring a technological leap in large language models (LLMs) as researchers and engineers continue to push the boundaries of natural language processing. These parameter-rich models are changing how we interact with machines, enabling more natural conversations, code generation, and complex reasoning. Building them is no easy task, however: it involves complex data preparation, advanced training techniques, and scalable inference. This review covers the technical details of building LLMs, from data sourcing to training innovations and alignment strategies.

2024 promises to be a landmark year for large language models (LLMs) as researchers and engineers push the boundaries of what is possible in natural language processing. These large-scale neural networks, with billions or even trillions of parameters, are set to change the way we interact with machines, enabling more natural and open-ended conversations, code generation, and multimodal reasoning.

However, building such massive LLMs is not a simple matter. It requires a carefully curated pipeline, from data sourcing and preparation to advanced training techniques and scalable inference. In this post, we’ll take a deep dive into the technical complexities involved in building these cutting-edge language models, exploring the latest innovations and challenges across the stack.

Data preparation

1. Data source

The foundation of any LLM is the data it is trained on. Modern models ingest staggering amounts of text (often over a trillion tokens) from web crawls, code repositories, books, and more. Common data sources include:

General web-crawl corpora such as Common Crawl

Code repositories such as GitHub and Software Heritage

Curated datasets such as Wikipedia and books (public domain and copyrighted)

Synthetically generated data

2. Data filtering

Simply collecting all available data is usually not optimal because it can introduce noise and bias. Careful data filtering techniques are therefore employed:

Quality filtering

Heuristic filtering based on document properties such as length and language

Classifier-based filtering, trained on examples of good and bad data

Perplexity thresholding with a language model

Domain-specific filtering

Inspect the effect of filters on domain-specific subsets

Develop custom rules and thresholds

Selection strategy

Deterministic hard threshold

Probabilistic random sampling
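The two selection strategies above can be contrasted with a minimal sketch. The scoring heuristic, its weights, and the function names (`quality_score`, `keep_hard`, `keep_sampled`) are illustrative assumptions, not a recipe from any particular pipeline; real filters combine many more signals.

```python
import random

def quality_score(doc: str) -> float:
    """Hypothetical heuristic score in [0, 1] built from simple document properties."""
    words = doc.split()
    if not words:
        return 0.0
    # Longer documents score higher, capped at 50 words (arbitrary illustrative cap).
    length_score = min(len(words) / 50.0, 1.0)
    # Share of purely alphabetic words, a crude proxy for natural-language text.
    alpha_ratio = sum(w.isalpha() for w in words) / len(words)
    return 0.5 * length_score + 0.5 * alpha_ratio

def keep_hard(doc: str, threshold: float = 0.6) -> bool:
    """Deterministic selection: keep the document iff its score clears the threshold."""
    return quality_score(doc) >= threshold

def keep_sampled(doc: str, rng: random.Random) -> bool:
    """Probabilistic selection: keep the document with probability equal to its score."""
    return rng.random() < quality_score(doc)

if __name__ == "__main__":
    rng = random.Random(0)
    docs = [" ".join(["word"] * 60), "12 34 !!", "a short but clean sentence"]
    for d in docs:
        print(round(quality_score(d), 2), keep_hard(d), keep_sampled(d, rng))
```

A hard threshold is reproducible and easy to audit, while sampling keeps some borderline documents and so preserves more diversity near the cutoff.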

3. Deduplication

Large web corpora contain significant overlap, and redundant documents can cause the model to effectively "memorize" the over-represented regions of the data. Efficient near-duplicate detection algorithms such as MinHash are used to reduce this redundancy bias.
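As a rough illustration of how MinHash estimates document similarity, here is a minimal standard-library sketch. The shingle size, the number of hash functions, and the use of seeded BLAKE2b as the hash family are assumptions made for the example; production pipelines typically add locality-sensitive hashing on top so that not every pair of documents has to be compared.

```python
import hashlib

def shingles(text: str, n: int = 3) -> set:
    """Set of word n-grams (shingles) for a document."""
    words = text.lower().split()
    if len(words) < n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}

def _hash(seed: int, item: str) -> int:
    """One member of a seeded hash family (assumption: BLAKE2b with the seed as prefix)."""
    data = f"{seed}:{item}".encode("utf-8")
    return int.from_bytes(hashlib.blake2b(data, digest_size=8).digest(), "big")

def minhash_signature(text: str, num_hashes: int = 64) -> list:
    """For each hash function, keep the minimum hash value over all shingles."""
    items = shingles(text)
    return [min(_hash(seed, s) for s in items) for seed in range(num_hashes)]

def estimated_jaccard(sig_a: list, sig_b: list) -> float:
    """The fraction of matching signature slots estimates the Jaccard similarity."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)

if __name__ == "__main__":
    a = "the quick brown fox jumps over the lazy dog near the river bank"
    b = "the quick brown fox jumps over the lazy dog near the river shore"
    print(estimated_jaccard(minhash_signature(a), minhash_signature(b)))
```

Documents whose estimated similarity exceeds a chosen cutoff would be treated as near-duplicates, with only one copy kept.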

4. Tokenization

Once we have a high-quality, deduplicated text corpus, we need to tokenize it, that is, convert it into sequences of tokens that the neural network can ingest during training. Byte-level BPE encoding is the ubiquitous choice and handles code, mathematical notation, and other contexts gracefully. The tokenizer should be trained on a careful sample of the entire dataset to avoid overfitting the tokenizer itself.
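To make the byte-level BPE idea concrete, the following is a small, unoptimized sketch: it starts from the 256 possible byte values and repeatedly merges the most frequent adjacent pair into a new token. The function names are hypothetical and this is not the implementation of any specific tokenizer library.

```python
from collections import Counter

def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train_bpe(text: str, num_merges: int) -> dict:
    """Learn up to `num_merges` merges over the UTF-8 bytes of `text`."""
    ids = list(text.encode("utf-8"))   # base vocabulary: byte values 0..255
    merges = {}                        # (left_id, right_id) -> new token id
    next_id = 256
    for _ in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < 2:
            break                      # nothing repeats, so merging gains nothing
        merges[pair] = next_id
        ids = merge(ids, pair, next_id)
        next_id += 1
    return merges

def encode(text: str, merges: dict) -> list:
    ids = list(text.encode("utf-8"))
    # Dicts preserve insertion order, so merges are applied in the order learned.
    for pair, new_id in merges.items():
        ids = merge(ids, pair, new_id)
    return ids

def decode(ids: list, merges: dict) -> str:
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")

if __name__ == "__main__":
    merges = train_bpe("low lower lowest " * 10, num_merges=10)
    ids = encode("lowest lower", merges)
    print(ids, decode(ids, merges))
```

Because the base vocabulary is raw bytes, any input (code, math symbols, non-Latin scripts) can be encoded without an unknown-token fallback.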

5. Data Quality Assessment

Assessing data quality is a challenging but crucial task, especially at such a large scale. Techniques employed include:

Monitoring high-signal benchmarks such as CommonsenseQA, HellaSwag, and OpenBookQA while training on data subsets

Manual inspection of domains/URLs and inspection of retained/dropped examples

Data Clustering and Visualization Tools

Training auxiliary tokenizers to analyze the resulting tokens

Training

1. Model Parallelism

The sheer scale of modern LLMs (often too large to fit on a single GPU or even a single machine) requires advanced parallelization schemes to split the model across multiple devices and machines in various ways:

Data Parallelism: Spread batches across multiple devices

Tensor Parallelism: Split model weights and activations across devices

Pipeline Parallelism: Treat the model as a series of stages and pipeline them across devices

Sequence Parallelism: Split individual input sequences across devices to scale further

Combining these four strategies (often called 4D parallelism) makes it possible to scale training to models with trillions of parameters.
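The difference between data and tensor parallelism can be illustrated on a single matrix multiply. The sketch below simulates "devices" as list slices in plain Python; the helper names are hypothetical, and real systems perform the same splits with GPU collectives such as all-gather rather than list concatenation.

```python
def matmul(x, w):
    """Plain matrix multiply on nested lists: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]

def data_parallel_matmul(x, w, num_devices):
    """Data parallelism: each device holds the full weights and a slice of the batch."""
    if len(x) % num_devices != 0:
        raise ValueError("batch size must be divisible by num_devices")
    size = len(x) // num_devices
    chunks = [x[d * size:(d + 1) * size] for d in range(num_devices)]
    # Each chunk would be processed on its own device; outputs are stacked by row.
    return [row for chunk in chunks for row in matmul(chunk, w)]

def tensor_parallel_matmul(x, w, num_devices):
    """Tensor parallelism: each device holds a column shard of the weights."""
    m = len(w[0])
    if m % num_devices != 0:
        raise ValueError("output width must be divisible by num_devices")
    size = m // num_devices
    shards = [[row[d * size:(d + 1) * size] for row in w] for d in range(num_devices)]
    partial = [matmul(x, shard) for shard in shards]  # one partial output per device
    # Simulated all-gather: concatenate the per-device outputs along the column axis.
    return [sum((p[i] for p in partial), []) for i in range(len(x))]

if __name__ == "__main__":
    x = [[1, 2], [3, 4], [5, 6], [7, 8]]   # batch of 4 inputs
    w = [[1, 0, 2, 1], [0, 1, 1, 3]]       # 2 x 4 weight matrix
    print(matmul(x, w) == data_parallel_matmul(x, w, 2) == tensor_parallel_matmul(x, w, 2))
```

Both splits reproduce the single-device result; they differ in what each device must store (full weights versus a weight shard) and in what has to be communicated.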

2. Efficient attention

The main computational bottleneck lies in the self-attention operation at the core of the Transformer architecture. Methods such as FlashAttention and factorized kernels provide highly optimized attention implementations that avoid materializing the full attention matrix.
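The key trick behind avoiding the full attention matrix is to process keys and values in blocks while maintaining a running softmax. The sketch below shows this for a single query vector in plain Python and checks it against a naive reference. It is a simplified illustration of the tiling and online-softmax idea, not the fused GPU kernel itself, and the function names and block size are assumptions.

```python
import math

def naive_attention(q, keys, values):
    """Reference: computes all scores for one query before normalizing."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(dim)]

def tiled_attention(q, keys, values, block_size=2):
    """Streams over key/value blocks, keeping only a running max, normalizer, and output."""
    scale = 1.0 / math.sqrt(len(q))
    dim = len(values[0])
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale previously accumulated results to the new running maximum.
        correction = math.exp(running_max - new_max)
        weights = [math.exp(s - new_max) for s in scores]
        running_sum = running_sum * correction + sum(weights)
        acc = [a * correction + sum(w * v[j] for w, v in zip(weights, v_blk))
               for j, a in enumerate(acc)]
        running_max = new_max
    return [a / running_sum for a in acc]

if __name__ == "__main__":
    q = [1.0, 0.5]
    keys = [[0.1, 0.2], [1.0, -1.0], [0.5, 0.5], [-0.3, 0.8], [2.0, 0.1]]
    values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, -1.0], [-1.0, 3.0]]
    print(naive_attention(q, keys, values))
    print(tiled_attention(q, keys, values))
```

Memory use in the tiled version depends on the block size rather than on the sequence length, which is what makes long contexts tractable.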

3. Stable training

Achieving stable convergence at such an extreme scale is a major challenge. Innovations in this area include:

Improved initialization schemes

Hyperparameter transfer methods such as μTransfer (muTransfer)

Optimized learning rate schedules such as cosine annealing
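A cosine annealing schedule is simple enough to write out directly. The sketch below adds a linear warmup, which is a common pairing but an assumption here; the peak rate, minimum rate, and warmup length are illustrative values only.

```python
import math

def cosine_lr(step, total_steps, peak_lr=3e-4, min_lr=3e-5, warmup_steps=100):
    """Linear warmup to peak_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

if __name__ == "__main__":
    for step in (0, 50, 100, 550, 1000):
        print(step, cosine_lr(step, total_steps=1000))
```

The rate rises during warmup, peaks when warmup ends, and then decays smoothly to the minimum by the final step.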

4. Architectural Innovation

Recent breakthroughs in model architecture have greatly improved the capabilities of LLMs:

Mixture-of-Experts (MoE): Activate only a subset of the model's parameters for each example, selected by a routing network

Mamba: a selective state space model (SSM) architecture whose compute scales linearly with sequence length, offering an alternative to attention
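The routing step in a Mixture-of-Experts layer can be sketched in a few lines. This example assumes top-k routing with a softmax over the selected experts' logits, which is one common design among several; the toy experts and router weights are made up for illustration.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, router_weights, experts, top_k=2):
    """x: input vector; router_weights: one weight vector per expert; experts: callables."""
    # The router scores every expert, but only the top_k experts are evaluated.
    logits = [sum(a * b for a, b in zip(x, w)) for w in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # renormalize over the chosen experts
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

if __name__ == "__main__":
    # Toy experts: each one scales its input by a different constant.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    print(moe_layer([2.0, 1.0], router_weights, experts))
```

With four experts and `top_k=2`, only half of the expert parameters take part in any single forward pass, which is how MoE models grow total parameter count without a matching growth in per-token compute.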

Alignment

While capability is crucial, we also need LLMs that are safe, truthful, and aligned with human values and guidance. This is the goal of the emerging field of AI alignment:

Reinforcement Learning from Human Feedback (RLHF): Fine-tune models using reward signals derived from human preferences over model outputs; methods such as PPO and DPO are being actively explored.

Constitutional AI: Encodes a set of rules and principles into the model during training, instilling desired behaviors from the ground up.
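To give a sense of what preference-based fine-tuning optimizes, here is a minimal sketch of the DPO loss for a single preference pair. The inputs are assumed to be summed log-probabilities of the chosen and rejected responses under the policy being trained and under a frozen reference model; the argument names and the `beta` value are illustrative.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one pair: -log(sigmoid(beta * margin))."""
    # How much more the policy prefers the chosen response than the reference does.
    margin = ((policy_chosen_logp - ref_chosen_logp)
              - (policy_rejected_logp - ref_rejected_logp))
    z = beta * margin
    # Numerically stable form of -log(sigmoid(z)) = log(1 + exp(-z)).
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))

if __name__ == "__main__":
    print(dpo_loss(-5.0, -7.0, -5.0, -7.0))  # policy equals reference
    print(dpo_loss(-4.0, -8.0, -5.0, -7.0))  # policy favors the chosen response more
```

The loss falls as the policy raises the likelihood of the preferred response relative to the reference, and no separate reward model or sampling loop is needed, in contrast to PPO-based RLHF.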

Inference

Once our LLM is trained, we need to optimize it for efficient inference, serving model outputs to users with minimal latency:

Quantization: Compress large model weights into a low-precision format such as int8 to reduce compute cost and memory footprint; commonly used techniques and formats include GPTQ, GGML, and NF4.

Speculative decoding: Accelerate inference by having a small draft model propose several tokens that the larger model then verifies; related methods such as Medusa use extra decoding heads instead of a separate draft model.

System optimizations: Just-in-time compilation, kernel fusion, and CUDA graphs can further increase speed.
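The basic idea of int8 quantization can be shown with symmetric absmax scaling over one tensor. This is the simplest possible scheme and is only a sketch; methods such as GPTQ and formats such as NF4 are considerably more sophisticated, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: floats -> ints in [-127, 127] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 values and the scale."""
    return [v * scale for v in q]

if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.9]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print(q)
    print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight is stored in one byte instead of two or four, and the rounding error per weight is bounded by about half the scale.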

Conclusion

Building large-scale language models in 2024 requires careful architecture and innovation across the entire stack, from data sourcing and cleaning to scalable training systems and efficient inference deployment. We've only covered a few highlights, but the field is evolving at an incredible pace, with new techniques and discoveries emerging all the time. Data quality assessment, stable convergence at scale, alignment with human values, and robust real-world deployment all remain open challenges. But the potential of LLMs is huge, so stay tuned as we push the boundaries of what’s possible with language AI in 2024 and beyond!

The above is the detailed content of The journey to building large-scale language models in 2024. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

How to run the h5 project

Apr 06, 2025 pm 12:21 PM

How to run the h5 project

Apr 06, 2025 pm 12:21 PM

Running the H5 project requires the following steps: installing necessary tools such as web server, Node.js, development tools, etc. Build a development environment, create project folders, initialize projects, and write code. Start the development server and run the command using the command line. Preview the project in your browser and enter the development server URL. Publish projects, optimize code, deploy projects, and set up web server configuration.

Gitee Pages static website deployment failed: How to troubleshoot and resolve single file 404 errors?

Apr 04, 2025 pm 11:54 PM

Gitee Pages static website deployment failed: How to troubleshoot and resolve single file 404 errors?

Apr 04, 2025 pm 11:54 PM

GiteePages static website deployment failed: 404 error troubleshooting and resolution when using Gitee...

Does H5 page production require continuous maintenance?

Apr 05, 2025 pm 11:27 PM

Does H5 page production require continuous maintenance?

Apr 05, 2025 pm 11:27 PM

The H5 page needs to be maintained continuously, because of factors such as code vulnerabilities, browser compatibility, performance optimization, security updates and user experience improvements. Effective maintenance methods include establishing a complete testing system, using version control tools, regularly monitoring page performance, collecting user feedback and formulating maintenance plans.

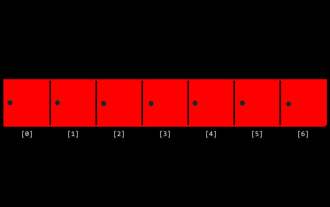

CS-Week 3

Apr 04, 2025 am 06:06 AM

CS-Week 3

Apr 04, 2025 am 06:06 AM

Algorithms are the set of instructions to solve problems, and their execution speed and memory usage vary. In programming, many algorithms are based on data search and sorting. This article will introduce several data retrieval and sorting algorithms. Linear search assumes that there is an array [20,500,10,5,100,1,50] and needs to find the number 50. The linear search algorithm checks each element in the array one by one until the target value is found or the complete array is traversed. The algorithm flowchart is as follows: The pseudo-code for linear search is as follows: Check each element: If the target value is found: Return true Return false C language implementation: #include#includeintmain(void){i

How to convert xml to excel

Apr 03, 2025 am 08:54 AM

How to convert xml to excel

Apr 03, 2025 am 08:54 AM

There are two ways to convert XML to Excel: use built-in Excel features or third-party tools. Third-party tools include XML to Excel converter, XML2Excel, and XML Candy.

How to quickly build a foreground page in a React Vite project using AI tools?

Apr 04, 2025 pm 01:45 PM

How to quickly build a foreground page in a React Vite project using AI tools?

Apr 04, 2025 pm 01:45 PM

How to quickly build a front-end page in back-end development? As a backend developer with three or four years of experience, he has mastered the basic JavaScript, CSS and HTML...

Can you learn how to make H5 pages by yourself?

Apr 06, 2025 am 06:36 AM

Can you learn how to make H5 pages by yourself?

Apr 06, 2025 am 06:36 AM

It is feasible to self-study H5 page production, but it is not a quick success. It requires mastering HTML, CSS, and JavaScript, involving design, front-end development, and back-end interaction logic. Practice is the key, and learn by completing tutorials, reviewing materials, and participating in open source projects. Performance optimization is also important, requiring optimization of images, reducing HTTP requests and using appropriate frameworks. The road to self-study is long and requires continuous learning and communication.

How to optimize the performance of XML conversion into images?

Apr 02, 2025 pm 08:12 PM

How to optimize the performance of XML conversion into images?

Apr 02, 2025 pm 08:12 PM

XML to image conversion is divided into two steps: parsing XML to extract image information and generating images. Performance optimization can be started with selecting parsing methods (such as SAX), graphics libraries (such as PIL), and utilizing multithreading/GPU acceleration. SAX parsing is more suitable for handling large XML. The PIL library is simple and easy to use but has limited performance. Making full use of multithreading and GPU acceleration can significantly improve performance.