Innovative interactive methods! Google Pixel tablet tests 'Look and Sign' gesture control

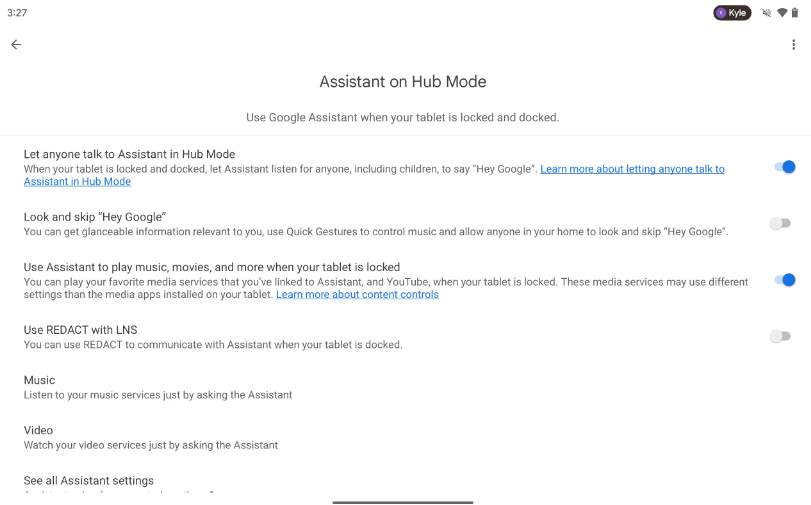

Technology outlet PiunikaWeb recently reported that Google is preparing to introduce a new feature called "Look and Sign" on its upcoming Pixel Tablet. The feature is intended to improve how users interact with the device's AI.

"Look and Sign" will let users issue commands through simple gestures and interact more directly with Google Assistant. For example, users can signal their intent with a thumbs-up or another clear gesture, which simplifies communication with the device and reduces reliance on spoken instructions. The feature should give ordinary users a more convenient way to interact, and it is also expected to benefit users who communicate in sign language, making it an important accessibility feature.

Google previously launched a similar "Look and Talk" feature on its Nest Hub Max. Using the device's camera, it lets users skip the "OK Google" wake word: they can simply face the Nest Hub Max and speak, and the device will respond. "Look and Sign" is likely to further enrich and extend the ways users interact with smart devices, bringing AI applications closer to user needs.

