OpenAI offers new fine-tuning and customization options

Fine-tuning plays a vital role in building useful artificial intelligence tools. Refining a pre-trained model on a more targeted data set greatly improves its grasp of domain-specific terminology and lets users add task-specific knowledge to an existing model.

While this process takes time, it is often three times more cost-effective than training a model from scratch. That value is reflected in OpenAI’s recent announcement that it is expanding its Custom Model program and adding several new features to its fine-tuning API.

New features of the self-service fine-tuning API

OpenAI launched the self-service fine-tuning API for GPT-3.5 in August 2023, and the AI community received it enthusiastically. OpenAI reports that thousands of organizations have used the API to train tens of thousands of models, for example to generate code in a specific programming language, summarize text into a specific format, or create personalized content based on user behavior.
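To make the idea of "more targeted data" concrete, here is a minimal sketch of how training examples for a chat model are commonly prepared: one JSON object per line, each holding a `messages` list that ends with the desired assistant reply. The example content, the helper names `to_jsonl` and `is_valid_example`, and the simplified validation rules are illustrative assumptions, not data or checks from any deployment described in this article.

```python
import json

# Hypothetical training examples; the content is made up for illustration.
examples = [
    {
        "messages": [
            {"role": "system", "content": "You summarize support tickets in one sentence."},
            {"role": "user", "content": "Customer cannot log in after resetting their password twice."},
            {"role": "assistant", "content": "Login fails after repeated password resets."},
        ]
    },
    {
        "messages": [
            {"role": "system", "content": "You summarize support tickets in one sentence."},
            {"role": "user", "content": "Invoice for March shows a charge for a cancelled plan."},
            {"role": "assistant", "content": "March invoice bills a plan that was already cancelled."},
        ]
    },
]

# Simplified assumption: only these three roles are allowed in this sketch.
ALLOWED_ROLES = {"system", "user", "assistant"}


def is_valid_example(record):
    """Check the basic shape: a non-empty messages list with known roles,
    string contents, and a final message written by the assistant."""
    messages = record.get("messages")
    if not isinstance(messages, list) or not messages:
        return False
    for message in messages:
        if message.get("role") not in ALLOWED_ROLES:
            return False
        if not isinstance(message.get("content"), str):
            return False
    return messages[-1]["role"] == "assistant"


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


if __name__ == "__main__":
    assert all(is_valid_example(e) for e in examples)
    print(to_jsonl(examples))
```

The resulting text would be saved as a `.jsonl` file and uploaded as the training file; the real API applies more rules than this sketch checks.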

The job matching and recruitment platform Indeed is one of the success stories since that launch. To match job seekers with relevant openings, Indeed sends personalized recommendations to users. By fine-tuning GPT-3.5 Turbo to produce more accurate explanations, Indeed was able to reduce the number of tokens in its prompts by 80%. This let the company scale from fewer than 1 million messages to job seekers each month to approximately 20 million.

The new fine-tuning API features build on this success and aim to improve the experience for future users:

Epoch-based checkpoint creation: Automatically generates a complete fine-tuned model checkpoint at every training epoch, which reduces the need for subsequent retraining, especially in cases of overfitting.

Comparative Playground: A new side-by-side playground UI for comparing model quality and performance, allowing human evaluation of the outputs of multiple models or fine-tuning snapshots against a single prompt.

Third-party integrations: Supports integrations with third-party platforms (starting with Weights and Biases), enabling developers to share detailed fine-tuning data with the rest of their stack.

Comprehensive validation metrics: Ability to calculate metrics such as loss and accuracy for the entire validation data set to better understand model quality.

Hyperparameter configuration: Ability to configure available hyperparameters from the dashboard (not just through the API or SDK).

Fine-tuning dashboard improvements: Includes the ability to configure hyperparameters, view more detailed training metrics, and rerun jobs from previous configurations.
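The value of per-epoch checkpoints combined with validation metrics can be illustrated with a short sketch. Assuming each checkpoint is reported together with a validation loss, picking the epoch with the lowest validation loss recovers a model from before overfitting set in, without retraining. The `Checkpoint` type, its field names, and the numbers below are hypothetical and are not OpenAI API objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Checkpoint:
    """Hypothetical record of one per-epoch checkpoint and its metrics."""
    epoch: int
    train_loss: float
    valid_loss: float


def best_checkpoint(checkpoints):
    """Return the checkpoint with the lowest validation loss.

    Ties are broken in favor of the earlier epoch.
    """
    if not checkpoints:
        raise ValueError("no checkpoints to choose from")
    return min(checkpoints, key=lambda c: (c.valid_loss, c.epoch))


# Made-up metrics: training loss keeps falling, while validation loss
# starts rising after epoch 3, the usual signature of overfitting.
history = [
    Checkpoint(epoch=1, train_loss=1.20, valid_loss=1.25),
    Checkpoint(epoch=2, train_loss=0.80, valid_loss=0.95),
    Checkpoint(epoch=3, train_loss=0.55, valid_loss=0.90),
    Checkpoint(epoch=4, train_loss=0.35, valid_loss=1.05),
    Checkpoint(epoch=5, train_loss=0.20, valid_loss=1.30),
]

chosen = best_checkpoint(history)
print(chosen.epoch)  # prints 3
```

Without per-epoch checkpoints, only the final epoch-5 model would be available, and the job would have to be rerun with fewer epochs to get back to the better model.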

Building on past success, OpenAI believes these new features will give developers more fine-grained control over their fine-tuning efforts.
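As a rough sketch of what this control looks like in practice, the function below assembles the body of a fine-tuning job request with explicit hyperparameters and an optional Weights & Biases integration entry. The field names follow the public fine-tuning API as commonly documented around the time of this announcement and should be checked against the current reference; the function name `build_job_request`, the file IDs, and the project name are hypothetical. No network call is made.

```python
def build_job_request(training_file, validation_file=None, model="gpt-3.5-turbo",
                      n_epochs="auto", learning_rate_multiplier="auto",
                      batch_size="auto", wandb_project=None):
    """Assemble a fine-tuning job request body as a plain dictionary."""
    if n_epochs != "auto" and (not isinstance(n_epochs, int) or n_epochs < 1):
        raise ValueError("n_epochs must be 'auto' or a positive integer")

    request = {
        "model": model,
        "training_file": training_file,
        "hyperparameters": {
            "n_epochs": n_epochs,
            "learning_rate_multiplier": learning_rate_multiplier,
            "batch_size": batch_size,
        },
    }
    # A validation file is optional; it enables validation metrics.
    if validation_file is not None:
        request["validation_file"] = validation_file
    # Optional third-party integration entry (assumed shape).
    if wandb_project is not None:
        request["integrations"] = [
            {"type": "wandb", "wandb": {"project": wandb_project}}
        ]
    return request


# Hypothetical file IDs and project name, for illustration only.
request = build_job_request(
    "file-abc123",
    validation_file="file-def456",
    n_epochs=3,
    wandb_project="fine-tune-demo",
)
print(request["hyperparameters"]["n_epochs"])  # prints 3
```

With the official Python SDK, a dictionary like this would typically be passed as keyword arguments to `client.fine_tuning.jobs.create(...)`; that call is left out so the sketch runs without credentials.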

Assisted fine-tuning and custom training models

OpenAI has also expanded the Custom Model program it announced at DevDay in November 2023. One major addition is assisted fine-tuning, which applies techniques beyond the fine-tuning API, such as additional hyperparameters and various parameter-efficient fine-tuning (PEFT) methods at a larger scale.
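The article does not say which PEFT methods OpenAI applies. As one widely used example of the idea, low-rank adaptation (LoRA) freezes a weight matrix of shape d × k and trains two small matrices of shapes d × r and r × k instead. The sketch below only counts trainable parameters under that assumption; the dimensions are arbitrary and do not describe any OpenAI model.

```python
def full_params(d, k):
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d, k, r):
    """Trainable parameters for a rank-r update: a d x r matrix plus an r x k matrix."""
    return r * (d + k)


# Arbitrary illustrative dimensions.
d, k, r = 4096, 4096, 8

full = full_params(d, k)     # 16,777,216
lora = lora_params(d, k, r)  # 65,536

print(f"{lora / full:.2%}")  # prints 0.39%
```

Training well under 1% of the parameters per adapted matrix is what makes such methods practical at larger scale.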

SK Telecom is an example of realizing the full potential of this service. The telecom operator has more than 30 million subscribers in South Korea and wanted to customize an artificial intelligence model to act as a telecom customer service expert.

By working with OpenAI to fine-tune GPT-4 on telecom-related conversations in Korean, SK Telecom improved conversation summarization quality by 35% and intent recognition accuracy by 33%. Compared with general-purpose GPT-4, the fine-tuned model also raised satisfaction scores from 3.6 to 4.5 out of 5.

OpenAI also introduced the ability to build custom-trained models for companies that need to instill deep domain-specific knowledge in a model. A partnership with legal AI company Harvey demonstrates the value of this feature. Legal work involves reading large volumes of dense documents, and Harvey wanted to use large language models (LLMs) to synthesize information from these documents and present it to lawyers for review. However, many laws are complex and context-dependent, so Harvey worked with OpenAI to build a custom-trained model that could incorporate new knowledge and ways of reasoning into the base model.

Harvey partnered with OpenAI and added the equivalent of 10 billion tokens of data to custom-train this case law model. With the contextual depth needed to make informed legal judgments, the resulting model improved factual responses by 83%.

AI tools are never a “cure-all” solution. Customizability is at the heart of this technology’s usefulness, and OpenAI’s work on fine-tuning and custom-trained models will help expand the range of organizations that benefit from these tools.

The above is the detailed content of OpenAI offers new fine-tuning and customization options. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

This site reported on June 27 that Jianying is a video editing software developed by FaceMeng Technology, a subsidiary of ByteDance. It relies on the Douyin platform and basically produces short video content for users of the platform. It is compatible with iOS, Android, and Windows. , MacOS and other operating systems. Jianying officially announced the upgrade of its membership system and launched a new SVIP, which includes a variety of AI black technologies, such as intelligent translation, intelligent highlighting, intelligent packaging, digital human synthesis, etc. In terms of price, the monthly fee for clipping SVIP is 79 yuan, the annual fee is 599 yuan (note on this site: equivalent to 49.9 yuan per month), the continuous monthly subscription is 59 yuan per month, and the continuous annual subscription is 499 yuan per year (equivalent to 41.6 yuan per month) . In addition, the cut official also stated that in order to improve the user experience, those who have subscribed to the original VIP

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-enhanced generation and semantic memory into AI coding assistants. Translated from EnhancingAICodingAssistantswithContextUsingRAGandSEM-RAG, author JanakiramMSV. While basic AI programming assistants are naturally helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the software language and the most common patterns of writing software. The code generated by these coding assistants is suitable for solving the problems they are responsible for solving, but often does not conform to the coding standards, conventions and styles of the individual teams. This often results in suggestions that need to be modified or refined in order for the code to be accepted into the application

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Large Language Models (LLMs) are trained on huge text databases, where they acquire large amounts of real-world knowledge. This knowledge is embedded into their parameters and can then be used when needed. The knowledge of these models is "reified" at the end of training. At the end of pre-training, the model actually stops learning. Align or fine-tune the model to learn how to leverage this knowledge and respond more naturally to user questions. But sometimes model knowledge is not enough, and although the model can access external content through RAG, it is considered beneficial to adapt the model to new domains through fine-tuning. This fine-tuning is performed using input from human annotators or other LLM creations, where the model encounters additional real-world knowledge and integrates it

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

If the answer given by the AI model is incomprehensible at all, would you dare to use it? As machine learning systems are used in more important areas, it becomes increasingly important to demonstrate why we can trust their output, and when not to trust them. One possible way to gain trust in the output of a complex system is to require the system to produce an interpretation of its output that is readable to a human or another trusted system, that is, fully understandable to the point that any possible errors can be found. For example, to build trust in the judicial system, we require courts to provide clear and readable written opinions that explain and support their decisions. For large language models, we can also adopt a similar approach. However, when taking this approach, ensure that the language model generates

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

Editor | KX In the field of drug research and development, accurately and effectively predicting the binding affinity of proteins and ligands is crucial for drug screening and optimization. However, current studies do not take into account the important role of molecular surface information in protein-ligand interactions. Based on this, researchers from Xiamen University proposed a novel multi-modal feature extraction (MFE) framework, which for the first time combines information on protein surface, 3D structure and sequence, and uses a cross-attention mechanism to compare different modalities. feature alignment. Experimental results demonstrate that this method achieves state-of-the-art performance in predicting protein-ligand binding affinities. Furthermore, ablation studies demonstrate the effectiveness and necessity of protein surface information and multimodal feature alignment within this framework. Related research begins with "S

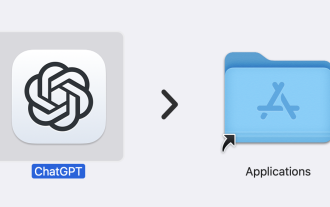

ChatGPT is now available for macOS with the release of a dedicated app

Jun 27, 2024 am 10:05 AM

ChatGPT is now available for macOS with the release of a dedicated app

Jun 27, 2024 am 10:05 AM

Open AI’s ChatGPT Mac application is now available to everyone, having been limited to only those with a ChatGPT Plus subscription for the last few months. The app installs just like any other native Mac app, as long as you have an up to date Apple S

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

According to news from this site on August 1, SK Hynix released a blog post today (August 1), announcing that it will attend the Global Semiconductor Memory Summit FMS2024 to be held in Santa Clara, California, USA from August 6 to 8, showcasing many new technologies. generation product. Introduction to the Future Memory and Storage Summit (FutureMemoryandStorage), formerly the Flash Memory Summit (FlashMemorySummit) mainly for NAND suppliers, in the context of increasing attention to artificial intelligence technology, this year was renamed the Future Memory and Storage Summit (FutureMemoryandStorage) to invite DRAM and storage vendors and many more players. New product SK hynix launched last year