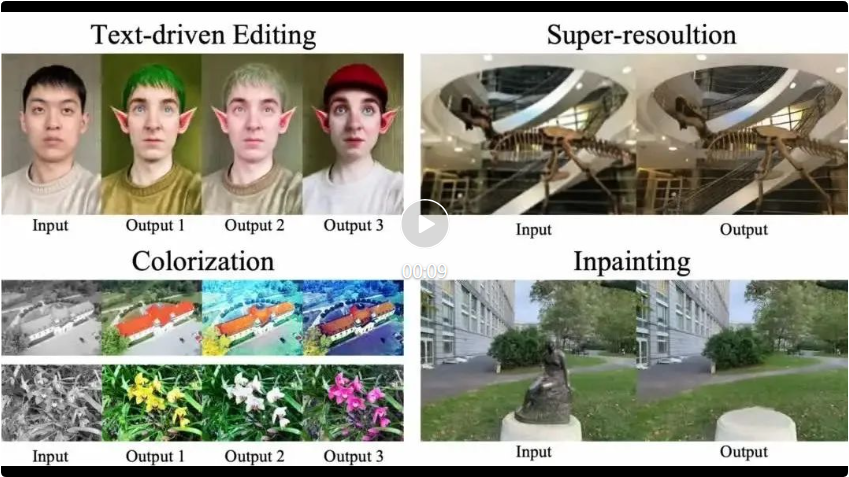

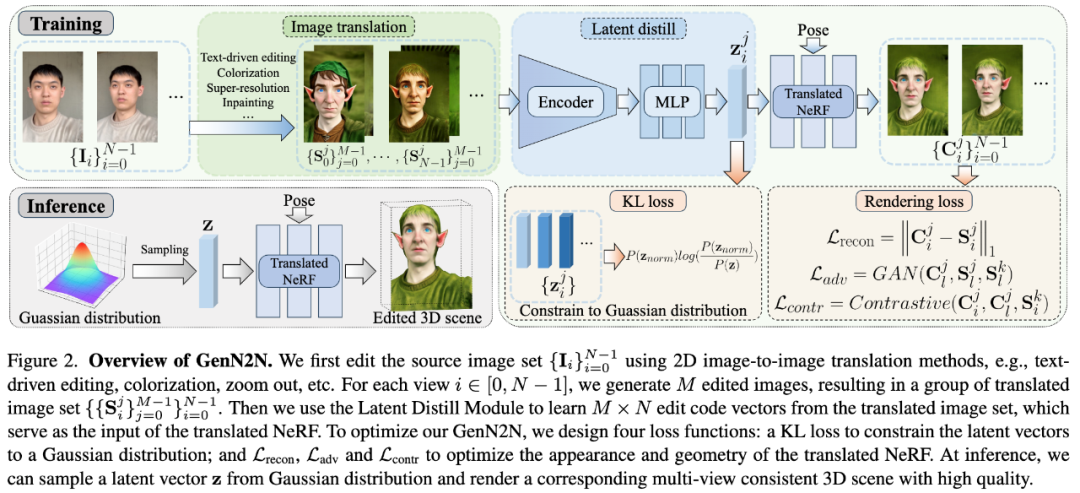

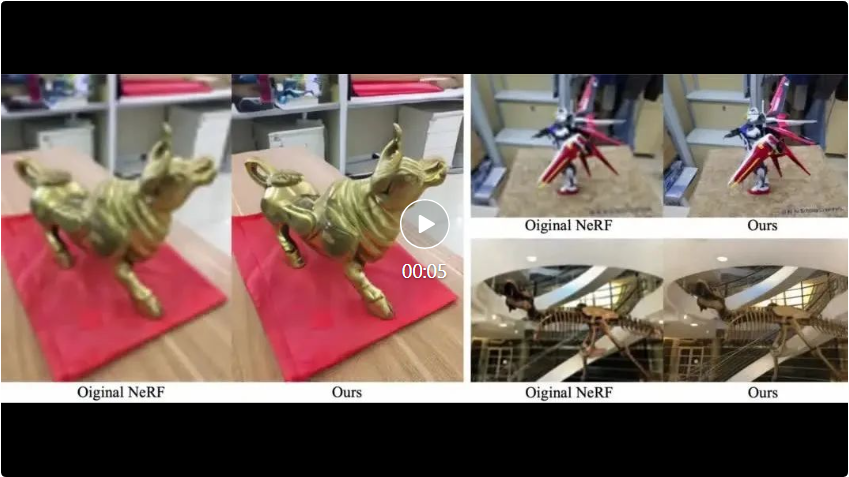

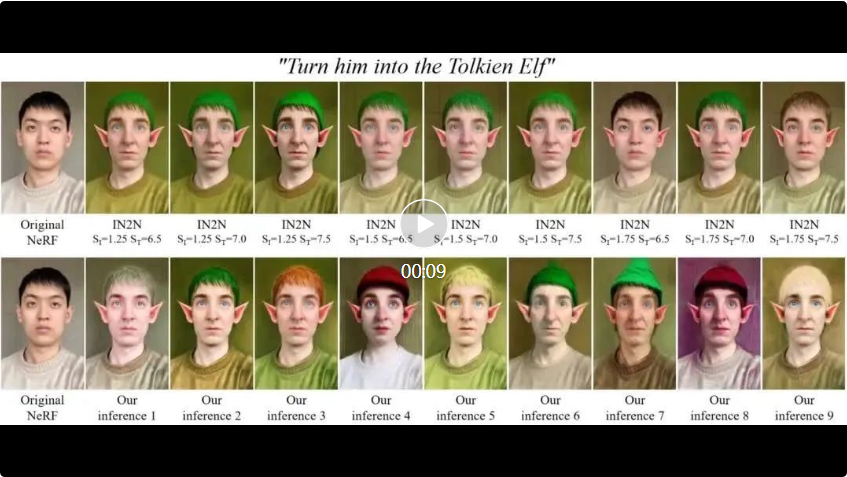

CVPR 2024 high-scoring paper: GenN2N, a new generative editing framework that unifies NeRF translation tasks

The AIxiv column is our website's column for academic and technical content. Over the past few years, it has received more than 2,000 pieces of content, covering top laboratories at major universities and companies around the world and helping to promote academic exchange and dissemination. If you have excellent work that you want to share, please feel free to submit it or contact us for coverage. Submission email addresses: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com.

Paper title: GenN2N: Generative NeRF2NeRF Translation

Paper address: https://arxiv.org/abs/2404.02788

Paper homepage: https://xiangyueliu.github.io/GenN2N/

GitHub address: https://github.com/Lxiangyue/GenN2N

(positive sample) in the training data, we select an edited image of the same viewpoint from the training data and use it as a condition, which prevents the discriminator from being influenced by viewpoint differences when distinguishing positive from negative samples.
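The same-viewpoint conditioning described here can be illustrated with a minimal sketch. This is not the authors' implementation. The `Sample` class, the `pair_with_condition` helper, the string names standing in for image data, and the assumption that each viewpoint has more than one edited image are all hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Sample:
    """Hypothetical stand-in for an image; `name` replaces real pixel data."""
    view_id: int   # index of the camera viewpoint
    name: str      # illustrative identifier
    is_real: bool  # True: edited training image, False: rendering to be judged


def pair_with_condition(
    samples: List[Sample], edited_pool: List[Sample]
) -> List[Tuple[Sample, Sample, int]]:
    """Attach to each sample an edited training image of the same viewpoint.

    Returns (sample, condition, label) triples, with label 1 for positive
    samples and 0 for negative ones. Samples with no same-view condition
    image available are skipped.
    """
    pairs = []
    for sample in samples:
        # Candidate conditions: same viewpoint, and not the sample itself.
        candidates = [
            e for e in edited_pool
            if e.view_id == sample.view_id and e.name != sample.name
        ]
        if not candidates:
            continue
        # Assumption: take the first candidate so the result is deterministic.
        pairs.append((sample, candidates[0], 1 if sample.is_real else 0))
    return pairs


# Two viewpoints, two edited images each (assumed), plus one rendering per view.
edited = [
    Sample(0, "edit_a_v0", True), Sample(0, "edit_b_v0", True),
    Sample(1, "edit_a_v1", True), Sample(1, "edit_b_v1", True),
]
rendered = [Sample(0, "render_v0", False), Sample(1, "render_v1", False)]

pairs = pair_with_condition(edited + rendered, edited)
for sample, condition, label in pairs:
    print(sample.name, "| condition:", condition.name, "| label:", label)
```

Because each sample and its condition always share a viewpoint, a discriminator fed such pairs has no viewpoint mismatch to exploit and must rely on other cues to tell positive from negative samples.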

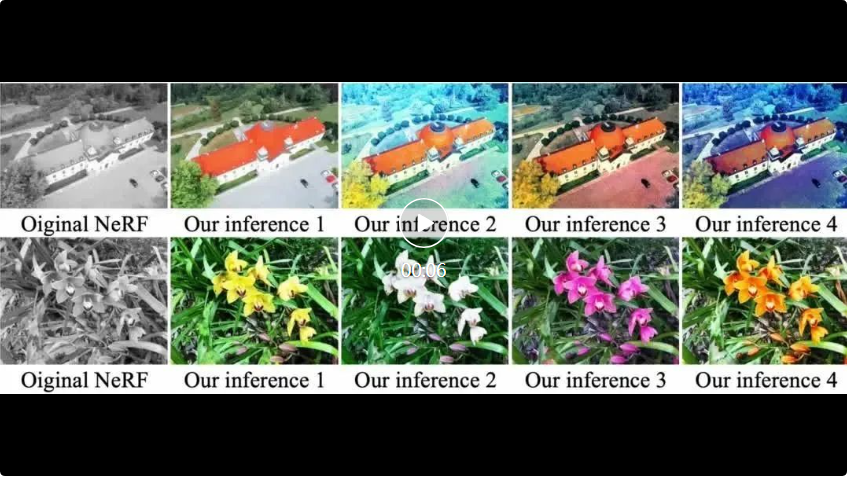

B. NeRF colorization

C. NeRF super-resolution

D. NeRF inpainting
