The latest from Oxford University! MicKey: 2D image matching in 3D SOTA! (CVPR'24)

Preface

Project link: https://nianticlabs.github.io/mickey/

Given two images, the camera pose between them can be estimated by establishing correspondences between the images. Typically, these correspondences are 2D-to-2D, so the estimated pose is only defined up to scale. Some applications, such as instant augmented reality anytime and anywhere, require scale-metric pose estimates, and therefore rely on external depth estimators to recover the scale.
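The scale ambiguity of 2D-to-2D correspondences can be illustrated with a minimal sketch. It assumes a simple pinhole camera with made-up intrinsics (`f`, `cx`, `cy`), an identity rotation between the two views, and an arbitrary 3D point; none of these values come from the paper. Scaling the scene and the camera translation by the same factor leaves every pixel observation unchanged, which is why the translation magnitude cannot be recovered from pixels alone.

```python
def project(point, f=500.0, cx=320.0, cy=240.0):
    """Pinhole projection of a camera-space 3D point to pixel coordinates.

    The intrinsics are hypothetical values chosen for illustration.
    """
    x, y, z = point
    return (f * x / z + cx, f * y / z + cy)


def scale(vec, s):
    """Multiply every component of a 3-vector by s."""
    return tuple(s * v for v in vec)


def add(a, b):
    """Component-wise sum of two 3-vectors."""
    return tuple(x + y for x, y in zip(a, b))


point = (0.4, -0.2, 3.0)        # 3D point in the frame of camera 1
translation = (0.5, 0.0, 0.1)   # camera 1 -> camera 2, rotation assumed identity


def observe(s):
    """Pixels seen in both images when scene and baseline are scaled by s."""
    p = scale(point, s)
    return project(p), project(add(p, scale(translation, s)))


for s in (1.0, 2.0, 10.0):
    # The printed pixel pairs agree for every s (up to floating-point rounding).
    print(s, observe(s))
```

A metric 3D coordinate per keypoint removes this ambiguity, since the distance between matched 3D points fixes the scale.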

This article proposes MicKey, a keypoint matching pipeline that predicts metric correspondences in 3D camera space. By learning to match 3D coordinates across images, it can infer the metric relative pose without depth measurements at test time. Depth measurements, scene reconstructions, and image overlap information are not needed during training either. MicKey is supervised only by image pairs and their relative poses. MicKey achieves state-of-the-art performance on the map-free relocalization benchmark while requiring less supervision than competing methods.

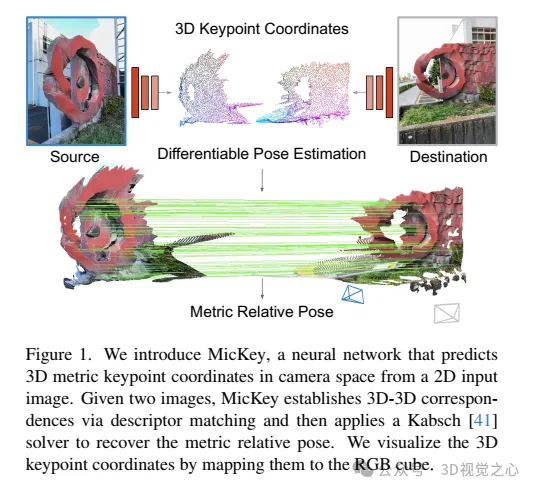

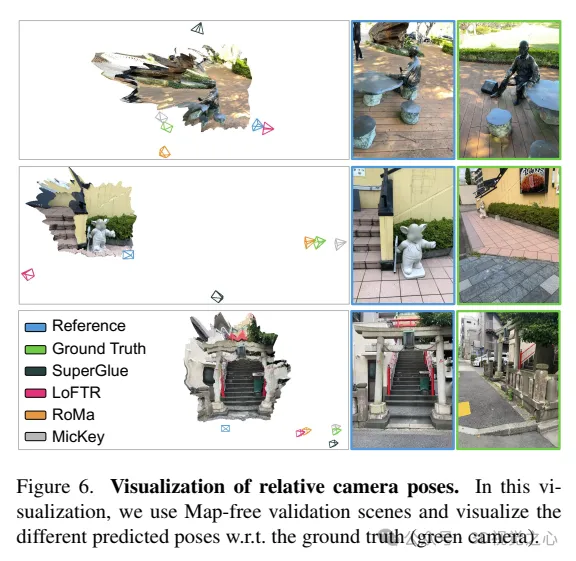

Metric Keypoints (MicKey) is a feature detection pipeline that addresses two problems. First, MicKey regresses keypoint locations in camera space, so that matching establishes metric correspondences. From metric correspondences, the metric relative pose can be recovered, as shown in Figure 1. Second, by using differentiable pose optimization for end-to-end training, MicKey requires only image pairs and their ground-truth relative poses as supervision. MicKey learns the correct depth of keypoints implicitly during training, and the training process is robust to image pairs with unknown visual overlap, so information obtained through SfM (such as image overlap) is not needed. This weak supervision makes MicKey very accessible and attractive, because training it on a new domain requires no additional information beyond poses.

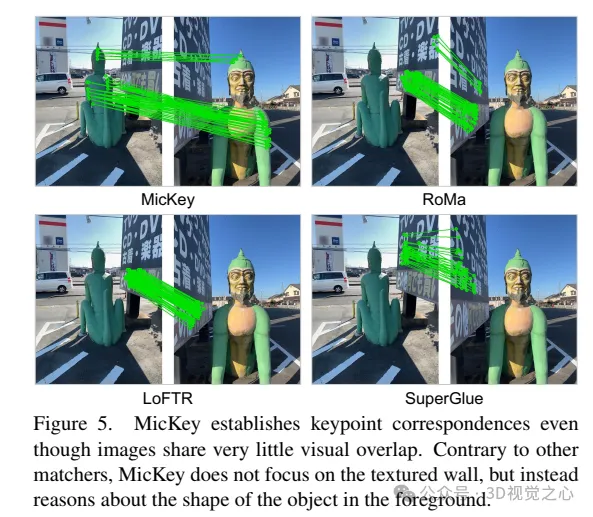

On the map-free relocalization benchmark, MicKey ranks first, outperforming recent state-of-the-art methods. MicKey provides reliable scale-metric pose estimates even under extreme viewpoint changes, enabled by depth predictions that are specifically tailored to sparse feature matching.

The main contributions are as follows:

MicKey, a neural network that predicts keypoints from a single image and describes them. Its descriptors allow the metric relative pose between images to be estimated.

A training strategy that requires only relative pose supervision, with no depth measurements and no knowledge of image pair overlap.

Introduction to MicKey

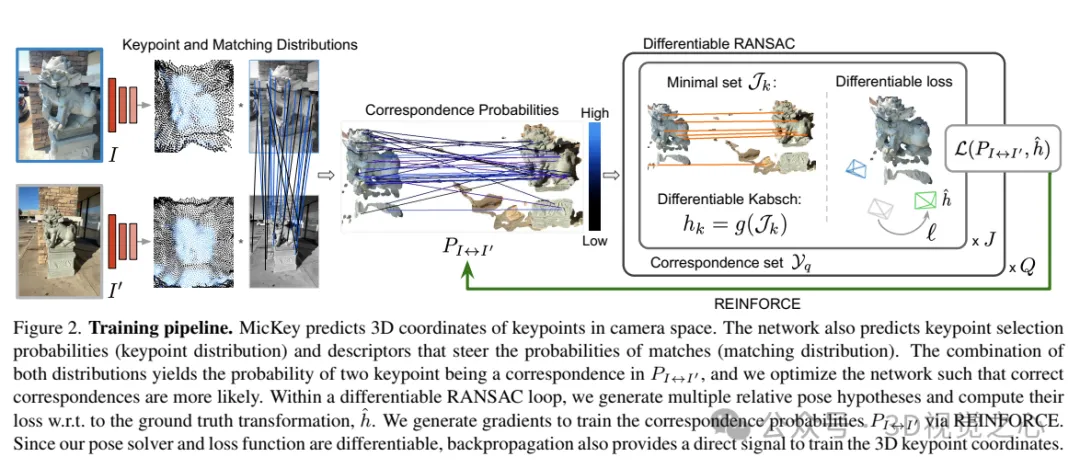

MicKey predicts the 3D coordinates of keypoints in camera space. The network also predicts keypoint selection probabilities (the keypoint distribution) and descriptors that guide the matching probabilities (the matching distribution). Combining the two distributions gives the probability that two keypoints, one in each image, form a correspondence, and the network is optimized so that correct correspondences become more likely. In a differentiable RANSAC loop, multiple relative pose hypotheses are generated and their losses with respect to the ground-truth transformation are computed. Gradients obtained through REINFORCE train the correspondence probabilities. Since the pose solver and the loss function are differentiable, backpropagation also provides a direct signal for training the 3D keypoint coordinates.
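As a rough illustration of how a keypoint distribution and a matching distribution might be combined, the sketch below multiplies per-image keypoint probabilities with a dual-softmax matching probability. The softmax normalizations, the product form, and the function name `correspondence_scores` are assumptions made for this example and are not taken from the MicKey code; the RANSAC loop and the REINFORCE update are not covered.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def correspondence_scores(conf_a, conf_b, similarity):
    """Score every keypoint pair (i in image A, j in image B).

    conf_a, conf_b: keypoint confidence logits for each image.
    similarity[i][j]: descriptor similarity between keypoint i and keypoint j.
    """
    p_a = softmax(conf_a)                  # keypoint distribution, image A
    p_b = softmax(conf_b)                  # keypoint distribution, image B
    rows = [softmax(row) for row in similarity]                # A -> B matching
    cols = [softmax(list(col)) for col in zip(*similarity)]    # B -> A matching
    return [
        [p_a[i] * p_b[j] * rows[i][j] * cols[j][i] for j in range(len(conf_b))]
        for i in range(len(conf_a))
    ]


conf_a = [2.0, 0.0]
conf_b = [0.0, 2.0]
similarity = [[0.1, 5.0], [0.2, 0.3]]
scores = correspondence_scores(conf_a, conf_b, similarity)

pairs = [(i, j) for i in range(len(conf_a)) for j in range(len(conf_b))]
best = max(pairs, key=lambda ij: scores[ij[0]][ij[1]])
print(best)  # the pair that is confident in both images and similar in descriptor
```

During training, pairs would be sampled in proportion to such scores, so raising the score of a correct pair makes it more likely to be drawn.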

1) Learning from metric pose supervision

Given two images, the system computes their metric relative pose, along with keypoint scores, matching probabilities, and a pose confidence (in the form of soft inlier counts). The goal is to train all relative pose estimation modules end to end. During training, each sample is assumed to consist of the two images, their ground-truth relative transformation, and the camera intrinsics K/K'. A schematic of the entire system is shown in Figure 2.

In order to learn the coordinates, confidence and descriptors of 3D key points, we need the system to be fully differentiable. However, since some elements in the pipeline are not differentiable, such as keypoint sampling or inlier counting, the relative pose estimation pipeline is redefined as probabilistic. This means that we treat the output of the network as the probability of a potential match, and during training the network optimizes its output to generate probabilities such that the correct match is more likely to be selected.
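Hard inlier counting is a step function of the residual and gives no useful gradient. A common differentiable substitute, sketched here, replaces the step with a sigmoid. The `threshold` and `sharpness` values are hypothetical and chosen only for illustration; the source does not state how MicKey parameterizes its soft inlier count.

```python
import math


def soft_inlier_count(residuals, threshold=0.15, sharpness=20.0):
    """Smooth stand-in for counting residuals below a threshold.

    Each residual contributes sigmoid(sharpness * (threshold - residual)),
    which approaches 1 for small residuals and 0 for large ones.
    Both parameters are illustrative assumptions.
    """
    total = 0.0
    for r in residuals:
        # Clamp the exponent so very large residuals cannot overflow exp().
        z = min(sharpness * (r - threshold), 50.0)
        total += 1.0 / (1.0 + math.exp(z))
    return total


residuals = [0.0, 0.0, 10.0]          # two good correspondences, one outlier
print(soft_inlier_count(residuals))   # close to, but slightly below, 2
```

A larger `sharpness` moves the soft count closer to the hard count, at the cost of flatter gradients away from the threshold.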

2) Network structure

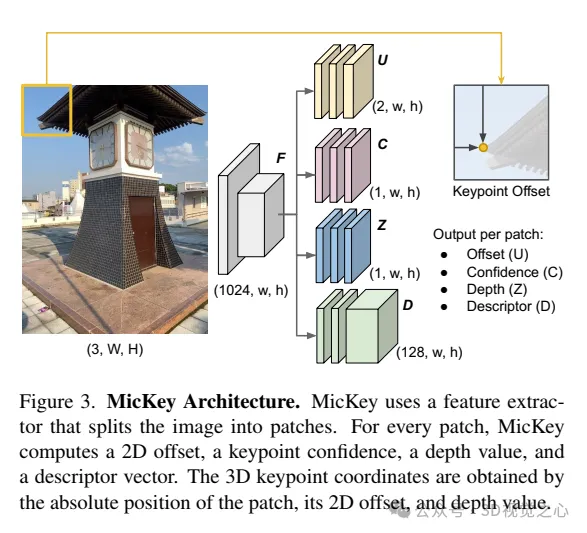

MicKey follows a multi-head network architecture with a shared encoder that infers 3D metric keypoints as well as descriptors from the input image, as shown in Figure 3.

Encoder. A pre-trained DINOv2 model serves as the feature extractor, and its features are used directly without further training or fine-tuning. DINOv2 divides the input image into patches of 14×14 pixels and provides a feature vector for each patch. The final feature map F has a resolution of (1024, w, h), where w = W/14 and h = H/14.
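The relation between image size and feature-map size can be written out as a small helper. The function name `feature_map_shape` is hypothetical, and rejecting image sizes that are not multiples of 14 is an assumption of this sketch; a real pipeline may crop or resize instead.

```python
PATCH = 14        # DINOv2 patch size stated in the text
CHANNELS = 1024   # feature dimension stated in the text


def feature_map_shape(height, width, patch=PATCH, channels=CHANNELS):
    """Return (channels, w, h) for an image of size height x width."""
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    return (channels, width // patch, height // patch)


print(feature_map_shape(518, 686))  # (1024, 49, 37)
```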

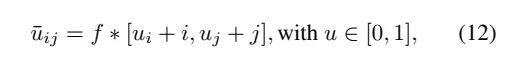

Keypoint heads. Four parallel heads process the feature map F and compute the xy offset (U), depth (Z), confidence (C), and descriptor (D) maps, where each map entry corresponds to one 14×14 patch of the input image. MicKey has the rare property of predicting keypoints as relative offsets from a sparse regular grid. The absolute 2D coordinates are obtained by adding each predicted offset to the position of its grid cell and scaling by the patch size.
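The offset-based parameterization can be sketched as follows. The example assumes that offsets lie in [0, 1] within each grid cell and that a keypoint is lifted to camera space by standard pinhole back-projection with the predicted depth; the function names and intrinsics are hypothetical and are not taken from the MicKey implementation.

```python
PATCH = 14  # one grid cell per 14x14 image patch


def absolute_keypoints(offsets, patch=PATCH):
    """Convert per-cell offsets to absolute pixel coordinates.

    offsets[j][i] = (du, dv) for the cell in row j, column i,
    with du, dv assumed to lie in [0, 1].
    """
    coords = []
    for j, row in enumerate(offsets):
        for i, (du, dv) in enumerate(row):
            coords.append((patch * (i + du), patch * (j + dv)))
    return coords


def backproject(uv, depth, fx, fy, cx, cy):
    """Lift a pixel with metric depth to a 3D point in camera space."""
    u, v = uv
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


offsets = [[(0.5, 0.5), (0.0, 0.0)]]   # a 1 x 2 grid of cells
pixels = absolute_keypoints(offsets)
print(pixels)                          # [(7.0, 7.0), (14.0, 0.0)]

# A pixel at the principal point back-projects onto the optical axis.
print(backproject((320.0, 240.0), 2.0, 500.0, 500.0, 320.0, 240.0))  # (0.0, 0.0, 2.0)
```

Because each cell yields exactly one keypoint, the keypoints are spread over the image and cannot cluster inside a single patch.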

Experimental comparison

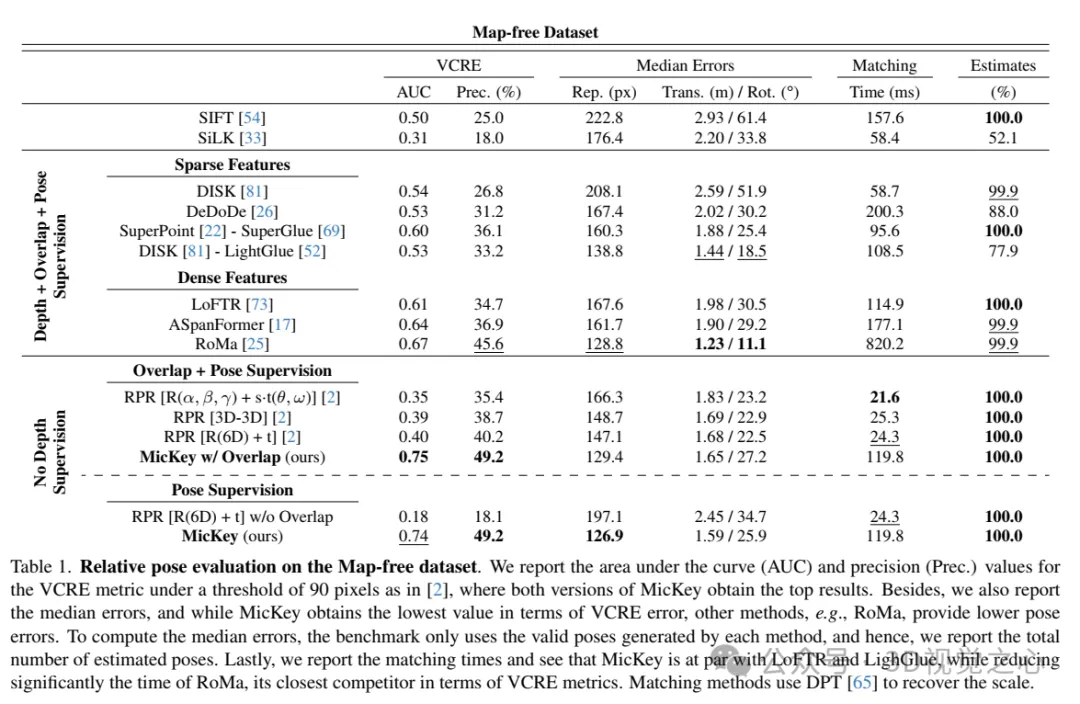

Relative pose evaluation on the map-free dataset. The area under the curve (AUC) and precision (Prec.) are reported for the VCRE metric at a 90-pixel threshold, and both versions of MicKey achieve the highest results. Median errors are also reported: MicKey obtains the lowest VCRE error, while other methods, such as RoMa, achieve lower pose errors. The median error is computed only over the valid poses generated by each method, so the total number of estimated poses is reported as well. Finally, matching times are reported: MicKey is comparable to LoFTR and LightGlue and is significantly faster than RoMa, its closest competitor in terms of VCRE. The matching-based methods use DPT to recover scale.
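To make the two kinds of numbers in this table concrete, the sketch below computes a precision at a 90-pixel threshold and a median over valid poses from a list of per-frame errors. The error values are invented, `None` marks a frame without a valid pose, and counting such frames as failures in the precision is an assumption of this example rather than a statement about the benchmark's official evaluation code.

```python
from statistics import median


def precision_at(errors, threshold=90.0):
    """Fraction of all frames whose error is below the threshold.

    Frames without a pose (None) are counted as failures here (assumption).
    """
    if not errors:
        raise ValueError("no frames to evaluate")
    hits = sum(1 for e in errors if e is not None and e < threshold)
    return hits / len(errors)


def median_over_valid(errors):
    """Median error over valid poses only, plus the number of valid poses."""
    valid = [e for e in errors if e is not None]
    return median(valid), len(valid)


vcre = [12.0, 45.5, None, 130.0, 88.0]   # made-up per-frame errors in pixels
print(precision_at(vcre))                # 0.6
print(median_over_valid(vcre))           # (66.75, 4)
```

Reporting the number of valid poses next to the median matters because a method that returns few poses can show a low median while failing on most frames.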

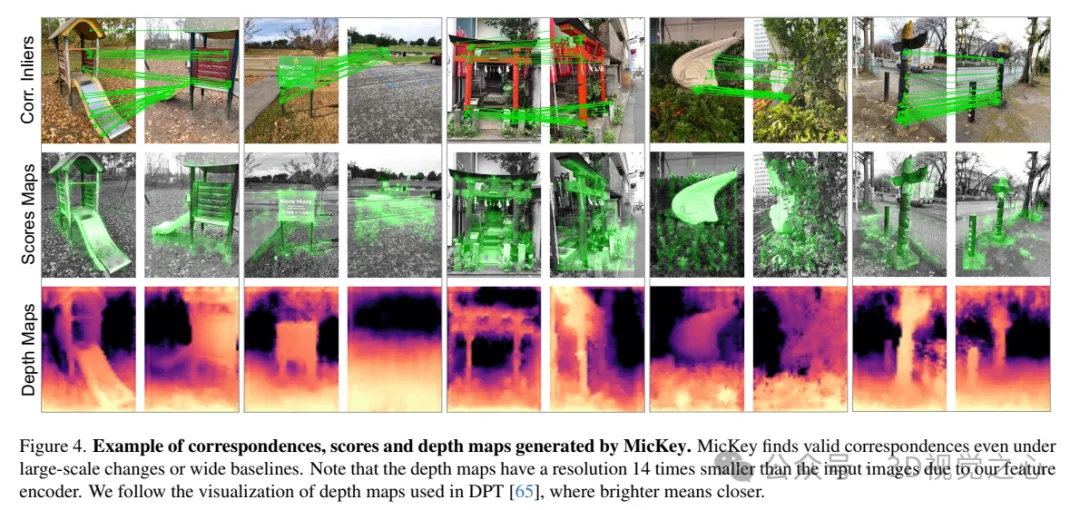

Examples of correspondences, score maps, and depth maps generated by MicKey. MicKey finds valid correspondences even under large scale changes or wide baselines. Note that, because of the feature encoder, the resolution of the depth maps is 14 times lower than that of the input image. The depth maps follow the visualization used in DPT, where lighter colors represent closer distances.

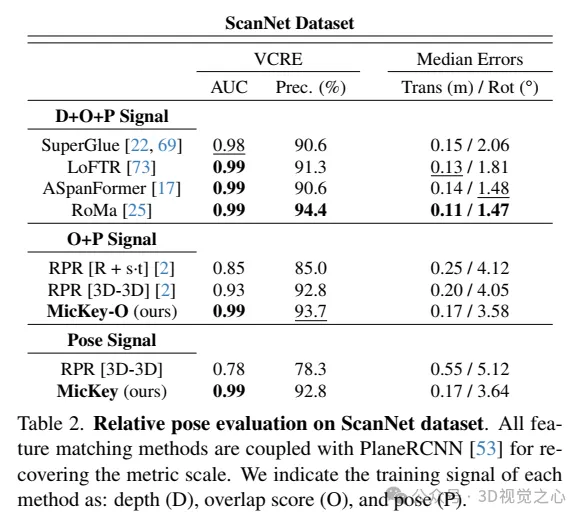

Relative pose evaluation on the ScanNet dataset. All feature matching methods are used in conjunction with PlaneRCNN to recover metric scales. We indicate the training signals for each method: depth (D), overlap score (O), and pose (P).
