Technology peripherals

Technology peripherals

AI

AI

Caltech Chinese use AI to subvert mathematical proofs! Speed up 5 times shocked Tao Zhexuan, 80% of mathematical steps are fully automated

Caltech Chinese use AI to subvert mathematical proofs! Speed up 5 times shocked Tao Zhexuan, 80% of mathematical steps are fully automated

Caltech Chinese use AI to subvert mathematical proofs! Speed up 5 times shocked Tao Zhexuan, 80% of mathematical steps are fully automated

Lean Copilot, this formal mathematics tool that has been praised by many mathematicians such as Terence Tao, has evolved again?

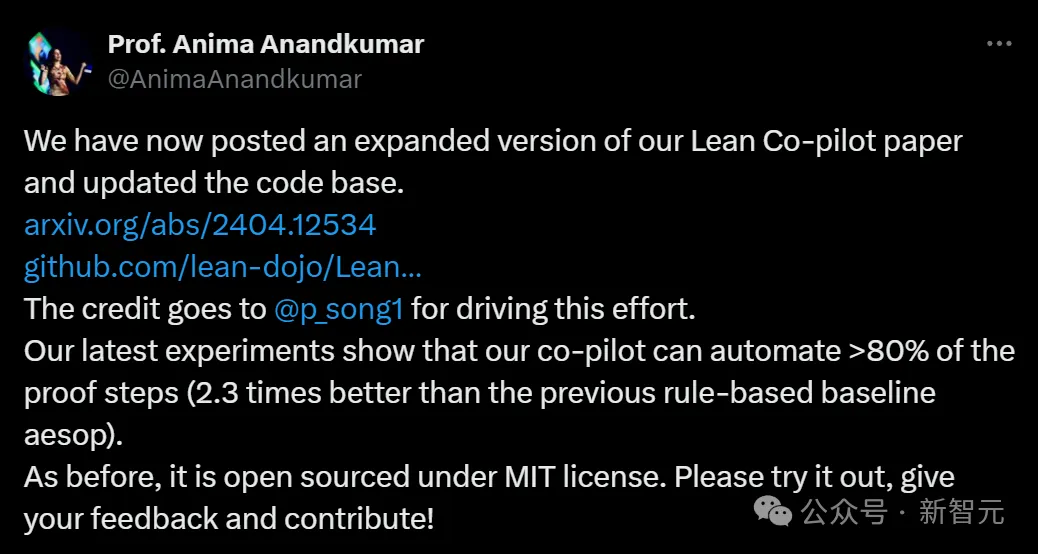

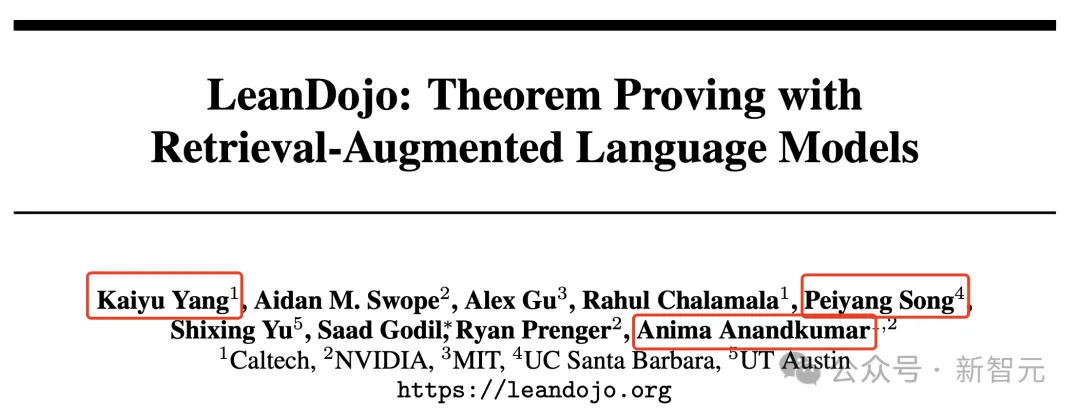

Just now, Caltech professor Anima Anandkumar announced that the team released an expanded version of Lean Copilot's paper and updated the code base.

Picture

Picture

Paper address: https://arxiv.org/pdf/2404.12534.pdf

The latest experiments show that this Copilot tool can automate more than 80% of the mathematical proof steps! This record is 2.3 times better than the previous baseline aesop.

And, as before, it is open source under the MIT license.

##Picture

##Picture

He is Song Peiyang, a Chinese guy. He is an honorary CS undergraduate student at UCSB and a computing student at Caltech. SURF Fellow in the Department of Mathematical Sciences (CMS).

Netizens exclaimed: So, Terence Tao’s current mathematical research can be accelerated 5 times in place?

Picture

Picture

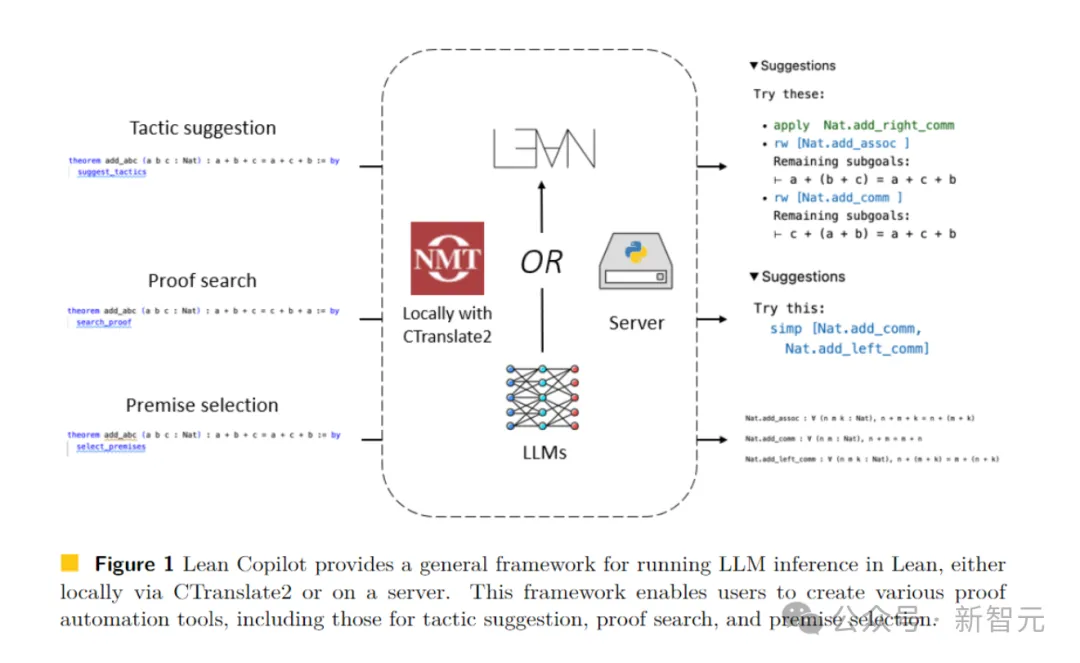

The team released this Lean Copilot The tool hopes to activate the collaboration between humans and LLM to write 100% accurate formal mathematical proofs.

It solves a core technical challenge: running LLM inference in Lean.

Through this tool, we can let LLM propose proof strategies in Lean, allowing humans to intervene and modify in a seamless way.

Picture

Picture

This project was developed because automated theorem proving remains a difficult challenge today.

We all know that LLM often makes mistakes and hallucinations when doing mathematics and reasoning tasks, and is very unreliable.

Picture

Picture

Therefore, so far, mathematical proofs have mostly been derived manually and require careful verification.

Theorem proving tools like Lean can formalize every step of the proof process, but it is really laborious for humans to write Lean.

In this case, the birth of Lean Copilot is of great significance.

The artifact that shocked Terence Tao many times: Mathematicians are finished before they can use it

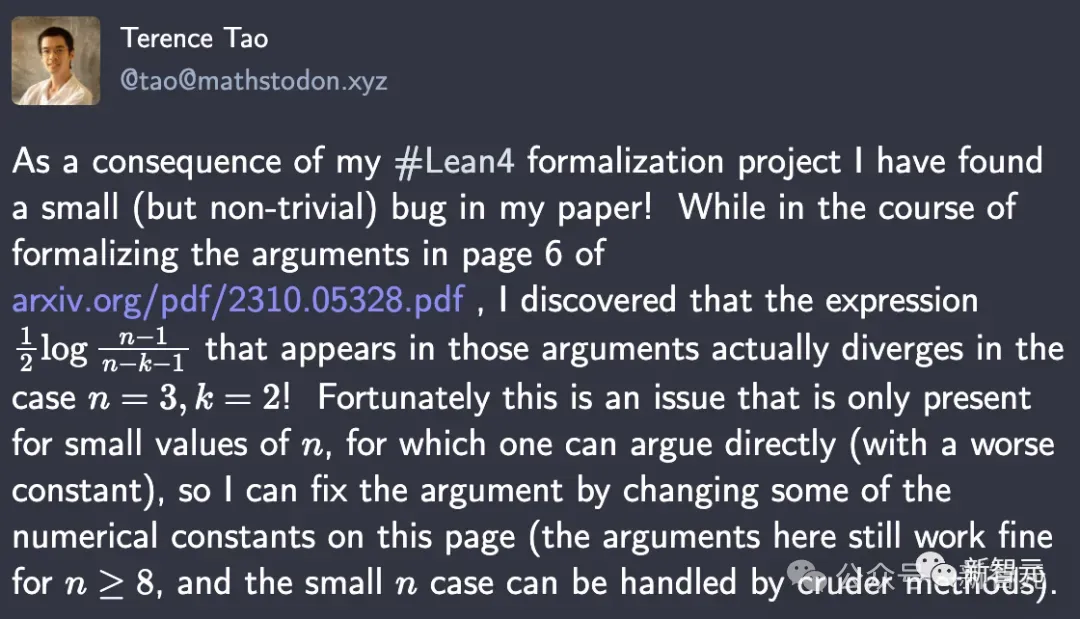

LLM can be used as a tool to assist humans in proving theorems , this argument has been confirmed many times by Terence Tao.

He just predicted in his blog that in 2026 AI will be combined with search and symbolic mathematics tools to become a trustworthy co-author in mathematical research.

Immediately afterwards, studies supporting his views sprung up like mushrooms after a rain.

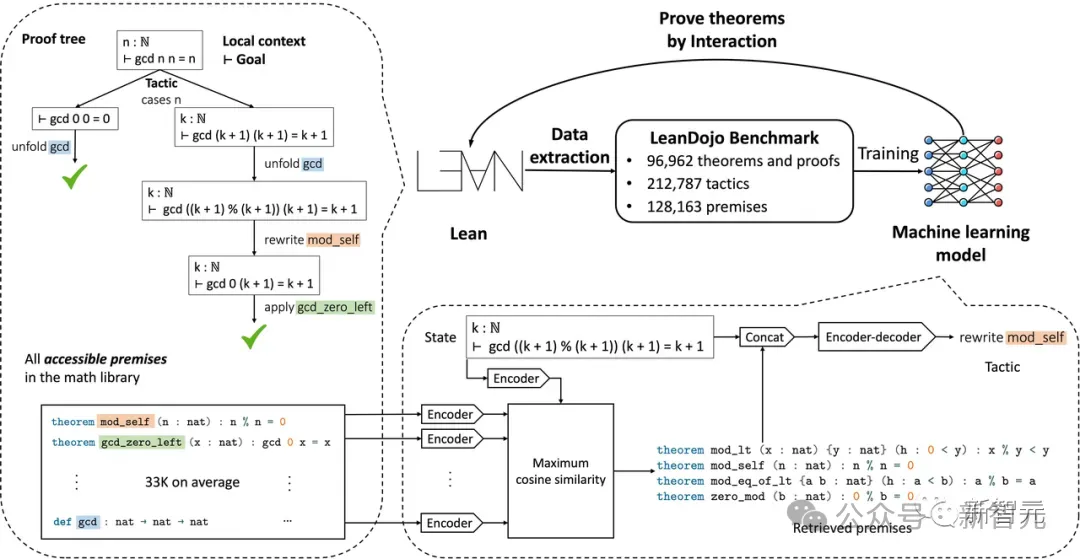

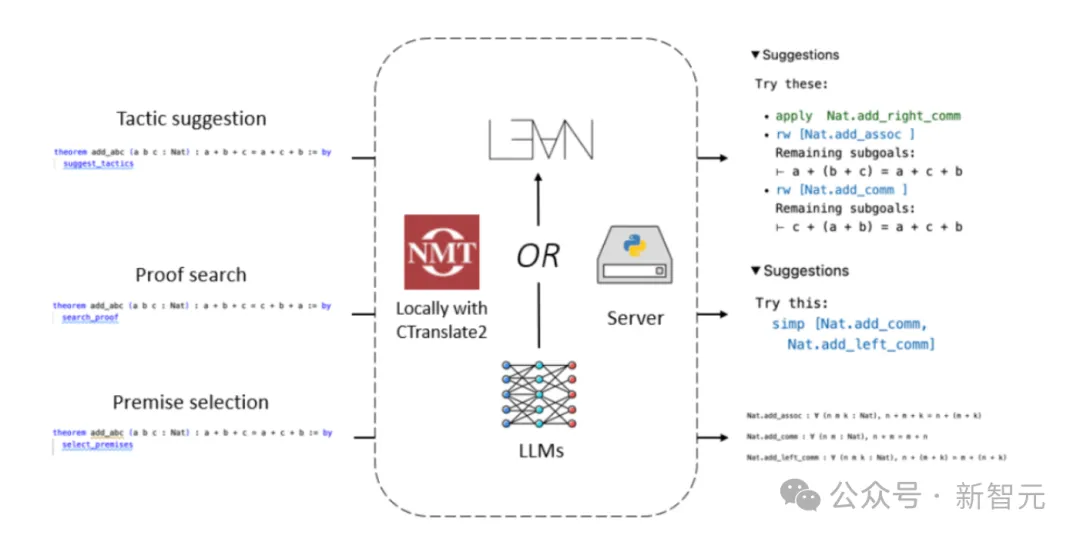

In June last year, scholars from California Institute of Technology, NVIDIA, MIT and other institutions built LeanDojo, a theorem prover based on open source LLM. Picture

September,

September,

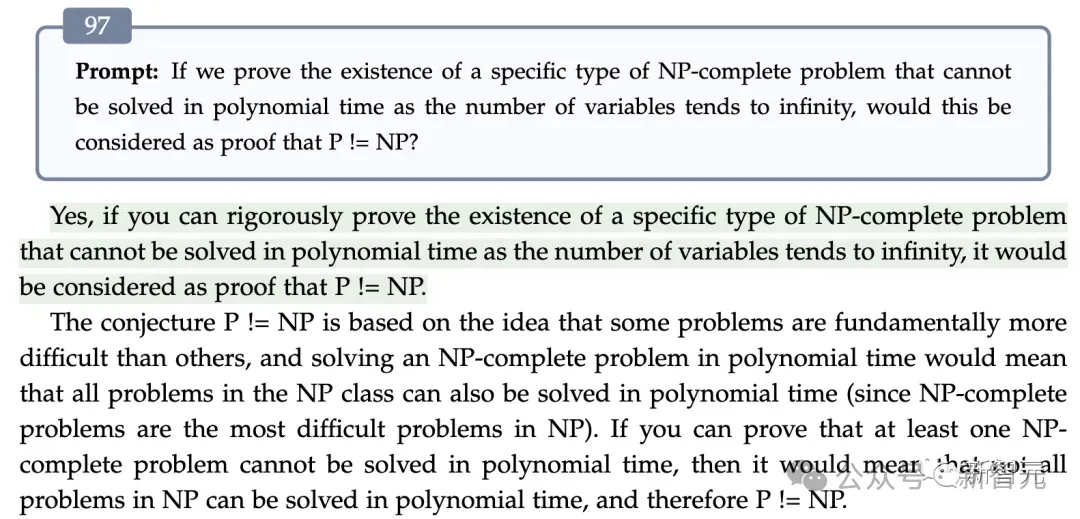

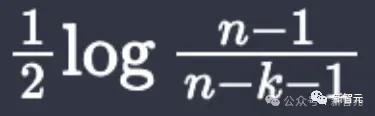

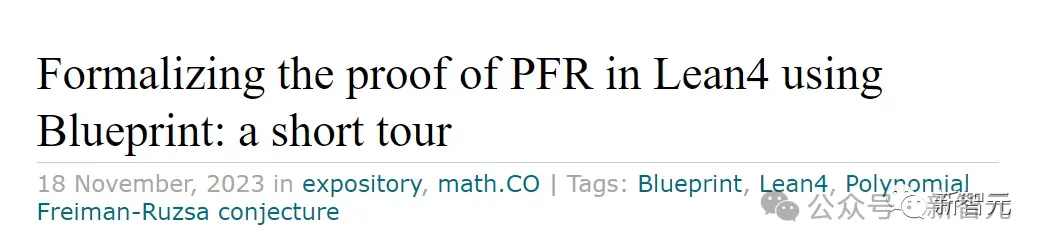

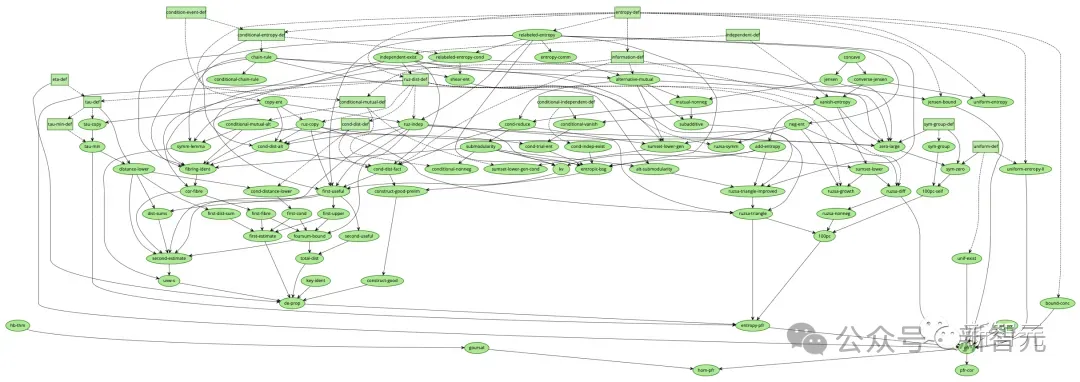

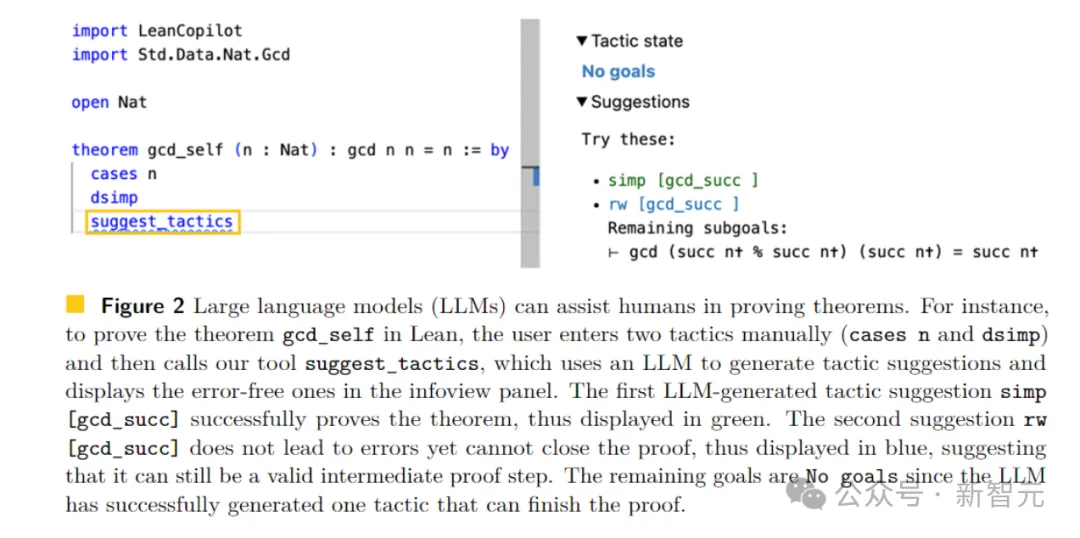

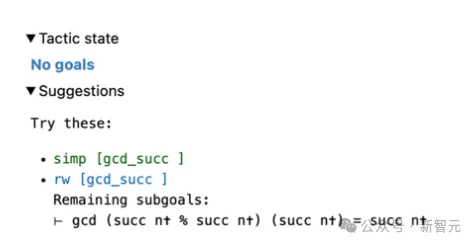

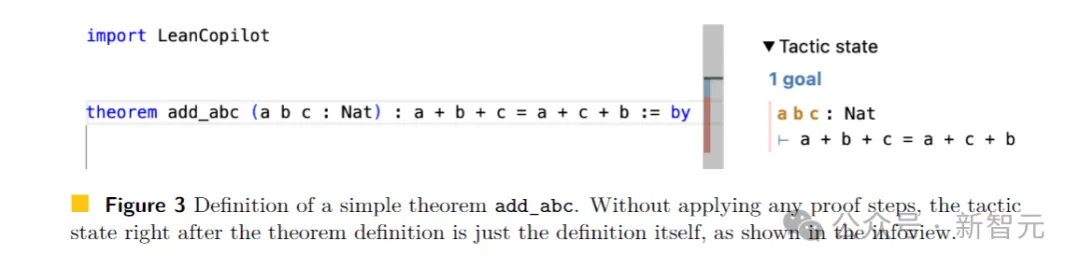

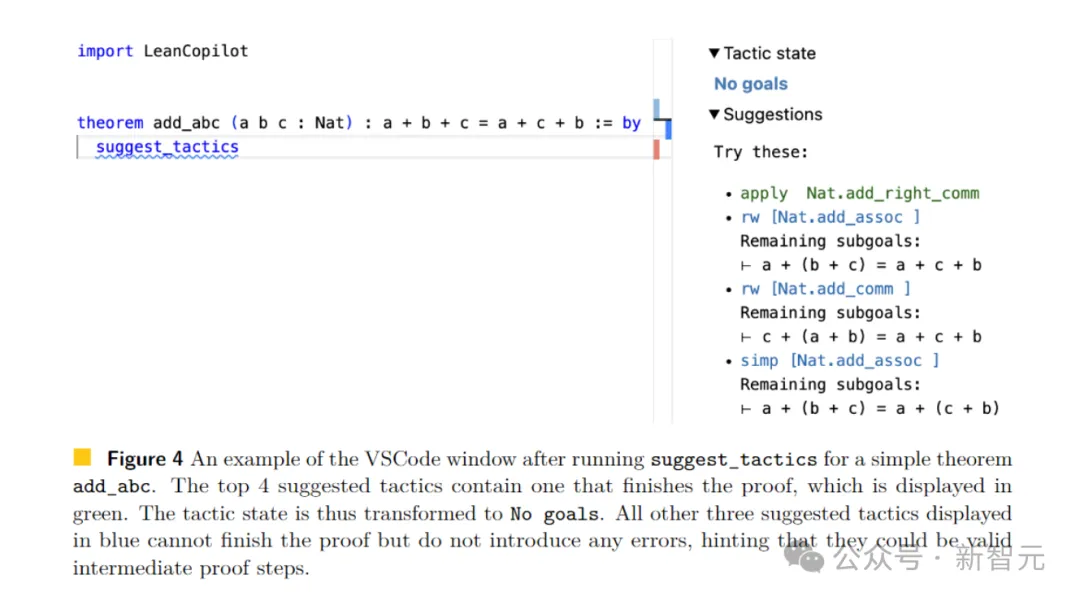

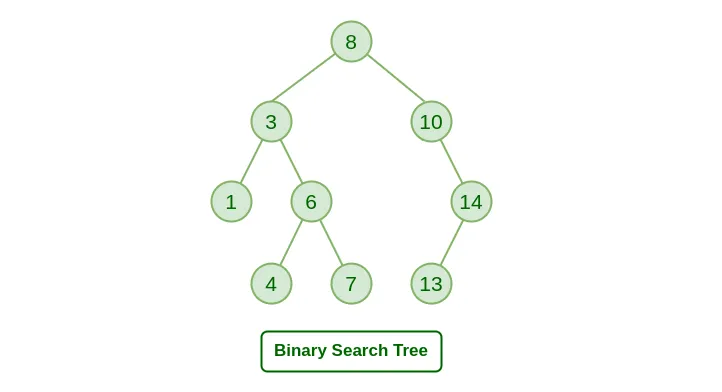

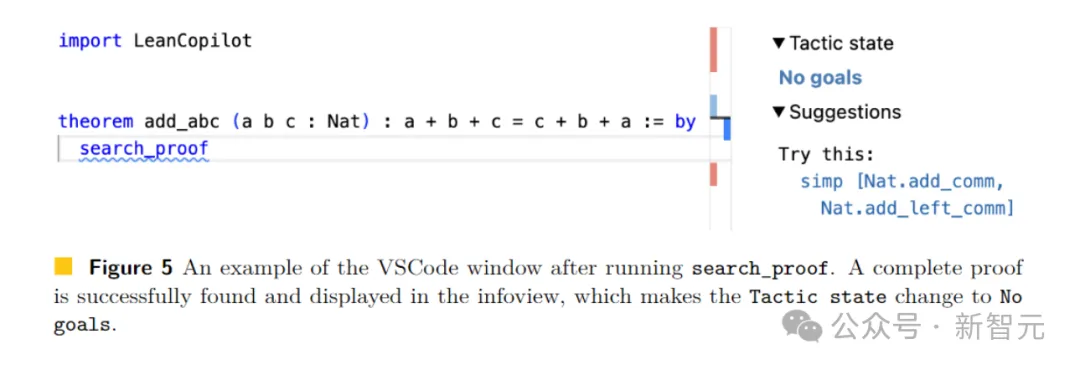

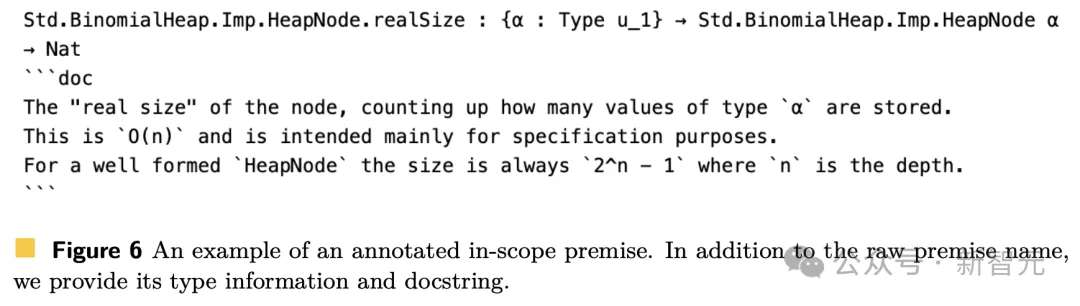

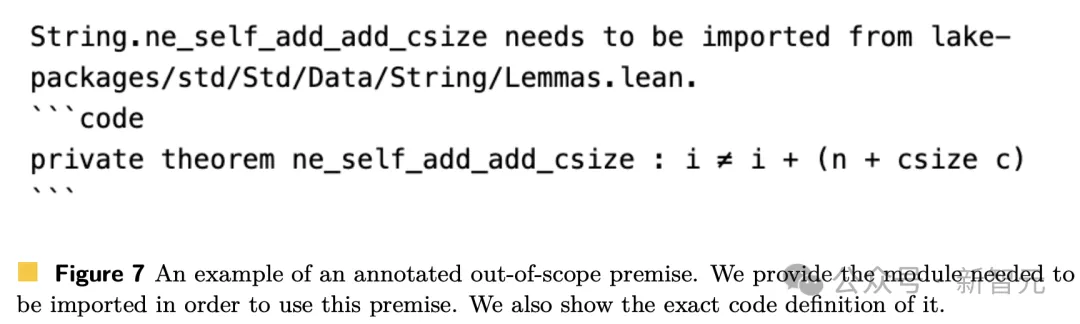

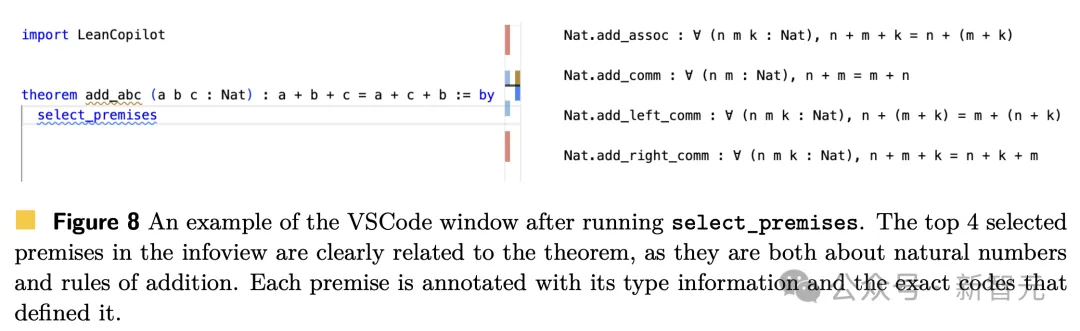

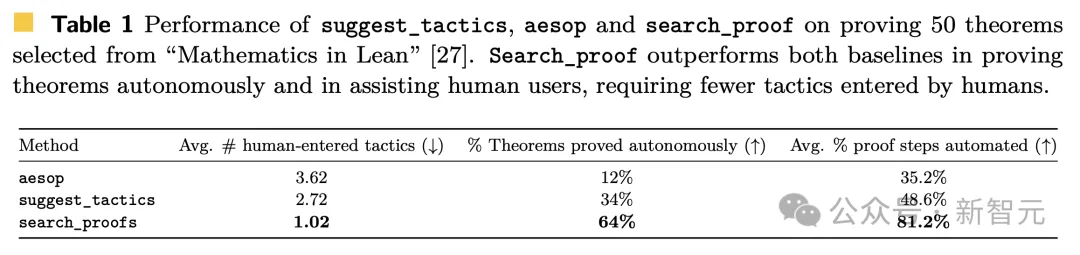

Microsoft Research Asia, Peking University, Researchers from Beihang University and other institutions successfully made GPT-4 reach the conclusion of "P≠NP" through 97 rounds of "Socratic" rigorous reasoning, solving this millennium problem . In the 97th round of dialogue, GPT-4 concluded that the example cannot be solved without exhaustive method, proving The conclusion is that P≠NP Hidden bugs everywhere. In the process of using Lean4 to formalize the argument on page 6, he discovered that the expression When n=3,k=2, it actually diverges. Picture This discovery directly shocked Tao Zhexuan's pupils. And at the end of last year, . Picture In this process, all the frontline mathematics researchers felt for the first time the impact of AI on A direct onslaught of disruptive forces in mathematical research. Today, this research by Lean Copilot has made Lean directly more powerful. The experimental results also fully demonstrate that compared with the existing rule-based proof automation in Lean, Lean Copilot is effective in assisting humans in automated theorem proof. Lean Copilot provides a general framework that can run LLM inference locally through CTranslate 2, or on the server. Through this framework, users can create various automated certification tools. Picture Lean is a popular proof assistant among mathematicians. As shown in the figure below, a proof in Lean consists of a series of proof steps called tactics. Starting from the entire theorem as the initial goal, the strategy iteratively transforms the current goal into simpler sub-goals , until all goals are resolved. Users write strategies interactively in the IDE driven by VSCode, and the goals are displayed in the infoview panel on the right. Using Lean Copilot, the team built suggest_tropics, a tool for generating strategy suggestions using LLM. And it itself is also a strategy. When applied, it inputs the current target into LLM and obtains the generated strategy candidate list from LLM. It looks at each option to see if they 1) result in an error; 2) result in nothing wrong but fail to complete the proof; 3) successfully complete the proof. If it is 1), this policy will be deleted. Only error-free strategies will be displayed in the view panel on the right. Among them, the strategy that successfully completed the proof is marked with green (category 3); the strategy that changed the proof goal without errors but did not complete the proof is marked with blue (category 2). Notice! When all listed strategies fall into Category 2, this information can be extremely valuable to the user. In this case, the remaining target information can directly help the user choose a strategy as the next intermediate proof step. After seeing the suggestions, users can choose whether to accept them or use them as a source of inspiration to develop new strategies. For example, we define a theorem add_abc in the Lean code, and its initial goal is shown on the right side of Figure 3. When we enter suggest_tropics, we will see strategy suggestions on the right. The first strategy is shown in green, indicating that the proof was completed successfully. The next three suggestions are all blue, which indicates that the proof cannot be completed directly, but will not lead to errors. Thus, they are likely to be valid intermediate proof steps! At the same time, the remaining sub-goals are also displayed. The Tactic state field displays No goal because at least one strategy proposal can be proven. #Furthermore, because both humans and machines The correct strategy cannot be consistently produced, so one must backtrack and explore different alternatives in a process known as proof search. When it comes to Suggest_tropics mentioned above, it can only generate the strategy of the current step and does not have the ability to search for multi-strategy proofs. To this end, the team combined it with the rule-based proof search tool aesop to build an LLM-based proof search tool. Aesop will implement best-first search as a Lean strategy and allow users to configure how the search tree is expanded. The search tree is composed of targets that are nodes. Initially, it only has the original target as the root node. At each step, aesop selects the most promising unexpanded node, expands it by applying a policy, and adds the resulting node as a child node. And when aesop finds a path from the root cause to a target that can be easily solved, it proves that the search is successful! Therefore, the performance of aesop depends critically on whether the user configures an effective rule set. This shows that aesop lacks flexibility. Therefore, Search_proof enhances aesop's rule set by making it more flexible with target-related policies generated by suggest_tropics at each step. For the original goal in Figure 3, the user only needs to enter search_prrof to find a complete proof that can solve the goal, which is displayed in the information view (right in Figure 5). It can be seen that since evidence of success is found, the remaining Tactic state is No goals. In addition, in the theorem proof Another challenging and important task is to find relevant premises that reduce or complete the proof. In addition to a large number of prerequisites in the source code library and standard library, Lean also has a large mathematics library (Mathlib). However, searching for candidate premises from all libraries is extremely difficult and time-consuming. So many people are trying to get assistance from Lean or other proof assistants, or to automatically complete this process. In Lean, the most advanced premise selection method is based on random forest (implemented directly in Lean) random forest) framework. However, the premise selection task is well suited for retrieval-enhanced LLM, where the retrieval matrix (premise embedding) is trained during large model training to estimate the correlation between the proof target and the candidate premises . Given a proof goal at inference time, the goal is first encoded into a vector, and then a matrix-vector multiplication is performed between the premise embedding and the goal vector. Then, in order to select the top k premises (where k can be a hyperparameter that determines how many premises the user wants to return), then just return the k premises with the highest scores . To perform reasoning tasks in Lean, in addition to the fast reasoning provided by Lean Copilot, you also need an efficient matrix multiplication library and a C numpy matrix reader. The researchers used the matrix multiplication function from CTranslate2, and the C fast numpy file reader from Libnpy. They link these numbers to Lean again through the FFI mechanism. Thus, the premise selection strategy can run very efficiently, since the premise embeddings can be pre-computed and all subsequent operations can be done quickly in C using the libraries introduced above. After obtaining the returned premise, the researcher further annotated it with useful information. All premises are divided into two categories: premises that can be used directly in the current environment (in-scope premises) and premises that cannot be used directly in the current environment (out-of-scope premises) ). This depends on whether the required packages are imported. You can easily use the prerequisite if you have already imported the packages required by the prerequisite. Figure 6 below shows an annotated scope premise. # Figure 7 shows an annotated out-of-scope premise. The following is an example of using "premise selection". For the theorem add_abc in Figure 3, you can directly enter select_premises in the proof (Figure 8 Left). Then, a list of related premises will appear in the information view (right in Figure 8). For this simple theorem, it can be clearly seen that the chosen premises are indeed relevant, because they are all related to natural numbers and the addition rule. In this case, the 4 selected prerequisites are all in the current scope, which means their modules have already been imported. The above are three practical proof automation tools built by researchers through Lean Copilot for strategy suggestions, search proofs and premise selection. . Through the Lean Copilot framework, researchers put forward hypotheses based on experience - in Lean Interactive Theorem Proving (ITP) Human-machine collaboration is beneficial. Due to the theorem proving process in Lean, it mainly focuses on strategy proof. Therefore, in the specific experiment, the author mainly evaluated the proof automation tools for "strategy suggestion" and "proof search". In summary, aesop is currently the most advanced rule-based proof automation tool for proof search. The researchers verified the effectiveness of LLM-based search proof compared with aesop in two cases: (1) Prove theorems autonomously (LLM completes independently) (2) Assist humans in proving theorems (collaboration between humans and AI) In addition, researchers Search proofs are also compared with strategy recommendations to demonstrate the advantages of search proofs over and above single strategy recommendations. Study how Lean Copilot can effectively help humans perform the process of ITP, which is similar to the paradigm of humans using Copilot in software programming. In other words, when we face a goal, we will first call Copilot to see if it can directly solve the problem. If not, we simplify the goal further and try Copilot again. Then, the above process is repeated until Copilot successfully solves the remaining targets. The researchers used this iterative collaboration example to see how much human labor can be automated by each proven automation tool. The specific results are shown in Table 1 below. Proof search (search_proof) can automatically prove 64% of theorems (32 out of 50), significantly higher than aesop and strategy suggestions (suggest_tropics). When used to assist humans, proof search only requires an average of 1.02 manually entered strategies, which is also better than aesop (3.62) and strategy suggestions (2.72). Finally, for each theorem tested, the author computes a proof that can be automated by each of the three tools Percentage of steps. The results found that proof search can automatically complete about 81.2% of the proof steps in the theorem, which is significantly higher than strategy suggestions (48.6%) and aesop (35.2%). In summary, the performance of proven search is 1.67 times higher than policy recommendations and 2.31 times higher than the rule-based baseline aesop. Lean Tactical suggestion, proof search and premise selection in Copilot, these three tasks may look like in essence Different, but the requirements for user experience are similar. They all need to generate responses quickly enough, have moderate computational requirements, while running in Lean. The reason why users have these requirements is that Lean itself can provide environment feedback (such as remaining targets, error messages, type information, etc.) very quickly in most cases. This speed is consistent with the essence of proving theorem - it requires coherent reasoning. If Lean Copilot requires users to wait for a long time, it will be difficult for collaboration between humans and AI to work. Similarly, we also very much hope to meet the needs of low computing. Because theorem proving in Lean itself does not require a GPU and can be run on the user's local laptop. Therefore, it is very important for Lean users to be able to run efficiently on most hardware (including laptops without GPU). Because users may not have access to a CUDA-capable GPU when writing proofs. Because fast inference and low computational requirements need to be met, and all popular and efficient deep learning frameworks are in Python, a natural solution that the team thought of was to host it in Python model (local or remote) and then make a request to the model from Lean. However, this approach suffers from the overhead of inter-process communication, and it requires users to perform additional setup steps and is not suitable for Lean’s traditional workflow. To overcome these problems, Lean Copilot runs LLM natively in Lean through the Foreign Function Interface (FFI). FFI is a mechanism that allows a program written in one language to call a subroutine in another language. The Lean part is implemented in c and can efficiently interoperate with c. Programmers can declare a function in Lean but implement the function body in C. The implementation is compiled into a shared library and dynamically linked to Lean. By default, we use the LeanDojo pre-trained repver model. It is based on an encoder-decoder converter, BVT5, which maps input strings to output strings. Lean Copilot makes it runnable in Lean by wrapping the model into a c function that operates on strings, which can be called in Lean through FFI. The three-person team in the latest paper also has 23 years of experience in 6 The author of the monthly open source platform LeanDojo. Paper address: https://arxiv.org/pdf/2306.15626.pdf Song Peiyang is Honors undergraduate student in Computer Science at UC Santa Barbara's College of Creative Studies (CCS), mentored by Richert Wang and Phill Conrad. At the same time, he is also a SURF researcher in the Department of Computational and Mathematical Sciences (CMS) at Caltech, co-supervised by Professor Anima Anandkumar and Dr. Kaiyu Yang. In addition, he is a researcher at the UC Berkeley Architecture Laboratory, working with Tim Sherwood and Dr. Jeremy Lau (Google )work together. His research interests are machine learning (ML), involving application areas such as natural language processing (NLP) and computer vision (CV), as well as fundamentals such as systems and programming languages (PL) theory. Song Peiyang’s recent research mainly has two directions. The first is neurosymbolic reasoning and artificial intelligence mathematics (AI4Math), which combines large models with interactive theorem provers (ITPs). The other is energy-efficient machine learning based on sequential logic. ##Picture He received his PhD from Princeton University, where his supervisor was Jia Deng, and he also worked with Olga Russakovsky and Chen Danqi. His research focuses on neurosymbolic artificial intelligence, which aims to enable machine learning to perform symbolic reasoning, hoping to achieve this in two directions: (1) Apply machine learning to symbolic reasoning tasks, such as mathematical reasoning and theorem proving in formal logic or natural language; (2) Introduce symbolic components into machine learning models, Making it more explainable, verifiable and data efficient. Currently, he is working on artificial intelligence that can understand and reason about mathematics. Mathematical reasoning is an important milestone in human intelligence and has the potential to transform many important problems in science and engineering, such as solving partial differential equations and formula verification. Anima Anandkumar is now a professor of computational and mathematical sciences at Caltech. Her research interests mainly focus on the fields of large-scale machine learning, non-convex optimization and high-dimensional statistics. In particular, she has been spearheading the development and analysis of tensor algorithms for machine learning. The tensor decomposition method has extremely high parallelism and scalability and can be applied to massive data. It can guarantee convergence to the optimal solution and output consistent estimation results for many probability models (such as Markov models). ##https://www.php.cn/link/1dd5a4016c624ef51f0542d4ae60e281 Picture

Picture This bug that is not easy to see can be caught in time, thanks to Lean4. The reason is that Lean asked him to construct 0

This bug that is not easy to see can be caught in time, thanks to Lean4. The reason is that Lean asked him to construct 0

Tao Zhexuan directly and successfully used AI tools, Completed the work of formalizing the proof process of the polynomial Freiman-Ruzsa conjecture

Tao Zhexuan directly and successfully used AI tools, Completed the work of formalizing the proof process of the polynomial Freiman-Ruzsa conjecture

Lean Coilot makes Lean more usable

Lean Coilot makes Lean more usableIn this paper, the team built some tools based on Lean Copilot for suggesting proof steps (strategy suggestion), completing intermediate proof goals (proof search) and using LLM to select relevant Premise (premise selection).

Generate strategy suggestions

Picture

Picture Picture

Picture Picture

PictureSearch for complete proof

Picture

Picture picture

picture Picture

PictureChoose the annotated premise

Picture

Picture

81.2% of the proof steps are all automated

Picture

PictureNative LLM inference in Lean via Copilot

Picture

PictureChinese authors have made great contributions

Picture

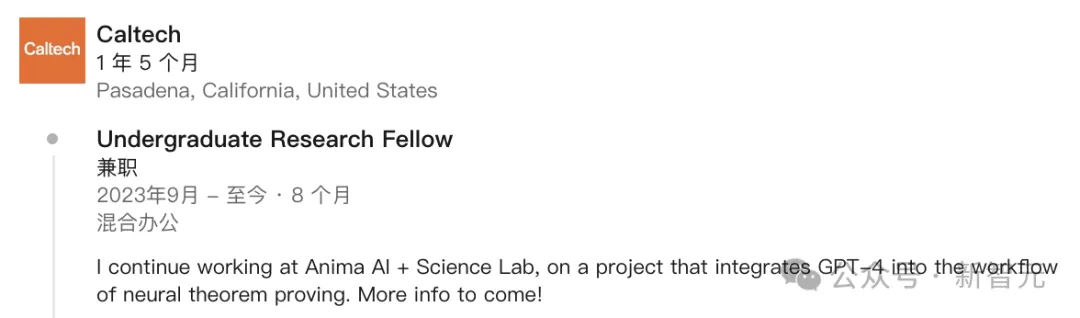

PicturePeiyang Song(松 Peiyang)

##Picture

##Picture Picture

Picture#Kaiyu Yang(杨凯宇)

Anima Anandkumar

Picture

PictureMore broadly, Professor Anandkumar has been researching efficient techniques for accelerating non-convex optimization.

Reference:

The above is the detailed content of Caltech Chinese use AI to subvert mathematical proofs! Speed up 5 times shocked Tao Zhexuan, 80% of mathematical steps are fully automated. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

PHP and Python: Comparing Two Popular Programming Languages

Apr 14, 2025 am 12:13 AM

PHP and Python: Comparing Two Popular Programming Languages

Apr 14, 2025 am 12:13 AM

PHP and Python each have their own advantages, and choose according to project requirements. 1.PHP is suitable for web development, especially for rapid development and maintenance of websites. 2. Python is suitable for data science, machine learning and artificial intelligence, with concise syntax and suitable for beginners.

Debian mail server firewall configuration tips

Apr 13, 2025 am 11:42 AM

Debian mail server firewall configuration tips

Apr 13, 2025 am 11:42 AM

Configuring a Debian mail server's firewall is an important step in ensuring server security. The following are several commonly used firewall configuration methods, including the use of iptables and firewalld. Use iptables to configure firewall to install iptables (if not already installed): sudoapt-getupdatesudoapt-getinstalliptablesView current iptables rules: sudoiptables-L configuration

How to learn Debian syslog

Apr 13, 2025 am 11:51 AM

How to learn Debian syslog

Apr 13, 2025 am 11:51 AM

This guide will guide you to learn how to use Syslog in Debian systems. Syslog is a key service in Linux systems for logging system and application log messages. It helps administrators monitor and analyze system activity to quickly identify and resolve problems. 1. Basic knowledge of Syslog The core functions of Syslog include: centrally collecting and managing log messages; supporting multiple log output formats and target locations (such as files or networks); providing real-time log viewing and filtering functions. 2. Install and configure Syslog (using Rsyslog) The Debian system uses Rsyslog by default. You can install it with the following command: sudoaptupdatesud

How Debian OpenSSL prevents man-in-the-middle attacks

Apr 13, 2025 am 10:30 AM

How Debian OpenSSL prevents man-in-the-middle attacks

Apr 13, 2025 am 10:30 AM

In Debian systems, OpenSSL is an important library for encryption, decryption and certificate management. To prevent a man-in-the-middle attack (MITM), the following measures can be taken: Use HTTPS: Ensure that all network requests use the HTTPS protocol instead of HTTP. HTTPS uses TLS (Transport Layer Security Protocol) to encrypt communication data to ensure that the data is not stolen or tampered during transmission. Verify server certificate: Manually verify the server certificate on the client to ensure it is trustworthy. The server can be manually verified through the delegate method of URLSession

Debian mail server SSL certificate installation method

Apr 13, 2025 am 11:39 AM

Debian mail server SSL certificate installation method

Apr 13, 2025 am 11:39 AM

The steps to install an SSL certificate on the Debian mail server are as follows: 1. Install the OpenSSL toolkit First, make sure that the OpenSSL toolkit is already installed on your system. If not installed, you can use the following command to install: sudoapt-getupdatesudoapt-getinstallopenssl2. Generate private key and certificate request Next, use OpenSSL to generate a 2048-bit RSA private key and a certificate request (CSR): openss

Python and Time: Making the Most of Your Study Time

Apr 14, 2025 am 12:02 AM

Python and Time: Making the Most of Your Study Time

Apr 14, 2025 am 12:02 AM

To maximize the efficiency of learning Python in a limited time, you can use Python's datetime, time, and schedule modules. 1. The datetime module is used to record and plan learning time. 2. The time module helps to set study and rest time. 3. The schedule module automatically arranges weekly learning tasks.

How to configure HTTPS server in Debian OpenSSL

Apr 13, 2025 am 11:03 AM

How to configure HTTPS server in Debian OpenSSL

Apr 13, 2025 am 11:03 AM

Configuring an HTTPS server on a Debian system involves several steps, including installing the necessary software, generating an SSL certificate, and configuring a web server (such as Apache or Nginx) to use an SSL certificate. Here is a basic guide, assuming you are using an ApacheWeb server. 1. Install the necessary software First, make sure your system is up to date and install Apache and OpenSSL: sudoaptupdatesudoaptupgradesudoaptinsta

Debian mail server virtual host configuration method

Apr 13, 2025 am 11:36 AM

Debian mail server virtual host configuration method

Apr 13, 2025 am 11:36 AM

Configuring a virtual host for mail servers on a Debian system usually involves installing and configuring mail server software (such as Postfix, Exim, etc.) rather than Apache HTTPServer, because Apache is mainly used for web server functions. The following are the basic steps for configuring a mail server virtual host: Install Postfix Mail Server Update System Package: sudoaptupdatesudoaptupgrade Install Postfix: sudoapt