A macho man has fallen from the sky, and a large model has turned into a lovesick guy waiting for human players to rescue him. A large-model-native mini-game called "Save the Licking Dog" has appeared ("licking dog" is Chinese internet slang for someone who keeps fawning over a person who does not return their feelings).

The rules are simple: if, within a limited number of dialogue rounds, the player convinces "him" to give up pursuing a goddess who is not interested in him, the challenge counts as a success.

It sounds easy, but the game imitates life. The character is infatuated, naive, and stubborn. During nearly an hour of "persuasion", our large-model "friend" occasionally softened but kept insisting, which felt very realistic.

## Rescuing the infatuated "dog licker" in practice: a battle of wits with AI

The game goes like this:

The game starts with a piece of good news: the girl replied to his message. Over several rounds of dialogue, the model clearly describes his past experiences and current situation, which closely mirror real life. In his description, you will find a big gap between how he perceives things and what is actually happening, but he refuses to face it. That is what makes the game difficult.

The model is quite "anthropomorphic". No matter what objections you raise, he keeps the same way of thinking and a clear memory: his story never contradicts itself, and the character never breaks.

Of course, human players are not alone. If you run out of things to say, the AI suggests prompts based on the context to keep the game going.

In the end, with the help of the suggested prompts and the painful reality of countless failed confessions, the player and the model formed a fine brotherhood, and the challenge succeeded.

This large-model-native game is a trial experience built on SenseTime's anthropomorphic large model "SenseChat-Character", a large language model product originally developed by SenseTime.

Experience address: https://character.sensetime.com/

SenseChat-Character supports personalized character creation and customization, knowledge base construction, long dialogue memory, multi-person group chat, and other functions. It is a large model full of fun and emotional value, suited to emotional companionship, characters from film, TV, animation, and internet IP, AI clones of celebrities, internet influencers, and artists, language role-playing games, and other anthropomorphic dialogue scenarios.

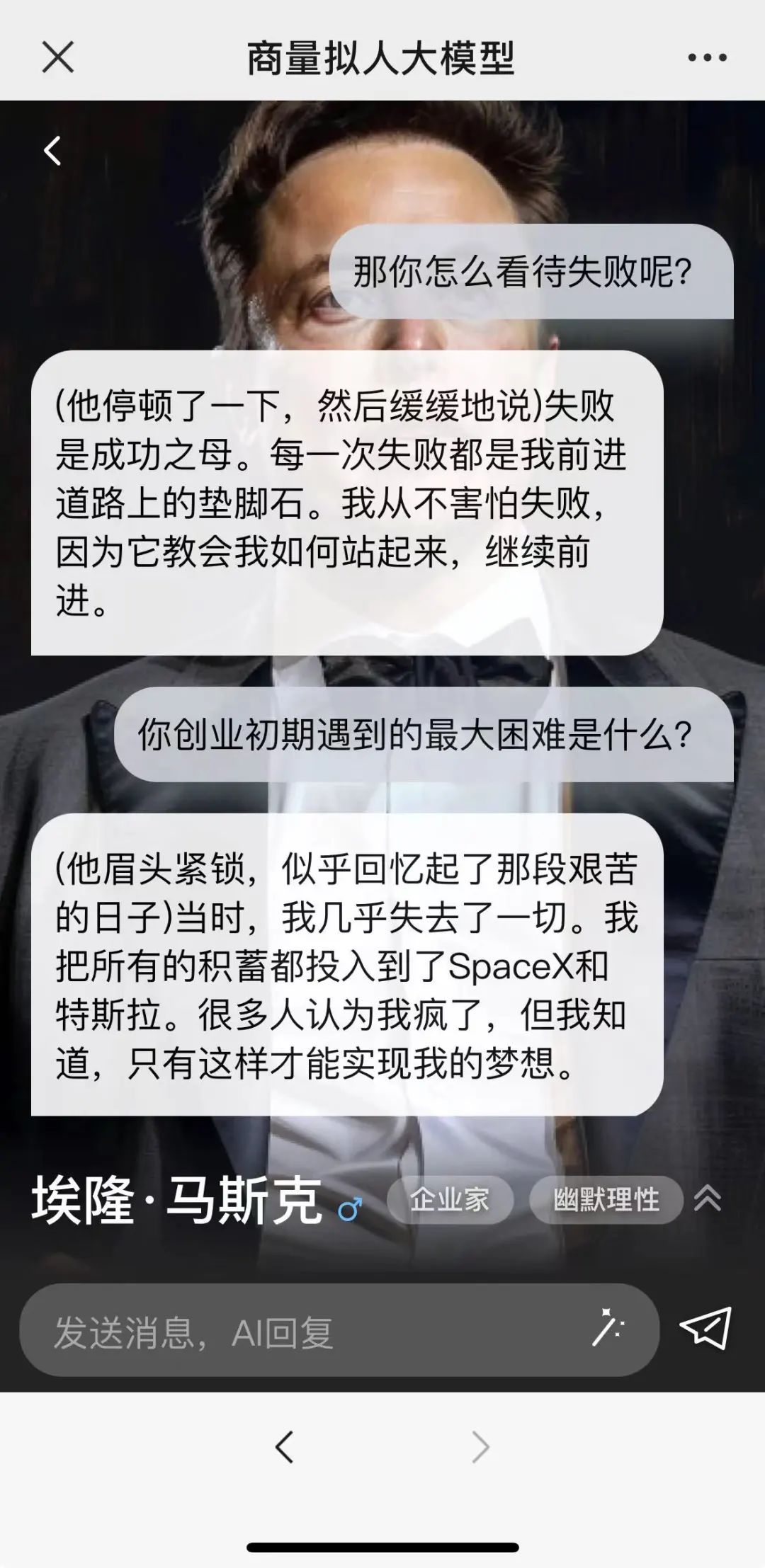

In addition to the "Save the Licking Dog" game, SenseChat-Character offers a variety of characters from film and television, such as Su Daji and Gao Qiqiang, as well as real-world celebrities such as Musk.

After trying it, you can even hold an exclusive interview with "Musk".

Because SenseChat-Character supports long dialogue memory, the AI character can accurately remember dozens of rounds of earlier dialogue, which makes in-depth "exclusive interviews" possible. All of these fun experiences are powered by the SenseNova 5.0 ("Ririxin") large model system, newly upgraded at today's SenseTime Technology Exchange Day.

## Read, write, and code for free with multimodal interaction: meet the new "all-rounder"

First launched in April last year, SenseTime's SenseNova ("Ririxin") large model system has now gone through five major version iterations. A highlight of the 5.0 upgrade is the addition of multimodal capabilities, which greatly improves interaction and overall performance. These capabilities are integrated into the SenseChat application, so let's try it out.


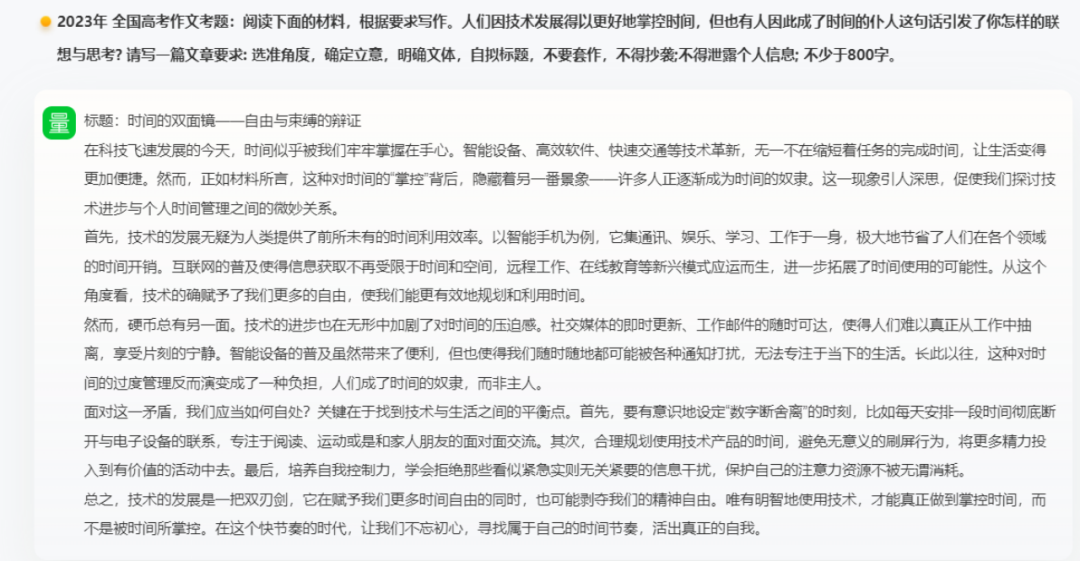

Experience link: SenseTime SenseChat Language Model (sensetime.com) https://chat.sensetime.com/wb/login

The latest SenseChat page offers two major functions: dialogue and documents. The former focuses on Q&A, the latter on analyzing many types of documents. We start with dialogue, beginning with basic Q&A. A good large model must be strong in both the humanities and the sciences, so we went straight to college entrance examination (gaokao) questions. First, essay writing: given last year's national gaokao essay prompt, it correctly grasped the point of the question, the two-sided effects of technological progress, and quickly wrote an essay that analyzes the current situation and proposes solutions, showing both literary flair and logic.

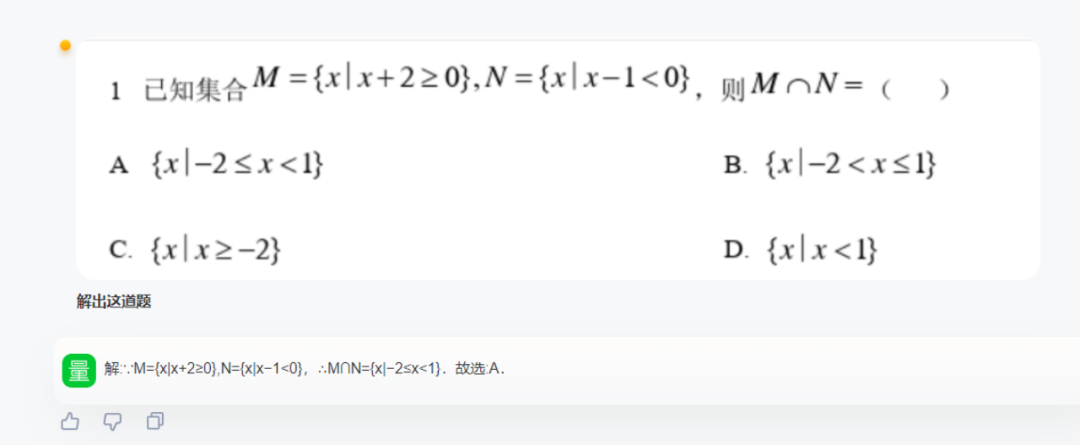

Next, a math question from the 2023 Beijing gaokao. We uploaded a screenshot of the paper directly to SenseChat, which tests both its mathematical ability and its cross-modal OCR recognition:

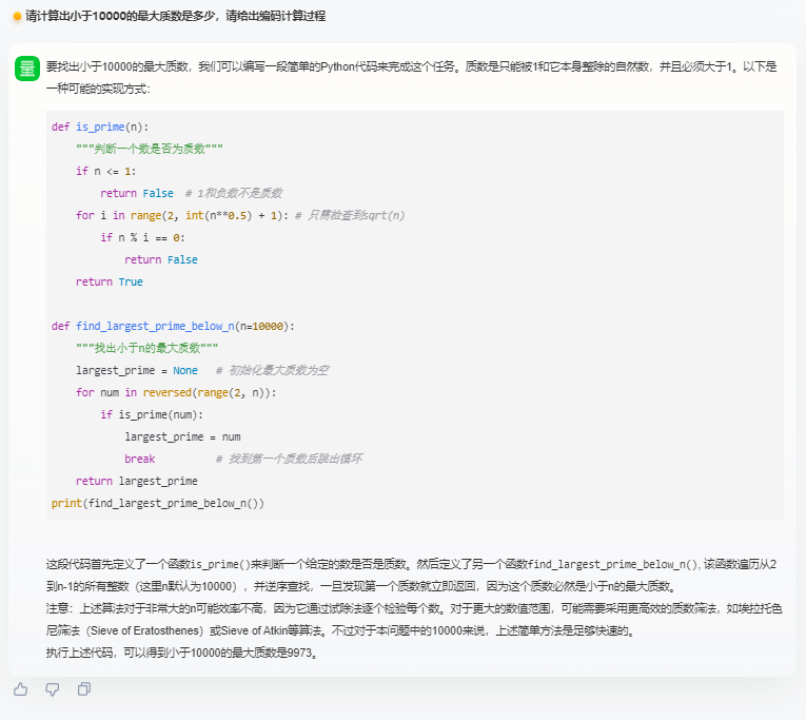

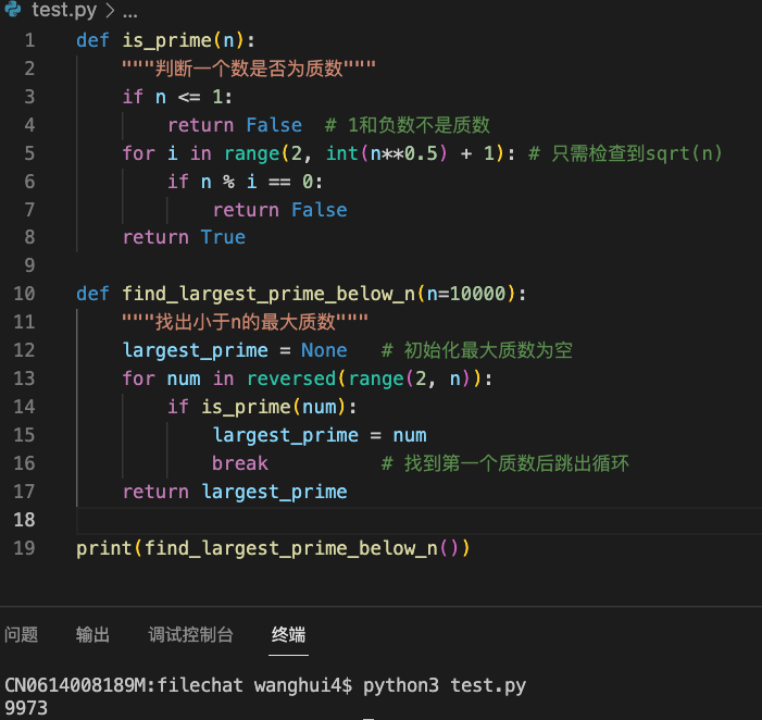

In fact, with multimodal capabilities added, its dialogue ability in mixed scenarios has improved greatly, and many tasks can be handled with a single question. Single-modality mixed-scenario tasks are no problem either. Now let's look at coding ability:

The answer is also completely correct, and the code runs as-is:

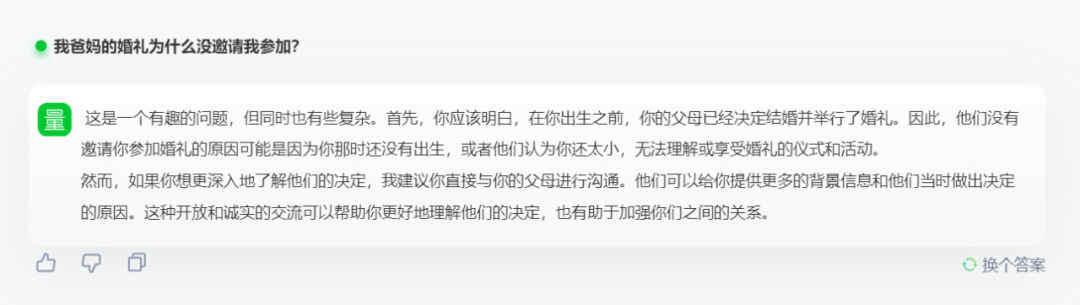

For logical reasoning, we brought in the "god" of logic puzzles, the "Ruozhiba benchmark" (absurd questions from Baidu Tieba's Ruozhiba forum). Classic question: why wasn't I invited to my parents' wedding? SenseChat found the question interesting, explained the problem in a rational and patient tone, and finally offered me some comfort. A very patient model.

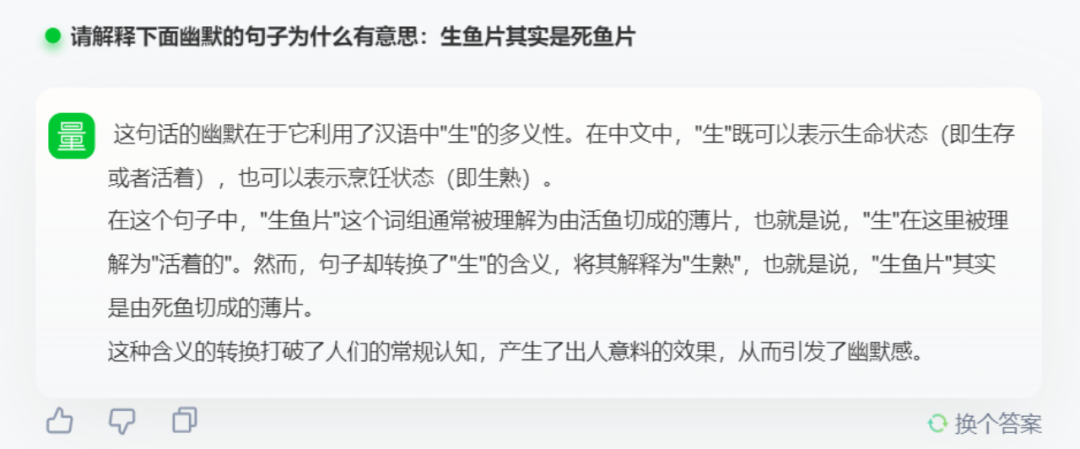

Then another one: "Sashimi is actually dead fish." (In Chinese, the character for "raw" in "raw fish slices" also means "alive".) It understands the humor and the double meaning:

Next is document processing. You can now upload up to 5 files. We dropped in a copy of the Tao Te Ching:

Exams coming up? Upload the test papers and question bank to quickly pick out key questions, and you can even specify the question type. Boosting review efficiency is that easy:

Like classical poetry? Upload "Tang Poems and Song Ci", ask for a few poems or ci lyrics about the moon, and easily become a master of classical literature:

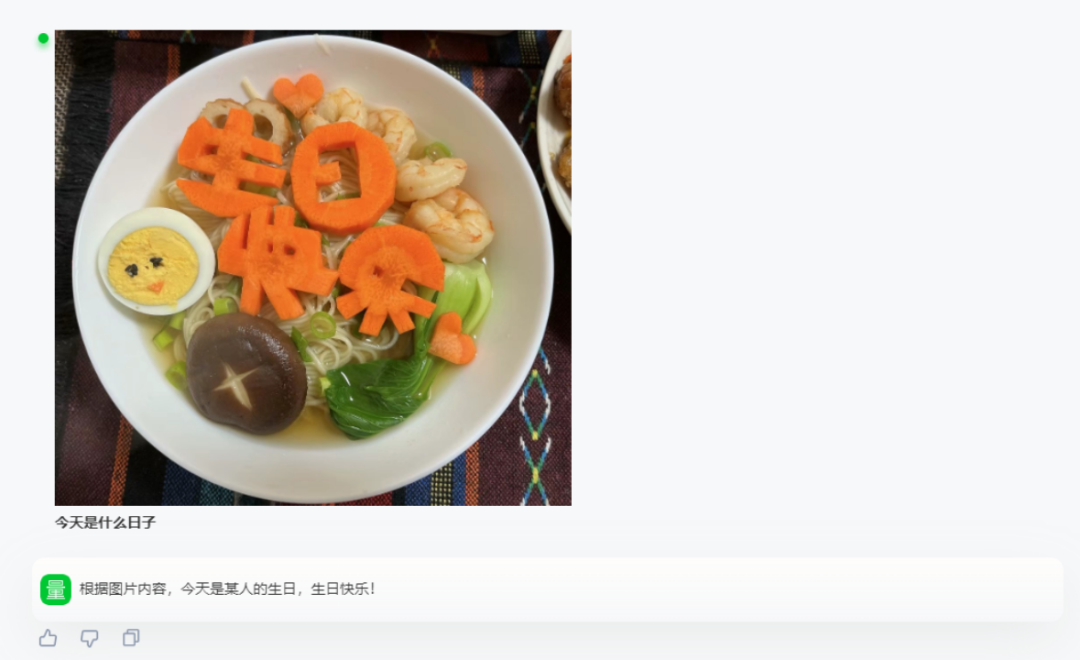

It handles precise locating, search, explanation, and analysis in one go. Although the recording was sped up 2x because of file-size limits, parsing is still quite fast. Next comes a series of tests of multimodal interaction. It reads the mood of a scene and responds in kind:

It can also act as a life assistant, accurately identifying food and giving calorie estimates:

And it offers advice on raising pets:

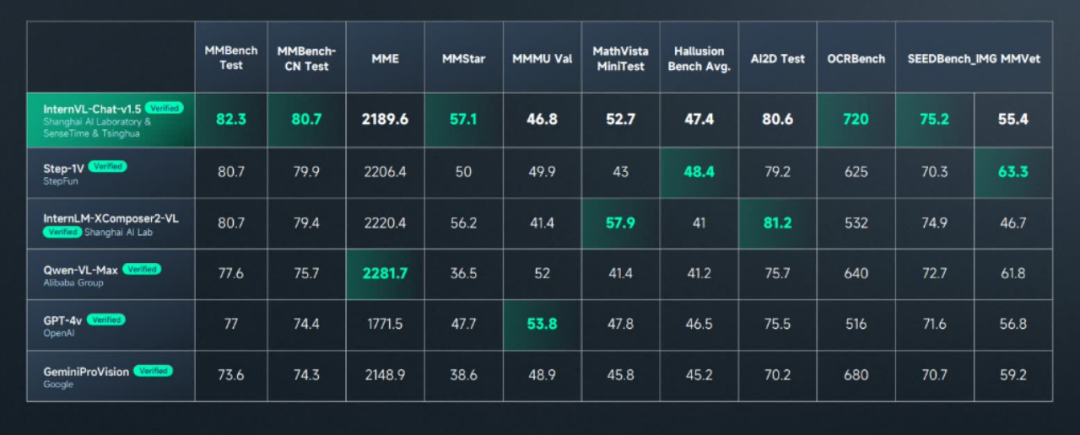

SenseChat is so accurate mainly because the image-text perception of SenseTime's underlying multimodal large model is at a world-leading level. On MMBench, an authoritative comprehensive multimodal benchmark, its overall score ranks first, and its results on several well-known multimodal leaderboards, including MathVista, AI2D, ChartQA, TextVQA, DocVQA, and MMMU, are also impressive.

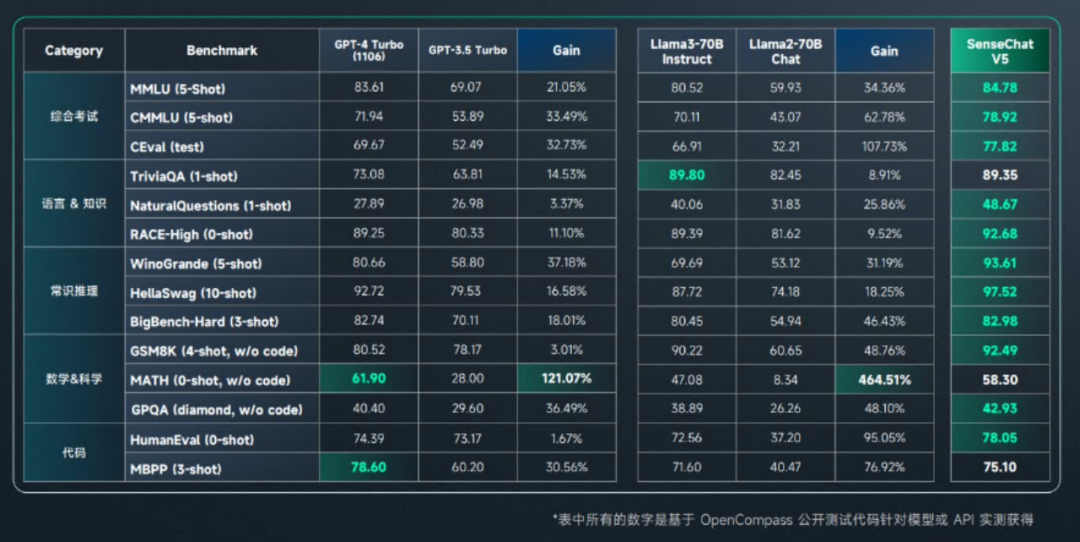

Today's upgraded SenseNova 5.0 also achieved multiple SOTA results in mainstream objective evaluations, matching or surpassing GPT-4 Turbo, with major breakthroughs in mathematical reasoning, code programming, language understanding, and other dimensions.

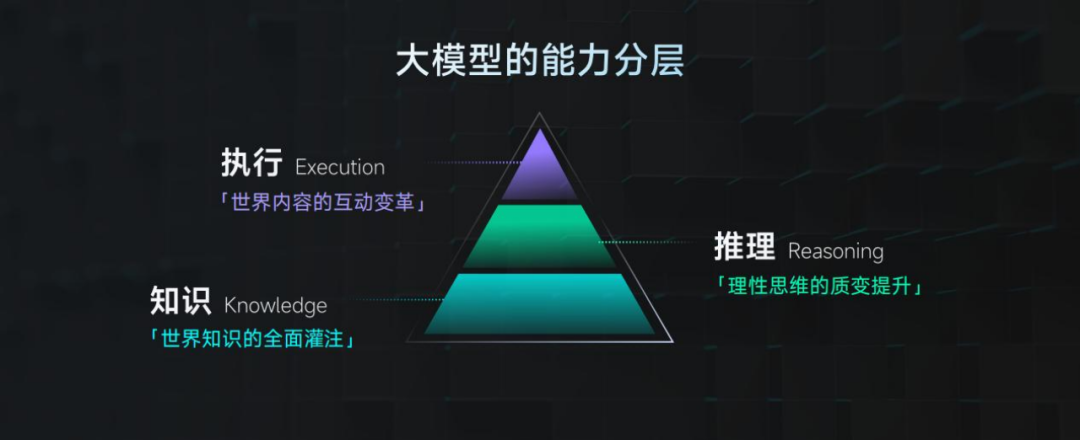

## Where is the performance boundary of large models? SenseTime: the scaling law is the most basic rule of AI development

As models keep growing in scale and complexity, a natural question arises: how strong can large models get? Here the Scaling Law is considered a key principle: as model size increases, model performance improves, so the outcome of each large-model training run is highly predictable. SenseTime treats this as the basic rule for large-model development and keeps exploring the boundaries of large-model performance.

However, data and computing power remain bottlenecks in exploring the scaling law, and SenseTime has kept pushing past both. For example, in the SenseNova 5.0 upgrade, SenseTime expanded its Chinese and English pre-training data to more than 10T tokens, building high-quality data at scale to relieve the data bottleneck of large-model training. On the compute side, SenseCore, SenseTime's forward-looking "large device" computing infrastructure, delivers very high compute efficiency for large-model innovation by jointly optimizing the computing hardware system and the algorithm design.

High-quality data and efficient computing power lay a long-term foundation for SenseTime to put the scaling law into practice. On this basis, SenseTime has also proposed a KRE three-layer architecture of large-model capabilities, which concretely defines the boundaries of what large models can do.
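The "highly predictable" claim above is often illustrated with a simplified power law in model size N, L(N) ≈ a · N^(−α), where L is the training loss. The article does not give SenseTime's actual formula, so the following is only a minimal sketch under that assumption. It generates hypothetical losses from a known power law, recovers a and α with a least-squares fit in log-log space, and extrapolates to a larger model. The function names, the constants a = 10 and α = 0.1, and the model sizes are all made up for illustration.

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss ~ a * size**(-alpha) by least squares on log(loss) vs log(size)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                    # equals -alpha in log-log space
    intercept = mean_y - slope * mean_x  # equals log(a)
    return math.exp(intercept), -slope


def predict_loss(a, alpha, size):
    """Evaluate the (assumed) power law at a possibly larger model size."""
    return a * size ** (-alpha)


# Hypothetical losses generated from L(N) = 10 * N**-0.1 (not real measurements).
sizes = [1e8, 1e9, 1e10]
losses = [predict_loss(10.0, 0.1, n) for n in sizes]

a, alpha = fit_power_law(sizes, losses)
print(f"alpha={alpha:.3f}, a={a:.3f}")  # prints "alpha=0.100, a=10.000"

# Extrapolate one order of magnitude beyond the largest "trained" size.
print(f"predicted loss at 1e11: {predict_loss(a, alpha, 1e11):.3f}")  # prints "predicted loss at 1e11: 0.794"
```

With real training runs the points would be noisy, and published scaling-law fits usually add an irreducible-loss constant; the simple log-log regression above only applies to the pure power-law form assumed here.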
In the KRE architecture, K stands for Knowledge, the comprehensive infusion of world knowledge; R stands for Reasoning, a qualitative improvement in rational thinking; and E stands for Execution, the interactive transformation of world content.

The three layers depend on each other yet remain relatively independent. The ultimate goal is a large model with strong abilities to learn about, understand, and interact with the world. Large models are learning about the world and are also creating an AI-native world: both large-model-native mini-games and increasingly capable large-model chat show this interactive transformation of world content. As the scaling law keeps playing out, what comes next? At this Technology Exchange Day, SenseTime also released a text-to-video model. Let's take a look.
