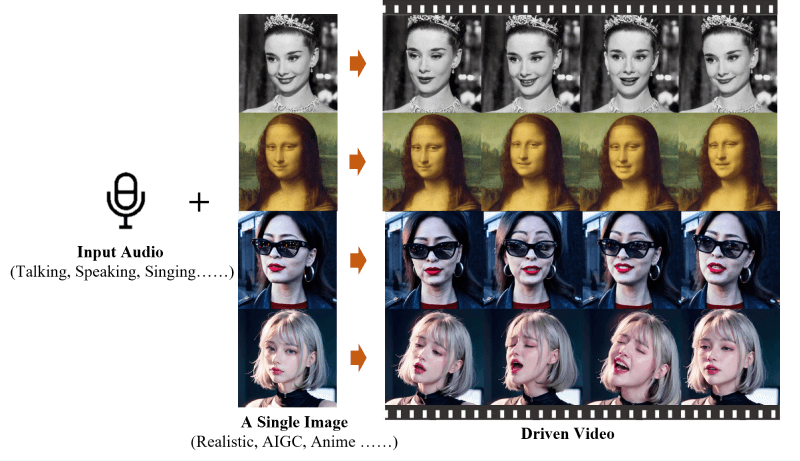

"This site reported on April 25 that EMO (Emote Portrait Alive) is a framework developed by Alibaba Group's Institute for Intelligent Computing. It is an audio-driven AI portrait video generation system that takes a single reference image and a voice audio clip as input and generates videos with expressive facial expressions and varied head poses."

Alibaba Cloud announced today that EMO, the AI model developed by its laboratory, has officially launched on the Tongyi App and is free for all users. With this feature, users can choose a template from songs, popular memes, and emoticons, then upload a portrait photo and let EMO synthesize a singing video.

According to the announcement, the Tongyi App has launched more than 80 EMO templates in the first batch, including popular songs such as "Up Spring Mountain" and "Wild Wolf Disco", as well as popular Internet memes such as "Bobo Chicken" and "Back Hand Digging". Custom audio is not yet supported.

This site has attached the official EMO links below:

Official project homepage: https://humanaigc.github.io/emote-portrait-alive/

arXiv Research Paper: https://arxiv.org/abs/2402.17485

GitHub: https://github.com/HumanAIGC/EMO (model and source code to be open-sourced)
