In recent years, the popularity of world models seems to play some crucial role in robot operation. For embodied intelligence, manipulation is the most important point to break through at this stage. Especially for the following long horizon tasks, how to build a robot "cerebellum" to achieve various complex operating requirements is the most urgent problem that needs to be solved at the moment.

When using LM to apply on robots, a common approach is to provide various APIs in the context, and then let LLM automatically write planning code according to the task prompt. Please refer to the article:

The advantage of this method is that it is very intuitive and can clearly grasp the disassembly logic of the task, such as moving to A, picking up B, moving to C, and putting down B. But the premise of this operation is to be able to split the entire task into atomic operations (move, grab, place, etc.). But if it is a more complex task, such as folding clothes, it is naturally difficult to split the task, so what should we do at this time? In fact, for manipulation, we should face a lot of tasks that are long horizon and difficult to split.

manipulation for long horizon that is difficult to split Task , a better way to deal with it is to study imitation learning, such as diffusion policy or ACT, to model and fit the entire operation trajectory. However, this method will encounter a problem, that is, there is no way to handle the cumulative error well - and the essence of this problem is the lack of an effective feedback mechanism.

Let’s take folding clothes as an example. When people fold clothes, they will actually constantly adjust their operating strategies based on the changes in the clothes they see visually, and finally fold the clothes to the desired look. There is actually a relatively implicit but very important point in this: people roughly know what kind of operations will cause what kind of changes in clothing. Then going one step further, people actually have a model about clothing deformation, and can roughly know what kind of input will lead to changes in the state (clothing placement) (the visual level is the pixel level), and more specifically, you can Expressed as:

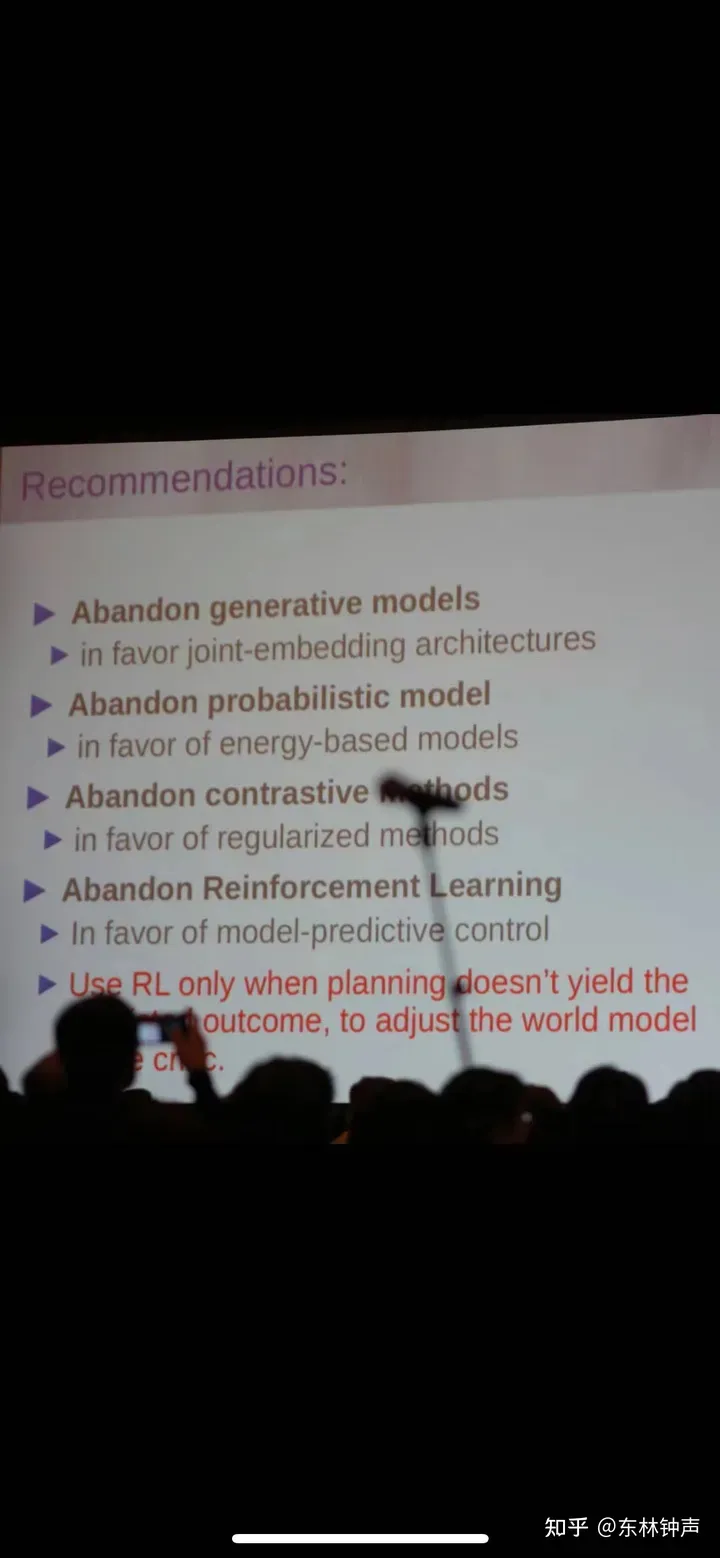

SORA actually gave me a shot in the arm, that is, as long as there is enough data, I can use the transformer diffusion layer to hard train one that can understand and predict changes. Model f. Assuming that we already have a very strong model f that predicts changes in clothing with operations, then when folding clothes, we can build a visual servo through pixel-level clothing status feedback and the idea of Model Predictive Control (Visual Servo) strategy to fold clothes to the state we want. This has actually been verified by some of LeCun’s recent “violent discussions”:

The above is the detailed content of Some thoughts on the world model for robot operation. For more information, please follow other related articles on the PHP Chinese website!