WizardLM-2, which is 'very close to GPT-4', was urgently withdrawn by Microsoft. What's the inside story?

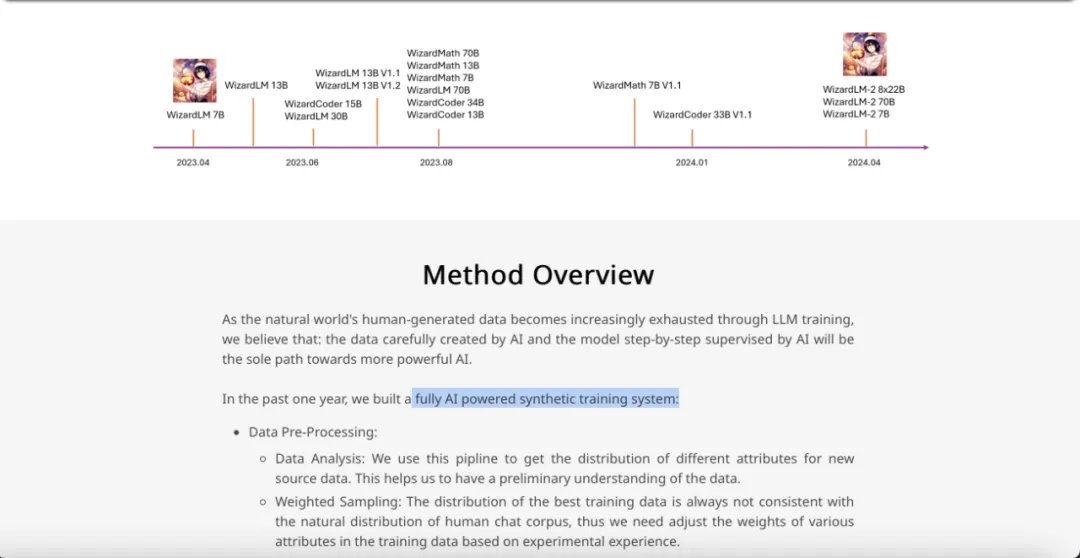

Some time ago, Microsoft scored an own goal: it open-sourced WizardLM-2 with great fanfare, then pulled it completely shortly afterward.

According to the WizardLM-2 release information that can still be found, this is an open source large model "truly comparable to GPT-4", with improved performance in complex chat, multilingual tasks, reasoning, and agent use.

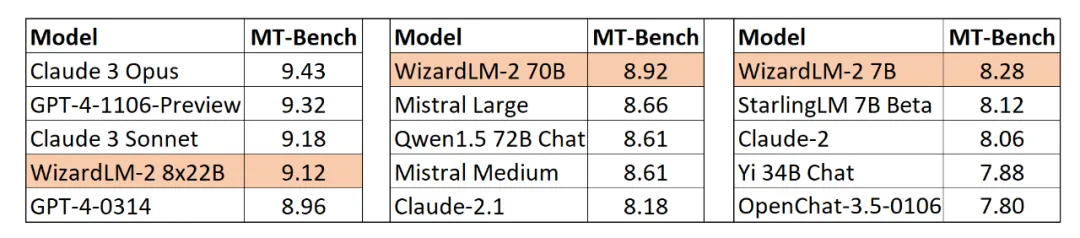

The series includes three models: WizardLM-2 8x22B, WizardLM-2 70B and WizardLM-2 7B. Among them:

- WizardLM-2 8x22B is the most advanced model and, according to internal evaluation, the best open source LLM for highly complex tasks;

- WizardLM-2 70B has top-tier reasoning capabilities and is the first choice at its scale;

- WizardLM-2 7B is the fastest, with performance comparable to existing leading open source models that are 10 times larger.

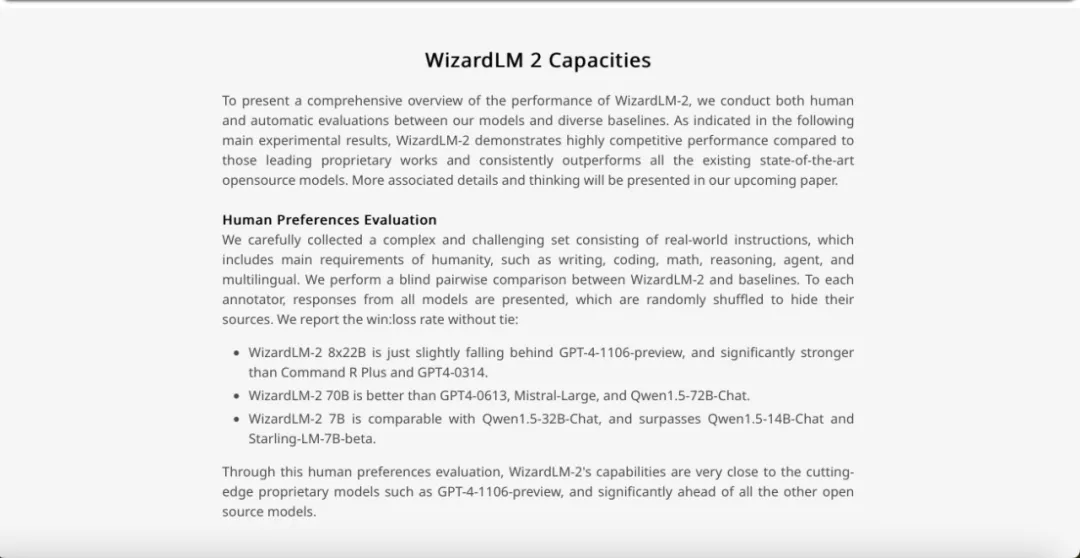

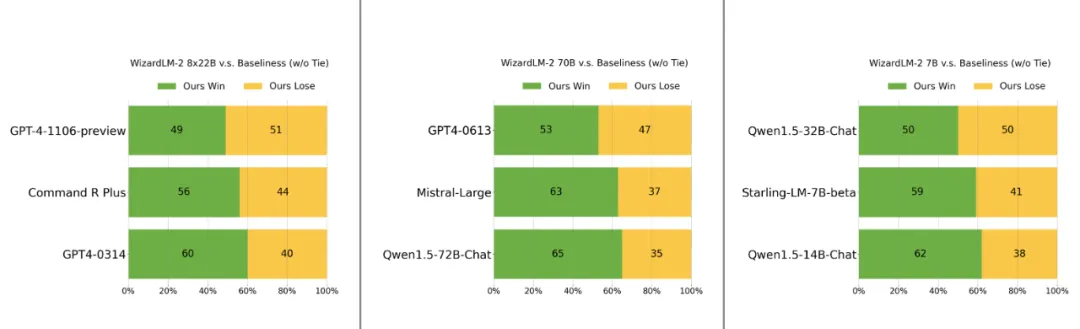

Additionally, based on human preference evaluation, WizardLM-2 8x22B's capabilities "were only slightly behind the GPT-4-1106 preview, but significantly stronger than Command R Plus and GPT4-0314."

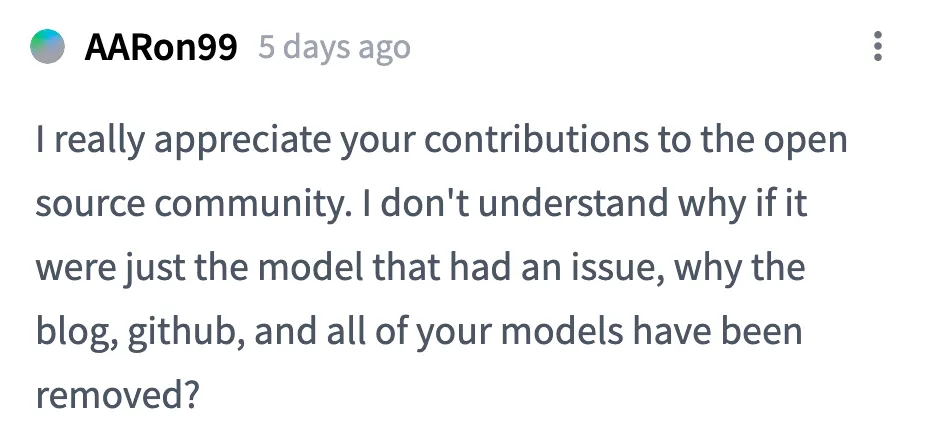

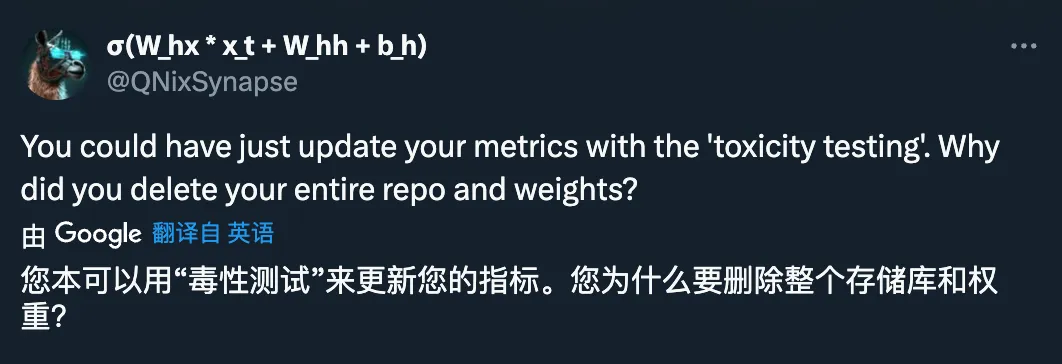

While everyone was busy downloading the model, the team suddenly withdrew everything: the blog, GitHub, and HuggingFace pages all returned 404.

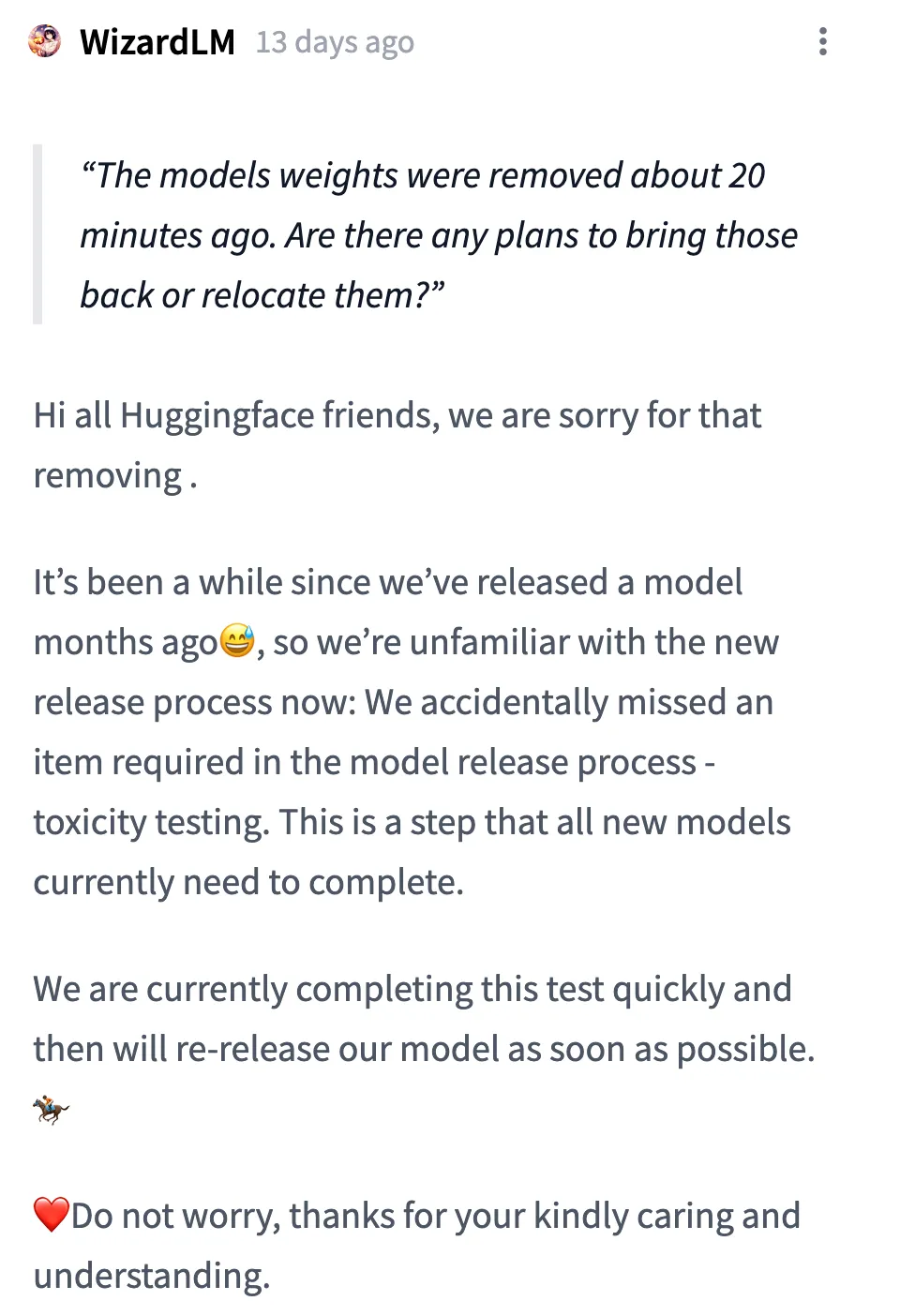

The team's explanation is:

Hello to all Huggingface friends! Sorry, we removed the model. It has been a few months since we last released a model, so we were not familiar with the new release process: we accidentally left out a necessary item in the model release process, toxicity testing. This is a step that all new models currently need to complete.

We are currently completing this test quickly and will re-release our model as soon as possible. Don't worry, thank you for your concern and understanding.

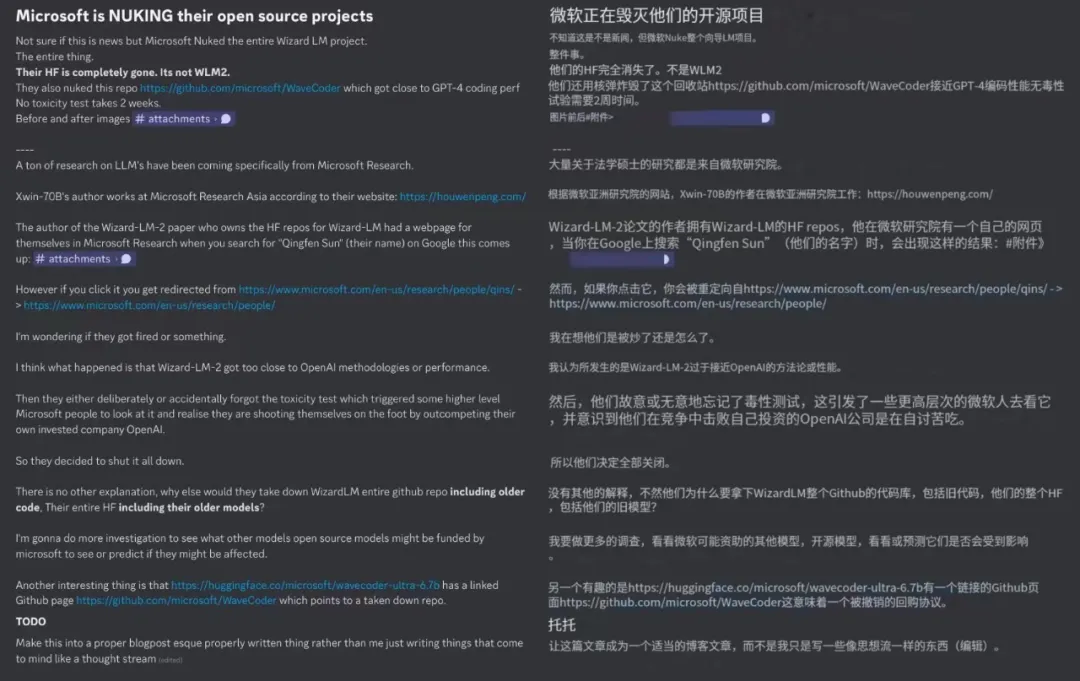

There is also speculation that the team behind WizardLM has been fired, and that the withdrawal of the Wizard series projects was forced on them.

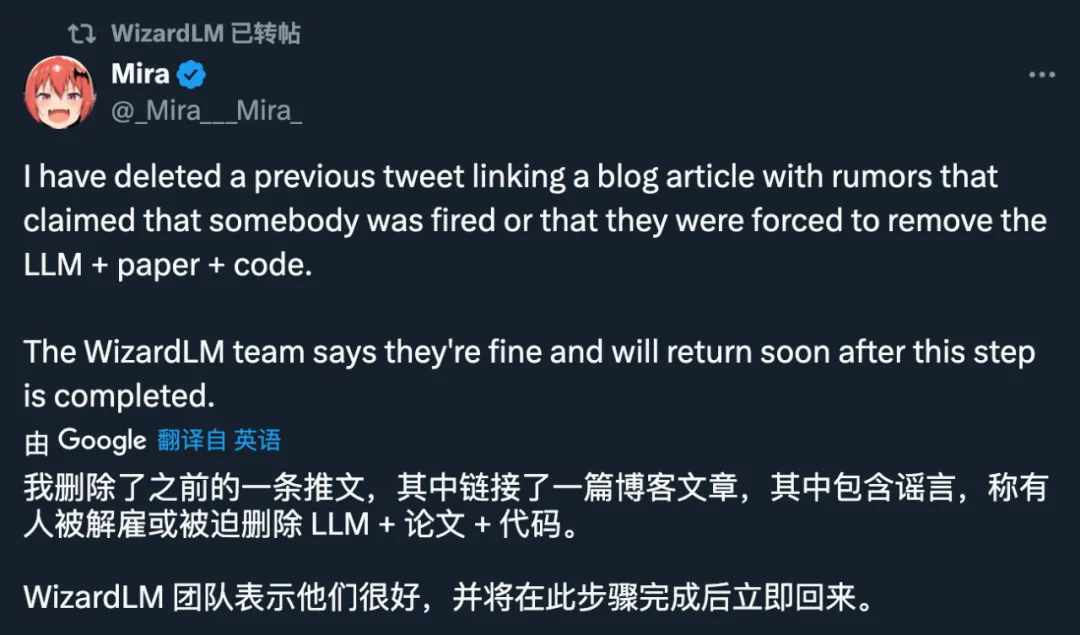

However, this speculation was denied by the team:

Picture source: https://x.com/_Mira___Mira_/status/1783716276944486751

Picture source: https://x.com/DavidFSWD/status/1783682898786152470

And a search for the author's name today shows that it has not completely disappeared from Microsoft's official website:

Image source: https://www.microsoft.com/en-us/research/people/qins/

Others speculate that Microsoft withdrew this open source model for two reasons: first, its performance is too close to GPT-4, and second, it "collides" with OpenAI's technical route.

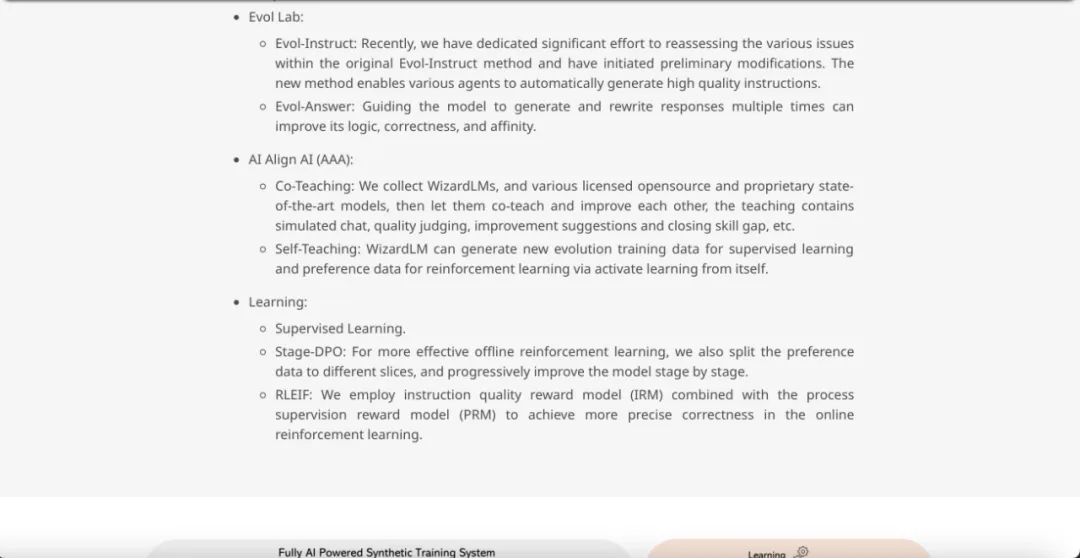

What is the specific route? We can take a look at the technical details of the original blog page.

The team stated that as LLMs are trained, naturally occurring human-generated data is increasingly exhausted, and that data carefully created by AI and models supervised step by step by AI will be the only path to more powerful AI.

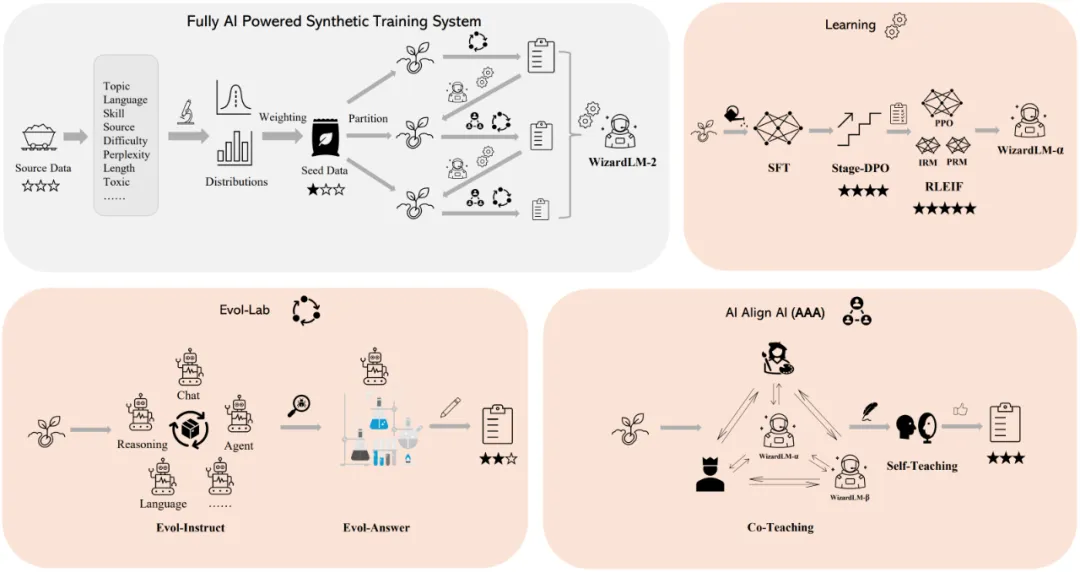

Over the past year, the Microsoft team has built a synthetic training system fully powered by artificial intelligence, as shown in the figure below.

It is roughly divided into several sections:

Data preprocessing:

- Data analysis: Use this pipeline to obtain the distribution of different attributes of new source data, which gives a preliminary understanding of the data.

- Weighted sampling: The distribution of the best training data is often inconsistent with the natural distribution of human chat corpus, and the weight of each attribute in the training data needs to be adjusted based on experimental experience.
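The two preprocessing steps above can be illustrated with a small sketch. This is not the team's pipeline, whose details were not published in the article. It assumes each record carries a single categorical attribute, and the names `attribute_distribution`, `weighted_sample`, and the `target` mix are hypothetical, chosen only for illustration.

```python
import random
from collections import Counter


def attribute_distribution(records, key):
    """Data analysis step: share of each attribute value in the source data."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


def weighted_sample(records, key, target, n, seed=0):
    """Weighted sampling step: draw n records (with replacement) so the
    attribute mix moves from the natural distribution toward `target`.

    `target` is a hand-tuned mix (an assumption here, standing in for the
    "experimental experience" mentioned in the article).
    """
    natural = attribute_distribution(records, key)
    # Each record is weighted by target share / natural share of its attribute.
    weights = [target.get(r[key], 0.0) / natural[r[key]] for r in records]
    rng = random.Random(seed)
    return rng.choices(records, weights=weights, k=n)


# Toy corpus: 90% chat, 10% code, resampled toward an even mix.
corpus = [{"topic": "chat"}] * 90 + [{"topic": "code"}] * 10
sample = weighted_sample(corpus, "topic", {"chat": 0.5, "code": 0.5}, n=2000)
print(attribute_distribution(sample, "topic"))
```

Sampling with replacement keeps the sketch short; a real pipeline would more likely deduplicate or cap how often a rare record can be repeated.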

Evol Lab:

- Evol-Instruct: A lot of effort has been invested in re-evaluating the various problems in the original Evol-Instruct method and making preliminary modifications to it. The new method allows various agents to automatically generate high-quality instructions.

- Evol-Answer: Guide the model to generate and rewrite responses multiple times, which can improve its logic, correctness and affinity.
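The generate-then-rewrite loop described for Evol-Answer can be sketched as follows. The article does not say how the model is prompted or how many rounds are used, so `generate`, `rewrite`, and `rounds` are hypothetical placeholders for whatever model calls a real system would make.

```python
from typing import Callable


def evolve_answer(
    instruction: str,
    generate: Callable[[str], str],
    rewrite: Callable[[str, str], str],
    rounds: int = 3,
) -> str:
    """Produce a first answer, then ask the model to rewrite it several times.

    `generate` and `rewrite` are stand-ins (assumptions) for model calls.
    """
    answer = generate(instruction)
    for _ in range(rounds):
        # Each round sees the instruction and the current draft.
        answer = rewrite(instruction, answer)
    return answer


# Stub callables so the sketch runs without a model.
draft = evolve_answer(
    "Explain DPO briefly.",
    generate=lambda ins: "draft",
    rewrite=lambda ins, ans: ans + " (revised)",
    rounds=2,
)
print(draft)
```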

AI Align AI (AAA):

- Co-teaching: Collect WizardLM and various licensed state-of-the-art models, both open source and proprietary, and let them teach and improve each other through simulated chats, quality critiques, improvement suggestions, and closing skill gaps.

- Self-Teaching: WizardLM can generate new evolutionary training data for supervised learning and preference data for reinforcement learning through active learning from itself.

Learning:

- Supervised learning.

- Stage-DPO: To perform offline reinforcement learning more effectively, the preference data is divided into different slices and the model is improved stage by stage.

- RLEIF: Uses a method that combines the instruction quality reward model (IRM) and the process supervision reward model (PRM) to achieve more precise correctness in online reinforcement learning.
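The stage-by-stage idea behind Stage-DPO can be sketched as a loop over slices of preference data. This is only an illustration under stated assumptions: the article does not describe how the slices are chosen or what happens between stages, so `split_into_slices`, `stage_wise_training`, and the `update` callback (a stand-in for one round of DPO training) are hypothetical, and the sketch simply carries the model from one stage into the next.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

# (prompt, chosen response, rejected response)
Pair = Tuple[str, str, str]
Model = TypeVar("Model")


def split_into_slices(pairs: Sequence[Pair], num_slices: int) -> List[List[Pair]]:
    """Split preference pairs into contiguous, near-equal slices."""
    if num_slices <= 0:
        raise ValueError("num_slices must be positive")
    size, extra = divmod(len(pairs), num_slices)
    slices, start = [], 0
    for i in range(num_slices):
        # The first `extra` slices take one additional pair.
        end = start + size + (1 if i < extra else 0)
        slices.append(list(pairs[start:end]))
        start = end
    return slices


def stage_wise_training(
    model: Model,
    pairs: Sequence[Pair],
    num_slices: int,
    update: Callable[[Model, List[Pair]], Model],
) -> Model:
    """Improve the model one slice at a time; `update` is a hypothetical
    placeholder for a DPO training round on that slice."""
    for stage_slice in split_into_slices(pairs, num_slices):
        model = update(model, stage_slice)
    return model


# Stub run: the "model" is just a count of pairs seen so far.
pairs = [(f"prompt {i}", "good", "bad") for i in range(10)]
seen = stage_wise_training(0, pairs, num_slices=3, update=lambda m, s: m + len(s))
print(seen)
```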

Finally, speculation alone settles nothing; let us look forward to the comeback of WizardLM-2.
