Understanding GraphRAG (1): Challenges of RAG

RAG (Retrieval-Augmented Generation) is a method of enhancing existing large language models (LLMs) with external knowledge sources so that they provide answers that are more relevant to the context. In RAG, the retrieval component fetches additional information from specific sources. This information is then fed into the LLM prompt so that the LLM's response is grounded in it (the augmentation phase). RAG is more economical than alternatives such as fine-tuning. By supplying this additional context, it also helps reduce hallucinations. This has made RAG a standard workflow for many of today's LLM tasks, such as recommendation, text extraction, and sentiment analysis.
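The minimal Python sketch below makes the retrieval and augmentation phases concrete. It assumes a tiny in-memory list of documents and uses plain word overlap in place of a real retriever such as a vector database. The function names are hypothetical. No model is called here; the resulting prompt would be passed to whichever LLM you use.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval phase: rank documents by the number of query words they share.

    Word overlap is a stand-in for a real retriever such as a vector database.
    """
    query_terms = set(tokenize(query))
    scored = [(len(query_terms & set(tokenize(doc))), doc) for doc in documents]
    # Highest score first; reverse=True keeps ties in their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_augmented_prompt(query: str, context: list[str]) -> str:
    """Augmentation phase: place the retrieved text into the LLM prompt."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


docs = [
    "Oak bark is thick and rough.",
    "Dogs bark at strangers.",
    "Paris is the capital of France.",
]
question = "What is the capital of France?"
# This prompt would then be sent to the LLM of your choice (not shown).
print(build_augmented_prompt(question, retrieve(question, docs, k=1)))
```

With these three documents, the question about France retrieves only the matching sentence. The LLM would then answer from that grounded prompt rather than from its training data alone.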

If we break this idea down further, we typically query a vector database based on the user's intent. Vector databases use a continuous vector space to capture the relationships between concepts, and they exploit those relationships through proximity-based search.

Vector Database Overview

In a vector space, any type of information (text, images, audio, or anything else) is transformed into a vector. Vectors are numerical representations of data in a high-dimensional space. Each dimension corresponds to a feature of the data, and the value in each dimension reflects the strength or presence of that feature. With this representation, we can apply mathematical operations, distance calculations, and similarity comparisons to the data. Taking text data as an example, each document can be represented as a vector in which each dimension is the frequency of one word in the document. Two documents can then be compared by calculating the distance between their vectors.

A proximity-based search queries the database with another vector and looks for vectors that are "close" to it in vector space. Proximity is usually determined by distance or similarity measures such as Euclidean distance, Manhattan distance, or cosine similarity.
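The word-frequency representation and the three measures named above can be sketched in a few lines of Python. This is an illustration under simplifying assumptions: whitespace tokenization, and raw counts instead of the learned embeddings most systems use. The function names are hypothetical. Note that cosine similarity grows as vectors get closer, while Euclidean and Manhattan distance shrink.

```python
import math
from collections import Counter


def term_frequency_vectors(docs: list[str]) -> tuple[list[str], list[list[int]]]:
    """Represent each document as word counts over a shared, sorted vocabulary."""
    counts = [Counter(doc.lower().split()) for doc in docs]
    vocabulary = sorted(set().union(*counts))  # one dimension per distinct word
    vectors = [[c[word] for word in vocabulary] for c in counts]
    return vocabulary, vectors


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance: smaller means closer."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan_distance(a: list[float], b: list[float]) -> float:
    """Sum of absolute per-dimension differences: smaller means closer."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between vectors: larger (at most 1) means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


docs = ["the dog barks", "the dog barks loudly", "oak bark is rough"]
vocab, vecs = term_frequency_vectors(docs)
print(vocab)
print(euclidean_distance(vecs[0], vecs[1]), manhattan_distance(vecs[0], vecs[1]))
print(round(cosine_similarity(vecs[0], vecs[1]), 3), cosine_similarity(vecs[0], vecs[2]))
```

"the dog barks" and "the dog barks loudly" differ in one dimension. Their Euclidean and Manhattan distances are both 1, and their cosine similarity is about 0.87. "the dog barks" and "oak bark is rough" share no words, so their cosine similarity is 0. That last pair also shows a limit of raw counts: "bark" and "barks" land in different dimensions.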

When you search a vector database, you provide a query that the system converts into a vector. The database then calculates the distance or similarity between this query vector and the vectors already stored in the database. The vectors closest to the query vector (according to the chosen metric) are considered the most relevant results.
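The search procedure described above can be sketched as a brute-force nearest-neighbour scan. The three-dimensional vectors below are made-up stand-ins for the embeddings a real system would produce with an embedding model. `nearest` is a hypothetical helper. Production vector databases typically use approximate nearest-neighbour indexes rather than scoring every stored vector.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def nearest(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Score every stored vector against the query and return the k most similar."""
    scores = ((doc_id, cosine_similarity(query, vec)) for doc_id, vec in store.items())
    return heapq.nlargest(k, scores, key=lambda item: item[1])


# Made-up 3-dimensional "embeddings"; real ones come from an embedding model.
store = {
    "oak": [0.9, 0.1, 0.0],
    "dog": [0.1, 0.9, 0.2],
    "cat": [0.0, 0.8, 0.4],
}
query_vector = [0.2, 0.9, 0.1]  # pretend this is the embedded user query
for doc_id, score in nearest(query_vector, store, k=2):
    print(doc_id, round(score, 3))
```

The query vector is most similar to "dog" and then to "cat", so those two IDs are returned as the most relevant results.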

Proximity-based search is particularly powerful in vector databases and is suitable for tasks such as recommendation systems, information retrieval, and anomaly detection.

This approach enables the system to operate more intuitively and respond to user queries more effectively by understanding the context and deeper meaning in the data, rather than relying solely on surface matches.

However, applications that rely on vector databases for advanced search face some limitations, such as data quality, the ability to handle dynamic knowledge, and transparency.

Limitations of RAG

RAG is applied in different ways depending on the size of the documents. If a document is small, it can be supplied directly in the model's context. If a document is very large (or there are multiple documents), it is split into smaller chunks that are indexed, and the relevant chunks are retrieved at query time to answer the query.
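The size-based choice described above might look like the following sketch. It assumes word counts as a rough proxy for model tokens. The thresholds (`max_context_words`, `chunk_size`, `overlap`) are illustrative values, not recommendations from the original article, and the function names are hypothetical. Overlapping chunks are a common design choice: text near a chunk boundary keeps some of its surrounding context.

```python
def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of chunk_size words; neighbours share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # last window reached the end
            break
    return chunks


def prepare_context(text: str, max_context_words: int = 200) -> list[str]:
    """Small document: pass it whole. Large document: chunk it for indexing."""
    if len(text.split()) <= max_context_words:
        return [text]
    return chunk_text(text)


long_text = " ".join(f"w{i}" for i in range(120))
for chunk in chunk_text(long_text, chunk_size=50, overlap=10):
    print(chunk.split()[0], "...", chunk.split()[-1])
```

A 120-word text with 50-word chunks and a 10-word overlap yields three chunks, starting at words 0, 40, and 80.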

Despite its success, RAG has some shortcomings.

The two main indicators used to measure the performance of RAG are perplexity and hallucination. Perplexity can be read as the number of equally likely next-word choices the model is weighing during text generation, that is, how "perplexed" the language model is in its selection. Hallucinations are untrue or fabricated statements made by the AI.
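Perplexity is conventionally computed as the exponential of the average negative log-probability the model assigned to each token that actually occurred: PPL = exp(-(1/N) Σ log p(w_i | w_<i)). The sketch below assumes you already have those per-token probabilities; how you obtain them depends on your model's API. It also shows why the "number of equally likely choices" reading works. If the model spreads probability uniformly over k options at every step, the perplexity is exactly k.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability of the tokens that actually occurred."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_neg_log)


# Uniform guessing among 4 options at every step -> perplexity of 4 (up to rounding).
print(perplexity([0.25, 0.25, 0.25, 0.25]))
# A confident model (high probability on the actual tokens) -> perplexity near 1.
print(perplexity([0.9, 0.8, 0.95]))
```

Fewer plausible options per step means lower perplexity.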

While RAG helps reduce hallucinations, it does not eliminate them. With a small, concise document, you can reduce perplexity (since the LLM has few options) and reduce hallucinations (if you only ask about what is in the document). The flip side, of course, is that a single small document makes for a trivial application. More complex applications need a way to provide more context.

For example, consider the word "bark" - we have at least two different contexts:

Tree context: “The rough bark of the oak protects it from the cold.”

Dog context: “The neighbor’s dog barks loudly every time someone passes their house.”

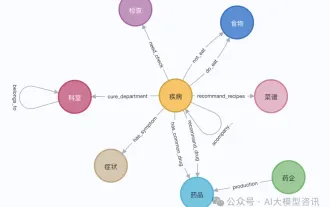

One way to provide more context is to combine RAG with a knowledge graph, an approach known as GraphRAG.

In the knowledge graph, these words are connected with their associated context and meaning. For example, "bark" would be connected to nodes representing "tree" and "dog". Other connections can indicate common actions (e.g., the tree's "protection," the dog's "making noise") or properties (e.g., the tree's "roughness," the dog's "loudness"). This structured information allows the language model to choose the appropriate meaning based on other words in the sentence or the overall theme of the conversation.
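The following toy Python sketch shows how graph connections could drive the choice of meaning. The graph contents, the relation names, and the prefix-based word matching are hypothetical simplifications for illustration. This is far simpler than a production GraphRAG pipeline, which would build and query a much larger graph.

```python
import re

# Hypothetical toy knowledge graph. Each sense of "bark" is a node whose edges
# point to related concepts, grouped by relation type as in the text above.
GRAPH: dict[str, dict[str, set[str]]] = {
    "bark (tree)": {"context": {"tree", "oak"}, "action": {"protect"}, "property": {"rough"}},
    "bark (dog)": {"context": {"dog", "neighbor"}, "action": {"noise"}, "property": {"loud"}},
}


def neighbours(sense: str) -> set[str]:
    """All concepts directly connected to a sense node, regardless of relation type."""
    return set().union(*GRAPH[sense].values())


def disambiguate(sentence: str) -> str:
    """Pick the sense with the most connected concepts present in the sentence.

    A token matches a concept if it starts with it (crude stemming, e.g.
    "protects" -> "protect", "loudly" -> "loud").
    """
    tokens = re.findall(r"[a-z]+", sentence.lower())

    def score(sense: str) -> int:
        return sum(any(t.startswith(c) for t in tokens) for c in neighbours(sense))

    return max(GRAPH, key=score)


print(disambiguate("The rough bark of the oak protects it from the cold."))
print(disambiguate("The neighbor’s dog barks loudly every time someone passes their house."))
```

Both example sentences resolve to the expected sense. The tree sentence matches "tree", "oak", "protect", and "rough". The dog sentence matches "dog", "neighbor", and "loud".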

In the next sections, we will look at the limitations of RAG and how GraphRAG addresses them.

Original title: Understanding GraphRAG – 1: The challenges of RAG

Original author: ajitjaokar

