How can OctopusV3, with less than 1 billion parameters, compare with GPT-4V and GPT-4?

Multimodal AI systems can process and learn from several types of data, including natural language, vision, and audio, and use them to guide their decisions. Research on incorporating visual data into large language models (such as GPT-4V) has recently made important progress, but effectively converting image information into executable actions for AI systems remains a challenge. A common approach is to convert image data into text descriptions and have the AI system act on those descriptions. The image-to-text mapping can be learned through supervised learning on existing image datasets. Reinforcement learning can also be used to learn how to make decisions from image information by interacting with the environment. Another method is to directly combine image information with language models to construct

In a recent paper, researchers proposed a multimodal model designed specifically for AI agent applications and introduced the concept of a "functional token".

Paper title: Octopus v3: Technical Report for On-device Sub-billion Multimodal AI Agent

Paper link: https://arxiv.org/pdf/2404.11459.pdf

Model weights and inference code: https://www.nexa4ai.com/apply

The model is designed to run on edge devices: the researchers reduced its size to under 1 billion parameters. Like GPT-4, it handles both English and Chinese. Experiments show that it runs efficiently on a range of resource-constrained devices, including the Raspberry Pi.

Research Background

The rapid development of artificial intelligence has changed the way humans and computers interact, giving rise to intelligent AI systems that can perform complex tasks and make decisions based on natural language, vision, and other forms of input. These systems are expected to automate everything from simple tasks such as image recognition and language translation to complex applications such as medical diagnosis and autonomous driving. Multimodal language models are at the core of these systems, enabling them to understand and generate near-human responses by processing and integrating multimodal data such as text, images, and even audio and video. Compared with traditional language models, which focus mainly on text processing and generation, multimodal language models are a big leap forward. By incorporating visual information, they can better understand the context and semantics of the input, which yields more accurate and relevant output. The ability to process and integrate multimodal data is crucial for AI systems that must understand language and visual information at the same time, in tasks such as visual question answering, image navigation, and multimodal sentiment analysis.

One of the challenges in developing multimodal language models is how to encode visual information in a format the model can process. This is usually done with neural network architectures such as convolutional neural networks (CNNs) and vision transformers (ViT). CNNs are widely used in computer vision tasks because they extract hierarchical features from images, learning increasingly complex representations of the input. Transformer-based architectures such as ViT have become popular in recent years because they capture long-range dependencies and model the relationships between objects in an image. These architectures enable models to extract meaningful features from input images and convert them into vector representations that can be combined with text input.

Another way to encode visual information is image tokenization, which divides the image into smaller discrete units, or tokens. This approach lets the model process images in much the same way as text, enabling a more seamless integration of the two modalities. Image tokens can be fed into the model along with text input, allowing it to attend to both modalities and produce more accurate, context-aware output. For example, the DALL-E model developed by OpenAI uses a variant of VQ-VAE (Vector Quantized Variational Autoencoder) to tokenize images, allowing the model to generate novel images from text descriptions.

Developing small, efficient models that can act on user-supplied queries and images will have profound implications for the future of AI systems. These models can be deployed on resource-constrained devices such as smartphones and IoT devices, expanding their range of applications. Drawing on the power of multimodal language models, these small systems can understand and respond to user queries in a more natural and intuitive way while taking into account the visual context the user provides. This opens up the possibility of more engaging, personalized human-machine interactions, such as virtual assistants that make visual recommendations based on user preferences, or smart home devices that adjust settings based on the user's facial expressions.
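To make the image tokenization idea from this section concrete, here is a minimal sketch in pure Python. It is not the DALL-E or Octopus v3 implementation: it assumes a tiny grayscale image, raw pixel patches instead of learned encoder features, and a hand-written two-entry codebook (`CODEBOOK` and all function names are hypothetical). It only illustrates the vector-quantization step, in which each patch is replaced by the index of its nearest codebook entry.

```python
from typing import List, Sequence

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def split_into_patches(image: Image, patch: int) -> List[List[float]]:
    """Cut the image into non-overlapping patch x patch tiles, flattened row-major."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append([
                image[r][c]
                for r in range(top, top + patch)
                for c in range(left, left + patch)
            ])
    return patches


def nearest_code(vector: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Return the index of the codebook entry closest to vector (squared L2 distance)."""
    def distance(entry: Sequence[float]) -> float:
        return sum((a - b) ** 2 for a, b in zip(vector, entry))
    return min(range(len(codebook)), key=lambda i: distance(codebook[i]))


def tokenize_image(image: Image, patch: int,
                   codebook: Sequence[Sequence[float]]) -> List[int]:
    """Map every patch to a discrete token id, in reading order."""
    return [nearest_code(p, codebook) for p in split_into_patches(image, patch)]


# Toy 4x4 image and a hypothetical codebook (real codebooks are learned).
IMAGE = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.5, 0.5],
]
CODEBOOK = [
    [0.0, 0.0, 0.0, 0.0],  # token 0: dark patch
    [1.0, 1.0, 1.0, 1.0],  # token 1: bright patch
]
TOKENS = tokenize_image(IMAGE, 2, CODEBOOK)
print(TOKENS)
```

The resulting list of integer ids plays the same role as text token ids, which is what allows image and text tokens to be placed in a single input sequence.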

In addition, the development of multi-modal AI systems is expected to democratize artificial intelligence technology and benefit a wider range of users and industries. Smaller and more efficient models can be trained on hardware with weaker computing power, reducing the computing resources and energy consumption required for deployment. This may lead to the widespread application of AI systems in various fields such as medical care, education, entertainment, e-commerce, etc., ultimately changing the way people live and work.

Related Work

Multimodal models have attracted much attention due to their ability to process and learn multiple data types such as text, images, and audio. This type of model can capture the complex interactions between different modalities and use their complementary information to improve the performance of various tasks. Vision-Language Pre-trained (VLP) models such as ViLBERT, LXMERT, VisualBERT, etc. learn the alignment of visual and text features through cross-modal attention to generate rich multi-modal representations. Multi-modal transformer architectures such as MMT, ViLT, etc. have improved transformers to efficiently handle multiple modalities. Researchers have also tried to incorporate other modalities such as audio and facial expressions into models, such as multimodal sentiment analysis (MSA) models, multimodal emotion recognition (MER) models, etc. By utilizing the complementary information of different modalities, multimodal models achieve better performance and generalization capabilities than single-modal methods.

On-device language models are defined here as models with fewer than 7 billion parameters, because the researchers found that even with quantization it is very difficult to run a 13-billion-parameter model on edge devices. Recent advances in this area include Google's Gemma 2B and 7B, Stability AI's Stable Code 3B, and Meta's Llama 7B. Interestingly, Meta's research shows that, unlike large language models, small language models perform better with deep and narrow architectures. Other techniques that benefit on-device models include embedding sharing, grouped-query attention, and the immediate block-wise weight sharing proposed in MobileLLM. These findings show that small language models for on-device applications call for different optimization methods and design strategies than large models.
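A rough back-of-the-envelope calculation shows why parameter count matters so much on edge devices. The sketch below estimates the memory needed for the weights alone, as parameters times bits per weight; the function name and the chosen bit widths are illustrative assumptions, and the estimate ignores activations, the KV cache, and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Parameter counts for the model sizes discussed in the text.
MODEL_SIZES = {"1B": 1e9, "7B": 7e9, "13B": 13e9}

for name, params in MODEL_SIZES.items():
    fp16 = weight_memory_gb(params, 16)  # unquantized half precision
    int4 = weight_memory_gb(params, 4)   # aggressive 4-bit quantization
    print(f"{name}: {fp16:.1f} GB at 16-bit, {int4:.1f} GB at 4-bit")
```

Under these assumptions, even a 4-bit 13B model needs about 6.5 GB for its weights, while a sub-billion-parameter model fits in roughly half a gigabyte at the same precision.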

Octopus Method

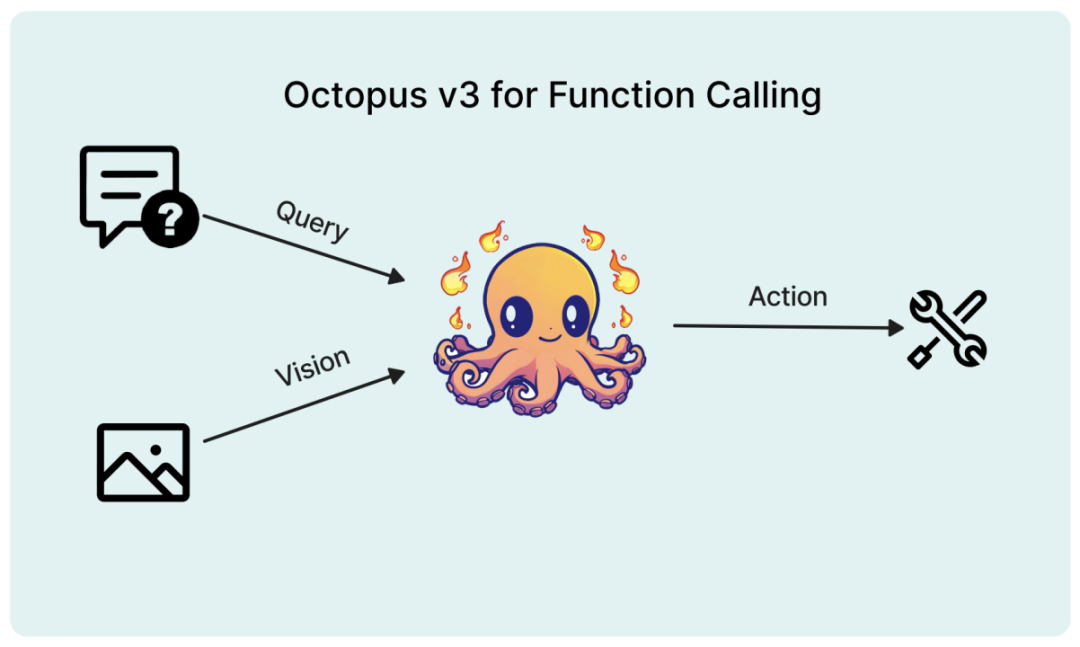

This section describes the main techniques used to develop the Octopus v3 model. Two key aspects of multimodal model development are integrating image information with textual input and optimizing the model's ability to predict actions.

Visual information encoding

Image processing offers many ways to encode visual information, and hidden-layer embeddings are commonly used. For example, the hidden-layer embeddings of the VGG-16 model are used for style transfer tasks. OpenAI's CLIP model demonstrates the ability to align text and image embeddings, using its image encoder to embed images. Methods such as ViT use more advanced techniques such as image tokenization. The researchers evaluated various image encoding techniques and found the CLIP-based approach to be the most effective. The paper therefore uses a CLIP-based model for image encoding.
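The idea of aligned text and image embeddings can be illustrated with a toy example. The sketch below does not call CLIP: the three-dimensional vectors are made-up stand-ins for encoder outputs, and `best_caption` is a hypothetical helper. It only shows the principle that, in a shared embedding space, the matching caption has the highest cosine similarity to the image.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_vec: Sequence[float],
                 text_vecs: Dict[str, Sequence[float]]) -> str:
    """Pick the caption whose embedding is most similar to the image embedding."""
    return max(text_vecs, key=lambda caption: cosine_similarity(image_vec, text_vecs[caption]))


# Hypothetical embeddings; a real system would obtain these from trained encoders.
IMAGE_EMBEDDING = [0.9, 0.1, 0.2]  # stand-in for an encoded photo of a dog
TEXT_EMBEDDINGS = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a car": [0.1, 0.9, 0.3],
}

BEST = best_caption(IMAGE_EMBEDDING, TEXT_EMBEDDINGS)
print(BEST)
```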

Functional token

Just as tokenization is applied to natural language and images, specific functions can also be encapsulated as functional tokens. The researchers introduced a training strategy for these tokens that draws on the way natural language models handle unseen words. The method is similar to word2vec in that it enriches the semantics of a token through its context. For example, advanced language models may initially struggle with complex chemical terms such as PEGylation and Endosomal Escape, but through causal language modeling, especially by training on a dataset that contains these terms, the model can learn them. Functional tokens can be learned through a parallel strategy, with the Octopus v2 model providing a powerful platform for this learning process. The research shows that the definition space of functional tokens is unbounded, so any specific function can be represented as a token.
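The functional token idea can be sketched as two steps: adding new tokens to the vocabulary, then mapping a predicted token to a function call. The example below is an illustrative assumption rather than the Octopus implementation: the token strings `<fn_0>` and `<fn_1>`, the stub functions, and the shape of the model output are all hypothetical, and the training that teaches the model when to emit each token is not shown.

```python
from typing import Callable, Dict, List


def send_email(recipient: str, body: str) -> str:
    """Stub standing in for a real email API."""
    return f"email to {recipient}: {body}"


def google_search(query: str) -> str:
    """Stub standing in for a real search API."""
    return f"search results for: {query}"


# Hypothetical token strings; each functional token stands for one function.
FUNCTIONAL_TOKENS: Dict[str, Callable[..., str]] = {
    "<fn_0>": send_email,
    "<fn_1>": google_search,
}


def extend_vocabulary(vocab: Dict[str, int], new_tokens: List[str]) -> Dict[str, int]:
    """Append new tokens with fresh ids; assumes existing ids are 0..len(vocab)-1."""
    extended = dict(vocab)
    for token in new_tokens:
        if token not in extended:
            extended[token] = len(extended)
    return extended


def dispatch(model_output: dict) -> str:
    """Call the function named by the predicted functional token with its arguments."""
    function = FUNCTIONAL_TOKENS[model_output["token"]]
    return function(**model_output["arguments"])


BASE_VOCAB = {"hello": 0, "world": 1}
VOCAB = extend_vocabulary(BASE_VOCAB, list(FUNCTIONAL_TOKENS))

# Pretend the model predicted this token and these arguments for a user query.
RESULT = dispatch({"token": "<fn_1>", "arguments": {"query": "nearest recycling center"}})
print(VOCAB)
print(RESULT)
```

Because the model only has to emit a single token to select a function, the function descriptions do not need to be repeated in the prompt at inference time, which is part of what makes the approach attractive for small on-device models.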

Multi-stage training

To develop a high-performance multimodal AI system, the researchers adopted a model architecture that integrates a causal language model with an image encoder. Training proceeds in multiple stages. First, the causal language model and the image encoder are trained separately to establish a base model. The two components are then merged and trained for alignment, so that image and text processing work together. On this basis, the Octopus v2 method is used to facilitate the learning of functional tokens. In the final training stage, the functional tokens that interact with the environment provide feedback for further optimization of the model. For this stage, the researchers adopted reinforcement learning and selected another large language model as the reward model. This iterative training method enhances the model's ability to process and integrate multimodal information.

Model Evaluation

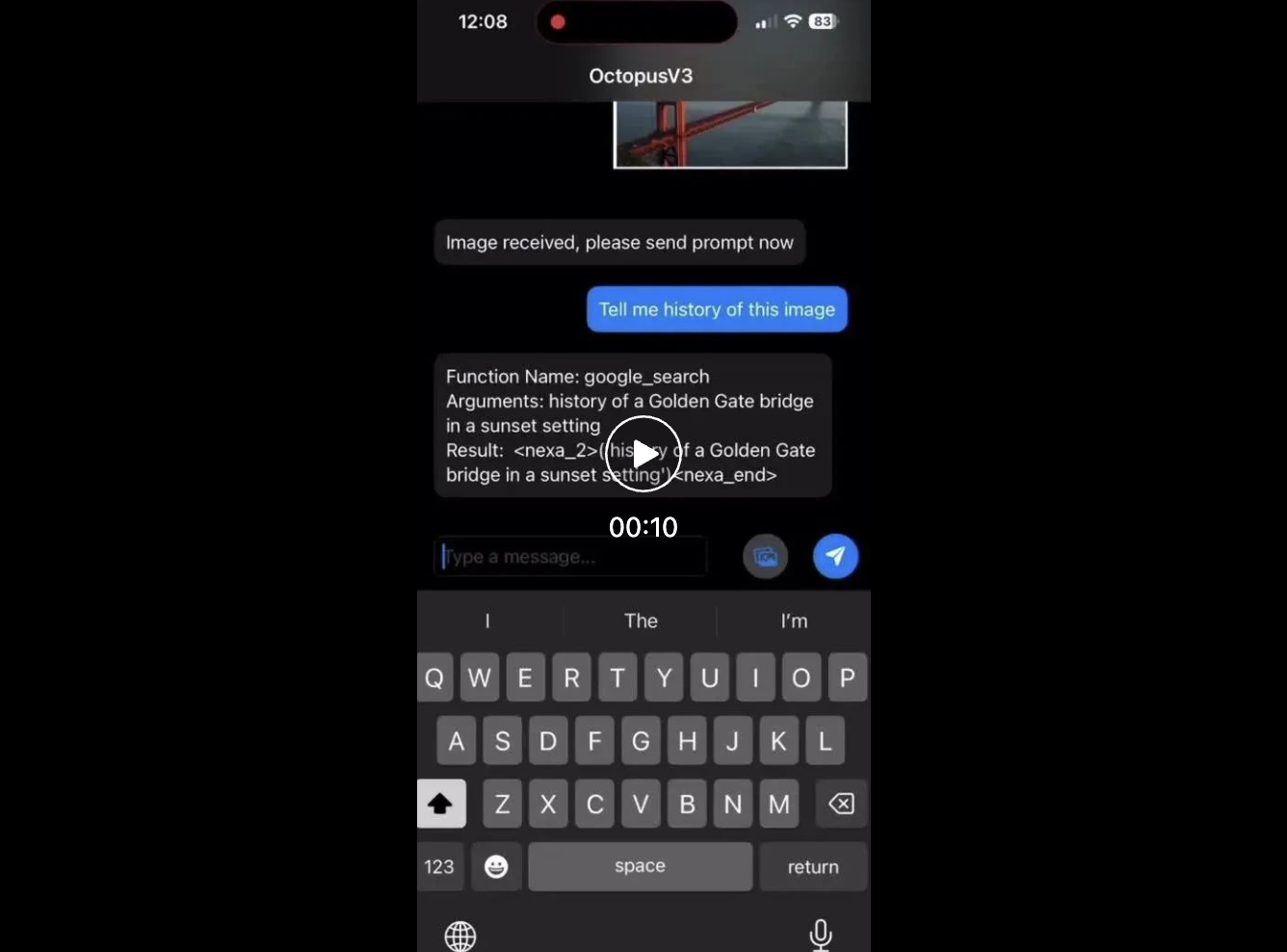

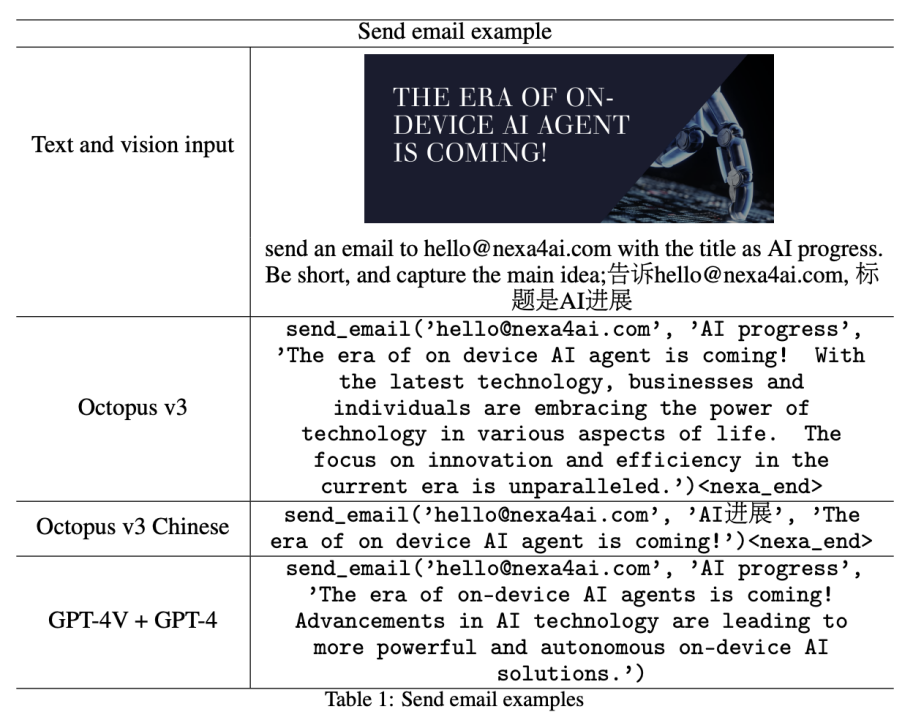

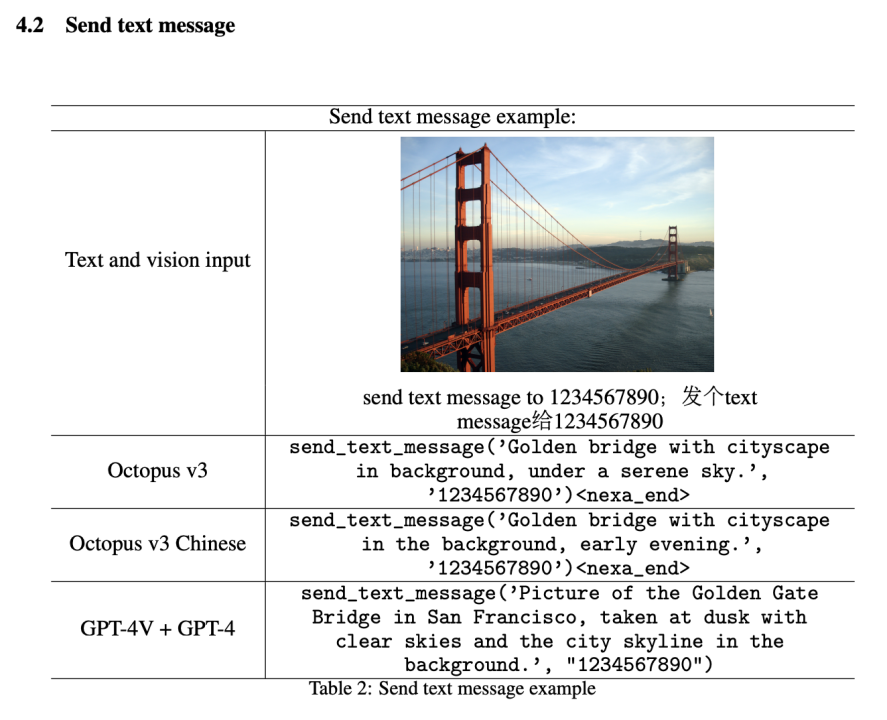

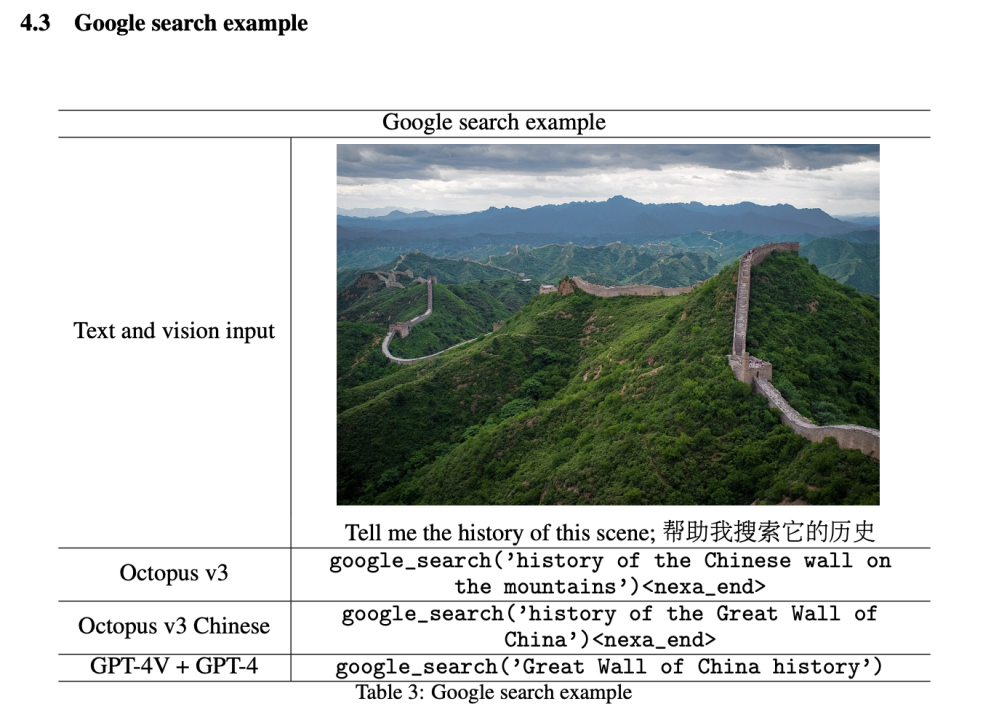

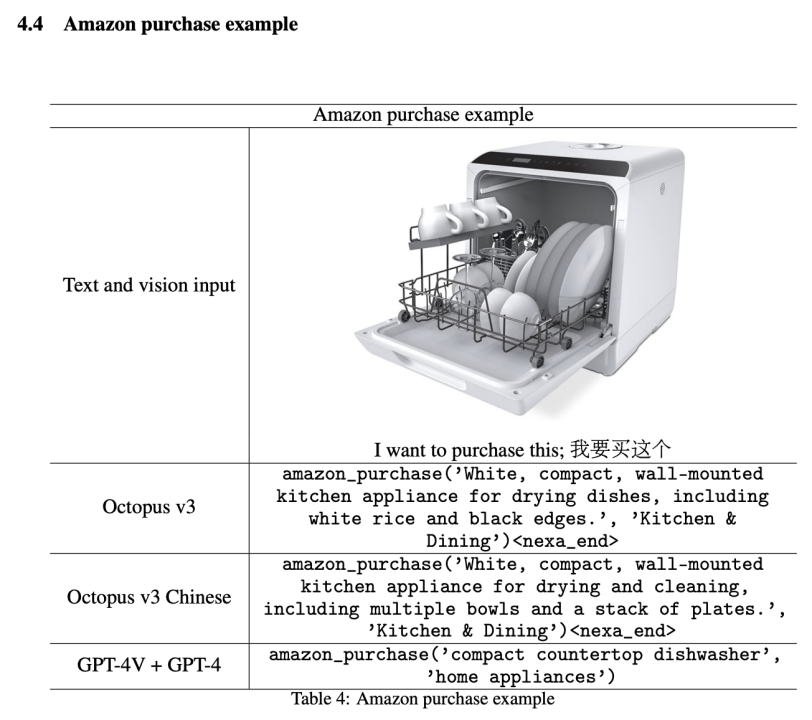

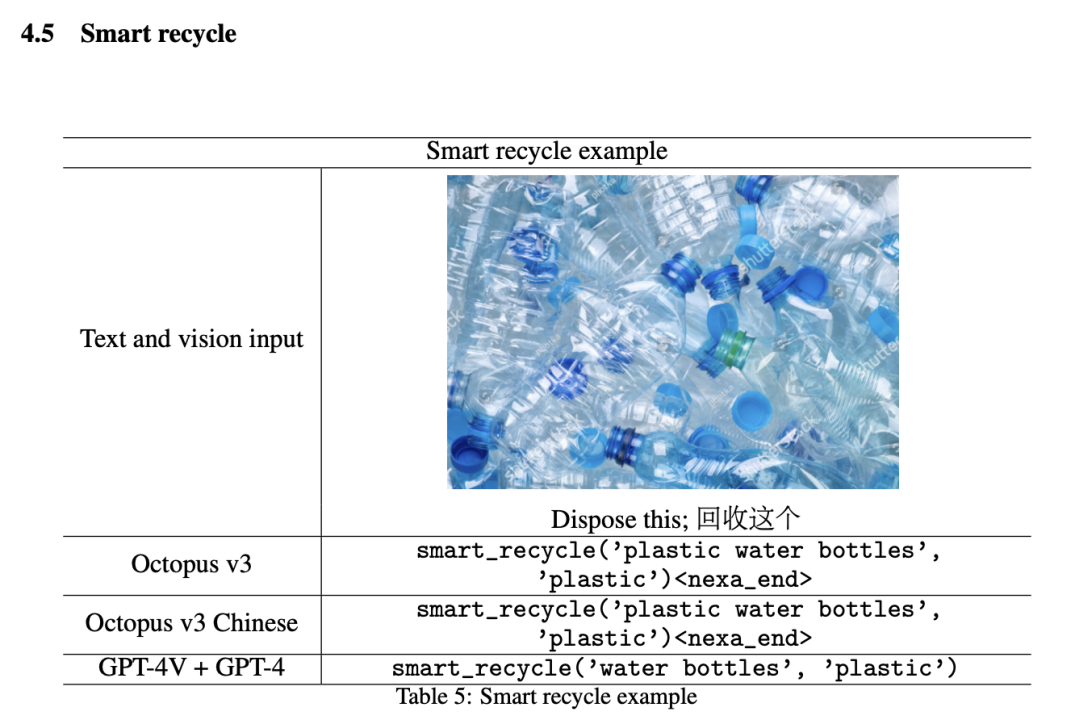

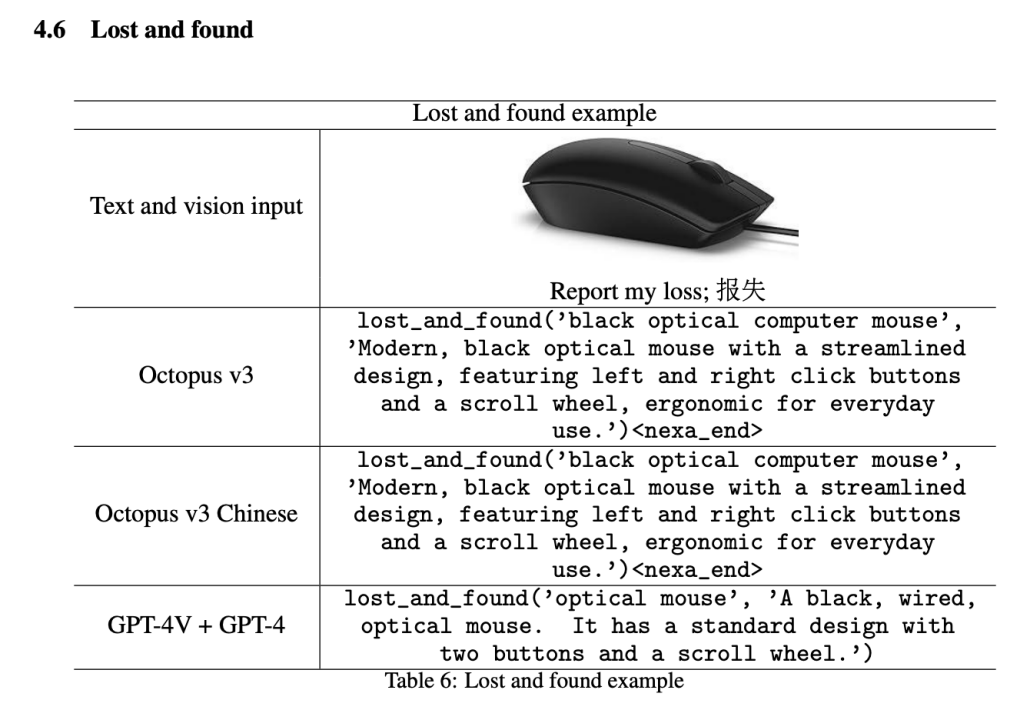

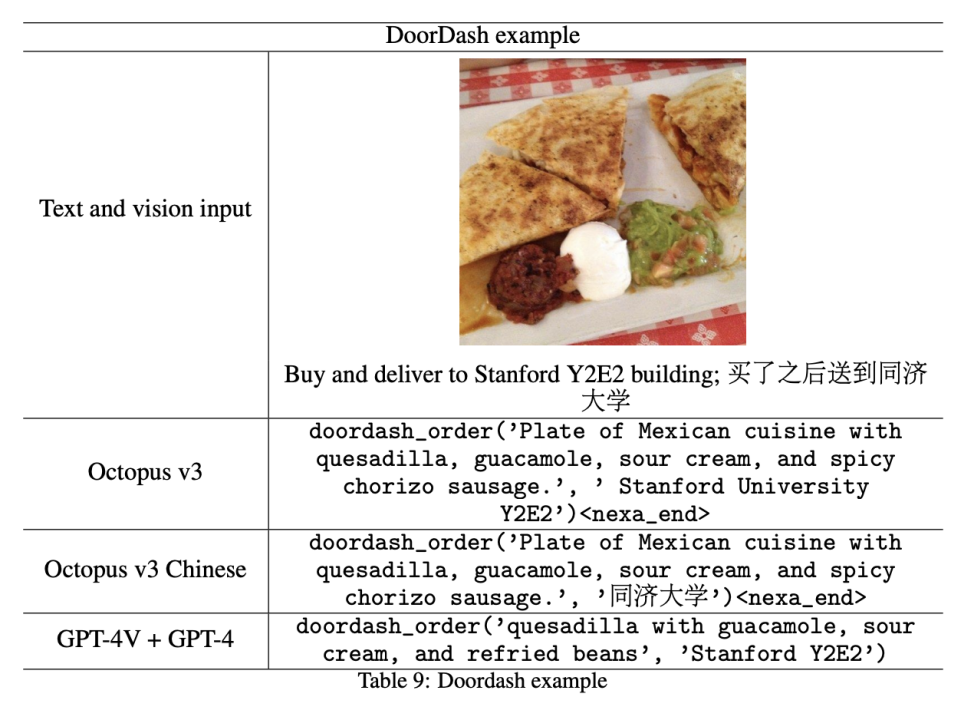

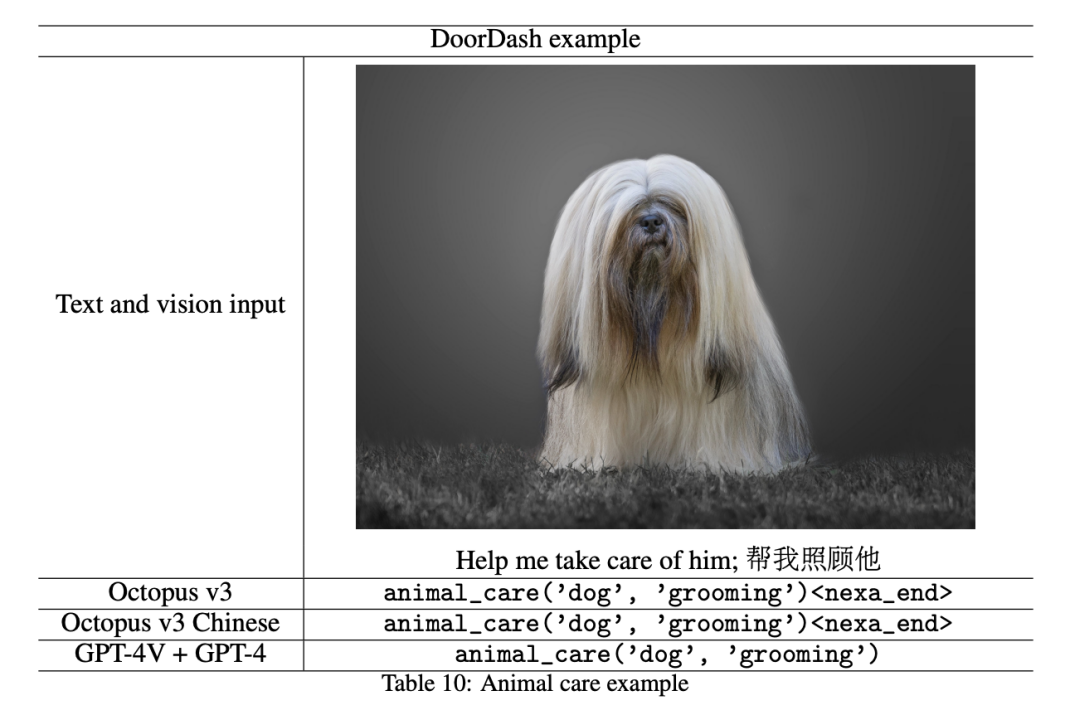

This section presents the experimental results of the model and compares them with a pipeline that combines GPT-4V and GPT-4. In the comparative experiment, the researchers first used GPT-4V (gpt-4-turbo) to process the image information. The extracted data is then fed into GPT-4 (gpt-4-turbo-preview), which is given all function descriptions in context and uses few-shot learning to improve performance. In the demonstration, the researchers converted 10 commonly used smartphone APIs into functional tokens and evaluated their performance, as detailed in the subsequent sections.

It is worth noting that although this article shows only 10 functional tokens, the model can be trained on more tokens to create a more general AI system. The researchers found that, for the selected APIs, a model with fewer than 1 billion parameters performs comparably, as a multimodal AI agent, to the combination of GPT-4V and GPT-4.

In addition, the model's scalability allows a wide range of functional tokens to be included, enabling highly specialized AI systems for specific fields or scenarios. This adaptability makes the approach particularly valuable in industries such as healthcare, finance, and customer service, where AI-driven solutions can significantly improve efficiency and user experience.

For each of the functions listed below, Octopus outputs only the corresponding functional token.

Send an email

Send a text message

Google Search

Amazon Shopping

Intelligent Recycling

Lost and Found

Interior Design

Instacart Shopping

DoorDash Delivery

Pet Care

Social Impact

Building on Octopus v2, the updated model incorporates both textual and visual information, a significant step forward from its text-only predecessor. It can process visual and natural language data simultaneously, paving the way for wider applications. The functional tokens introduced in Octopus v2 can be adapted to multiple fields, such as the medical and automotive industries. With the addition of visual data, their potential extends further to fields such as autonomous driving and robotics. In addition, the multimodal model makes it possible to turn devices such as the Raspberry Pi into intelligent hardware like the Rabbit R1 and Humane AI Pin, using an on-device model rather than a cloud-based solution.

The functional token is currently licensed. The researchers encourage developers to engage with the framework and innovate freely, provided they comply with the license agreement. In future work, the researchers aim to develop a training framework that can accommodate additional data modalities such as audio and video. They have also found that visual input can cause considerable latency and are currently optimizing inference speed.
