Technology peripherals

Technology peripherals

AI

AI

OmniDrive: A framework for aligning large models with 3D driving tasks

OmniDrive: A framework for aligning large models with 3D driving tasks

OmniDrive: A framework for aligning large models with 3D driving tasks

May 06, 2024 pm 03:16 PMStart with a novel 3D MLLM architecture that uses sparse queries to lift and compress visual representations into 3D, which are then fed into the LLM.

Title: OmniDrive: A Holistic LLM-Agent Framework for Autonomous Driving with 3D Perception Reasoning and Planning

Author affiliation: Beijing Institute of Technology, NVIDIA, Huazhong University of Science and Technology

Open source Address: GitHub - NVlabs/OmniDrive

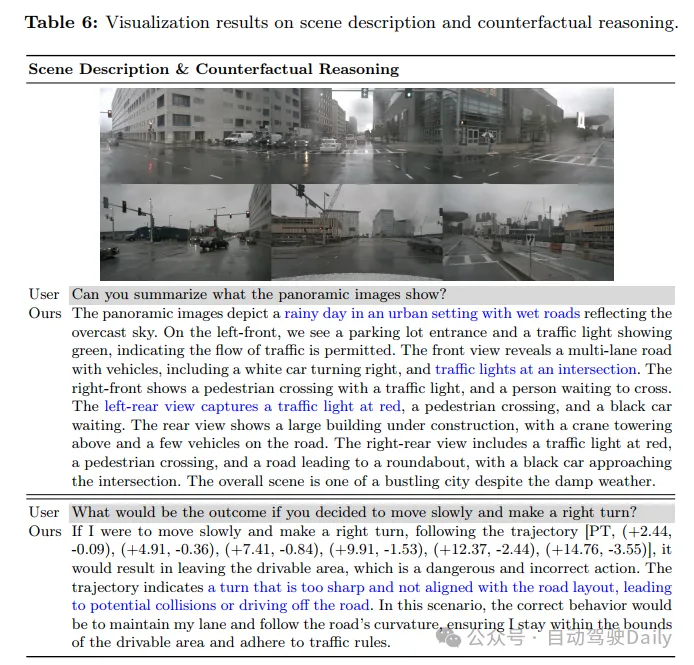

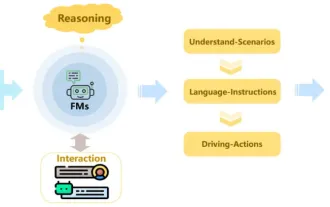

The development of multimodal large language models (MLLMs) has led to growing interest in LLM-based autonomous driving, leveraging their powerful inference capabilities. Leveraging the powerful reasoning capabilities of MLLMs to improve planning behavior is challenging because they require full 3D situation awareness beyond 2D reasoning. To address this challenge, this work proposes OmniDrive, a comprehensive framework for robust alignment between agent models and 3D driving tasks. The framework starts with a novel 3D MLLM architecture that uses sparse queries to lift and compress observation representations into 3D, which are then fed into the LLM. This query-based representation allows us to jointly encode dynamic objects and static map elements (e.g., traffic roads), providing a concise world model for perception-action alignment in 3D. We further propose a new benchmark that includes comprehensive visual question answering (VQA) tasks including scene description, traffic rules, 3D grounding, counterfactual reasoning, decision making, and planning. Extensive research demonstrates OmniDrive's superior reasoning and planning capabilities in complex 3D scenes.

Network structure

##Experimental results

The above is the detailed content of OmniDrive: A framework for aligning large models with 3D driving tasks. For more information, please follow other related articles on the PHP Chinese website!

Hot Article

Hot tools Tags

Hot Article

Hot Article Tags

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Towards 'Closed Loop' | PlanAgent: New SOTA for closed-loop planning of autonomous driving based on MLLM!

Jun 08, 2024 pm 09:30 PM

Towards 'Closed Loop' | PlanAgent: New SOTA for closed-loop planning of autonomous driving based on MLLM!

Jun 08, 2024 pm 09:30 PM

Towards 'Closed Loop' | PlanAgent: New SOTA for closed-loop planning of autonomous driving based on MLLM!

Review! Comprehensively summarize the important role of basic models in promoting autonomous driving

Jun 11, 2024 pm 05:29 PM

Review! Comprehensively summarize the important role of basic models in promoting autonomous driving

Jun 11, 2024 pm 05:29 PM

Review! Comprehensively summarize the important role of basic models in promoting autonomous driving

PHP Git practice: How to use Git to improve code quality and team efficiency?

Jun 03, 2024 pm 12:43 PM

PHP Git practice: How to use Git to improve code quality and team efficiency?

Jun 03, 2024 pm 12:43 PM

PHP Git practice: How to use Git to improve code quality and team efficiency?

How much margin is needed for Huobi futures contracts to avoid liquidation?

Jul 02, 2024 am 11:17 AM

How much margin is needed for Huobi futures contracts to avoid liquidation?

Jul 02, 2024 am 11:17 AM

How much margin is needed for Huobi futures contracts to avoid liquidation?