cURL vs. wget: Which one is better for you?

When you want to download files directly from the Linux command line, two tools immediately come to mind: wget and cURL. They share many features and can accomplish many of the same tasks, but they are not identical. Each is suited to different situations and has its own strengths.

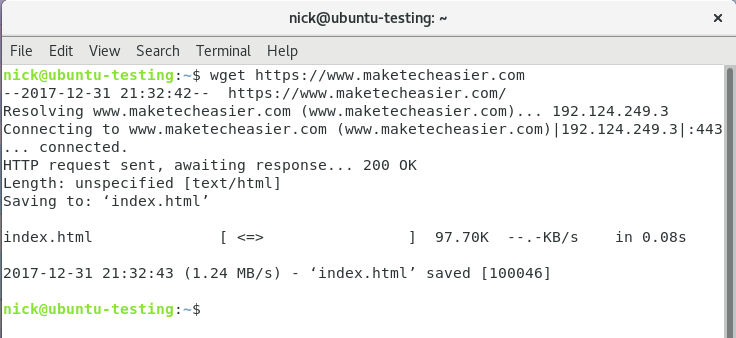

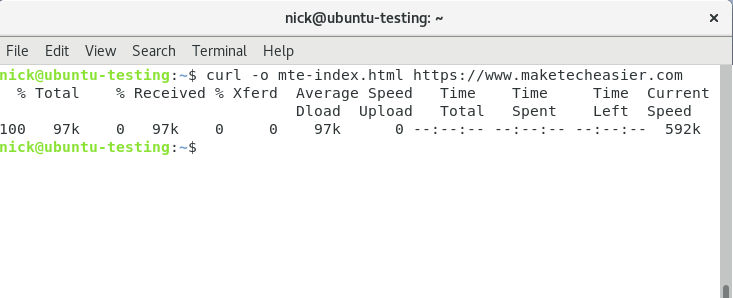

Both wget and cURL can download content; that is what they are designed for at their core. Both send requests over the network and return the requested item, which can be a file, an image, or something else such as the raw HTML of a web page.
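As a minimal sketch of the basic download case, the functions below wrap the simplest invocation of each tool. The function names are illustrative only, and any URL you pass in is your own choice; the flags themselves (`-O`, `-s`, `-q`, `-O -`) are standard options of the two programs.

```shell
# Save a remote file to the current directory.
# wget names the local file after the last path segment of the URL by default.
fetch_with_wget() {
    wget "$1"
}

# curl prints to standard output by default; -O saves under the remote file name.
fetch_with_curl() {
    curl -O "$1"
}

# Print the raw HTML of a page to the terminal instead of saving it.
show_html_with_curl() {
    curl -s "$1"            # -s hides the progress meter
}

show_html_with_wget() {
    wget -q -O - "$1"       # -q is quiet, "-O -" writes to standard output
}

# Example (placeholder URL):
#   fetch_with_curl "https://example.com/archive.tar.gz"
```

The visible difference is the default destination: wget writes a file unless told otherwise, while curl writes to the terminal unless told otherwise.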

Both programs can make HTTP POST requests, which means both can send data to a website, for example to fill out a form.
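The following sketch shows what a form submission might look like with each tool. The field names `name` and `comment` and the function names are hypothetical; a real form will expect its own fields.

```shell
# Submit two form fields with curl.
# Each -d adds a URL-encoded field and switches the request method to POST.
post_form_with_curl() {
    curl -d "name=alice" -d "comment=hello" "$1"
}

# Submit the same fields with wget.
# --post-data takes the whole URL-encoded body; "-O -" prints the reply.
post_form_with_wget() {
    wget -q -O - --post-data="name=alice&comment=hello" "$1"
}

# Example (placeholder URL):
#   post_form_with_curl "https://example.com/form"
```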

Since both are command-line tools, they are designed to be scriptable. Both wget and cURL can be used in Bash scripts to interact with new content automatically and download what you need.
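To illustrate the scripting point, here is one possible helper that downloads every URL listed in a text file and reports the ones that fail. The function name, the list-file format (one URL per line, `#` for comments), and the destination directory are assumptions made for this sketch.

```shell
# Download each URL from a list file into a destination directory.
# Returns success only if every download succeeded.
download_list() {
    list_file=$1
    dest_dir=$2
    failed=0
    while IFS= read -r url || [ -n "$url" ]; do
        # Skip blank lines and comment lines.
        case $url in
            ''|'#'*) continue ;;
        esac
        # -P sets the directory wget saves into; -q keeps the output quiet.
        if ! wget -q -P "$dest_dir" "$url"; then
            echo "failed: $url" >&2
            failed=$((failed + 1))
        fi
    done < "$list_file"
    [ "$failed" -eq 0 ]
}

# Example (hypothetical paths):
#   download_list urls.txt ./downloads || echo "some downloads failed"
```

Because wget exits with a non-zero status on failure, the script can react to errors without parsing any output; curl could be substituted in the same way.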

wget is simple and straightforward. It is built for quick downloads and does that job very well. wget is a single self-contained program: it is not built around a separate library the way cURL is, and it does not do anything outside its scope.

wget is a dedicated download tool that supports recursive downloads, so it can fetch everything linked from a web page or every file in an FTP directory.
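A recursive download might be set up roughly as follows. The depth limit of 2 and the one-second wait are arbitrary choices for this sketch, and the function name is illustrative.

```shell
# Recursively download one section of a site for offline reading.
mirror_section() {
    # --recursive        follow links found in downloaded pages
    # --level=2          stop after two levels of links
    # --no-parent        never climb above the starting directory
    # --convert-links    rewrite links so the local copy is browsable
    # --page-requisites  also fetch images and stylesheets the pages need
    # --wait=1           pause one second between requests
    wget --recursive --level=2 --no-parent --convert-links \
         --page-requisites --wait=1 "$1"
}

# Example (placeholder URL):
#   mirror_section "https://example.com/docs/"
```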

wget has sensible defaults. It handles many things the way a regular browser does, such as cookies and redirects, without extra configuration. In short, wget needs little explanation and works out of the box.
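The difference in defaults can be seen by fetching a page that redirects and sets cookies. In this sketch wget needs no extra options, while curl is given `-L` to follow redirects and a cookie jar file to keep cookies; the function names and the jar path are assumptions.

```shell
# wget follows redirects and accepts cookies during the run by default.
get_page_wget() {
    wget -q -O - "$1"
}

# curl does neither unless asked:
#   -L          follow redirects
#   -b <file>   read cookies from the jar (and enable cookie handling)
#   -c <file>   write received cookies back to the jar
get_page_curl() {
    curl -s -L -b "$2" -c "$2" "$1"
}

# Example (placeholder URL, hypothetical jar path):
#   get_page_curl "https://example.com/login" /tmp/cookies.txt
```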

cURL is a versatile tool. Sure, it can download web content, but it can also do much more.

cURL is backed by a library, libcurl. This means you can build entire programs on top of cURL, for example a graphical download manager that uses libcurl and has access to all of its functionality.

Broad protocol support may be cURL's biggest selling point. cURL handles HTTP and HTTPS as well as FTP transfers. It supports LDAP and even SMB (Samba) shares. You can also use cURL to send and receive email.
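As a rough illustration of non-HTTP use, the sketch below lists an FTP directory and sends a prepared message file over SMTP. The host names, the port 587, and the `SMTP_HOST` and `SMTP_USER` variables are placeholders for this example; whether a given curl build includes these protocols depends on how it was compiled.

```shell
# Hypothetical mail server settings; override them in the environment.
SMTP_HOST="${SMTP_HOST:-mail.example.com}"
SMTP_USER="${SMTP_USER:-user:password}"

# List an FTP directory: a URL ending in a slash asks curl for a listing.
list_ftp_dir() {
    curl -s "ftp://$1/"
}

# Send a message file (headers plus body) through an SMTP server.
#   --ssl-reqd     refuse to continue without TLS
#   --mail-from    envelope sender
#   --mail-rcpt    envelope recipient
#   --upload-file  the message to send
send_mail() {
    curl --ssl-reqd \
         --url "smtp://$SMTP_HOST:587" \
         --user "$SMTP_USER" \
         --mail-from "$1" \
         --mail-rcpt "$2" \
         --upload-file "$3"
}

# Example (placeholder addresses and file):
#   send_mail me@example.com you@example.com message.txt
```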

cURL also has some neat security features. It can be built against many different SSL/TLS libraries, and it supports access through network proxies, including SOCKS. This means you can use cURL over Tor.
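A request through a SOCKS proxy could look like the sketch below. It assumes a Tor client is already running locally and listening on `127.0.0.1:9050`, which is Tor's usual SOCKS port but may differ on your system; the `TOR_PROXY` variable and the function name are made up for this example.

```shell
# Address of the local SOCKS proxy (assumed; adjust to your setup).
TOR_PROXY="${TOR_PROXY:-127.0.0.1:9050}"

# Fetch a URL through the proxy.
# --socks5-hostname also sends the host name to the proxy for resolution,
# so DNS lookups do not bypass it.
fetch_via_tor() {
    curl -s --socks5-hostname "$TOR_PROXY" "$1"
}

# Example (placeholder URL):
#   fetch_via_tor "https://example.com/"
```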

cURL also supports gzip compression, which reduces the amount of data transferred.
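In practice, compression is requested with a single option. In this sketch, `--compressed` asks the server for a compressed response and has curl decompress it before printing; the function name is illustrative, and the server is free to ignore the request.

```shell
# Request a compressed response and print the decompressed body.
fetch_compressed() {
    curl -s --compressed "$1"
}

# Example (placeholder URL):
#   fetch_compressed "https://example.com/large-page.html"
```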

So should you use cURL or wget? It depends on what you need. If you want fast downloads without having to think about options, use wget, which is lightweight and efficient. If you want to do something more complex, cURL is the natural choice.

cURL allows you to do many things. You can think of it as a stripped-down command-line web browser: it supports almost every protocol you can think of and can interact with almost any online content. The main difference from a browser is that cURL does not render the responses it receives.
