The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4 and a price of only about one percent of GPT-4-Turbo

Imagine an artificial intelligence model that not only goes beyond what traditional computing can do, but also delivers more efficient performance at a lower cost. This is not science fiction: DeepSeek-V2[1], the world's most powerful open source MoE model, is here.

DeepSeek-V2 is a powerful mixture-of-experts (MoE) language model characterized by economical training and efficient inference. It has 236B parameters in total, of which 21B are activated for each token. Compared with DeepSeek 67B, DeepSeek-V2 delivers stronger performance while saving 42.5% of training costs, reducing the KV cache by 93.3%, and raising the maximum generation throughput to 5.76 times.
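To make the headline numbers easier to compare, the following minimal sketch turns the percentages quoted above into ratios. It uses only the figures stated in this article; the constant and function names are illustrative and do not come from DeepSeek.

```python
# Figures quoted in the article; names are illustrative only.
TOTAL_PARAMS_B = 236.0       # total parameters, in billions
ACTIVE_PARAMS_B = 21.0       # parameters activated per token, in billions
TRAINING_COST_SAVED = 0.425  # training cost saved relative to DeepSeek 67B
KV_CACHE_REDUCTION = 0.933   # KV cache reduction relative to DeepSeek 67B


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active_b / total_b


def remaining_share(reduction: float) -> float:
    """Portion left after a fractional reduction (e.g. 0.933 leaves 0.067)."""
    return 1.0 - reduction


def shrink_factor(reduction: float) -> float:
    """How many times smaller the reduced quantity is than the baseline."""
    return 1.0 / remaining_share(reduction)


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"parameters active per token: {share:.1%}")
    print(f"training cost remaining:     {remaining_share(TRAINING_COST_SAVED):.1%}")
    print(f"KV cache remaining:          {remaining_share(KV_CACHE_REDUCTION):.1%}")
    print(f"KV cache shrink factor:      {shrink_factor(KV_CACHE_REDUCTION):.1f}x")
```

Read this way, about 8.9% of the parameters are active for each token, and a 93.3% reduction leaves a KV cache roughly 15 times smaller than the DeepSeek 67B baseline.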

DeepSeek is a company exploring the nature of artificial general intelligence (AGI) and is committed to integrating research, engineering and business.

The comprehensive capabilities of DeepSeek-V2

On the current mainstream large-model leaderboards, DeepSeek-V2 performs well:

- Chinese comprehensive ability (AlignBench) is the strongest among open source models: in this evaluation it is in the same echelon as closed source models such as GPT-4-Turbo and Wenxin 4.0

- English comprehensive ability (MT-Bench) is in the first echelon: it is in the same echelon as the strongest open source model, LLaMA3-70B, and surpasses the strongest open source MoE model, Mixtral 8x22B

- Results on knowledge, mathematics, reasoning, programming and other leaderboards are at the forefront

- Supports a 128K context window

New model structure

As the potential of AI is constantly being explored, we can't help but ask: what is the key to advancing intelligence? DeepSeek-V2 gives its answer: the combination of an innovative architecture and cost-effectiveness.

"DeepSeek-V2 is an improved version. With 236B total parameters and 21B activated, it reaches the capability of a 70B~110B dense model, while its memory consumption is only 1/5 to 1/100 of that of models at the same level. On an 8-card H800 machine, it can process more than 100,000 input tokens per second and generate more than 50,000 output tokens per second. This is not only a leap in technology, but also a revolution in cost control."
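The gap between total and activated parameters comes from sparse routing: for each token, a router selects a few experts and only those run. The sketch below shows generic top-k routing with toy scalar experts. It is an illustration of the general MoE idea under simplifying assumptions, not DeepSeek-V2's actual architecture; the function names, the expert count, and the random router scores (which a real model would compute from the token) are all hypothetical.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x, experts, router_scores, k):
    """Weighted sum of the outputs of the selected experts only."""
    output = 0.0
    for index, weight in route_top_k(router_scores, k):
        output += weight * experts[index](x)
    return output


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical setup: 8 tiny "experts", each a scalar function y = a * x.
    num_experts, k = 8, 2
    slopes = [random.uniform(-1.0, 1.0) for _ in range(num_experts)]
    experts = [lambda x, a=a: a * x for a in slopes]
    # Stand-in router scores; a trained router would derive these from the token.
    scores = [random.gauss(0.0, 1.0) for _ in range(num_experts)]
    selected = route_top_k(scores, k)
    print("selected experts:", [i for i, _ in selected])
    print("share of experts run for this token:", k / num_experts)
    print("output:", moe_forward(1.5, experts, scores, k))
```

Because the unselected experts are never evaluated, compute per token scales with the number of chosen experts rather than with the full expert count, which is the general reason an MoE model can hold many more parameters than it activates.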

Today, with AI technology developing rapidly, the emergence of DeepSeek-V2 not only represents a technological breakthrough, but also heralds the popularization of intelligent applications. It lowers the barrier to AI and allows more companies and individuals to benefit from efficient intelligent services.

Chinese capability vs. price

In terms of Chinese capability, DeepSeek-V2 leads the world in the AlignBench ranking while providing a very competitive API price.

Both the model and the paper are open source

DeepSeek-V2 is not just a model; it is a key that opens the door to a smarter world. It opens a new chapter in AI applications with lower cost and higher performance. Open-sourcing DeepSeek-V2 is the best proof of this belief: it will inspire more people to innovate and help advance the future of human intelligence together.

- Model weights: https://huggingface.co/deepseek-ai

- Open source address: https://github.com/deepseek-ai/DeepSeek-V2

As AI continues to evolve, how do you think DeepSeek-V2 will change our world? Let’s wait and see. If you are interested, you can visit chat.deepseek.com to personally experience the technological changes brought about by DeepSeek-V2.

References

[1] DeepSeek-V2: https://www.php.cn/link/b2651c9921723afdfd04ed61ec302a6b

The above is the detailed content of The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1371

1371

52

52

What are the Grayscale Encryption Trust Funds? Common Grayscale Encryption Trust Funds Inventory

Mar 05, 2025 pm 12:33 PM

What are the Grayscale Encryption Trust Funds? Common Grayscale Encryption Trust Funds Inventory

Mar 05, 2025 pm 12:33 PM

Grayscale Investment: The channel for institutional investors to enter the cryptocurrency market. Grayscale Investment Company provides digital currency investment services to institutions and investors. It allows investors to indirectly participate in cryptocurrency investment through the form of trust funds. The company has launched several crypto trusts, which has attracted widespread market attention, but the impact of these funds on token prices varies significantly. This article will introduce in detail some of Grayscale's major crypto trust funds. Grayscale Major Crypto Trust Funds Available at a glance Grayscale Investment (founded by DigitalCurrencyGroup in 2013) manages a variety of crypto asset trust funds, providing institutional investors and high-net-worth individuals with compliant investment channels. Its main funds include: Zcash (ZEC), SOL,

What libraries are used for floating point number operations in Go?

Apr 02, 2025 pm 02:06 PM

What libraries are used for floating point number operations in Go?

Apr 02, 2025 pm 02:06 PM

The library used for floating-point number operation in Go language introduces how to ensure the accuracy is...

Bitwise: Businesses Buy Bitcoin A Neglected Big Trend

Mar 05, 2025 pm 02:42 PM

Bitwise: Businesses Buy Bitcoin A Neglected Big Trend

Mar 05, 2025 pm 02:42 PM

Weekly Observation: Businesses Hoarding Bitcoin – A Brewing Change I often point out some overlooked market trends in weekly memos. MicroStrategy's move is a stark example. Many people may say, "MicroStrategy and MichaelSaylor are already well-known, what are you going to pay attention to?" This is true, but many investors regard it as a special case and ignore the deeper market forces behind it. This view is one-sided. In-depth research on the adoption of Bitcoin as a reserve asset in recent months shows that this is not an isolated case, but a major trend that is emerging. I predict that in the next 12-18 months, hundreds of companies will follow suit and buy large quantities of Bitcoin

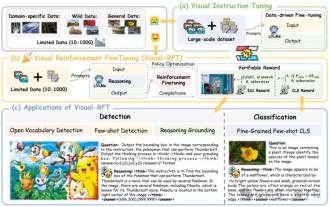

Significantly surpassing SFT, the secret behind o1/DeepSeek-R1 can also be used in multimodal large models

Mar 12, 2025 pm 01:03 PM

Significantly surpassing SFT, the secret behind o1/DeepSeek-R1 can also be used in multimodal large models

Mar 12, 2025 pm 01:03 PM

Researchers from Shanghai Jiaotong University, Shanghai AILab and the Chinese University of Hong Kong have launched the Visual-RFT (Visual Enhancement Fine Tuning) open source project, which requires only a small amount of data to significantly improve the performance of visual language big model (LVLM). Visual-RFT cleverly combines DeepSeek-R1's rule-based reinforcement learning approach with OpenAI's reinforcement fine-tuning (RFT) paradigm, successfully extending this approach from the text field to the visual field. By designing corresponding rule rewards for tasks such as visual subcategorization and object detection, Visual-RFT overcomes the limitations of the DeepSeek-R1 method being limited to text, mathematical reasoning and other fields, providing a new way for LVLM training. Vis

Which libraries in Go are developed by large companies or provided by well-known open source projects?

Apr 02, 2025 pm 04:12 PM

Which libraries in Go are developed by large companies or provided by well-known open source projects?

Apr 02, 2025 pm 04:12 PM

Which libraries in Go are developed by large companies or well-known open source projects? When programming in Go, developers often encounter some common needs, ...

Gitee Pages static website deployment failed: How to troubleshoot and resolve single file 404 errors?

Apr 04, 2025 pm 11:54 PM

Gitee Pages static website deployment failed: How to troubleshoot and resolve single file 404 errors?

Apr 04, 2025 pm 11:54 PM

GiteePages static website deployment failed: 404 error troubleshooting and resolution when using Gitee...

How to obtain the shipping region data of the overseas version? What are some ready-made resources available?

Apr 01, 2025 am 08:15 AM

How to obtain the shipping region data of the overseas version? What are some ready-made resources available?

Apr 01, 2025 am 08:15 AM

Question description: How to obtain the shipping region data of the overseas version? Are there ready-made resources available? Get accurate in cross-border e-commerce or globalized business...

Typecho route matching conflict: Why is my /test/tag/his/10086 matching TestTagIndex instead of TestTagPage?

Apr 01, 2025 am 09:03 AM

Typecho route matching conflict: Why is my /test/tag/his/10086 matching TestTagIndex instead of TestTagPage?

Apr 01, 2025 am 09:03 AM

Typecho routing matching rules analysis and problem investigation This article will analyze and answer questions about the inconsistent results of the Typecho plug-in routing registration and actual matching results...