In 2024, will there be substantial breakthroughs and progress in end-to-end autonomous driving in China?


As is widely known, Tesla FSD V12 has been rolled out broadly in North America and, thanks to its excellent performance, has won growing recognition from users. End-to-end autonomous driving has in turn become the technical direction that draws the most attention in the autonomous driving industry. Recently I had the opportunity to talk with top engineers, product managers, investors, and journalists from many industries. Everyone is very interested in end-to-end autonomous driving, yet misunderstandings persist even about some of its basics. I have been fortunate enough to try the urban driving functions of leading domestic brands, both with and without HD maps, as well as FSD V11 and V12. Drawing on my professional background and on years of tracking Tesla FSD, I would like to go through several common misunderstandings about end-to-end autonomous driving at this stage and give my own interpretation of each.

Doubt 1: Do end-to-end perception and end-to-end decision-making and planning count as end-to-end autonomous driving?

In end-to-end autonomous driving, every step from sensor input through planning to control-signal output is differentiable, so the entire system can be trained as one large model with gradient descent. Through gradient backpropagation, the parameters of every part of the model, from input to output, are updated and optimized during training, so the behavior of the whole system is optimized directly against the final driving trajectory that the user experiences. Recently, some companies promoting end-to-end autonomous driving have claimed to offer "end-to-end perception" or "end-to-end decision-making". In my view, neither counts as end-to-end autonomous driving; they are more accurately described as purely data-driven perception and purely data-driven decision-making and planning.
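To make "differentiable end to end" concrete, here is a minimal sketch with scalar stand-ins. A hypothetical perception parameter `w_p` and planning parameter `w_c` are trained only against a logged driver control `u_star`; the gradients are written out by hand with the chain rule. Real systems use autodiff frameworks on large networks, and none of the names or values below come from any production stack.

```python
# Toy two-stage pipeline: perception feeds planning, and only the planning
# output is supervised. All names and values are hypothetical.

def forward(w_p, w_c, x):
    z = w_p * x  # perception stage: raw sensor reading -> feature
    u = w_c * z  # planning stage: feature -> control value
    return z, u

def grads(w_p, w_c, x, u_star):
    """Chain-rule gradients of the loss (u - u_star)^2 for both stages."""
    z, u = forward(w_p, w_c, x)
    dL_du = 2.0 * (u - u_star)
    dL_dwc = dL_du * z   # gradient reaching the planner's parameter
    dL_dz = dL_du * w_c  # gradient flowing back across the stage boundary
    dL_dwp = dL_dz * x   # gradient reaching the perception parameter
    return dL_dwp, dL_dwc

def train(w_p=0.5, w_c=0.5, x=1.0, u_star=2.0, lr=0.05, steps=200):
    for _ in range(steps):
        g_p, g_c = grads(w_p, w_c, x, u_star)
        w_p -= lr * g_p  # perception is updated by the driving loss alone
        w_c -= lr * g_c
    return w_p, w_c

w_p, w_c = train()
print(forward(w_p, w_c, 1.0)[1])  # close to the logged control 2.0
```

The perception parameter receives a nonzero gradient even though no perception label is used anywhere; that is the property a purely data-driven perception module or planner trained in isolation does not have.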

Some teams use a model to make decisions and combine it with traditional methods for safety checks and trajectory optimization, a hybrid strategy they also call "end-to-end planning". In addition, some people believe that Tesla V12 does not output control signals purely from a model but is also a hybrid that incorporates rule-based methods. The well-known Green on X.com tweeted some time ago that rule code can still be found in the V12 software stack. My understanding is that the code Green found is most likely V11 code retained in V12's highway stack. As we know, V12 currently replaces only the original urban stack with an end-to-end model, while highway driving still uses the V11 solution. Finding fragments of rule code in the unpacked software therefore does not mean that V12 is fake "end-to-end"; the code found is most likely highway code. In fact, as the 2022 AI Day showed, V11 and earlier versions were already hybrid solutions. If V12 were not a single model that outputs the trajectory directly, it would differ little from previous versions, and there would be no reasonable explanation for V12's jump in performance. For Tesla's earlier approach, see my (EatElephant's) interpretation of AI Day: Tesla AI Day 2022 -- Interpretation of Shizi: It is called the Autonomous Driving Spring Festival Gala, and the decentralized R&D team is eager to transform into an AI technology company.

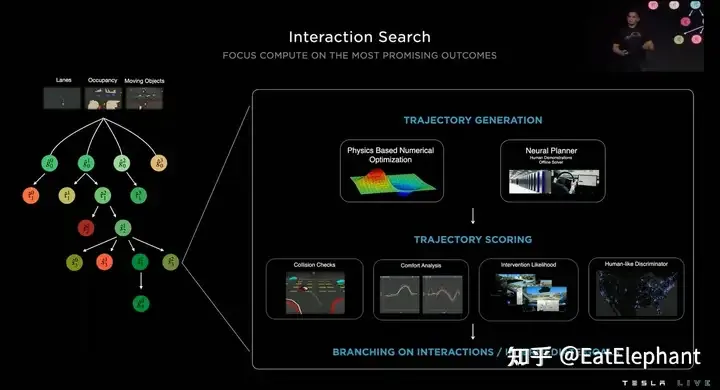

Judging from the 2022 AI Day, V11 already used a hybrid planning solution that included an NN Planner

In general, whether it is perception post-processing code, rule-based scoring of candidate trajectories, or even a safety policy, once rule code is introduced and if/else branches appear, gradient propagation through the whole system is cut off. The system then loses the biggest advantage of an end-to-end system obtained through training: global optimization.
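Why an if/else branch cuts off gradient propagation can be seen in a toy comparison. The sketch below assumes a hypothetical 10 m safety threshold and uses central finite differences in place of autodiff: a hard rule produces zero gradient with respect to the upstream perception parameter, while a smooth stand-in for a learned planner (here a sigmoid, chosen purely for illustration) does not.

```python
import math

SAFE_GAP = 10.0  # hypothetical braking threshold in metres

def rule_planner(gap):
    # Hand-written rule: a hard if/else branch.
    if gap < SAFE_GAP:
        return 1.0  # brake
    return 0.0      # keep cruising

def learned_planner(gap, k=1.0):
    # Smooth stand-in for a neural planner making the same decision softly.
    return 1.0 / (1.0 + math.exp(k * (gap - SAFE_GAP)))

def perceive(w_p, raw):
    # Perception: scale a raw sensor reading into an estimated gap.
    return w_p * raw

def finite_diff(f, w, eps=1e-4):
    # Central finite difference, approximating df/dw.
    return (f(w + eps) - f(w - eps)) / (2 * eps)

raw = 12.0
g_rule = finite_diff(lambda w: rule_planner(perceive(w, raw)), 1.0)
g_soft = finite_diff(lambda w: learned_planner(perceive(w, raw)), 1.0)
print(g_rule, g_soft)  # the rule gives exactly 0.0; the smooth planner does not
```

The rule's output is piecewise constant, so almost everywhere there is no signal telling perception how to change; this is the global-optimization advantage the paragraph says is lost.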

Doubt 2: Is end-to-end a reinvention of previous technology?

Another common misunderstanding is that end-to-end overturns previously accumulated technology and is a completely new technological innovation. Many people think that now that Tesla has pushed an end-to-end autonomous driving system to users, other manufacturers no longer need to iterate on the original modular perception, prediction, and planning stack; they can jump straight to end-to-end and use the latecomer's advantage to quickly catch up with or even surpass Tesla. It is true that using one large model to map sensor input directly to planning and control signals is the most thorough form of end-to-end, and companies have tried such methods for a long time, for example Nvidia's DAVE-2 and Wayve. However, this thorough end-to-end approach is closer to a black box and is hard to debug and iteratively optimize. Moreover, sensor inputs such as images and point clouds form a very high-dimensional input space, while control outputs such as steering-wheel angle and throttle and brake pedal positions form a relatively low-dimensional output space. Mapping the former directly onto the latter gives the model very little supervision to learn from, which makes it hard to converge, and such systems have been practically unusable in real-vehicle testing.

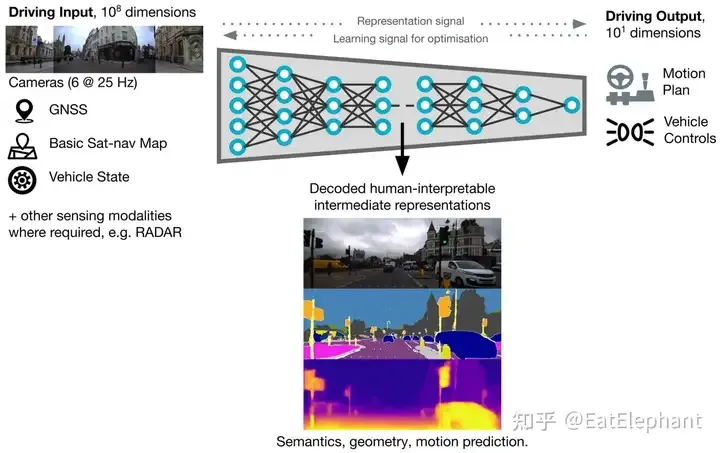

A thorough end-to-end system will also use some common auxiliary tasks such as semantic segmentation and depth estimation to help model convergence and debugging
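One common way to write the combination described in this caption is a weighted sum of losses. The weights $\lambda$ below are hypothetical hyperparameters, not values from any particular system:

```latex
\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{plan}}
  + \lambda_{\text{seg}}\,\mathcal{L}_{\text{seg}}
  + \lambda_{\text{depth}}\,\mathcal{L}_{\text{depth}}
```

Because all three terms backpropagate into the shared backbone, the auxiliary terms supply dense supervision that the low-dimensional planning target alone cannot, and their outputs can be inspected when debugging.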

So the FSD V12 we actually see retains almost all of the previous visualization content. This shows that FSD V12 was trained end to end on top of the original strong perception foundation: the FSD iterations since October 2020 were not abandoned but became V12's solid technical foundation. Andrej Karpathy has answered a similar question before. Although he was not involved in developing V12, he believes that none of the previous technical accumulation was abandoned; it simply moved from the front stage to behind the scenes. End-to-end is thus achieved gradually on top of the existing technology by removing rule code step by step.

V12 retains almost all of FSD's perception outputs and drops only a few visualized elements, such as traffic cones

Doubt 3: Can end-to-end methods from academic papers be migrated to actual products?

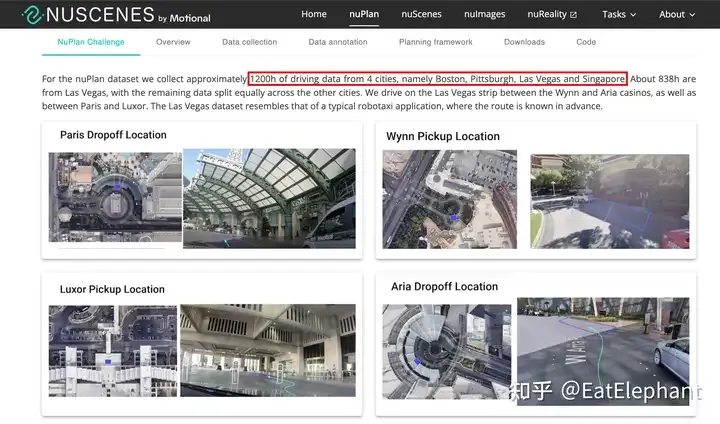

UniAD winning the CVPR 2023 Best Paper award undoubtedly reflects the academic community's high expectations for end-to-end autonomous driving systems. Since Tesla introduced its vision-based BEV perception in 2021, the domestic academic community has invested great enthusiasm in BEV perception for autonomous driving, producing a series of studies that advanced the performance and deployment of BEV methods. Can end-to-end follow a similar route, with academia leading and industry following, to drive rapid iteration and deployment of end-to-end technology in products? I think this is relatively difficult. First, BEV perception is still a relatively modular technology that lives mostly at the algorithm level, and entry-level performance does not require a large amount of data. The release of the high-quality open academic dataset nuScenes provided convenient conditions for a great deal of BEV research. Although BEV solutions iterated on nuScenes cannot meet product-level performance requirements, they have great reference value as proofs of concept and for model selection. Academia, however, lacks large-scale data usable for end-to-end training. The largest such dataset, nuPlan, currently contains 1,200 hours of real-vehicle data collected in 4 cities. By contrast, at an earnings call in 2023, Musk said of end-to-end autonomous driving: "With 1 million video clips trained, it barely works; 2 million, slightly better; 3 million, you'll feel wow; at 10 million, its performance becomes incredible." Tesla's Autopilot uploads are generally believed to be 1-minute clips, so the entry-level 1 million clips amount to about 16,700 hours, more than an order of magnitude larger than the largest academic dataset. Note also that nuPlan was collected continuously, so its data distribution and diversity have a fatal flaw: the vast majority of the data are simple scenes. This means that academic datasets like nuPlan cannot even produce a version that barely works on the road.
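The clip-to-hours comparison above is simple arithmetic, sketched here under the same assumption the text makes (one uploaded clip is roughly 1 minute); `clips_to_hours` is a hypothetical helper name.

```python
# Back-of-envelope comparison of the quoted clip counts with nuPlan.
CLIP_MINUTES = 1.0    # assumption from the text: ~1 minute per uploaded clip
NUPLAN_HOURS = 1_200  # size of nuPlan as cited in the text

def clips_to_hours(n_clips, minutes_per_clip=CLIP_MINUTES):
    """Convert a number of fixed-length clips into hours of driving."""
    return n_clips * minutes_per_clip / 60.0

for n_clips in (1_000_000, 2_000_000, 3_000_000, 10_000_000):
    hours = clips_to_hours(n_clips)
    ratio = hours / NUPLAN_HOURS
    print(f"{n_clips:>10,} clips ~ {hours:>9,.0f} h ~ {ratio:5.1f}x nuPlan")
```

Even the "barely works" tier is roughly 14 times nuPlan. The comparison counts hours only; it says nothing about the distribution and diversity gap the text points out.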

The nuPlan dataset is already a very large academic dataset, but it may still not be enough for exploring end-to-end solutions

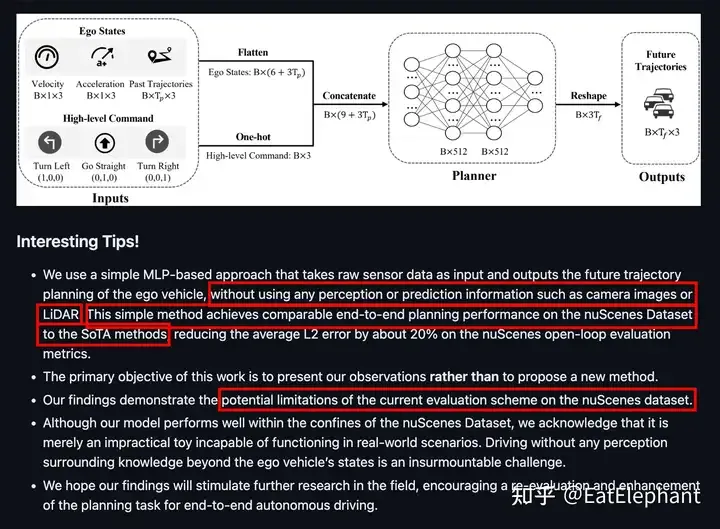

So we see that the vast majority of end-to-end autonomous driving solutions, including UniAD, cannot run in real vehicles and can only resort to open-loop evaluation. Open-loop metrics are not very reliable, because open-loop evaluation cannot detect causal confusion in the model: even a model that has only learned to extrapolate its historical path can score very well on open-loop metrics, yet such a model is completely unusable. In 2023, Baidu published a paper, AD-MLP (https://arxiv.org/pdf/2305.10430), that discussed the shortcomings of open-loop planning metrics. Using only historical information and no perception at all, it achieved very good open-loop metrics, close to some current state-of-the-art work. Yet obviously, no one can drive a car well with their eyes closed!

AD-MLP achieves good open-loop metrics without relying on any sensor input, which shows that open-loop metrics have little practical value as a reference
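The failure mode can be reproduced in miniature. This is not a reproduction of AD-MLP; it is a minimal sketch, on hypothetical 1-D logged data, of a planner that only extrapolates the last observed speed and is scored with an average displacement error (ADE), the kind of open-loop metric the paragraph criticizes.

```python
# Open-loop check of a history-only planner on one hypothetical logged clip.
# Positions (m) along the lane, sampled every 0.5 s; the driver cruises at
# 10 m/s and then starts to slow down.
DT = 0.5
history = [0.0, 5.0, 10.0, 15.0, 20.0]  # what the planner is allowed to see
future_gt = [24.9, 29.7, 34.4]          # what the human driver actually did

def constant_velocity_plan(history, horizon, dt=DT):
    """Extrapolate the last observed speed: no camera, lidar, or map input."""
    v = (history[-1] - history[-2]) / dt
    return [history[-1] + v * dt * (k + 1) for k in range(horizon)]

def ade(pred, gt):
    """Average displacement error between predicted and logged positions."""
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)

plan = constant_velocity_plan(history, len(future_gt))
print(plan, ade(plan, future_gt))  # a small error despite ignoring the world
```

Because the logged history already encodes most of what happens next, the blind planner scores well; the metric never asks what would happen if the planner's own choices changed the scene.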

Can closed-loop policy evaluation then solve the problems of open-loop imitation learning? For now, the academic community generally relies on the CARLA closed-loop simulator for end-to-end research, but models developed in CARLA, which is built on a game engine, are also difficult to transfer to the real world.
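What closed-loop evaluation adds is that the policy's own actions feed back into the scene. The sketch below is a toy 1-D stand-in for that idea, not CARLA or its API: a hypothetical stopped car sits 40 m ahead, and the same history-only policy that looks fine open-loop is compared with a hypothetical policy that reads the gap.

```python
import math

# Toy closed-loop rollout on a straight road. Hypothetical scene: the ego car
# starts at x = 0 m at 10 m/s and a stopped car sits at x = 40 m.
DT = 0.5           # seconds per simulation step
OBSTACLE_X = 40.0  # position of the stopped car (m)

def blind_policy(speeds, gap):
    """History-only policy: repeat the last speed and ignore the gap ahead."""
    return speeds[-1]

def perceiving_policy(speeds, gap, decel=3.0, margin=5.0):
    """Hypothetical policy that caps speed so it can stop before the gap closes."""
    v_stop = math.sqrt(2.0 * decel * max(gap - margin, 0.0))
    return min(speeds[-1], v_stop)

def rollout(policy, x0=0.0, v0=10.0, steps=20):
    """Closed loop: each action determines the next state the policy sees."""
    x, speeds = x0, [v0]
    for _ in range(steps):
        v = policy(speeds, OBSTACLE_X - x)
        x += v * DT
        speeds.append(v)
        if x >= OBSTACLE_X:
            return x, True  # reached the stopped car: collision
    return x, False

print(rollout(blind_policy))       # collides
print(rollout(perceiving_policy))  # stops short of the obstacle
```

Closed-loop rollout exposes what open-loop scoring hides; the remaining problem raised in the text is that a policy passing in a game-engine simulator may still fail to transfer to real roads.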

Doubt 4: Is end-to-end autonomous driving just an algorithmic innovation?

Finally, end-to-end is not just a new algorithm. In a modular autonomous driving system, the models of different modules can be trained and iterated separately, each on data for its own task. In an end-to-end system, however, all functions are trained at the same time, which requires the training data to be highly consistent: every piece of data must carry accurate labels for all subtasks. If labeling fails for any one task, that piece of data becomes hard to use in end-to-end training, which places extremely high demands on the success rate and performance of the automatic labeling pipeline. Second, an end-to-end system needs every module to reach a high level of performance before it can deliver good results on the final decision-and-planning output. It is therefore generally believed that the data threshold for an end-to-end system is much higher than the data requirements of each individual module, and this threshold concerns not only the absolute quantity of data but also its distribution and diversity. This means that companies that do not fully control their vehicles, and must adapt to customers with multiple suppliers and vehicle models, may face greater difficulties when developing end-to-end systems. As for the compute threshold, Musk stated on X.com in early March this year that the biggest limiting factor for FSD was computing power; more recently, he said that the computing power problem has been greatly eased. At almost the same time, on the 2024 Q1 earnings call, Tesla revealed that it now has 35,000 H100 GPUs and that this number will reach 85,000 by the end of 2024.
There is no doubt that Tesla has very strong compute engineering and optimization capabilities. This suggests that reaching the current level of FSD V12 very likely requires 35,000 H100s and billions of dollars of infrastructure capital expenditure as a prerequisite. For a company less efficient than Tesla, this threshold may be even higher.
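The all-labels-or-nothing requirement described above compounds across subtasks. A minimal sketch, assuming hypothetical task names and (as a simplification) independent labeling failures per task:

```python
from math import prod

# Hypothetical subtasks an auto-labeling pipeline must cover for each clip.
TASKS = ("detection", "tracking", "lane_lines", "occupancy", "ego_trajectory")

def usable_fraction(per_task_success):
    """Share of clips with every label present, assuming independent failures."""
    return prod(per_task_success)

def filter_clips(clips, tasks=TASKS):
    """Keep only clips whose labels exist for *every* subtask."""
    return [c for c in clips if all(c.get(t) is not None for t in tasks)]

# With a 98% success rate per task, five tasks leave about 90% of clips usable.
print(round(usable_fraction([0.98] * len(TASKS)), 3))
```

A modular stack could still train its detector on a clip whose occupancy label failed; the end-to-end filter discards that clip entirely, which is why the auto-labeling success rate matters so much.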

In early March, Musk said that the main limiting factor in the iteration of FSD was computing power

In early April, Musk said that this year Tesla’s total investment in computing power will exceed 10 billion US dollars

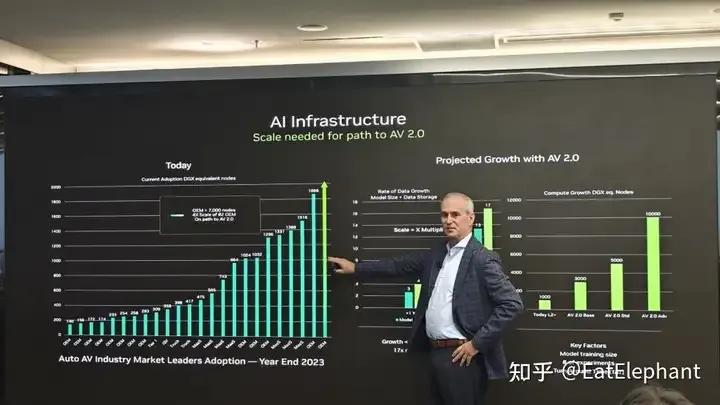

In addition, a user on X.com shared a screenshot of a slide presented this year by Norm Marks, an Nvidia automotive executive. It shows that by the end of 2023, the number of Nvidia GPUs owned by Tesla completely dominates the bar chart (the green arrow on the far right of the left-hand chart; the text in the middle states that this No. 1 OEM owns more than 7,000 DGX nodes). This OEM is obviously Tesla. At 8 GPUs per node, Tesla probably had more than 56,000 A100 GPUs by the end of 2023, more than four times as many as the second-ranked OEM, and this does not include the 35,000 H100s newly purchased in 2024. Combined with U.S. restrictions on exporting GPUs to China, catching up with this level of computing power becomes even harder.

Norm Marks shared a screenshot internally, source X.com@ChrisZheng001

Beyond the data and compute challenges above, an end-to-end system will also face new challenges: how to ensure the controllability of the system, how to detect problems as early as possible, and how to solve problems through data-driven methods and iterate quickly when rule code can no longer be used. For most autonomous driving R&D teams, these are still uncharted challenges.

Finally, end-to-end is also an organizational change for today's autonomous driving R&D teams. Since the L4 era, most autonomous driving teams have been organized by module: not only into perception, prediction, localization, and planning-and-control groups, but with the perception group further split into visual perception, lidar perception, and so on. The end-to-end architecture removes the interface barriers between modules, so an end-to-end R&D team needs to bring all of its people together to adapt to the new technology paradigm. This is a great challenge for teams with a rigid organizational culture.
