1. What is Unicode?

Unicode originated from a very simple idea: include all the characters in the world in one set. As long as the computer supports this character set, it can display all characters, and there will no longer be garbled characters.

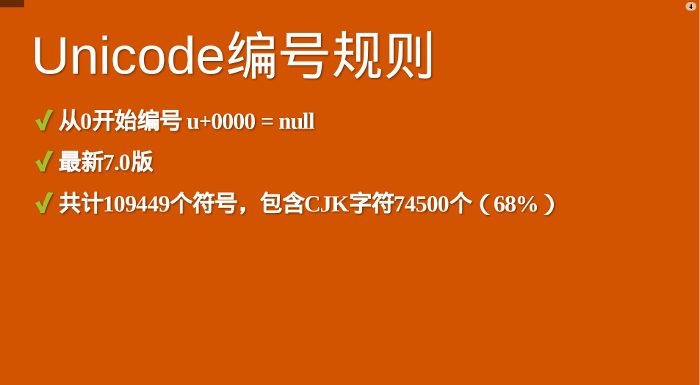

Unicode starts from 0 and assigns a number to each symbol; this number is called a "code point". For example, the symbol for code point 0 is null (meaning that all binary bits are 0).

Code points are written in the form U+0000 = null, where U+ indicates that the hexadecimal number immediately following is a Unicode code point.

At the time of writing, the latest version of Unicode is version 7.0, which contains a total of 109,449 symbols, including 74,500 Chinese, Japanese, and Korean characters. Roughly speaking, more than two-thirds of the symbols in Unicode come from East Asian scripts. For example, the code point of the Chinese character 好 ("good") is U+597D.

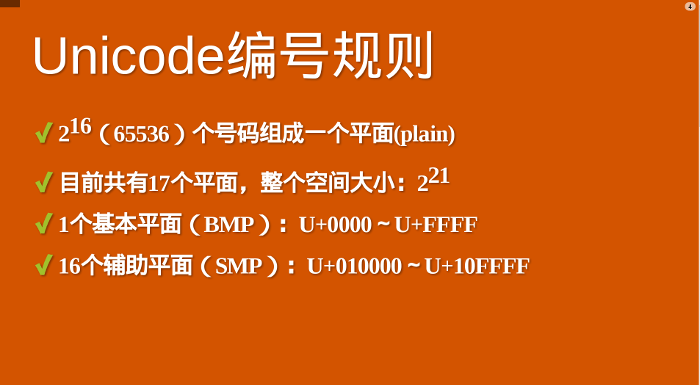

With so many symbols, Unicode is not defined all at once but in partitions. Each partition, called a plane, can hold 65,536 (2^16) characters. Currently there are 17 planes in total, so the entire Unicode code space contains 17 × 2^16 = 1,114,112 code points, which fits within 21 bits.

The first 65,536 character positions are called the basic plane (Basic Multilingual Plane, abbreviated BMP). Its code point range is from 0 to 2^16 - 1; written in hexadecimal, it is from U+0000 to U+FFFF. All the most common characters are placed on this plane, which was the first plane defined and published by Unicode.

The remaining characters are placed in the auxiliary planes (officially the supplementary planes, planes 1 to 16), and their code points range from U+010000 to U+10FFFF.
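As a rough illustration of how planes partition the code space, the plane of a code point can be found by dividing it by 2^16. The helper name `planeOf` is hypothetical and exists only for this sketch.

```javascript
// Hypothetical helper: each plane spans 0x10000 (2^16) code points,
// so the plane index is the code point divided by 0x10000, rounded down.
function planeOf(codePoint) {
  if (!Number.isInteger(codePoint) || codePoint < 0 || codePoint > 0x10FFFF) {
    throw new RangeError('Not a Unicode code point: ' + codePoint);
  }
  return Math.floor(codePoint / 0x10000);
}

console.log(planeOf(0x597D));   // 0  (basic plane)
console.log(planeOf(0x1D306));  // 1  (first auxiliary plane)
console.log(planeOf(0x10FFFF)); // 16 (last plane)
```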

2. UTF-32 and UTF-8

Unicode only stipulates the code point of each character. Which sequence of bytes represents that code point is a matter of the encoding method.

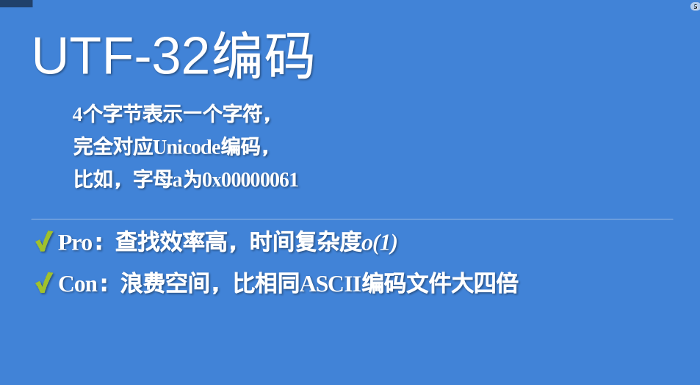

The most intuitive encoding method is to represent each code point with four bytes whose content corresponds to the code point one-to-one. This encoding method is called UTF-32. For example, code point U+0000 is represented by four zero bytes, and code point U+597D is padded with two leading zero bytes (0x0000 597D).
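The one-to-one correspondence can be sketched as follows. This is only an illustration that assumes big-endian byte order (UTF-32BE); the helper name `encodeUtf32BE` is hypothetical.

```javascript
// Hypothetical helper: write a code point as four big-endian bytes (UTF-32BE)
// and return them as a space-separated hex string.
function encodeUtf32BE(codePoint) {
  const bytes = new Uint8Array(4);
  new DataView(bytes.buffer).setUint32(0, codePoint, false); // false = big-endian
  return Array.from(bytes, (b) => b.toString(16).padStart(2, '0')).join(' ');
}

console.log(encodeUtf32BE(0x0000)); // "00 00 00 00"
console.log(encodeUtf32BE(0x597D)); // "00 00 59 7d"
```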

The advantage of UTF-32 is that the conversion rules are simple and intuitive, and lookup is efficient. The disadvantage is that it wastes space: the same English text is four times larger than in ASCII. This shortcoming is so serious that the encoding is rarely used in practice. The HTML5 standard explicitly stipulates that web pages must not be encoded in UTF-32.

What people really needed was a space-saving encoding method, which led to the birth of UTF-8. UTF-8 is a variable-length encoding in which a character takes from 1 to 4 bytes. The more commonly used a character is, the fewer bytes it takes. The first 128 characters are represented by only 1 byte, exactly as in ASCII.

| Code point range | Bytes |
| --- | --- |
| 0x0000 - 0x007F | 1 |
| 0x0080 - 0x07FF | 2 |
| 0x0800 - 0xFFFF | 3 |
| 0x010000 - 0x10FFFF | 4 |
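The byte counts in each range can be checked with the standard `TextEncoder` API, which always produces UTF-8. The sample characters below are chosen only for illustration, one from each range.

```javascript
// TextEncoder always encodes to UTF-8; it is a global in modern browsers and Node.js.
const utf8Encoder = new TextEncoder();

console.log(utf8Encoder.encode('A').length);         // 1 (U+0041)
console.log(utf8Encoder.encode('\u00E9').length);    // 2 (U+00E9)
console.log(utf8Encoder.encode('\u597D').length);    // 3 (U+597D)
console.log(utf8Encoder.encode('\u{1D306}').length); // 4 (U+1D306)
```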

Due to the space-saving characteristics of UTF-8, it has become the most common web page encoding on the Internet. However, it has little to do with today's topic, so I won't go into depth. For specific transcoding methods, you can refer to the "Character Encoding Notes" I wrote many years ago.

3. Introduction to UTF-16

UTF-16 encoding is between UTF-32 and UTF-8, and combines the characteristics of fixed-length and variable-length encoding methods.

Its encoding rules are very simple: characters in the basic plane occupy 2 bytes, and characters in the auxiliary planes occupy 4 bytes. That is to say, the encoding length of UTF-16 is either 2 bytes (U+0000 to U+FFFF) or 4 bytes (U+010000 to U+10FFFF).

So there is a question. When we encounter two bytes, how can we tell whether it is a character by itself, or does it need to be interpreted together with the other two bytes?

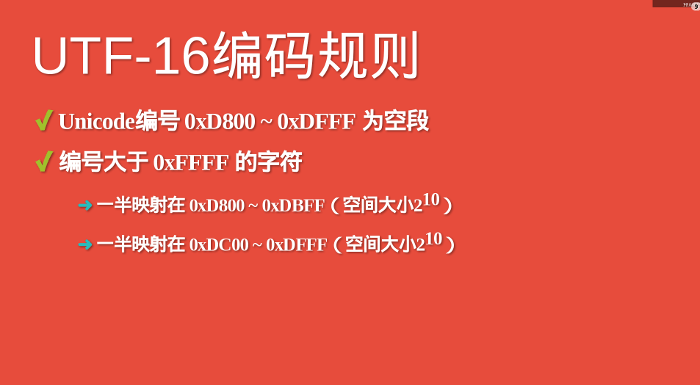

The answer is very clever, though I don't know whether the design was intentional. In the basic plane, the range from U+D800 to U+DFFF is an empty segment, that is, these code points do not correspond to any characters. Therefore, this empty segment can be used to map auxiliary plane characters.

Specifically, the auxiliary planes contain 2^20 character positions, which means that at least 20 binary bits are needed to address them. UTF-16 splits these 20 bits in half. The first 10 bits are mapped to the range U+D800 to U+DBFF (a space of size 2^10) and are called the high bits (H); the last 10 bits are mapped to the range U+DC00 to U+DFFF (also a space of size 2^10) and are called the low bits (L). This means that an auxiliary plane character is split into two basic plane character representations.

So, when we encounter two bytes and find that their code point is between U+D800 and U+DBFF, we can conclude that the code point of the following two bytes should be between U+DC00 and U+DFFF, and that these four bytes must be read together.
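The check described above might be sketched like this, working on an array of 16-bit units. The helper names `isHighUnit`, `isLowUnit`, and `utf16ByteLength` are hypothetical and introduced only for illustration.

```javascript
// Hypothetical helpers: classify a 16-bit unit by the range it falls in.
function isHighUnit(unit) { return unit >= 0xD800 && unit <= 0xDBFF; }
function isLowUnit(unit) { return unit >= 0xDC00 && unit <= 0xDFFF; }

// Number of bytes occupied by the character that starts at units[index]:
// 4 if a high unit is followed by a low unit, otherwise 2.
function utf16ByteLength(units, index) {
  const paired = isHighUnit(units[index]) &&
    index + 1 < units.length &&
    isLowUnit(units[index + 1]);
  return paired ? 4 : 2;
}

console.log(utf16ByteLength([0x597D], 0));         // 2
console.log(utf16ByteLength([0xD834, 0xDF06], 0)); // 4
```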

4. UTF-16 transcoding formula

When converting a Unicode code point to UTF-16, first determine whether it is a basic plane character or an auxiliary plane character. If it is the former, directly convert the code point to the corresponding hexadecimal form, with a length of two bytes.

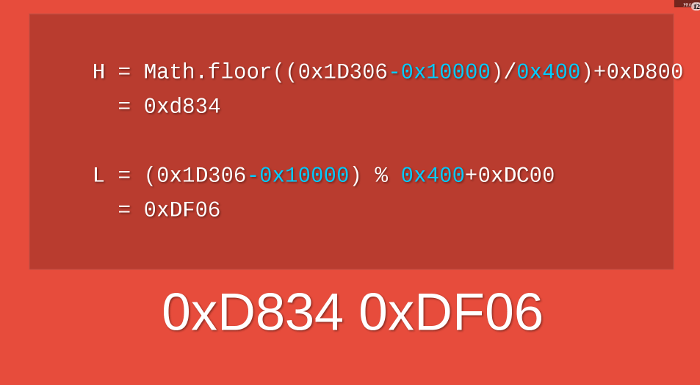

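If it is an auxiliary plane character, Unicode version 3.0 provides a transcoding formula, where c is the code point: H = floor((c - 0x10000) / 0x400) + 0xD800 and L = (c - 0x10000) mod 0x400 + 0xDC00.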

Take the character 𝌆 as an example. It is an auxiliary plane character with code point U+1D306. Substituting this code point into the formula gives H = 0xD834 and L = 0xDF06.

So, the UTF-16 encoding of the character 𝌆 is 0xD834 DF06, and the length is four bytes.
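The conversion for U+1D306 can be reproduced with a few lines of JavaScript. This is a minimal sketch of the standard surrogate formula; the function name `toUtf16Units` is hypothetical, and the code does not validate its input.

```javascript
// Hypothetical helper: convert a code point to its UTF-16 units.
// Basic plane code points map to one unit; auxiliary plane code points
// are split into a high unit (H) and a low unit (L).
function toUtf16Units(codePoint) {
  if (codePoint <= 0xFFFF) {
    return [codePoint];
  }
  const offset = codePoint - 0x10000;                     // 20-bit value
  const high = Math.floor(offset / 0x400) + 0xD800;       // top 10 bits
  const low = (offset % 0x400) + 0xDC00;                  // bottom 10 bits
  return [high, low];
}

const formatUnits = (units) =>
  units.map((u) => '0x' + u.toString(16).toUpperCase()).join(' ');

console.log(formatUnits(toUtf16Units(0x597D)));  // "0x597D"
console.log(formatUnits(toUtf16Units(0x1D306))); // "0xD834 0xDF06"
```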

5. Which encoding does JavaScript use?

The JavaScript language uses the Unicode character set, but only supports one encoding method.

This encoding is neither UTF-16, nor UTF-8, nor UTF-32. None of the above coding methods are used in JavaScript.

JavaScript uses UCS-2!

6. UCS-2 encoding

Why did a UCS-2 suddenly appear? This requires a little history.

In the era before the Internet appeared, there were two teams who wanted to create a unified character set. One is the Unicode team established in 1988, and the other is the UCS team established in 1989. When they discovered each other's existence, they quickly reached an agreement: the world does not need two unified character sets.

In October 1991, the two teams decided to merge the character sets. In other words, from now on, only one character set will be released, which is Unicode, and the previously released character sets will be revised. The code points of UCS will be completely consistent with Unicode.

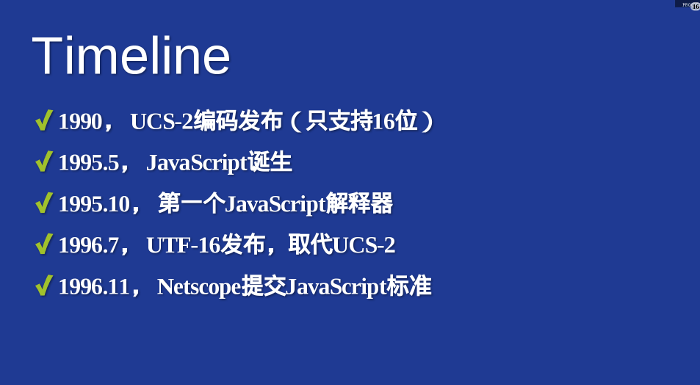

UCS development progressed faster than Unicode. Its first encoding method, UCS-2, was announced in 1990 and used 2 bytes to represent the characters that already had code points. (At that time, there was only one plane, the basic plane, so 2 bytes were enough.) UTF-16 encoding was not announced until July 1996, and it was explicitly declared to be a superset of UCS-2: basic plane characters keep their UCS-2 encoding, while auxiliary plane characters are given a 4-byte representation.

To put it simply, the relationship between the two is that UTF-16 replaces UCS-2, or UCS-2 is integrated into UTF-16. So, now there is only UTF-16, no UCS-2.

7. Background of the birth of JavaScript

So, why doesn’t JavaScript choose the more advanced UTF-16, but uses the obsolete UCS-2?

The answer is simple: it is not that JavaScript did not want to, but that it could not. When the JavaScript language appeared, UTF-16 encoding did not exist yet.

In May 1995, Brendan Eich spent 10 days designing the JavaScript language; in October, the first interpreter came out; in November of the following year, Netscape officially submitted the language standard to ECMA (for details on the entire process, see "The Birth of JavaScript"). Compare this with the release date of UTF-16 (July 1996) and you will understand that Netscape had no other choice at that time: only UCS-2 was available as an encoding method!

8. Limitations of JavaScript character functions

Since JavaScript can only handle UCS-2 encoding, all characters in this language are 2 bytes. If it is a 4-byte character, it will be treated as two double-byte characters. JavaScript's character functions are all affected by this and cannot return correct results.

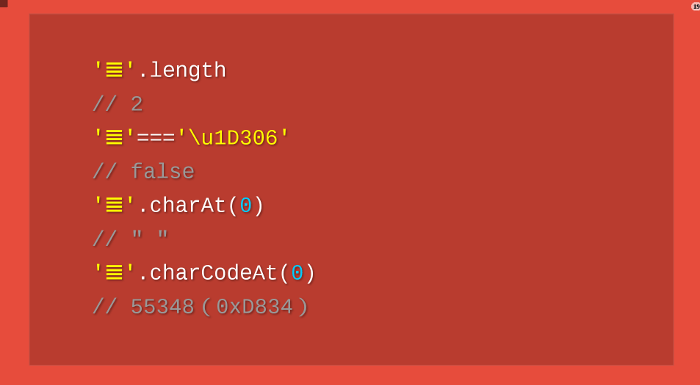

Let's take the character 𝌆 as an example. Its UTF-16 encoding is the 4 bytes 0xD834 DF06. This is where the problem arises: the 4-byte encoding does not belong to UCS-2, so JavaScript does not recognize it and treats it as two separate characters, U+D834 and U+DF06. As mentioned before, these two code points are empty, so JavaScript will think that 𝌆 is a string composed of two empty characters!

In other words, JavaScript considers the length of the character 𝌆 to be 2, the first character it returns is an empty character, and the code point it reports for that first character is 0xD834. None of these results is correct!
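These results can be reproduced directly. In the sketch below, the character is written with escape sequences for its two 16-bit units so that the example does not depend on the file's encoding; the variable name is arbitrary.

```javascript
// U+1D306 written as its two UTF-16 units.
const tetragram = '\uD834\uDF06';

console.log(tetragram.length);                     // 2, not 1
console.log(tetragram.charAt(0) === '\uD834');     // true: only the first half is returned
console.log(tetragram.charCodeAt(0).toString(16)); // "d834"
console.log(tetragram.charCodeAt(1).toString(16)); // "df06"
```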

To solve this problem, you must check the code point and then adjust manually. When traversing a string correctly, every code point has to be tested: as long as it falls in the range from 0xD800 to 0xDBFF, it must be read together with the following 2 bytes.
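A traversal along these lines might look like the following sketch. The function name `getSymbols` is hypothetical; the extra check on the following unit is an addition here so that a stray high unit at the end of a string does not swallow its neighbour.

```javascript
// Hypothetical helper: split a string into whole characters,
// joining each high unit (0xD800-0xDBFF) with the low unit that follows it.
function getSymbols(string) {
  const output = [];
  for (let index = 0; index < string.length; index++) {
    const charCode = string.charCodeAt(index);
    let character = string.charAt(index);
    if (charCode >= 0xD800 && charCode <= 0xDBFF && index + 1 < string.length) {
      const next = string.charCodeAt(index + 1);
      if (next >= 0xDC00 && next <= 0xDFFF) {
        // Read the following 2 bytes together with the current ones.
        character += string.charAt(++index);
      }
    }
    output.push(character);
  }
  return output;
}

console.log(getSymbols('a\uD834\uDF06b').length); // 3
```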

Similar problems exist with all JavaScript character manipulation functions.

String.prototype.replace()

String.prototype.substring()

String.prototype.slice()

...

The above functions are only valid for 2-byte code points. To handle 4-byte code points correctly, you must implement your own versions one by one, each checking the code point range of the current character.

9. ECMAScript 6

The next version of JavaScript, ECMAScript 6 (ES6 for short), greatly enhances Unicode support and basically solves this problem.

(1) Correctly identify characters

ES6 can automatically recognize 4-byte code points. Therefore, iterating over the string is much simpler.
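For instance, the `for...of` loop introduced in ES6 iterates over a string by code point rather than by 16-bit unit. The variable names below are arbitrary.

```javascript
const sampleText = 'a\u{1D306}b';
const seenChars = [];

// for...of uses the string iterator, which yields whole code points.
for (const ch of sampleText) {
  seenChars.push(ch);
}

console.log(seenChars.length);              // 3
console.log(seenChars[1] === '\u{1D306}');  // true
```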

However, to maintain compatibility, the length attribute still behaves in its original way. To get the correct length of a string, you can convert it into an array of characters (for example with Array.from) and take the length of that array.
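A short sketch of counting characters instead of 16-bit units, using `Array.from` and the spread syntax, both of which rely on the string iterator:

```javascript
const oneSymbol = '\u{1D306}';

console.log(oneSymbol.length);             // 2: counts 16-bit units
console.log(Array.from(oneSymbol).length); // 1: counts code points
console.log([...oneSymbol].length);        // 1: spread uses the same iterator
```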

(2) Code point representation

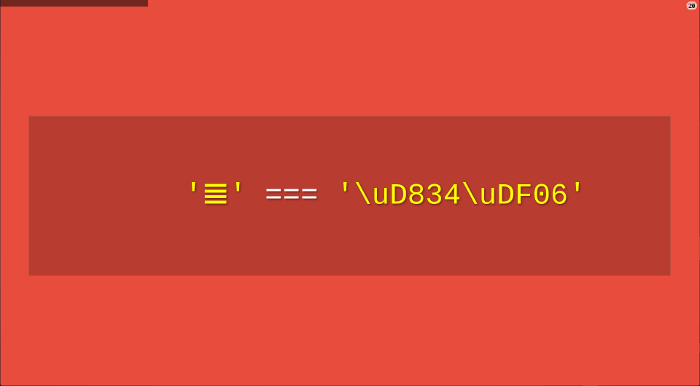

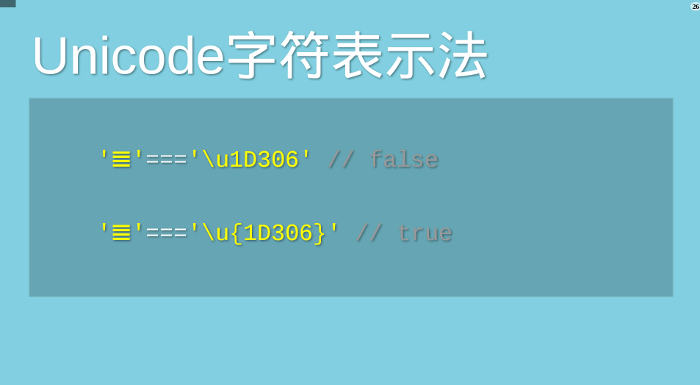

JavaScript allows you to use code points directly to represent Unicode characters. The notation is a backslash, the letter u, and the four-digit hexadecimal code point, for example \u597D.

However, this notation is not valid for 4-byte code points. ES6 fixes this problem: a code point is recognized correctly as long as it is placed within curly brackets, for example \u{1D306}.
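The difference between the two notations can be seen in a small sketch. Without curly brackets, only the first four hexadecimal digits are consumed by the escape.

```javascript
console.log('\u597D'.charCodeAt(0).toString(16)); // "597d"

// Only four hex digits are read: this is '\u1D30' followed by the digit '6'.
console.log('\u1D306' === '\u1D30' + '6');        // true

// With curly brackets the whole code point is recognized.
console.log('\u{1D306}' === '\uD834\uDF06');      // true
```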

(3) String processing function

ES6 adds several new functions that specifically handle 4-byte code points.

String.fromCodePoint(): Returns the corresponding character from the Unicode code point

String.prototype.codePointAt(): Returns the corresponding code point from the character

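String.prototype.at(): Returns the character at the given position in the string (this was proposed alongside the others but was not included in ES6; the at() method later standardized in ES2022 indexes 16-bit units, not code points)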
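A brief sketch of the first two functions, compared with their older 16-bit counterparts:

```javascript
const fromPoint = String.fromCodePoint(0x1D306);

console.log(fromPoint === '\uD834\uDF06');          // true
console.log(fromPoint.codePointAt(0).toString(16)); // "1d306"

// Index 1 lands in the middle of the character, so only the low unit is returned.
console.log(fromPoint.codePointAt(1).toString(16)); // "df06"

// The older String.fromCharCode truncates its argument to 16 bits.
console.log(String.fromCharCode(0x1D306) === fromPoint); // false
```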

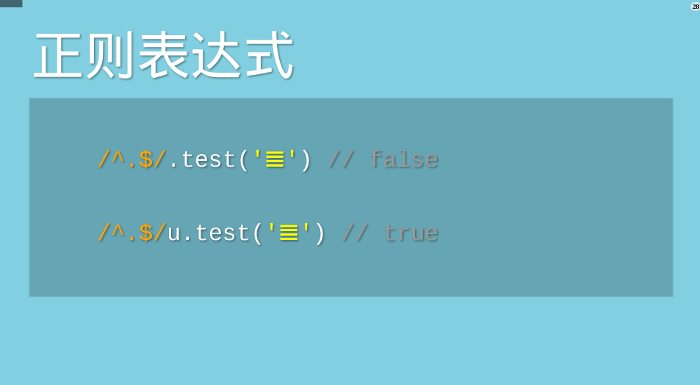

(4) Regular expression

ES6 provides the u modifier for regular expressions, which makes them treat a 4-byte code point as a single character.
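The effect of the u modifier can be seen by matching a single 4-byte character against the dot, as in this sketch:

```javascript
const astral = '\u{1D306}';

// Without u, the dot matches one 16-bit unit, so the two-unit string fails.
console.log(/^.$/.test(astral));        // false

// With u, the dot matches one whole code point.
console.log(/^.$/u.test(astral));       // true

// The curly-bracket escape is also available inside a u-mode pattern.
console.log(/\u{1D306}/u.test(astral)); // true
```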

(5) Unicode normalization

Some characters carry additional symbols on top of the letter. For example, in the Chinese Pinyin letter Ǒ, the tone mark above the letter is an additional symbol. For many European languages, such marks are very important.

Unicode provides two ways to represent such characters. One is a single character that already includes the additional symbol, that is, one code point represents one character; for example, the code point of Ǒ is U+01D1. The other treats the additional symbol as a separate code point that is combined with the main character, that is, two code points represent one character; for example, Ǒ can be written as O (U+004F) followed by ˇ (U+030C).

Both representations are visually and semantically identical and should be treated as equivalent. However, JavaScript can't tell.

ES6 provides the normalize method, which performs "Unicode normalization", that is, it converts both representations into the same sequence.
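A small sketch of the two representations of Ǒ and the effect of normalize, which defaults to the composed form NFC:

```javascript
const precomposed = '\u01D1';     // one code point
const combined = '\u004F\u030C';  // O followed by the combining mark

console.log(precomposed === combined);                         // false
console.log(precomposed.normalize() === combined.normalize()); // true

console.log(combined.normalize('NFC').length);    // 1: composed form
console.log(precomposed.normalize('NFD').length); // 2: decomposed form
```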

For more introduction to ES6, please see "Introduction to ECMAScript 6".