Node.js is developing in full swing, and we can already use it for all sorts of things. Some time ago I took part in the Geek Song event, where we set out to build a game that gets "heads-down" people, the ones always buried in their screens, to interact with each other more. Its core feature is real-time multiplayer interaction based on the LAN party concept. The competition lasts a pitifully short 36 hours, so everything has to be done quickly. Under those conditions our initial preparation was, admittedly, a little "taken for granted". For a cross-platform application we chose node-webkit, which is simple enough and meets our requirements.

Our requirements allowed development to proceed module by module. This article describes in detail how we developed Spaceroom (our real-time multiplayer game framework): the explorations and attempts along the way, the limitations of the Node.js and WebKit platforms that we ran into, and the solutions we came up with.

Getting started

Spaceroom at a glance

From the very beginning, the design of Spaceroom was driven by our needs. We wanted the framework to provide the following basic functions:

Distinguish groups of users by room (or channel)

Collect instructions from the users in a group

Keep time synchronized across clients and broadcast game data accurately at a specified interval

Minimize the impact of network latency

Of course, later in development we added more functions to Spaceroom, including pausing the game and generating consistent random numbers across clients. (These could equally be implemented in the game logic layer as needed; they do not have to come from Spaceroom, which works mainly at the communication level.)

APIs

Spaceroom is divided into two parts: a front end and a back end. The server side is responsible for maintaining the room list and for creating and joining rooms. Our client APIs look like this:

spaceroom.connect(address, callback) – connect to the server

spaceroom.createRoom(callback) – create a room

spaceroom.joinRoom(roomId) – join a room

spaceroom.on(event, callback) – listen for events

…
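As a rough illustration of how these calls might fit together, here is a minimal sketch. The real client is not shown in this article, so the sketch runs against a tiny in-memory stand-in (createFakeSpaceroom). The stand-in itself, the error-first callback signatures, the event name "playerJoined", and the address are all assumptions made for illustration, not Spaceroom's actual behavior.

```javascript
// Hypothetical in-memory stand-in for the Spaceroom client, for illustration only.
// It mimics the four calls listed above; nothing here talks to a real server.
function createFakeSpaceroom() {
  const handlers = {};
  return {
    on(event, callback) {
      (handlers[event] = handlers[event] || []).push(callback);
    },
    connect(address, callback) {
      callback(null); // assumed error-first callback
    },
    createRoom(callback) {
      callback(null, "room-1"); // assumed: the new room id is passed back
    },
    joinRoom(roomId) {
      // assumed event name and payload
      (handlers.playerJoined || []).forEach((cb) => cb({ roomId: roomId }));
    },
  };
}

// Assumed call order: listen for events, connect, create a room, then join it.
function runDemo(spaceroom) {
  const log = [];
  spaceroom.on("playerJoined", (info) => log.push("joined " + info.roomId));
  spaceroom.connect("127.0.0.1:8000", (err) => {
    if (err) throw err;
    spaceroom.createRoom((roomErr, roomId) => {
      if (roomErr) throw roomErr;
      spaceroom.joinRoom(roomId);
    });
  });
  return log;
}

console.log(runDemo(createFakeSpaceroom())); // [ 'joined room-1' ]
```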

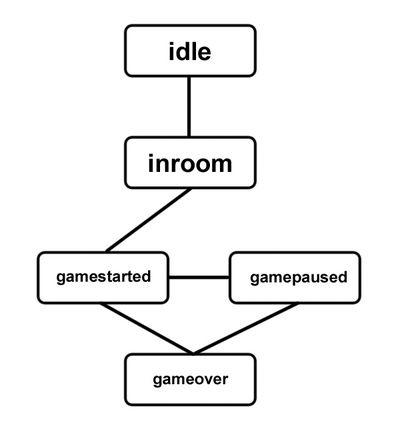

After the client connects to the server, it receives a variety of events. For example, a user in a room may be notified that a new player has joined or that the game has started. We gave the client a "life cycle": at any moment it is in one of the following states:

You can get the current state of the client through spaceroom.state.

Using the server-side framework is relatively simple: with the default configuration file you can just run it directly. We had one basic requirement: the server code must be able to run directly inside the client, without a separate server. Those of you who have played on a PS or PSP will know exactly what I mean. Of course, it can also run on a dedicated server, which is even better.

The implementation of the logic code is only summarized here. The first generation of Spaceroom implemented a socket server that maintains a list of rooms, including each room's status and the in-game communication for each room (instruction collection, bucket broadcast, and so on). For the specific implementation, please refer to the source code.

Synchronization algorithm

So, how do we keep what each client displays consistent in real time?

This is an interesting question. Think about it carefully: what do we actually need the server to relay for us? The natural answer is whatever could cause logical inconsistencies between clients, namely user instructions. The code that handles the game logic is identical everywhere, so given the same inputs it produces the same results. The only thing that differs is the set of player instructions received during the game, so we need a way to synchronize them. If every client receives the same instructions, then in theory every client ends up with the same result.

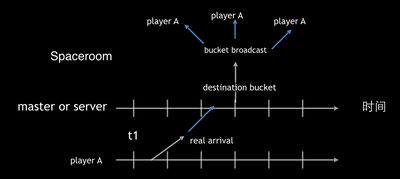

The synchronization algorithms used by online games come in all shapes and sizes, and each suits different scenarios. The one Spaceroom uses is similar to the concept of frame locking. We divide the timeline into intervals, each called a bucket. A bucket holds instructions and is maintained by the server. At the end of each bucket's time period, the server broadcasts the bucket to all clients. When a client receives a bucket, it takes the instructions out, verifies them, and executes them.

To reduce the impact of network latency, the server assigns each instruction it receives from a client to a bucket according to the following steps:

1. Let order_start be the time of occurrence carried by the instruction, and let t be the start time of the bucket that contains order_start.

2. If t + delay_time <= the start time of the bucket currently collecting instructions, deliver the instruction to the bucket currently collecting instructions; otherwise continue to step 3.

3. Deliver the instruction to the bucket corresponding to t + delay_time.
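The steps above can be sketched as a small function, taking the target time to be t + delay_time. This is an illustration rather than Spaceroom's actual code: the function names are hypothetical, buckets are identified by their start time, and bucket boundaries are assumed to fall on multiples of the bucket size counted from time 0.

```javascript
// Sketch of the three delivery steps. All times are in milliseconds.
// Assumption: bucket boundaries are multiples of bucketSize, counted from time 0.
function bucketStartOf(time, bucketSize) {
  return Math.floor(time / bucketSize) * bucketSize;
}

// Returns the start time of the bucket that should receive the instruction.
function chooseBucket(orderStart, collectingBucketStart, bucketSize, delayTime) {
  // Step 1: t is the start of the bucket in which the instruction occurred.
  const t = bucketStartOf(orderStart, bucketSize);
  // Step 2: a target that is not ahead of the collecting bucket goes into
  // the bucket that is currently collecting instructions.
  if (t + delayTime <= collectingBucketStart) {
    return collectingBucketStart;
  }
  // Step 3: otherwise use the bucket that contains t + delayTime.
  return bucketStartOf(t + delayTime, bucketSize);
}

console.log(chooseBucket(100, 96, 48, 80));  // 144: delivered to a future bucket
console.log(chooseBucket(100, 240, 48, 80)); // 240: late arrival, current bucket
```

In the first call the instruction occurred at 100ms, so t = 96 and t + delay_time = 176, which falls in the bucket starting at 144ms. In the second call the instruction arrived so late that the server is already collecting the bucket starting at 240ms, so it goes there.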

Here delay_time is the agreed server delay, which can be taken as the average latency between clients. Its default value in Spaceroom is 80ms, and the default bucket length is 48ms. At the end of each bucket's time period, the server broadcasts the bucket to all clients and starts collecting instructions for the next bucket. Based on the interval between received buckets, the client automatically adjusts its own timing to keep the time error within an acceptable range.

This means that under normal circumstances the client receives a bucket from the server every 48ms and processes it when its time comes. Assuming the client runs at 60 FPS, a bucket arrives roughly every 3 frames, and the logic is updated based on it. If network fluctuations prevent a bucket from arriving in time, the client suspends the game logic and waits. Within a bucket, the logic can be updated with linear interpolation (lerp).
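To make the lerp remark concrete, here is a minimal sketch of interpolating a value between two bucket updates. The helper interpolatedValue and its parameters are hypothetical; the article does not say which quantities Spaceroom-based games interpolate.

```javascript
// Linear interpolation between a and b; ratio is clamped to [0, 1].
function lerp(a, b, ratio) {
  const r = Math.min(1, Math.max(0, ratio));
  return a + (b - a) * r;
}

// Hypothetical helper: the value to draw elapsedMs after a bucket was applied,
// moving from the previous logical value to the one the bucket produced.
function interpolatedValue(previous, current, elapsedMs, bucketSize) {
  return lerp(previous, current, elapsedMs / bucketSize);
}

console.log(interpolatedValue(0, 100, 12, 48)); // 25
console.log(interpolatedValue(0, 100, 24, 48)); // 50
console.log(interpolatedValue(0, 100, 60, 48)); // 100 (clamped)
```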

With delay_time = 80 and bucket_size = 48, any instruction is delayed by at least 96ms. Changing the two parameters to, for example, delay_time = 60 and bucket_size = 32 brings that figure down to 64ms.
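One reading that reproduces both figures, offered here as an assumption rather than the authors' stated derivation, is to measure from the start of the bucket in which the instruction occurred up to the moment the receiving bucket is broadcast. The function name below is hypothetical.

```javascript
// Assumption: the delay is measured from the start of the bucket in which the
// instruction occurred to the moment the receiving bucket is broadcast.
function broadcastOffset(delayTime, bucketSize) {
  // The receiving bucket starts this many whole buckets later...
  const bucketsAhead = Math.floor(delayTime / bucketSize);
  // ...and it is broadcast when it ends, one bucket length after it starts.
  return (bucketsAhead + 1) * bucketSize;
}

console.log(broadcastOffset(80, 48)); // 96
console.log(broadcastOffset(60, 32)); // 64
```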

A murder caused by a timer

Looking at the whole picture, our framework needs an accurate timer at run time so that it can broadcast buckets at a fixed interval. Naturally, we first thought of setInterval(), but a second later we realized how unreliable that idea was: the naughty setInterval() appeared to have a very serious error. Worse, the error accumulates with every tick, with increasingly serious consequences.

So we immediately thought of using setTimeout() and dynamically correcting the next delay to keep our logic roughly stable around the specified interval. For example, if this setTimeout() fired 5ms later than expected, we schedule the next one 5ms earlier. However, the test results were unsatisfactory, and the approach is not elegant enough either.
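A minimal sketch of such a self-correcting timer is shown below. It is not the code we tested at the time; the names nextDelay and startCorrectedTimer are hypothetical, and the loop measures lateness against an ideal schedule of evenly spaced ticks.

```javascript
// Delay for the next setTimeout so that ticks stay aligned to the ideal schedule.
// expectedTime is when the current tick should have fired; now is the actual time.
function nextDelay(interval, expectedTime, now) {
  const lateBy = now - expectedTime;      // positive when we are running late
  return Math.max(0, interval - lateBy);  // fire correspondingly earlier next time
}

// Self-correcting loop built on nextDelay. Returns a function that stops the loop.
function startCorrectedTimer(interval, onTick) {
  let expected = Date.now() + interval;
  let stopped = false;
  let handle = setTimeout(function tick() {
    if (stopped) return;
    onTick();
    const delay = nextDelay(interval, expected, Date.now());
    expected += interval;
    handle = setTimeout(tick, delay);
  }, interval);
  return function stop() {
    stopped = true;
    clearTimeout(handle);
  };
}

console.log(nextDelay(48, 1000, 1005)); // 43: fired 5ms late, so wait 5ms less
```

The correction prevents errors from accumulating, but each individual tick is still only as precise as the underlying timer.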

So we had to change our thinking again. Could we make setTimeout() expire as quickly as possible and then check whether the current time has reached the target time? For example, using setTimeout(callback, 1) in our loop to check the time constantly seemed like a good idea.

Disappointing timer

We immediately wrote a piece of code to test the idea, and the results were disappointing. With the latest stable version of Node.js at the time (v0.10.32) on Windows, run this code:
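A minimal sketch of such a test (not the original listing) might look like the following. It assumes only what the text implies, namely two globals called sum and count; the helper names record and startMeasuring are hypothetical.

```javascript
// Running totals; sum / count is the average interval in milliseconds.
let sum = 0;
let count = 0;

function record(elapsedMs) {
  sum += elapsedMs;
  count += 1;
}

// Re-arm setTimeout(tick, delayMs) forever and record how long each wait took.
function startMeasuring(delayMs) {
  let last = Date.now();
  function tick() {
    const now = Date.now();
    record(now - last);
    last = now;
    setTimeout(tick, delayMs);
  }
  setTimeout(tick, delayMs);
}

// In the Node.js console: call startMeasuring(1), wait a while,
// then evaluate sum / count.
```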

After a while, enter sum/count in the console and you will see a result similar to:

What?! I asked for a 1ms interval and you tell me the actual average interval is 15.625ms! This picture is simply too beautiful. We ran the same test on a Mac and got 1.4ms. So we wondered: what on earth is going on? If I were an Apple fan, I might conclude that Windows is rubbish and give up on it. Fortunately, I am a rigorous front-end engineer, so I started thinking about this number.

Wait, why does this number look so familiar? Isn't 15.625ms suspiciously close to the maximum timer interval on Windows? I immediately downloaded ClockRes to check, and running it in the console gave the following results:

As expected! Looking at the node.js manual, we can see this description of setTimeout:

The actual delay depends on external factors like OS timer granularity and system load.

However, the test results show that the actual delay equals the maximum timer interval (note that the system's current timer interval at this point is only 1.001ms), which is unacceptable in any case. Strong curiosity drove us to take a closer look at the Node.js source code.

A bug in Node.js

I believe most of you, like me, have some understanding of the Node.js event loop mechanism. Reading the source code of the timer implementation gives a rough idea of how timers work. Let us start from the main loop of the event loop:

(https://github.com/joyent/libuv/blob/v0.10/src/win/timer.c)

Internally, the function that updates the loop time, uv_update_time, uses the Windows GetTickCount() function to set the current time. Simply put, after setTimeout is called, and after a series of twists and turns, the internal timer->due is set to the current loop time plus the timeout. In the event loop, uv_update_time first updates the loop's current time, and uv_process_timers then checks whether any timer has expired; if so, execution enters the JavaScript world. Reading through the code, the event loop roughly works like this:

Update global time

Check the timer, if any timer expires, execute the callback

Check the reqs queue and execute pending requests

Enter the poll function to collect IO events. If an IO event arrives, add the corresponding handler to the reqs queue so that it runs in the next iteration of the event loop. Inside the poll function, a system call is made to collect IO events. This call blocks the process until an IO event arrives or the given timeout is reached, and the timeout passed to it is the time remaining until the nearest timer expires. In other words, collecting IO events blocks for at most as long as it takes the next timer to expire.

Source code of one of the poll functions under Windows:

Following the steps above, suppose we set a timer with timeout = 1ms. The poll function blocks for at most 1ms and then returns (if no IO event arrives in the meantime). On the next pass through the event loop, uv_update_time updates the time, and uv_process_timers then finds that our timer has expired and runs the callback. So the preliminary analysis is that either uv_update_time is at fault (the current time is not updated correctly), or something is wrong with the 1ms wait inside the poll function.

Looking up MSDN, we were surprised to find this description of the GetTickCount function:

The resolution of the GetTickCount function is limited to the resolution of the system timer, which is typically in the range of 10 milliseconds to 16 milliseconds.

The resolution of GetTickCount is that coarse! Suppose the poll function correctly blocks for 1ms; the next time uv_update_time runs, the loop's current time is still not updated correctly. Our timer is therefore not judged to have expired, so poll waits another 1ms and the loop goes around again. Only when GetTickCount finally advances (it is updated once every 15.625ms) and the loop's current time is updated does uv_process_timers judge our timer to have expired.
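The effect described above can be reproduced with a small simulation. This is only a model of the behavior, not libuv code: no real timers are involved, the coarse clock is assumed to advance in 15.625ms steps, and each poll is assumed to block for exactly the time requested. The function name is hypothetical.

```javascript
// Simulation only; all times are in milliseconds.
// Assumption: the clock read by the loop advances in steps of tickMs (like
// GetTickCount on the test machine), while each poll really blocks for pollMs.
function simulateCoarseLoop(timeoutMs, tickMs, pollMs) {
  let realTime = 0;
  const coarseNow = () => Math.floor(realTime / tickMs) * tickMs;

  const due = coarseNow() + timeoutMs; // timer->due = loop time + timeout
  for (;;) {
    const loopTime = coarseNow();      // what uv_update_time would see
    if (loopTime >= due) {             // the check done in uv_process_timers
      return realTime;                 // the callback would run now
    }
    realTime += pollMs;                // poll blocks, then the loop goes around
  }
}

// A 1ms timer only fires once the coarse clock has ticked over.
console.log(simulateCoarseLoop(1, 15.625, 1)); // 16
```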

Ask WebKit for help

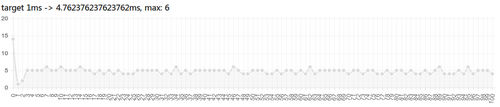

The Node.js source code leaves us rather helpless here: it uses a low-precision time function without compensating for it in any way. But it immediately occurred to us that, since we are using Node-WebKit, we have Chromium's setTimeout in addition to Node.js's. We wrote a test page that can be run in a browser or in Node-WebKit: http://marks.lrednight.com/test.html#1 (the number after # is the interval to measure). The result is shown below:

According to the HTML5 specification, the theoretical result should be 1ms for the first 5 calls and 4ms afterwards. The test page displays results starting from the third call, so the data in the table should theoretically be 1ms for the first three entries and 4ms for all the rest. The results contain some error, and according to the specification the smallest result we can theoretically get is 4ms. Although we were not fully satisfied, this is obviously far better than the Node.js result. Strong curiosity drove us to look at the Chromium source code to see how it is implemented. (https://chromium.googlesource.com/chromium/src.git/+/38.0.2125.101/base/time/time_win.cc)
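The clamping rule described at the start of the paragraph above can be sketched as follows. The function and constant names are hypothetical, and the threshold of 5 calls follows this article's description rather than any particular browser's implementation.

```javascript
// Assumption, following the description above: the first 5 nested calls keep
// the requested delay, and later calls are raised to at least 4ms.
// callIndex is 1-based: 1 for the first setTimeout in the chain, and so on.
function effectiveDelay(requestedMs, callIndex) {
  const MIN_NESTED_DELAY_MS = 4;
  const UNCLAMPED_CALLS = 5;
  if (callIndex > UNCLAMPED_CALLS && requestedMs < MIN_NESTED_DELAY_MS) {
    return MIN_NESTED_DELAY_MS;
  }
  return requestedMs;
}

const delays = [1, 2, 3, 4, 5, 6, 7, 8].map((i) => effectiveDelay(1, i));
console.log(delays.join(" ")); // 1 1 1 1 1 4 4 4
console.log(effectiveDelay(48, 100)); // 48: long delays are not affected
```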

First, to determine the loop's current time, Chromium uses the timeGetTime() function. According to MSDN, the precision of this function is affected by the system's current timer interval. On our test machine that is, in theory, the 1.001ms mentioned above. By default, however, the timer interval on Windows is at its maximum (15.625ms on the test machine) unless an application changes the global timer interval.

If you follow IT news, you have probably seen a story along these lines. It seems that Chromium sets the timer interval to a very small value! So we no longer have to worry about the system timer interval? Don't celebrate too soon: the fix for it dealt us a blow. In fact, this issue has been fixed in Chrome 38. Should we then use an older Node-WebKit from before the fix? That is obviously inelegant and prevents us from using better-performing versions of Chromium.

Looking further at the Chromium source code, we can find that when there is a timer and the timer's timeout

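Here, kMinTimerIntervalLowResMs = 4 and kMinTimerIntervalHighResMs = 1. timeBeginPeriod and timeEndPeriod are functions provided by Windows for changing the system timer interval. In other words, when the machine is plugged in, the smallest timer interval we can get is 1ms, while on battery it is 4ms. Since our loop calls setTimeout continuously, and the W3C specification sets the minimum interval at 4ms anyway, we can breathe a sigh of relief: this has little impact on us.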

Another precision problem

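Back to the beginning: our test results show that the setTimeout interval is not stable at 4ms but fluctuates constantly. The test at http://marks.lrednight.com/test.html#48 likewise shows the interval jumping between 48ms and 49ms. The reason is that, in the event loops of both Chromium and Node.js, the precision of the Windows function call that waits for IO events is affected by the current system timer. Implementing the game logic requires the requestAnimationFrame function (to keep redrawing the canvas), and this function sets the timer interval to at least kMinTimerIntervalLowResMs for us (because it needs a 16ms timer, which triggers the high-precision timer requirement). When the test machine is on mains power, the system timer interval is 1ms, so the test results have an error of ±1ms. If the system timer interval on your computer has not been changed, running the #48 test above may give a max of 48 + 16 = 64ms.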

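Using Chromium's setTimeout implementation, we can keep the error of setTimeout(fn, 1) at around 4ms and the error of setTimeout(fn, 48) at around 1ms. So a new blueprint took shape in our minds, one that makes our code look like this: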
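A minimal sketch of that idea, not the original listing: sleep with setTimeout for slightly less than one bucket, then busy-wait for the remainder. The names busyWaitUntil and startGameLoop are hypothetical, and the deviation of 2ms is an assumption chosen to match the 46ms figure mentioned in the text.

```javascript
const BUCKET_SIZE = 48; // ms
const DEVIATION = 2;    // ms, assumed margin: the timer is armed for 46ms

// Spin until the clock reaches targetMs; returns the time at which we stopped.
// `now` is a function returning the current time, e.g. Date.now.
function busyWaitUntil(targetMs, now) {
  let current = now();
  while (current < targetMs) {
    current = now();
  }
  return current;
}

// Calls gameLoop once per bucket. Returns a function that stops the loop.
function startGameLoop(gameLoop, bucketSize, deviation) {
  let target = Date.now() + bucketSize;
  let stopped = false;
  function schedule() {
    // Coarse wait: wake up `deviation` ms before the target time.
    const coarseDelay = Math.max(0, target - Date.now() - deviation);
    setTimeout(function () {
      if (stopped) return;
      busyWaitUntil(target, Date.now); // fine wait: spin for the remainder
      target += bucketSize;
      gameLoop();
      schedule();
    }, coarseDelay);
  }
  schedule();
  return function stop() {
    stopped = true;
  };
}

// Usage: const stop = startGameLoop(broadcastBucket, BUCKET_SIZE, DEVIATION);
// where broadcastBucket is whatever should run once per bucket.
```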

The above code lets us wait for a time slightly shorter than bucket_size (bucket_size - deviation) rather than exactly bucket_size. Even if the 46ms delay incurs the maximum error, by the reasoning above the actual interval is still less than 48ms. For the remaining time we busy-wait, which ensures that our gameLoop runs at a sufficiently precise interval.

Although we solved the problem to a certain extent using Chromium, it is obviously not elegant enough.

Remember our original requirement? Our server-side code should be able to run independently of the Node-WebKit client, directly on any computer with a Node.js environment. If you run the above code directly under Node.js, deviation has to be at least 16ms, which means that out of every 48ms we busy-wait for 16ms. CPU usage shoots up.

Unexpected surprise

It's really annoying. Such a big bug in Node.js, and nobody noticed? The answer really surprised us: the bug was fixed in v0.11.3. You can also see the change by looking at the master branch of the libuv code. The fix adds the timeout to the loop's current time after the poll function finishes waiting. That way, even if GetTickCount has not advanced, the time spent waiting in poll is still accounted for, so the timer can expire as expected.
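The effect of that fix can be illustrated with a small simulation. This models only the behavior described above and is not libuv code: the coarse clock is assumed to advance in 15.625ms steps, each poll is assumed to block for exactly the requested timeout, and the loop time is assumed never to move backwards. The function name is hypothetical.

```javascript
// Simulation only; all times are in milliseconds.
// Assumptions: the clock read by the loop advances in steps of tickMs, each
// poll blocks for exactly the requested timeout, and the loop time never
// moves backwards.
function simulateFixedLoop(timeoutMs, tickMs) {
  let realTime = 0;
  let loopTime = 0;
  const due = loopTime + timeoutMs;
  for (;;) {
    // Refresh the loop time from the coarse clock.
    const coarse = Math.floor(realTime / tickMs) * tickMs;
    loopTime = Math.max(loopTime, coarse);
    if (loopTime >= due) {
      return realTime; // the timer is judged to have expired
    }
    const pollTimeout = due - loopTime;
    realTime += pollTimeout; // poll blocks for the timeout
    loopTime += pollTimeout; // the fix: add the waited time to the loop time
  }
}

// With the waited time added, a 1ms timer fires after 1ms instead of ~16ms.
console.log(simulateFixedLoop(1, 15.625)); // 1
```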

In other words, the problem we spent so long on had already been solved in v0.11.3. Still, our efforts were not in vain: even with the influence of GetTickCount eliminated, the poll function itself is still affected by the system timer. One solution is to write a Node.js add-on that changes the system timer interval.

For this game, however, the initial design has no dedicated server: after a client creates a room, it becomes the server. The server code can run in the Node-WebKit environment, so the timer issue on Windows is not our highest priority. With the solution described above, the results are good enough for us.

Ending

With the timer problem solved, there were basically no more obstacles to implementing our framework. We provide WebSocket support (for a pure HTML5 environment) and also a custom communication protocol for higher-performance socket support (in a Node-WebKit environment). Of course, Spaceroom's functionality was fairly rudimentary at first, but as new requirements came up and time went on, we gradually improved the framework.

For example, when we found that our game needed consistent random numbers, we added that feature to Spaceroom. Spaceroom distributes a random seed when the game starts, and the client side of Spaceroom provides a method that uses the seed and the randomness of md5 to generate random numbers.
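One way such a generator could work is sketched below using Node.js's built-in crypto module. How Spaceroom actually derives numbers from the md5 digest is not described here, so the counter scheme, the use of the first 32 bits, and the name createSeededRandom are all assumptions.

```javascript
const crypto = require("crypto");

// Returns a function that yields the same sequence of numbers in [0, 1)
// on every client that starts from the same seed.
function createSeededRandom(seed) {
  let counter = 0;
  return function next() {
    // Assumed scheme: hash the seed together with a running counter.
    const digest = crypto
      .createHash("md5")
      .update(String(seed) + ":" + counter)
      .digest("hex");
    counter += 1;
    // Use the first 32 bits of the digest as an unsigned integer.
    return parseInt(digest.slice(0, 8), 16) / 0x100000000;
  };
}

// Two clients that received the same seed draw identical numbers.
const clientA = createSeededRandom("seed-from-server");
const clientB = createSeededRandom("seed-from-server");
console.log(clientA() === clientB()); // true
```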

It all looks quite gratifying. I also learned a lot in the process of writing this framework. If you are interested in Spaceroom, you are welcome to take part. I believe Spaceroom will show its strength in many more places.