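mysql - Is there a faster way to export 100,000+ rows with PHP?

I'm currently exporting with PHPExcel. It takes a little over ten minutes, and it also fails with an out-of-memory error...

Is there any way to make a large export like this faster?

...I forgot to mention: the data is for customers, so it has to be in Excel format.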

Replies:

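First, pin down the scenario:

1. Is exporting 100,000 rows a user-facing feature, or a back-office or internal one? If it is user-facing, plain PHP will probably struggle because user experience matters, and the feature may need a third-party service or another extension. If it is only used by operations staff or other internal users, there are many options.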

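Break the problem into steps

1. Query 100,000 rows from the MySQL database. 2. Export those 100,000 rows to a file.

First, look at how long MySQL takes to return 100,000 rows:

I just tested it: fetching my 110,000 rows took about 205 s. That depends on the machine, so everyone's number will differ, but one thing is clear: it is not fast. With PHP, the default execution time limit (max_execution_time) is usually 30 s, which gives the first problem, a timeout. That one is easy to solve.

2. Export the 100,000 rows with PHP. This brings the second problem: running out of memory. Processing 100,000 rows is also slow.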
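The answer does not say how the timeout is solved. One common way, assuming the export script is allowed to change its own limits, is to lift the execution time limit for that request only. A minimal sketch:

```php
<?php
declare(strict_types=1);

// Lift the execution time limit for this script run only (0 means no limit).
// On the command line PHP already defaults to no limit.
$ok = set_time_limit(0);

// Read back the limit now in effect; '0' means unlimited.
$limit = ini_get('max_execution_time');
```

This only removes the timeout. It does nothing for the memory problem, which the suggestions below address.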

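Suggestions:

1. As in @悲惨的大爷's approach, export the 100,000 rows in batches. Since these are SELECTs, they won't tie up much of MySQL's resources, and the queries can be run in multiple threads.

But that only addresses the query. It shortens the first step, but the problem of exporting 100,000 rows with PHP remains, because all of them still have to end up in one Excel file. For that part you can use CSV, which is considerably faster than PHPExcel.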

2. If the data volume is very large or exports are frequent, build a dedicated service for it.
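The CSV suggestion above might look roughly like the sketch below, which writes one row at a time with PHP's built-in `fputcsv` so that memory use does not grow with the row count. The names `writeCsv` and `fakeRows` are hypothetical, the generator stands in for a real database cursor, and the UTF-8 BOM is an addition of mine so that Excel recognizes the encoding when it opens the file.

```php
<?php
declare(strict_types=1);

/**
 * Stream rows to an open file handle as CSV, one row at a time.
 * Returns the number of data rows written (header excluded).
 */
function writeCsv(iterable $rows, $handle, array $header): int
{
    fwrite($handle, "\xEF\xBB\xBF");            // UTF-8 BOM so Excel detects the encoding
    fputcsv($handle, $header, ',', '"', '');
    $count = 0;
    foreach ($rows as $row) {
        fputcsv($handle, $row, ',', '"', '');   // only the current row is held in memory
        $count++;
    }
    return $count;
}

// Hypothetical row source standing in for a database cursor.
function fakeRows(int $n): Generator
{
    for ($i = 1; $i <= $n; $i++) {
        yield [$i, "user{$i}"];
    }
}

// Demo: write three rows to an in-memory stream and read the result back.
$out = fopen('php://memory', 'w+');
$written = writeCsv(fakeRows(3), $out, ['id', 'name']);
rewind($out);
$csv = stream_get_contents($out);
fclose($out);
```

For a browser download, the handle would be `php://output` instead of `php://memory`, with suitable response headers sent first.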

Export into multiple files.

For example, to export 100,000 rows, do it in 10 passes of 10,000 rows each.

Multiple processes can be used for this.
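A rough single-process sketch of the multi-file idea: rows are written into numbered CSV part files with a fixed number of rows per file. The function name `exportInParts`, the file naming scheme, and the demo data are all assumptions for illustration. The demo uses 25 rows at 10 per file to keep it small.

```php
<?php
declare(strict_types=1);

/**
 * Write rows into numbered CSV part files, at most $rowsPerFile rows each.
 * Returns the list of file paths created.
 */
function exportInParts(iterable $rows, string $dir, string $prefix, int $rowsPerFile): array
{
    $paths = [];
    $handle = null;
    $inCurrent = 0;
    foreach ($rows as $row) {
        // Start a new part file on the first row or when the current one is full.
        if ($handle === null || $inCurrent === $rowsPerFile) {
            if ($handle !== null) {
                fclose($handle);
            }
            $path = sprintf('%s/%s_%03d.csv', $dir, $prefix, count($paths) + 1);
            $handle = fopen($path, 'w');
            $paths[] = $path;
            $inCurrent = 0;
        }
        fputcsv($handle, $row, ',', '"', '');
        $inCurrent++;
    }
    if ($handle !== null) {
        fclose($handle);
    }
    return $paths;
}

// Demo: 25 hypothetical rows, 10 per file, so three part files are expected.
$rows = (function () {
    for ($i = 1; $i <= 25; $i++) {
        yield [$i, "user{$i}"];
    }
})();
$dir = sys_get_temp_dir() . '/export_demo_' . uniqid();
mkdir($dir);
$parts = exportInParts($rows, $dir, 'export', 10);
$lineCounts = array_map(fn(string $p): int => count(file($p)), $parts);
```

To spread the work over several processes, each process would take its own row range and write its own part file.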

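My guess is that you fetch all 100,000 rows from the database in one query and then export them. That uses a lot of memory.

Try paging instead: fetch ten times, 10,000 rows each, export each page, and merge the exported files at the end.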
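The paging idea can be sketched as a generator that pulls one page at a time and hands rows on individually. This sketch pages by "last seen id" (keyset paging) rather than by `LIMIT`/`OFFSET`, which is my substitution and not something the answer specifies. It assumes the table has an increasing integer `id` column. `fetchInChunks` and the in-memory `$table` are hypothetical stand-ins for a real query.

```php
<?php
declare(strict_types=1);

/**
 * Yield rows page by page. $fetchPage(int $afterId, int $limit) must return
 * up to $limit rows with id > $afterId, ordered by id ascending.
 */
function fetchInChunks(callable $fetchPage, int $chunkSize): Generator
{
    $lastId = 0;
    do {
        $rows = $fetchPage($lastId, $chunkSize);
        foreach ($rows as $row) {
            yield $row;
            $lastId = $row['id'];
        }
    } while (count($rows) === $chunkSize);   // a short page means the data is exhausted
}

// Stand-in for a table of 25 rows. A real export would instead run a query
// along the lines of:
//   SELECT ... WHERE id > :afterId ORDER BY id LIMIT :limit
$table = [];
for ($i = 1; $i <= 25; $i++) {
    $table[] = ['id' => $i, 'name' => "user{$i}"];
}

$calls = 0;
$fetchPage = function (int $afterId, int $limit) use ($table, &$calls): array {
    $calls++;
    $matching = array_filter($table, fn(array $r): bool => $r['id'] > $afterId);
    return array_slice(array_values($matching), 0, $limit);
};

$all = iterator_to_array(fetchInChunks($fetchPage, 10), false);
```

Because the generator yields rows one by one, it can feed a row-by-row CSV writer directly, so only one page is in memory at any time.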

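Why not use mysqldump, which ships with MySQL?

If it has to be Excel, you can connect to the database over ODBC and export into Excel from there. Search for the exact steps.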

I export 500,000 rows without any problem. Just remember to free memory.

Work against the database directly: export the data with MySQL, then write a separate desktop program to convert it?

CSV handles it with no trouble at all.

Use CSV, not PHPExcel.

Use CSV. Whatever you do, don't use PHPExcel. It is extremely slow at reading. I learned that the hard way.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1374

1374

52

52

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

MySQL has a free community version and a paid enterprise version. The community version can be used and modified for free, but the support is limited and is suitable for applications with low stability requirements and strong technical capabilities. The Enterprise Edition provides comprehensive commercial support for applications that require a stable, reliable, high-performance database and willing to pay for support. Factors considered when choosing a version include application criticality, budgeting, and technical skills. There is no perfect option, only the most suitable option, and you need to choose carefully according to the specific situation.

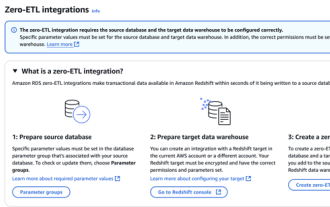

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

1. Use the correct index to speed up data retrieval by reducing the amount of data scanned select*frommployeeswherelast_name='smith'; if you look up a column of a table multiple times, create an index for that column. If you or your app needs data from multiple columns according to the criteria, create a composite index 2. Avoid select * only those required columns, if you select all unwanted columns, this will only consume more server memory and cause the server to slow down at high load or frequency times For example, your table contains columns such as created_at and updated_at and timestamps, and then avoid selecting * because they do not require inefficient query se

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

MySQL database performance optimization guide In resource-intensive applications, MySQL database plays a crucial role and is responsible for managing massive transactions. However, as the scale of application expands, database performance bottlenecks often become a constraint. This article will explore a series of effective MySQL performance optimization strategies to ensure that your application remains efficient and responsive under high loads. We will combine actual cases to explain in-depth key technologies such as indexing, query optimization, database design and caching. 1. Database architecture design and optimized database architecture is the cornerstone of MySQL performance optimization. Here are some core principles: Selecting the right data type and selecting the smallest data type that meets the needs can not only save storage space, but also improve data processing speed.

How to fill in mysql username and password

Apr 08, 2025 pm 07:09 PM

How to fill in mysql username and password

Apr 08, 2025 pm 07:09 PM

To fill in the MySQL username and password: 1. Determine the username and password; 2. Connect to the database; 3. Use the username and password to execute queries and commands.

How to copy and paste mysql

Apr 08, 2025 pm 07:18 PM

How to copy and paste mysql

Apr 08, 2025 pm 07:18 PM

Copy and paste in MySQL includes the following steps: select the data, copy with Ctrl C (Windows) or Cmd C (Mac); right-click at the target location, select Paste or use Ctrl V (Windows) or Cmd V (Mac); the copied data is inserted into the target location, or replace existing data (depending on whether the data already exists at the target location).

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL is suitable for beginners because it is simple to install, powerful and easy to manage data. 1. Simple installation and configuration, suitable for a variety of operating systems. 2. Support basic operations such as creating databases and tables, inserting, querying, updating and deleting data. 3. Provide advanced functions such as JOIN operations and subqueries. 4. Performance can be improved through indexing, query optimization and table partitioning. 5. Support backup, recovery and security measures to ensure data security and consistency.