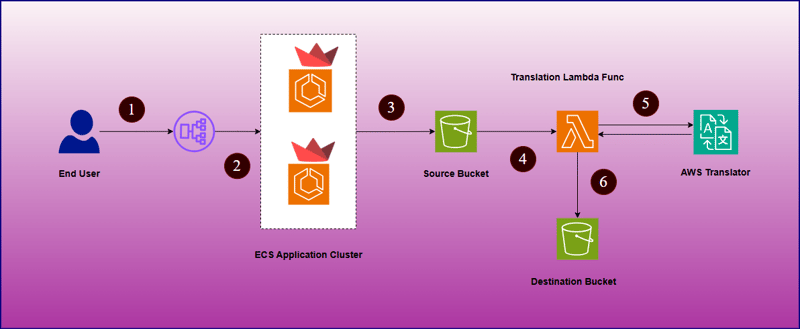

DocuTranslator, un système de traduction de documents, intégré à AWS et développé par le framework d'application Streamlit. Cette application permet à l'utilisateur final de traduire les documents dans la langue de son choix qu'il souhaite télécharger. Il offre la possibilité de traduire dans plusieurs langues selon le souhait de l'utilisateur, ce qui aide vraiment les utilisateurs à comprendre le contenu de manière confortable.

L'intention de ce projet est de fournir une interface d'application simple et conviviale pour réaliser le processus de traduction aussi simple que les utilisateurs l'attendent. Dans ce système, personne n'a besoin de traduire des documents en entrant dans le service AWS Translate, mais l'utilisateur final peut accéder directement au point de terminaison de l'application et remplir les exigences.

L'architecture ci-dessus montre les points clés ci-dessous -

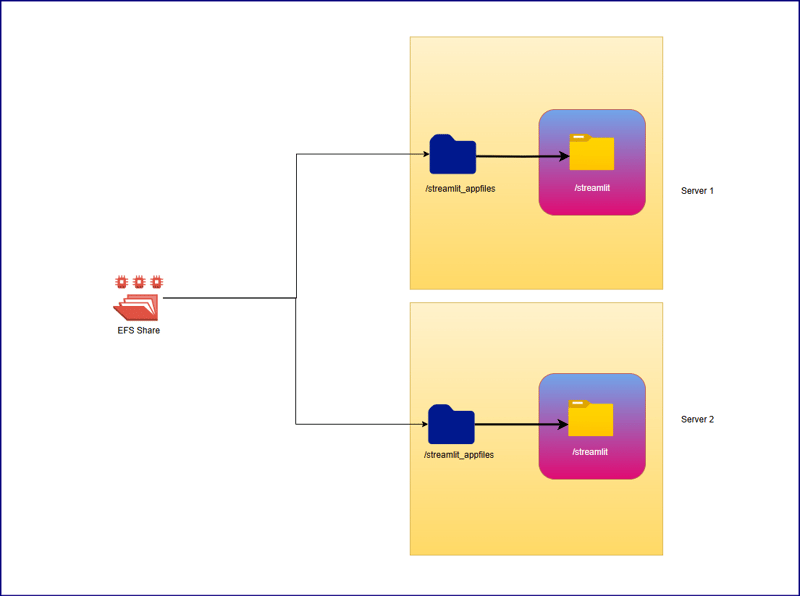

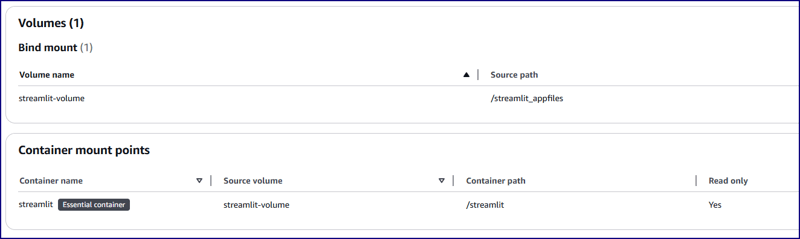

Ici, nous avons utilisé le chemin de partage EFS pour partager les mêmes fichiers d'application entre deux instances EC2 sous-jacentes. Nous avons créé un point de montage /streamlit_appfiles à l'intérieur des instances EC2 et monté avec le partage EFS. Cette approche aidera à partager le même contenu sur deux serveurs différents. Après cela, notre intention est de créer une réplication du même contenu d'application dans le répertoire de travail du conteneur qui est /streamlit. Pour cela, nous avons utilisé des montages de liaison afin que toutes les modifications apportées au code de l'application au niveau EC2 soient également répliquées dans le conteneur. Nous devons restreindre la réplication bidirectionnelle, ce qui signifie que si quelqu'un modifie par erreur le code depuis l'intérieur du conteneur, il ne doit pas être répliqué au niveau de l'hôte EC2. Par conséquent, le répertoire de travail du conteneur a été créé en tant que système de fichiers en lecture seule.

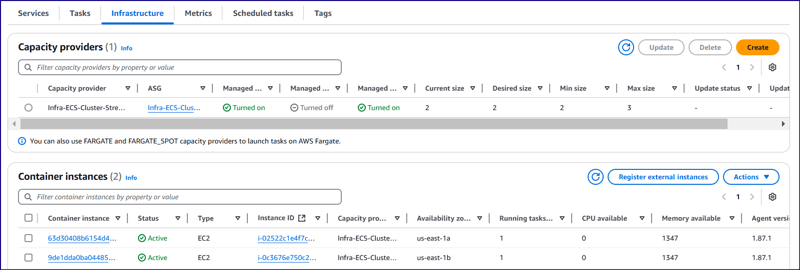

Configuration EC2 sous-jacente :

Type d'instance : t2.medium

Type de réseau : Sous-réseau privé

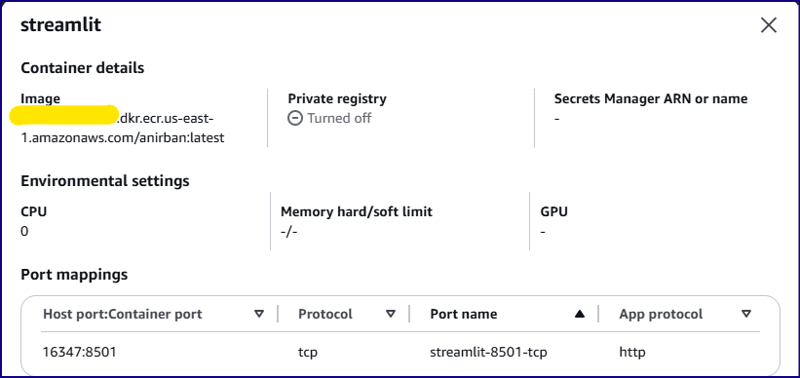

Configuration du conteneur :

Image :

Mode réseau : par défaut

Port hôte : 16347

Port à conteneurs : 8501

CPU de tâche : 2 vCPU (2 048 unités)

Mémoire de tâches : 2,5 Go (2 560 Mo)

Configuration des volumes :

Nom du volume : streamlit-volume

Chemin source : /streamlit_appfiles

Chemin du conteneur : /streamlit

Système de fichiers en lecture seule : OUI

Référence de définition de tâche :

{

"taskDefinitionArn": "arn:aws:ecs:us-east-1:<account-id>:task-definition/Streamlit_TDF-1:5",

"containerDefinitions": [

{

"name": "streamlit",

"image": "<account-id>.dkr.ecr.us-east-1.amazonaws.com/anirban:latest",

"cpu": 0,

"portMappings": [

{

"name": "streamlit-8501-tcp",

"containerPort": 8501,

"hostPort": 16347,

"protocol": "tcp",

"appProtocol": "http"

}

],

"essential": true,

"environment": [],

"environmentFiles": [],

"mountPoints": [

{

"sourceVolume": "streamlit-volume",

"containerPath": "/streamlit",

"readOnly": true

}

],

"volumesFrom": [],

"ulimits": [],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "/ecs/Streamlit_TDF-1",

"mode": "non-blocking",

"awslogs-create-group": "true",

"max-buffer-size": "25m",

"awslogs-region": "us-east-1",

"awslogs-stream-prefix": "ecs"

},

"secretOptions": []

},

"systemControls": []

}

],

"family": "Streamlit_TDF-1",

"taskRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"executionRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"revision": 5,

"volumes": [

{

"name": "streamlit-volume",

"host": {

"sourcePath": "/streamlit_appfiles"

}

}

],

"status": "ACTIVE",

"requiresAttributes": [

{

"name": "com.amazonaws.ecs.capability.logging-driver.awslogs"

},

{

"name": "ecs.capability.execution-role-awslogs"

},

{

"name": "com.amazonaws.ecs.capability.ecr-auth"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.19"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.28"

},

{

"name": "com.amazonaws.ecs.capability.task-iam-role"

},

{

"name": "ecs.capability.execution-role-ecr-pull"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.18"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.29"

}

],

"placementConstraints": [],

"compatibilities": [

"EC2"

],

"requiresCompatibilities": [

"EC2"

],

"cpu": "2048",

"memory": "2560",

"runtimePlatform": {

"cpuArchitecture": "X86_64",

"operatingSystemFamily": "LINUX"

},

"registeredAt": "2024-11-09T05:59:47.534Z",

"registeredBy": "arn:aws:iam::<account-id>:root",

"tags": []

}

app.py

import streamlit as st

import boto3

import os

import time

from pathlib import Path

s3 = boto3.client('s3', region_name='us-east-1')

tran = boto3.client('translate', region_name='us-east-1')

lam = boto3.client('lambda', region_name='us-east-1')

# Function to list S3 buckets

def listbuckets():

list_bucket = s3.list_buckets()

bucket_name = tuple([it["Name"] for it in list_bucket["Buckets"]])

return bucket_name

# Upload object to S3 bucket

def upload_to_s3bucket(file_path, selected_bucket, file_name):

s3.upload_file(file_path, selected_bucket, file_name)

def list_language():

response = tran.list_languages()

list_of_langs = [i["LanguageName"] for i in response["Languages"]]

return list_of_langs

def wait_for_s3obj(dest_selected_bucket, file_name):

while True:

try:

get_obj = s3.get_object(Bucket=dest_selected_bucket, Key=f'Translated-{file_name}.txt')

obj_exist = 'true' if get_obj['Body'] else 'false'

return obj_exist

except s3.exceptions.ClientError as e:

if e.response['Error']['Code'] == "404":

print(f"File '{file_name}' not found. Checking again in 3 seconds...")

time.sleep(3)

def download(dest_selected_bucket, file_name, file_path):

s3.download_file(dest_selected_bucket,f'Translated-{file_name}.txt', f'{file_path}/download/Translated-{file_name}.txt')

with open(f"{file_path}/download/Translated-{file_name}.txt", "r") as file:

st.download_button(

label="Download",

data=file,

file_name=f"{file_name}.txt"

)

def streamlit_application():

# Give a header

st.header("Document Translator", divider=True)

# Widgets to upload a file

uploaded_files = st.file_uploader("Choose a PDF file", accept_multiple_files=True, type="pdf")

# # upload a file

file_name = uploaded_files[0].name.replace(' ', '_') if uploaded_files else None

# Folder path

file_path = '/tmp'

# Select the bucket from drop down

selected_bucket = st.selectbox("Choose the S3 Bucket to upload file :", listbuckets())

dest_selected_bucket = st.selectbox("Choose the S3 Bucket to download file :", listbuckets())

selected_language = st.selectbox("Choose the Language :", list_language())

# Create a button

click = st.button("Upload", type="primary")

if click == True:

if file_name:

with open(f'{file_path}/{file_name}', mode='wb') as w:

w.write(uploaded_files[0].getvalue())

# Set the selected language to the environment variable of lambda function

lambda_env1 = lam.update_function_configuration(FunctionName='TriggerFunctionFromS3', Environment={'Variables': {'UserInputLanguage': selected_language, 'DestinationBucket': dest_selected_bucket, 'TranslatedFileName': file_name}})

# Upload the file to S3 bucket:

upload_to_s3bucket(f'{file_path}/{file_name}', selected_bucket, file_name)

if s3.get_object(Bucket=selected_bucket, Key=file_name):

st.success("File uploaded successfully", icon="✅")

output = wait_for_s3obj(dest_selected_bucket, file_name)

if output:

download(dest_selected_bucket, file_name, file_path)

else:

st.error("File upload failed", icon="?")

streamlit_application()

about.py

import streamlit as st

## Write the description of application

st.header("About")

about = '''

Welcome to the File Uploader Application!

This application is designed to make uploading PDF documents simple and efficient. With just a few clicks, users can upload their documents securely to an Amazon S3 bucket for storage. Here’s a quick overview

of what this app does:

**Key Features:**

- **Easy Upload:** Users can quickly upload PDF documents by selecting the file and clicking the 'Upload' button.

- **Seamless Integration with AWS S3:** Once the document is uploaded, it is stored securely in a designated S3 bucket, ensuring reliable and scalable cloud storage.

- **User-Friendly Interface:** Built using Streamlit, the interface is clean, intuitive, and accessible to all users, making the uploading process straightforward.

**How it Works:**

1. **Select a PDF Document:** Users can browse and select any PDF document from their local system.

2. **Upload the Document:** Clicking the ‘Upload’ button triggers the process of securely uploading the selected document to an AWS S3 bucket.

3. **Success Notification:** After a successful upload, users will receive a confirmation message that their document has been stored in the cloud.

This application offers a streamlined way to store documents on the cloud, reducing the hassle of manual file management. Whether you're an individual or a business, this tool helps you organize and store your

files with ease and security.

You can further customize this page by adding technical details, usage guidelines, or security measures as per your application's specifications.'''

st.markdown(about)

navigation.py

import streamlit as st

pg = st.navigation([

st.Page("app.py", title="DocuTranslator", icon="?"),

st.Page("about.py", title="About", icon="?")

], position="sidebar")

pg.run()

Fichier Docker :

FROM python:3.9-slim WORKDIR /streamlit COPY requirements.txt /streamlit/requirements.txt RUN pip install --no-cache-dir -r requirements.txt RUN mkdir /tmp/download COPY . /streamlit EXPOSE 8501 CMD ["streamlit", "run", "navigation.py", "--server.port=8501", "--server.headless=true"]

Le fichier Docker créera une image en empaquetant tous les fichiers de configuration d'application ci-dessus, puis il sera transféré vers le référentiel ECR. Docker Hub peut également être utilisé pour stocker l'image.

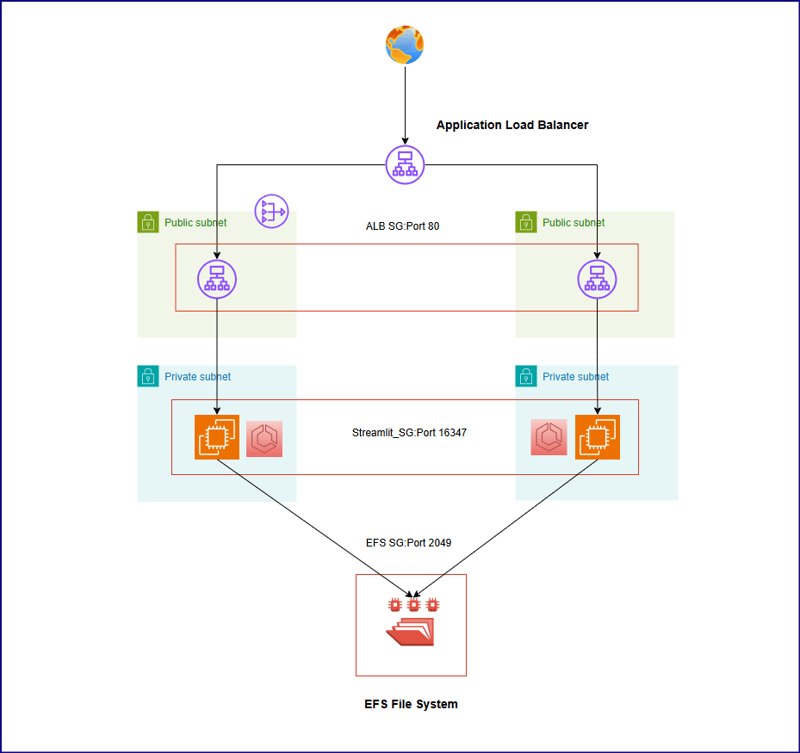

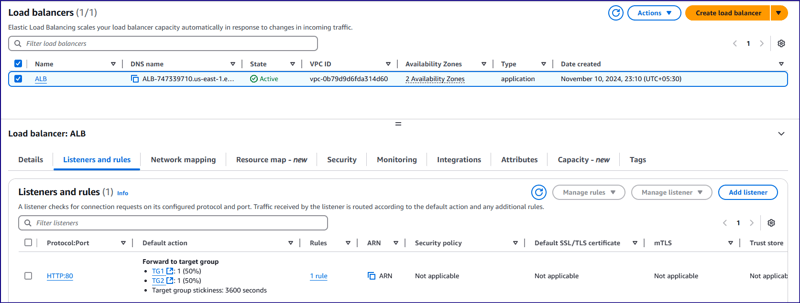

Dans l'architecture, les instances d'application sont censées être créées dans un sous-réseau privé et l'équilibreur de charge est censé créer pour réduire la charge de trafic entrant vers les instances EC2 privées.

Comme deux hôtes EC2 sous-jacents sont disponibles pour héberger les conteneurs, l'équilibrage de charge est configuré sur deux hôtes EC2 pour distribuer le trafic entrant. Deux groupes cibles différents sont créés pour placer deux instances EC2 dans chacun avec un poids de 50 %.

L'équilibreur de charge accepte le trafic entrant sur le port 80, puis le transmet aux instances backend EC2 sur le port 16347 et le transmet également au conteneur ECS correspondant.

Il existe une fonction lambda configurée pour prendre le compartiment source comme entrée pour télécharger le fichier pdf à partir de là et extraire le contenu, puis elle traduit le contenu de la langue actuelle vers la langue cible fournie par l'utilisateur et crée un fichier texte à télécharger vers la destination S3. seau.

{

"taskDefinitionArn": "arn:aws:ecs:us-east-1:<account-id>:task-definition/Streamlit_TDF-1:5",

"containerDefinitions": [

{

"name": "streamlit",

"image": "<account-id>.dkr.ecr.us-east-1.amazonaws.com/anirban:latest",

"cpu": 0,

"portMappings": [

{

"name": "streamlit-8501-tcp",

"containerPort": 8501,

"hostPort": 16347,

"protocol": "tcp",

"appProtocol": "http"

}

],

"essential": true,

"environment": [],

"environmentFiles": [],

"mountPoints": [

{

"sourceVolume": "streamlit-volume",

"containerPath": "/streamlit",

"readOnly": true

}

],

"volumesFrom": [],

"ulimits": [],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "/ecs/Streamlit_TDF-1",

"mode": "non-blocking",

"awslogs-create-group": "true",

"max-buffer-size": "25m",

"awslogs-region": "us-east-1",

"awslogs-stream-prefix": "ecs"

},

"secretOptions": []

},

"systemControls": []

}

],

"family": "Streamlit_TDF-1",

"taskRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"executionRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"revision": 5,

"volumes": [

{

"name": "streamlit-volume",

"host": {

"sourcePath": "/streamlit_appfiles"

}

}

],

"status": "ACTIVE",

"requiresAttributes": [

{

"name": "com.amazonaws.ecs.capability.logging-driver.awslogs"

},

{

"name": "ecs.capability.execution-role-awslogs"

},

{

"name": "com.amazonaws.ecs.capability.ecr-auth"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.19"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.28"

},

{

"name": "com.amazonaws.ecs.capability.task-iam-role"

},

{

"name": "ecs.capability.execution-role-ecr-pull"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.18"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.29"

}

],

"placementConstraints": [],

"compatibilities": [

"EC2"

],

"requiresCompatibilities": [

"EC2"

],

"cpu": "2048",

"memory": "2560",

"runtimePlatform": {

"cpuArchitecture": "X86_64",

"operatingSystemFamily": "LINUX"

},

"registeredAt": "2024-11-09T05:59:47.534Z",

"registeredBy": "arn:aws:iam::<account-id>:root",

"tags": []

}

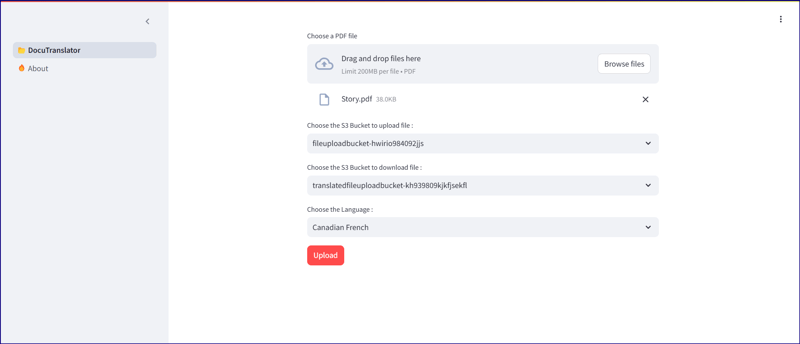

Ouvrez l'URL de l'équilibreur de charge de l'application "ALB-747339710.us-east-1.elb.amazonaws.com" pour ouvrir l'application Web. Parcourez n'importe quel fichier pdf, conservez à la fois la source "fileuploadbucket-hwirio984092jjs" et le compartiment de destination "translatedfileuploadbucket-kh939809kjkfjsekfl" tels quels, car dans le code lambda, la cible a été codée en dur. le seau est comme mentionné ci-dessus. Choisissez la langue dans laquelle vous souhaitez que le document soit traduit et cliquez sur télécharger. Une fois cliqué dessus, le programme d'application commencera à interroger le compartiment S3 de destination pour savoir si le fichier traduit est téléchargé. S'il trouve le fichier exact, une nouvelle option "Télécharger" sera visible pour télécharger le fichier à partir du compartiment S3 de destination.

Lien d'application : http://alb-747339710.us-east-1.elb.amazonaws.com/

Contenu réel :

import streamlit as st

import boto3

import os

import time

from pathlib import Path

s3 = boto3.client('s3', region_name='us-east-1')

tran = boto3.client('translate', region_name='us-east-1')

lam = boto3.client('lambda', region_name='us-east-1')

# Function to list S3 buckets

def listbuckets():

list_bucket = s3.list_buckets()

bucket_name = tuple([it["Name"] for it in list_bucket["Buckets"]])

return bucket_name

# Upload object to S3 bucket

def upload_to_s3bucket(file_path, selected_bucket, file_name):

s3.upload_file(file_path, selected_bucket, file_name)

def list_language():

response = tran.list_languages()

list_of_langs = [i["LanguageName"] for i in response["Languages"]]

return list_of_langs

def wait_for_s3obj(dest_selected_bucket, file_name):

while True:

try:

get_obj = s3.get_object(Bucket=dest_selected_bucket, Key=f'Translated-{file_name}.txt')

obj_exist = 'true' if get_obj['Body'] else 'false'

return obj_exist

except s3.exceptions.ClientError as e:

if e.response['Error']['Code'] == "404":

print(f"File '{file_name}' not found. Checking again in 3 seconds...")

time.sleep(3)

def download(dest_selected_bucket, file_name, file_path):

s3.download_file(dest_selected_bucket,f'Translated-{file_name}.txt', f'{file_path}/download/Translated-{file_name}.txt')

with open(f"{file_path}/download/Translated-{file_name}.txt", "r") as file:

st.download_button(

label="Download",

data=file,

file_name=f"{file_name}.txt"

)

def streamlit_application():

# Give a header

st.header("Document Translator", divider=True)

# Widgets to upload a file

uploaded_files = st.file_uploader("Choose a PDF file", accept_multiple_files=True, type="pdf")

# # upload a file

file_name = uploaded_files[0].name.replace(' ', '_') if uploaded_files else None

# Folder path

file_path = '/tmp'

# Select the bucket from drop down

selected_bucket = st.selectbox("Choose the S3 Bucket to upload file :", listbuckets())

dest_selected_bucket = st.selectbox("Choose the S3 Bucket to download file :", listbuckets())

selected_language = st.selectbox("Choose the Language :", list_language())

# Create a button

click = st.button("Upload", type="primary")

if click == True:

if file_name:

with open(f'{file_path}/{file_name}', mode='wb') as w:

w.write(uploaded_files[0].getvalue())

# Set the selected language to the environment variable of lambda function

lambda_env1 = lam.update_function_configuration(FunctionName='TriggerFunctionFromS3', Environment={'Variables': {'UserInputLanguage': selected_language, 'DestinationBucket': dest_selected_bucket, 'TranslatedFileName': file_name}})

# Upload the file to S3 bucket:

upload_to_s3bucket(f'{file_path}/{file_name}', selected_bucket, file_name)

if s3.get_object(Bucket=selected_bucket, Key=file_name):

st.success("File uploaded successfully", icon="✅")

output = wait_for_s3obj(dest_selected_bucket, file_name)

if output:

download(dest_selected_bucket, file_name, file_path)

else:

st.error("File upload failed", icon="?")

streamlit_application()

Contenu traduit (en français canadien)

import streamlit as st

## Write the description of application

st.header("About")

about = '''

Welcome to the File Uploader Application!

This application is designed to make uploading PDF documents simple and efficient. With just a few clicks, users can upload their documents securely to an Amazon S3 bucket for storage. Here’s a quick overview

of what this app does:

**Key Features:**

- **Easy Upload:** Users can quickly upload PDF documents by selecting the file and clicking the 'Upload' button.

- **Seamless Integration with AWS S3:** Once the document is uploaded, it is stored securely in a designated S3 bucket, ensuring reliable and scalable cloud storage.

- **User-Friendly Interface:** Built using Streamlit, the interface is clean, intuitive, and accessible to all users, making the uploading process straightforward.

**How it Works:**

1. **Select a PDF Document:** Users can browse and select any PDF document from their local system.

2. **Upload the Document:** Clicking the ‘Upload’ button triggers the process of securely uploading the selected document to an AWS S3 bucket.

3. **Success Notification:** After a successful upload, users will receive a confirmation message that their document has been stored in the cloud.

This application offers a streamlined way to store documents on the cloud, reducing the hassle of manual file management. Whether you're an individual or a business, this tool helps you organize and store your

files with ease and security.

You can further customize this page by adding technical details, usage guidelines, or security measures as per your application's specifications.'''

st.markdown(about)

Cet article nous a montré comment le processus de traduction de documents peut être aussi simple que nous l'imaginons lorsqu'un utilisateur final doit cliquer sur certaines options pour choisir les informations requises et obtenir le résultat souhaité en quelques secondes sans penser à la configuration. Pour l'instant, nous avons inclus une seule fonctionnalité pour traduire un document pdf, mais plus tard, nous rechercherons davantage à ce sujet pour avoir plusieurs fonctionnalités dans une seule application avec des fonctionnalités intéressantes.

Ce qui précède est le contenu détaillé de. pour plus d'informations, suivez d'autres articles connexes sur le site Web de PHP en chinois!