The Problem of Knowledge Extraction in Knowledge Graph Construction

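Training set (the sentences read "Zhang San is an employee of Huawei" and "Today is October 1, 2021"; `start` and `end` are character offsets into the sentence, end exclusive):

{sentence: "张三是华为公司的员工", entities: [{"start": 0, "end": 2, "type": "person"}, {"start": 3, "end": 7, "type": "company"}]}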

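{sentence: "今天是2021年10月1日", entities: [{"start": 3, "end": 13, "type": "date"}]}

In the code below the sentences are already segmented into words, so `start` and `end` are token indices (end exclusive) rather than character offsets. Entity spans are converted to BIO tags, the per-token labeling scheme a CRF sequence tagger expects.

```python
import sklearn_crfsuite

# Feature function: build a feature dict for the i-th token
def word2features(sentence, i):
    word = sentence[i]
    features = {
        'word': word,
        # Capitalization features carry no information for Chinese, so use a digit flag
        'is_digit': word.isdigit(),
    }
    # Context features from the neighbouring tokens
    if i > 0:
        features['prev_word'] = sentence[i - 1]
    else:
        features['BOS'] = True  # beginning of sentence
    if i < len(sentence) - 1:
        features['next_word'] = sentence[i + 1]
    else:
        features['EOS'] = True  # end of sentence
    # Add more features here
    return features

# Convert token-level entity spans into one BIO tag per token
def spans_to_bio(tokens, entities):
    labels = ['O'] * len(tokens)
    for ent in entities:
        labels[ent['start']] = 'B-' + ent['type']
        for j in range(ent['start'] + 1, ent['end']):
            labels[j] = 'I-' + ent['type']
    return labels

# Extract features and labels
def extract_features_and_labels(sentences):
    X, y = [], []
    for sentence in sentences:
        tokens = sentence['sentence']
        X.append([word2features(tokens, i) for i in range(len(tokens))])
        y.append(spans_to_bio(tokens, sentence['entities']))
    return X, y

# Prepare the training data (token offsets, end exclusive)
train_sentences = [
    {'sentence': ["张三", "是", "华为", "公司", "的", "员工"],
     'entities': [{'start': 0, 'end': 1, 'type': 'person'},
                  {'start': 2, 'end': 4, 'type': 'company'}]},
    {'sentence': ["今天", "是", "2021", "年", "10", "月", "1", "日"],
     'entities': [{'start': 2, 'end': 8, 'type': 'date'}]},
]

X_train, y_train = extract_features_and_labels(train_sentences)

# Train the model
model = sklearn_crfsuite.CRF(algorithm='lbfgs', max_iterations=100)
model.fit(X_train, y_train)

# Predict entities
test_sentence = ["张三", "是", "华为", "公司", "的", "员工"]
X_test = [word2features(test_sentence, i) for i in range(len(test_sentence))]
y_pred = model.predict_single(X_test)

# Merge B-/I- tags back into entity spans and print them
entities = []
for i, tag in enumerate(y_pred):
    if tag.startswith('B-'):
        entities.append({'start': i, 'end': i + 1, 'type': tag[2:]})
    elif (tag.startswith('I-') and entities
          and entities[-1]['end'] == i and entities[-1]['type'] == tag[2:]):
        entities[-1]['end'] = i + 1

print(entities)
```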
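The training-set entries use character offsets into the raw sentence, and Chinese NER is often done per character rather than per segmented word, which removes the dependency on a word segmenter. As a minimal sketch (not part of the original article; `char_spans_to_bio` and `bio_to_char_spans` are hypothetical helper names), the functions below turn that annotation format into one BIO tag per character and merge tags back into spans. An annotation whose `end` runs past the last character raises an error instead of being silently truncated. Because a Python string indexes by character, `word2features` can then be applied directly to the raw sentence string to build per-character features.

```python
def char_spans_to_bio(sentence, entities):
    """Assign one BIO tag to every character of `sentence`.

    `entities` use character offsets with an exclusive `end`, e.g.
    {"start": 0, "end": 2, "type": "person"} covers sentence[0:2].
    """
    labels = ['O'] * len(sentence)
    for ent in entities:
        start, end, etype = ent['start'], ent['end'], ent['type']
        if not 0 <= start < end <= len(sentence):
            raise ValueError(f"span {start}-{end} does not fit in {sentence!r}")
        labels[start] = 'B-' + etype
        for j in range(start + 1, end):
            labels[j] = 'I-' + etype
    return labels


def bio_to_char_spans(sentence, labels):
    """Merge runs of B-/I- tags back into character spans with their text."""
    spans = []
    for i, tag in enumerate(labels):
        if tag == 'O':
            continue
        prefix, etype = tag[:2], tag[2:]
        # An I- tag extends the previous span only if it is adjacent and of the same type
        continues = bool(
            prefix == 'I-' and spans
            and spans[-1]['end'] == i and spans[-1]['type'] == etype
        )
        if continues:
            spans[-1]['end'] = i + 1
        else:
            # A B- tag (or a stray I- tag) opens a new span
            spans.append({'start': i, 'end': i + 1, 'type': etype})
    for span in spans:
        span['text'] = sentence[span['start']:span['end']]
    return spans


sentence = "张三是华为公司的员工"
entities = [{"start": 0, "end": 2, "type": "person"},
            {"start": 3, "end": 7, "type": "company"}]
labels = char_spans_to_bio(sentence, entities)
print(list(zip(sentence, labels)))
print(bio_to_char_spans(sentence, labels))
# → [{'start': 0, 'end': 2, 'type': 'person', 'text': '张三'}, {'start': 3, 'end': 7, 'type': 'company', 'text': '华为公司'}]
```

Round-tripping gold annotations through both functions is a cheap way to catch off-by-one offsets before training.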


カスタム WordPress ユーザー フローの構築、パート 3: パスワードのリセット

Sep 03, 2023 pm 11:05 PM

カスタム WordPress ユーザー フローの構築、パート 3: パスワードのリセット

Sep 03, 2023 pm 11:05 PM

このシリーズの最初の 2 つのチュートリアルでは、新しいユーザーのログインと登録のためのカスタム ページを作成しました。さて、ログイン フローのうち調査して置き換える部分は 1 つだけ残っています。ユーザーがパスワードを忘れて WordPress パスワードをリセットしたい場合はどうなりますか?このチュートリアルでは、最後のステップに取り組み、シリーズ全体で構築してきたパーソナライズされたログイン プラグインを完成させます。 WordPress のパスワード リセット機能は、今日の Web サイトの標準的な方法にほぼ準拠しています。ユーザーは、ユーザー名または電子メール アドレスを入力し、WordPress にパスワードのリセットを要求することによってリセットを開始します。一時的なパスワード リセット トークンを作成し、ユーザー データに保存します。このトークンを含むリンクがユーザーの電子メール アドレスに送信されます。ユーザーがリンクをクリックします。重い中で

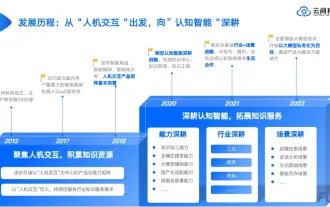

産業ナレッジグラフの高度な実践

Jun 13, 2024 am 11:59 AM

産業ナレッジグラフの高度な実践

Jun 13, 2024 am 11:59 AM

1. 背景の紹介 まず、Yunwen Technology の開発の歴史を紹介します。 Yunwen Technology Company ...2023 年は大規模モデルが普及する時期であり、多くの企業は大規模モデルの後、グラフの重要性が大幅に低下し、以前に検討されたプリセット情報システムはもはや重要ではないと考えています。しかし、RAG の推進とデータ ガバナンスの普及により、より効率的なデータ ガバナンスと高品質のデータが民営化された大規模モデルの有効性を向上させるための重要な前提条件であることがわかり、ますます多くの企業が注目し始めています。知識構築関連コンテンツへ。これにより、知識の構築と処理がより高いレベルに促進され、探索できる技術や方法が数多く存在します。新しいテクノロジーの出現によってすべての古いテクノロジーが打ち破られるわけではなく、新旧のテクノロジーが統合される可能性があることがわかります。

Jia Qianghuai: アリの大規模知識グラフの構築と応用

Sep 10, 2023 pm 03:05 PM

Jia Qianghuai: アリの大規模知識グラフの構築と応用

Sep 10, 2023 pm 03:05 PM

1. グラフの概要 まず、ナレッジ グラフの基本概念をいくつか紹介します。 1. ナレッジ グラフとは何ですか? ナレッジ グラフは、グラフ構造を使用して、物事間の複雑な関係をモデル化し、識別し、推論し、ドメイン知識を沈殿させることを目的としています。認知的インテリジェンスを実現するための重要な基盤であり、検索エンジンやインテリジェントな質問応答で広く使用されています。 、言語意味理解、ビッグデータ意思決定分析、その他多くの分野。ナレッジ グラフは、データ間の意味的関係と構造的関係の両方をモデル化し、深層学習テクノロジと組み合わせることで、2 つの関係をより適切に統合して表現できます。 2. なぜナレッジ グラフを構築する必要があるのか? ナレッジ グラフは主に次の 2 つの点から構築したいと考えています: 一方で、アリ自体のデータ ソース背景の特徴、もう一方で、アリがもたらす利点ナレッジグラフがもたらすことができます。 [1] データ ソース自体は多様であり、異質です。

スムーズなビルド: Maven イメージ アドレスを正しく構成する方法

Feb 20, 2024 pm 08:48 PM

スムーズなビルド: Maven イメージ アドレスを正しく構成する方法

Feb 20, 2024 pm 08:48 PM

スムーズなビルド: Maven イメージ アドレスを正しく構成する方法 Maven を使用してプロジェクトをビルドする場合、正しいイメージ アドレスを構成することが非常に重要です。ミラー アドレスを適切に構成すると、プロジェクトの構築を迅速化し、ネットワークの遅延などの問題を回避できます。この記事では、Maven ミラー アドレスを正しく構成する方法と、具体的なコード例を紹介します。 Maven イメージ アドレスを構成する必要があるのはなぜですか? Maven は、プロジェクトの自動構築、依存関係の管理、レポートの生成などを行うことができるプロジェクト管理ツールです。 Maven でプロジェクトをビルドする場合、通常は

ChatGPT Java: インテリジェントな音楽推奨システムを構築する方法

Oct 27, 2023 pm 01:55 PM

ChatGPT Java: インテリジェントな音楽推奨システムを構築する方法

Oct 27, 2023 pm 01:55 PM

ChatGPTJava: インテリジェントな音楽推奨システムを構築する方法、具体的なコード例が必要です はじめに: インターネットの急速な発展に伴い、音楽は人々の日常生活に欠かせないものになりました。音楽プラットフォームが出現し続けるにつれて、ユーザーはしばしば共通の問題に直面します。それは、自分の好みに合った音楽をどうやって見つけるかということです。この問題を解決するために、インテリジェント音楽推薦システムが登場しました。この記事では、ChatGPTJava を使用してインテリジェントな音楽推奨システムを構築する方法を紹介し、具体的なコード例を示します。いいえ。

Maven プロジェクトのパッケージ化プロセスを最適化し、開発効率を向上させます。

Feb 24, 2024 pm 02:15 PM

Maven プロジェクトのパッケージ化プロセスを最適化し、開発効率を向上させます。

Feb 24, 2024 pm 02:15 PM

Maven プロジェクトのパッケージ化ステップ ガイド: ビルド プロセスを最適化し、開発効率を向上させる ソフトウェア開発プロジェクトがますます複雑になるにつれて、プロジェクト構築の効率と速度は開発プロセスにおいて無視できない重要な要素になっています。人気のあるプロジェクト管理ツールとして、Maven はプロジェクトの構築において重要な役割を果たします。このガイドでは、Maven プロジェクトのパッケージ化手順を最適化することで開発効率を向上させる方法を検討し、具体的なコード例を示します。 1. プロジェクトの構造を確認する Maven プロジェクトのパッケージ化ステップの最適化を開始する前に、まず確認する必要があります。

Python を使用してインテリジェントな音声アシスタントを構築する方法

Sep 09, 2023 pm 04:04 PM

Python を使用してインテリジェントな音声アシスタントを構築する方法

Sep 09, 2023 pm 04:04 PM

Python を使用してインテリジェントな音声アシスタントを構築する方法 はじめに: 現代テクノロジーの急速な発展の時代において、インテリジェントなアシスタントに対する人々の需要はますます高まっています。その形態の一つとして、スマート音声アシスタントは、携帯電話、パソコン、スマートスピーカーなど、さまざまなデバイスで広く利用されています。この記事では、Python プログラミング言語を使用してシンプルなインテリジェント音声アシスタントを構築し、独自のパーソナライズされたインテリジェント アシスタントを最初から実装する方法を紹介します。準備 音声アシスタントの構築を始める前に、まず必要なツールをいくつか準備する必要があります。

JavaScript を使用してオンライン計算機を構築する

Aug 09, 2023 pm 03:46 PM

JavaScript を使用してオンライン計算機を構築する

Aug 09, 2023 pm 03:46 PM

JavaScript を使用したオンライン計算機の構築 インターネットの発展に伴い、ますます多くのツールやアプリケーションがオンラインに登場し始めています。その中でも、電卓は最も広く使用されているツールの 1 つです。この記事では、JavaScript を使用して簡単なオンライン計算機を作成する方法を説明し、コード例を示します。始める前に、HTML と CSS の基本的な知識を知っておく必要があります。電卓インターフェイスは、HTML テーブル要素を使用して構築し、CSS を使用してスタイル設定できます。ここが基本です