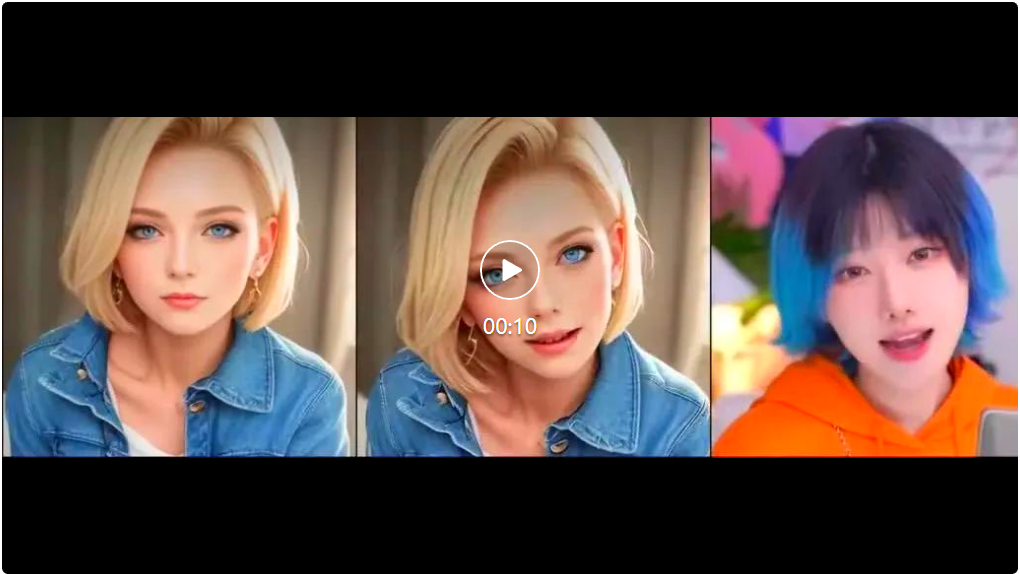

Up の所有者はすでに、Tencent のオープンソース「AniPortrait」を悪用し、写真に歌わせたりしゃべらせたりし始めています。

AniPortrait モデルはオープンソースであり、自由に再生できます。

- 論文タイトル: AniPortrait: フォトリアリスティックなポートレート アニメーションのオーディオ駆動合成

- 論文アドレス: https ://arxiv.org/pdf/2403.17694.pdf

- コードアドレス: https://github.com/Zejun-Yang/AniPortrait

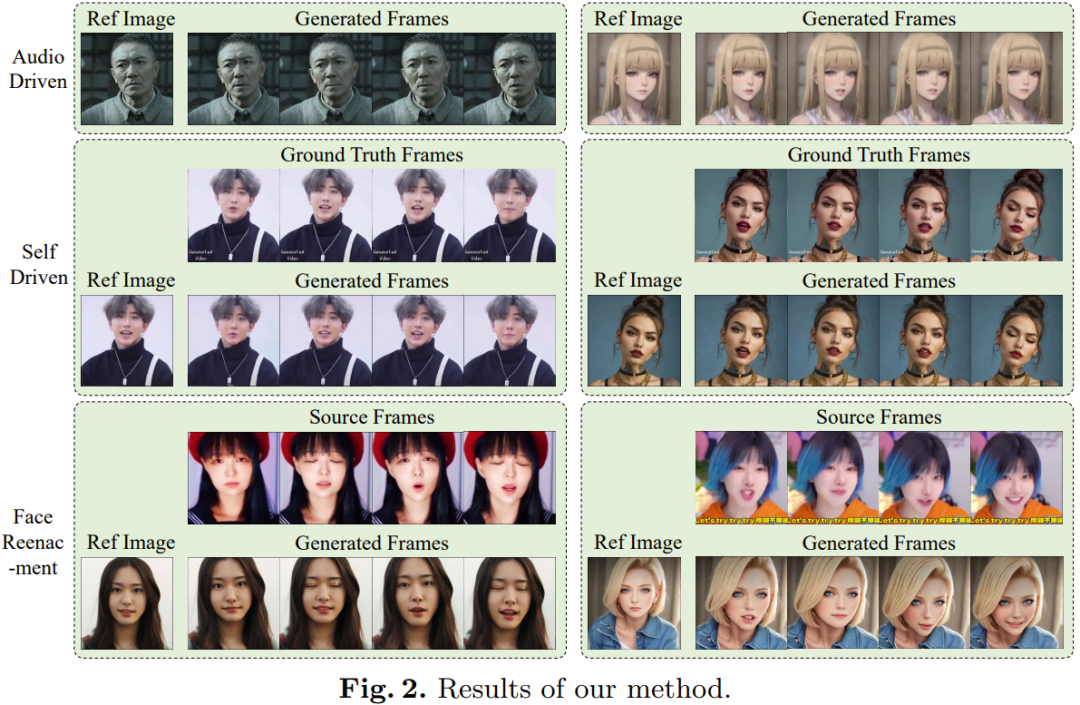

Tencent が新たに提案した AniPortrait フレームワークには、Audio2Lmk と Lmk2Video という 2 つのモジュールが含まれています。

Audio2Lmk は、音声入力から複雑な顔の表情や唇の動きをキャプチャするランドマーク シーケンスを抽出するために使用されます。 Lmk2Video は、このランドマーク シーケンスを使用して、時間的に安定した一貫した高品質のポートレート ビデオを生成します。

図 1 は、AniPortrait フレームワークの概要を示しています。

Please refer to the original paper for more details.

Please refer to the original paper for more details. 以上がUp の所有者はすでに、Tencent のオープンソース「AniPortrait」を悪用し、写真に歌わせたりしゃべらせたりし始めています。の詳細内容です。詳細については、PHP 中国語 Web サイトの他の関連記事を参照してください。

ホットAIツール

Undresser.AI Undress

リアルなヌード写真を作成する AI 搭載アプリ

AI Clothes Remover

写真から衣服を削除するオンライン AI ツール。

Undress AI Tool

脱衣画像を無料で

Clothoff.io

AI衣類リムーバー

AI Hentai Generator

AIヘンタイを無料で生成します。

人気の記事

ホットツール

メモ帳++7.3.1

使いやすく無料のコードエディター

SublimeText3 中国語版

中国語版、とても使いやすい

ゼンドスタジオ 13.0.1

強力な PHP 統合開発環境

ドリームウィーバー CS6

ビジュアル Web 開発ツール

SublimeText3 Mac版

神レベルのコード編集ソフト(SublimeText3)

ホットトピック

DeepMind ロボットが卓球をすると、フォアハンドとバックハンドが空中に滑り出し、人間の初心者を完全に打ち負かしました

Aug 09, 2024 pm 04:01 PM

DeepMind ロボットが卓球をすると、フォアハンドとバックハンドが空中に滑り出し、人間の初心者を完全に打ち負かしました

Aug 09, 2024 pm 04:01 PM

でももしかしたら公園の老人には勝てないかもしれない?パリオリンピックの真っ最中で、卓球が注目を集めています。同時に、ロボットは卓球のプレーにも新たな進歩をもたらしました。先ほど、DeepMind は、卓球競技において人間のアマチュア選手のレベルに到達できる初の学習ロボット エージェントを提案しました。論文のアドレス: https://arxiv.org/pdf/2408.03906 DeepMind ロボットは卓球でどれくらい優れていますか?おそらく人間のアマチュアプレーヤーと同等です: フォアハンドとバックハンドの両方: 相手はさまざまなプレースタイルを使用しますが、ロボットもそれに耐えることができます: さまざまなスピンでサーブを受ける: ただし、ゲームの激しさはそれほど激しくないようです公園の老人。ロボット、卓球用

初のメカニカルクロー!元羅宝は2024年の世界ロボット会議に登場し、家庭に入ることができる初のチェスロボットを発表した

Aug 21, 2024 pm 07:33 PM

初のメカニカルクロー!元羅宝は2024年の世界ロボット会議に登場し、家庭に入ることができる初のチェスロボットを発表した

Aug 21, 2024 pm 07:33 PM

8月21日、2024年世界ロボット会議が北京で盛大に開催された。 SenseTimeのホームロボットブランド「Yuanluobot SenseRobot」は、全製品ファミリーを発表し、最近、世界初の家庭用チェスロボットとなるYuanluobot AIチェスプレイロボット - Chess Professional Edition(以下、「Yuanluobot SenseRobot」という)をリリースした。家。 Yuanluobo の 3 番目のチェス対局ロボット製品である新しい Guxiang ロボットは、AI およびエンジニアリング機械において多くの特別な技術アップグレードと革新を経て、初めて 3 次元のチェスの駒を拾う機能を実現しました。家庭用ロボットの機械的な爪を通して、チェスの対局、全員でのチェスの対局、記譜のレビューなどの人間と機械の機能を実行します。

クロードも怠け者になってしまった!ネチズン: 自分に休日を与える方法を学びましょう

Sep 02, 2024 pm 01:56 PM

クロードも怠け者になってしまった!ネチズン: 自分に休日を与える方法を学びましょう

Sep 02, 2024 pm 01:56 PM

もうすぐ学校が始まり、新学期を迎える生徒だけでなく、大型AIモデルも気を付けなければなりません。少し前、レディットはクロードが怠け者になったと不満を漏らすネチズンでいっぱいだった。 「レベルが大幅に低下し、頻繁に停止し、出力も非常に短くなりました。リリースの最初の週は、4 ページの文書全体を一度に翻訳できましたが、今では 0.5 ページの出力さえできません」 !」 https://www.reddit.com/r/ClaudeAI/comments/1by8rw8/something_just_feels_wrong_with_claude_in_the/ というタイトルの投稿で、「クロードには完全に失望しました」という内容でいっぱいだった。

世界ロボット会議で「未来の高齢者介護の希望」を担う家庭用ロボットを囲みました

Aug 22, 2024 pm 10:35 PM

世界ロボット会議で「未来の高齢者介護の希望」を担う家庭用ロボットを囲みました

Aug 22, 2024 pm 10:35 PM

北京で開催中の世界ロボット会議では、人型ロボットの展示が絶対的な注目となっているスターダストインテリジェントのブースでは、AIロボットアシスタントS1がダルシマー、武道、書道の3大パフォーマンスを披露した。文武両道を備えた 1 つの展示エリアには、多くの専門的な聴衆とメディアが集まりました。弾性ストリングのエレガントな演奏により、S1 は、スピード、強さ、正確さを備えた繊細な操作と絶対的なコントロールを発揮します。 CCTVニュースは、「書道」の背後にある模倣学習とインテリジェント制御に関する特別レポートを実施し、同社の創設者ライ・ジエ氏は、滑らかな動きの背後にあるハードウェア側が最高の力制御と最も人間らしい身体指標(速度、負荷)を追求していると説明した。など)、AI側では人の実際の動きのデータが収集され、強い状況に遭遇したときにロボットがより強くなり、急速に進化することを学習することができます。そしてアジャイル

Li Feifei 氏のチームは、ロボットに空間知能を与え、GPT-4o を統合する ReKep を提案しました

Sep 03, 2024 pm 05:18 PM

Li Feifei 氏のチームは、ロボットに空間知能を与え、GPT-4o を統合する ReKep を提案しました

Sep 03, 2024 pm 05:18 PM

ビジョンとロボット学習の緊密な統合。最近話題の1X人型ロボットNEOと合わせて、2つのロボットハンドがスムーズに連携して服をたたむ、お茶を入れる、靴を詰めるといった動作をしていると、いよいよロボットの時代が到来するのではないかと感じられるかもしれません。実際、これらの滑らかな動きは、高度なロボット技術 + 精緻なフレーム設計 + マルチモーダル大型モデルの成果です。有用なロボットは多くの場合、環境との複雑かつ絶妙な相互作用を必要とし、環境は空間領域および時間領域の制約として表現できることがわかっています。たとえば、ロボットにお茶を注いでもらいたい場合、ロボットはまずティーポットのハンドルを掴んで、お茶をこぼさないように垂直に保ち、次にポットの口がカップの口と揃うまでスムーズに動かす必要があります。 、そしてティーポットを一定の角度に傾けます。これ

ACL 2024 賞の発表: HuaTech による Oracle 解読に関する最優秀論文の 1 つ、GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 賞の発表: HuaTech による Oracle 解読に関する最優秀論文の 1 つ、GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

貢献者はこの ACL カンファレンスから多くのことを学びました。 6日間のACL2024がタイのバンコクで開催されています。 ACL は、計算言語学と自然言語処理の分野におけるトップの国際会議で、国際計算言語学協会が主催し、毎年開催されます。 ACL は NLP 分野における学術的影響力において常に第一位にランクされており、CCF-A 推奨会議でもあります。今年の ACL カンファレンスは 62 回目であり、NLP 分野における 400 以上の最先端の作品が寄せられました。昨日の午後、カンファレンスは最優秀論文およびその他の賞を発表しました。今回の優秀論文賞は7件(未発表2件)、最優秀テーマ論文賞1件、優秀論文賞35件です。このカンファレンスでは、3 つの Resource Paper Award (ResourceAward) と Social Impact Award (

宏蒙スマートトラベルS9とフルシナリオ新製品発売カンファレンス、多数の大ヒット新製品が一緒にリリースされました

Aug 08, 2024 am 07:02 AM

宏蒙スマートトラベルS9とフルシナリオ新製品発売カンファレンス、多数の大ヒット新製品が一緒にリリースされました

Aug 08, 2024 am 07:02 AM

今日の午後、Hongmeng Zhixingは新しいブランドと新車を正式に歓迎しました。 8月6日、ファーウェイはHongmeng Smart Xingxing S9およびファーウェイのフルシナリオ新製品発表カンファレンスを開催し、パノラマスマートフラッグシップセダンXiangjie S9、新しいM7ProおよびHuawei novaFlip、MatePad Pro 12.2インチ、新しいMatePad Air、Huawei Bisheng Withを発表しました。レーザー プリンタ X1 シリーズ、FreeBuds6i、WATCHFIT3、スマート スクリーン S5Pro など、スマート トラベル、スマート オフィスからスマート ウェアに至るまで、多くの新しいオールシナリオ スマート製品を開発し、ファーウェイは消費者にスマートな体験を提供するフル シナリオのスマート エコシステムを構築し続けています。すべてのインターネット。宏孟志興氏:スマートカー業界のアップグレードを促進するための徹底的な権限付与 ファーウェイは中国の自動車業界パートナーと提携して、

AI の使用 | Microsoft CEO のクレイジーなアムウェイ AI ゲームは私を何千回も苦しめた

Aug 14, 2024 am 12:00 AM

AI の使用 | Microsoft CEO のクレイジーなアムウェイ AI ゲームは私を何千回も苦しめた

Aug 14, 2024 am 12:00 AM

Machine Power Report 編集者: Yang Wen 大型モデルや AIGC に代表される人工知能の波は、私たちの生活や働き方を静かに変えていますが、ほとんどの人はまだその使い方を知りません。そこで、直感的で興味深く簡潔な人工知能のユースケースを通じてAIの活用方法を詳しく紹介し、皆様の思考を刺激するコラム「AI in Use」を立ち上げました。また、読者が革新的な実践的な使用例を提出することも歓迎します。なんと、AIは本当に天才になってしまったのです。最近、AIが生成した写真の真贋を見分けるのが難しいと話題になっています。 (詳しくはこちら:AI活用中 | 3ステップでAI美女になり、1秒でAIに元に戻される) インターネット上で人気のAI Google ladyのほかにも、さまざまなFLUXジェネレーターが登場しています。ソーシャルプラットフォーム上に出現した