Classification of concurrent programming models

Concurrent programming has to address two key issues: how threads communicate and how threads synchronize (here, "threads" means any active entities that execute concurrently). Communication is the mechanism by which threads exchange information. In imperative programming, there are two communication mechanisms between threads: shared memory and message passing.

In the shared memory concurrency model, threads share the program's common state and communicate implicitly by writing and reading that state in memory. In the message passing concurrency model, threads have no common state and must communicate explicitly by sending messages.

Synchronization is the mechanism a program uses to control the relative order of operations in different threads. In the shared memory concurrency model, synchronization is explicit: programmers must specify that a method or block of code is to be executed with mutual exclusion between threads. In the message passing concurrency model, synchronization is implicit, because a message must be sent before it can be received.
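The contrast between the two models can be sketched in Java as follows. This is only an illustration: the class and method names are hypothetical, and `BlockingQueue` is itself built on shared memory inside the JVM, so it stands in for a message channel here rather than being a true message passing runtime.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class; none of these names come from the article.
final class CommunicationModels {
    private static int counter = 0;                  // shared state
    private static final Object LOCK = new Object();

    // Shared memory: both threads update the same field, and the
    // programmer states the mutual exclusion explicitly.
    static int sharedMemory() throws InterruptedException {
        counter = 0;
        Runnable work = () -> {
            for (int i = 0; i < 1000; i++) {
                synchronized (LOCK) {
                    counter++;
                }
            }
        };
        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return counter;                              // safe to read after join()
    }

    // Message passing: values are sent explicitly, and the ordering is
    // implicit because take() cannot return a value before put() sent it.
    static int messagePassing() throws InterruptedException {
        BlockingQueue<Integer> channel = new ArrayBlockingQueue<>(16);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= 10; i++) {
                    channel.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        for (int i = 0; i < 10; i++) {
            sum += channel.take();
        }
        producer.join();
        return sum;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(sharedMemory());   // prints 2000
        System.out.println(messagePassing()); // prints 55
    }
}
```

In the first method the programmer has to name the critical section; in the second, no lock appears in user code because the ordering comes from the send and receive themselves.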

Java's concurrency uses the shared memory model. Communication between Java threads is always implicit, and the whole communication process is transparent to programmers. A Java programmer who writes multithreaded programs without understanding how this implicit inter-thread communication works is likely to run into all kinds of strange memory visibility problems.

Abstraction of Java memory model

In Java, all instance fields, static fields, and array elements are stored in heap memory, and heap memory is shared between threads (this article uses the term "shared variables" to refer to instance fields, static fields, and array elements). Local variables, method parameters (called formal method parameters in the Java Language Specification), and exception handler parameters are not shared between threads. They have no memory visibility issues and are not affected by the memory model.
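As a quick illustration of that classification, the sketch below labels each kind of variable in one small class. The class and its members are hypothetical and exist only to carry the labels.

```java
// Hypothetical class used only to label which variables count as shared.
final class SharedVariableKinds {
    static int staticField;             // shared: static field
    int instanceField;                  // shared: instance field
    final int[] elements = new int[4];  // shared: array elements

    int sum(int parameter) {            // not shared: formal method parameter
        int local = parameter * 2;      // not shared: local variable
        try {
            return local + elements[parameter];
        } catch (ArrayIndexOutOfBoundsException e) { // not shared: handler parameter
            return local;
        }
    }

    public static void main(String[] args) {
        SharedVariableKinds s = new SharedVariableKinds();
        s.elements[1] = 5;
        System.out.println(s.sum(1));   // prints 7
        System.out.println(s.sum(9));   // prints 18 (index out of range)
    }
}
```

Note that a local variable holding a reference is itself unshared, but the object it refers to lives on the heap, so that object's fields are still shared variables.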

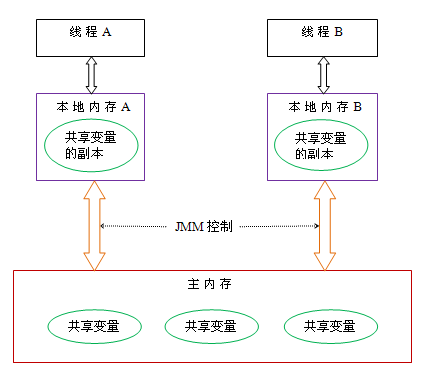

Communication between Java threads is controlled by the Java Memory Model (referred to as JMM in this article). JMM determines when a write to a shared variable by one thread becomes visible to another thread. From an abstract point of view, JMM defines the relationship between threads and main memory: shared variables are stored in main memory, and each thread has a private local memory that holds the thread's copies of the shared variables it reads and writes. Local memory is an abstraction of JMM and does not physically exist. It covers caches, write buffers, registers, and other hardware and compiler optimizations. The abstract schematic diagram of the Java memory model is as follows:

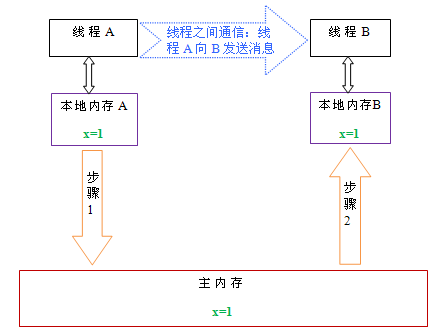

As the figure above shows, if thread A and thread B want to communicate, they must go through the following two steps:

First, thread A flushes the shared variables it has updated in local memory A to main memory.

Then, thread B reads from main memory the shared variables that thread A has updated.

The following is a schematic diagram to illustrate these two steps:

As shown in the figure above, local memory A and local memory B each hold a copy of the shared variable x in main memory. Assume that x is initially 0 in all three memories. While thread A executes, it temporarily stores the updated value of x (say, 1) in its own local memory A. When thread A and thread B need to communicate, thread A first flushes the modified x from its local memory to main memory, so x in main memory becomes 1. Thread B then reads thread A's updated x from main memory, so x in thread B's local memory also becomes 1.

Overall, these two steps are essentially thread A sending messages to thread B, and this communication process must go through the main memory. JMM provides Java programmers with memory visibility guarantees by controlling the interaction between main memory and each thread's local memory.
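A minimal sketch of these two steps, assuming a `volatile` field is used to obtain the visibility guarantee (the article has not introduced `volatile` at this point, and the class and method names are hypothetical):

```java
// Hypothetical demo class; names are not from the article.
final class VisibilityDemo {
    // volatile asks JMM to make thread A's write visible to thread B's
    // later reads. With a plain field, JMM would permit thread B to keep
    // seeing 0, so the loop below might never end.
    private static volatile int x = 0;

    static int communicate() throws InterruptedException {
        x = 0;
        int[] seenByB = new int[1];
        Thread b = new Thread(() -> {
            while (x == 0) {        // step 2: B reads x until A's update is visible
                Thread.yield();
            }
            seenByB[0] = x;
        });
        Thread a = new Thread(() -> x = 1);  // step 1: A publishes the updated x
        b.start();
        a.start();
        a.join();
        b.join();
        return seenByB[0];          // safe to read after join()
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(communicate());   // prints 1
    }
}
```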

Reordering

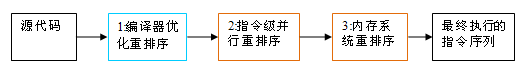

In order to improve performance when executing a program, compilers and processors often reorder instructions. There are three types of reordering:

1. Compiler-optimized reordering. The compiler can rearrange the execution order of statements without changing the semantics of a single-threaded program.

2. Instruction-level parallel reordering. Modern processors use instruction-level parallelism (ILP) to execute multiple instructions in an overlapping manner. If there are no data dependencies, the processor can change the execution order of the machine instructions that correspond to the statements.

3. Memory system reordering. Because the processor uses caches and read/write buffers, load and store operations can appear to execute out of order.

On its way from Java source code to the instruction sequence that is finally executed, a program goes through these three kinds of reordering in turn:

Of the three types above, the first is compiler reordering, and the second and third are processor reordering. These reorderings can cause memory visibility problems in multithreaded programs. For compilers, JMM's compiler reordering rules prohibit specific types of compiler reordering (not all compiler reordering is prohibited). For processors, JMM's processor reordering rules require the Java compiler to insert specific types of memory barrier instructions (Intel calls them memory fences) when generating instruction sequences. These barrier instructions prohibit specific types of processor reordering (not all processor reordering is prohibited).

JMM is a language-level memory model. By prohibiting specific types of compiler reordering and processor reordering, it gives programmers consistent memory visibility guarantees across different compilers and different processor platforms.

Processor reordering and memory barrier instructions

Modern processors use write buffers to temporarily hold data that is being written to memory. A write buffer keeps the instruction pipeline running and avoids the delay caused by the processor stalling while it waits for data to reach memory. In addition, by flushing the write buffer in batches and merging multiple writes to the same memory address, it reduces memory bus usage. Despite these benefits, the write buffer on each processor is visible only to that processor. This has an important effect on the order of memory operations: the order in which a processor performs its reads and writes is not necessarily the order in which those reads and writes actually take effect in memory. To illustrate, consider the following example:

| Processor A | Processor B |
| --- | --- |
| a = 1; //A1 | b = 2; //B1 |
| x = b; //A2 | y = a; //B2 |

Initial state: a = b = 0

Result that the processors are allowed to produce: x = y = 0

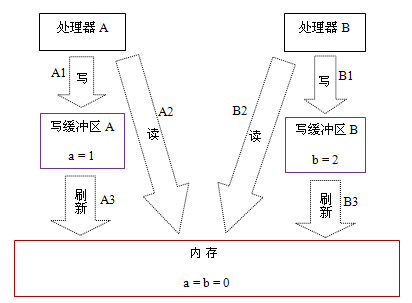

Assume that processor A and processor B execute their memory accesses in parallel, each in program order. The final result may still be x = y = 0. The specific reason is illustrated in the figure:

Here, processor A and processor B can each write a shared variable into their own write buffer at the same time (A1, B1), then read the other shared variable from memory (A2, B2), and only afterwards flush the dirty data held in their write buffers to memory (A3, B3). With this timing, the program can produce x = y = 0.

Judging from the order in which the memory operations actually take effect, the write A1 is not really performed until processor A executes A3 and flushes its write buffer. Although processor A issues its memory operations in the order A1->A2, they actually take effect in the order A2->A1. Processor A's memory operations have therefore been reordered (the same applies to processor B, so the details are not repeated here).

The key point is that, because a write buffer is visible only to its own processor, the order in which the processor performs memory operations can differ from the order in which those operations actually take effect in memory. Because modern processors all use write buffers, they all allow write-read (Store-Load) reordering.
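The timing described above can be reproduced deterministically with a toy, single-threaded model of two processors that each own a private write buffer. This is only a sketch of the idea: the `Processor` class and its `store`, `load`, and `flush` methods are hypothetical names, and real hardware is far more involved.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Toy model of the A1..B3 timing; not a description of real hardware.
final class WriteBufferSimulation {
    static final class Processor {
        private final Map<String, Integer> memory;   // shared main memory
        private final Map<String, Integer> writeBuffer = new LinkedHashMap<>();

        Processor(Map<String, Integer> memory) {
            this.memory = memory;
        }

        // A store only reaches the private buffer; writes to the same
        // address are merged.
        void store(String variable, int value) {
            writeBuffer.put(variable, value);
        }

        // A load sees this processor's own buffered writes, but never
        // the other processor's buffer.
        int load(String variable) {
            Integer buffered = writeBuffer.get(variable);
            return buffered != null ? buffered : memory.get(variable);
        }

        // Flushing makes the buffered writes visible in main memory.
        void flush() {
            memory.putAll(writeBuffer);
            writeBuffer.clear();
        }
    }

    static int[] run() {
        Map<String, Integer> memory = new HashMap<>();
        memory.put("a", 0);
        memory.put("b", 0);
        Processor pa = new Processor(memory);
        Processor pb = new Processor(memory);

        pa.store("a", 1);        // A1: buffered only
        pb.store("b", 2);        // B1: buffered only
        int x = pa.load("b");    // A2: memory still holds b = 0
        int y = pb.load("a");    // B2: memory still holds a = 0
        pa.flush();              // A3
        pb.flush();              // B3
        return new int[] {x, y, memory.get("a"), memory.get("b")};
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(run()));  // prints [0, 0, 1, 2]
    }
}
```

Both loads return 0 even though both stores were issued first, which is the Store-Load reordering seen from the point of view of memory.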

The following is a list of the reordering types allowed by common processors:

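| Processor | Load-Load | Load-Store | Store-Store | Store-Load | Data dependency |
| --- | --- | --- | --- | --- | --- |
| sparc-TSO | N | N | N | Y | N |
| x86 | N | N | N | Y | N |
| ia64 | Y | Y | Y | Y | N |
| PowerPC | Y | Y | Y | Y | N |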

An "N" in a table cell indicates that the processor does not allow the two operations to be reordered, and a "Y" indicates that reordering is allowed.

We can see from the above table: Common processors allow Store-Load reordering; common processors do not allow reordering of operations with data dependencies. sparc-TSO and x86 have relatively strong processor memory models that only allow reordering of write-read operations (because they both use write buffers).

※Note 1: sparc-TSO refers to the characteristics of the sparc processor when running in the TSO (Total Store Order) memory model.

※Note 2: x86 in the above table includes x64 and AMD64.

※Note 3: Since the memory model of ARM processor is very similar to that of PowerPC processor, this article will ignore it.

※Note 4: Data dependency will be specifically explained later.

In order to ensure memory visibility, the Java compiler will insert memory barrier instructions at appropriate locations in the generated instruction sequence to prohibit specific types of processor reordering. JMM divides memory barrier instructions into the following four categories:

| Barrier type | Instruction example | Description |
| --- | --- | --- |
| LoadLoad Barriers | Load1; LoadLoad; Load2 | Ensures that Load1's data is loaded before Load2 and all subsequent load instructions load their data. |
| StoreStore Barriers | Store1; StoreStore; Store2 | Ensures that Store1's data is visible to other processors (flushed to memory) before Store2 and all subsequent store instructions store their data. |
| LoadStore Barriers | Load1; LoadStore; Store2 | Ensures that Load1's data is loaded before Store2 and all subsequent store instructions are flushed to memory. |
| StoreLoad Barriers | Store1; StoreLoad; Load2 | Ensures that Store1's data becomes visible to other processors (flushed to memory) before Load2 and all subsequent load instructions load their data. A StoreLoad barrier makes all memory access instructions (stores and loads) before the barrier complete before any memory access instruction after the barrier executes. |

The StoreLoad barrier is an "all-purpose" barrier that has the effects of the other three barriers combined. Most modern multiprocessors support it (the other barrier types are not necessarily supported by every processor). Executing this barrier can be expensive, because current processors typically have to flush all the data in the write buffer to memory (a full buffer flush).
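At the Java source level, barriers are not written by hand; they follow from language constructs such as `volatile`. The sketch below reruns the earlier a/b/x/y example with `a` and `b` declared `volatile`. It assumes, as is commonly described for JVM implementations, that a StoreLoad barrier ends up between each volatile store and the volatile load that follows it; that placement is an implementation detail. What JMM itself guarantees is the outcome: x = y = 0 can no longer occur. The class and method names are hypothetical.

```java
// Hypothetical litmus test; names are not from the article.
final class StoreLoadLitmus {
    static volatile int a, b;   // volatile: Store-Load reordering is forbidden
    static int x, y;            // results, read only after join()

    // Runs the example repeatedly and counts how often x == 0 && y == 0.
    static int countBothZero(int iterations) throws InterruptedException {
        int bothZero = 0;
        for (int i = 0; i < iterations; i++) {
            a = 0;
            b = 0;
            Thread ta = new Thread(() -> { a = 1; x = b; });  // A1, A2
            Thread tb = new Thread(() -> { b = 2; y = a; });  // B1, B2
            ta.start();
            tb.start();
            ta.join();
            tb.join();
            if (x == 0 && y == 0) {
                bothZero++;
            }
        }
        return bothZero;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countBothZero(1000));  // prints 0
    }
}
```

If `volatile` is removed from `a` and `b`, the result x = y = 0 becomes permitted again, although whether it is actually observed depends on the hardware and the JIT compiler.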

happens-before

Starting with JDK 5, Java uses the new JSR-133 memory model (unless otherwise stated, this article refers to the JSR-133 memory model). JSR-133 introduces the concept of happens-before and uses it to describe memory visibility between operations. If the result of one operation needs to be visible to another operation, there must be a happens-before relationship between the two. The two operations can be in the same thread or in different threads. The happens-before rules most relevant to programmers are as follows:

Program order rule: Each operation in a thread happens-before any subsequent operation in that thread.

Monitor lock rule: Unlocking a monitor lock happens-before every subsequent locking of that same monitor lock.

Volatile variable rule: A write to a volatile field happens-before any subsequent read of that same volatile field.

Transitivity: If A happens-before B, and B happens-before C, then A happens-before C.
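The rules above combine in a common publication pattern, sketched below with hypothetical names. The write to `data` (1) happens-before the volatile write (2) by the program order rule, (2) happens-before the volatile read (3) that observes it, and (3) happens-before the read of `data` (4) by the program order rule again. By transitivity, (1) happens-before (4), so the reader is guaranteed to see 42 even though `data` is a plain field.

```java
// Hypothetical demo class; names are not from the article.
final class HappensBeforeDemo {
    private static int data = 0;                   // plain field
    private static volatile boolean ready = false; // volatile flag

    static int publishAndRead() throws InterruptedException {
        data = 0;
        ready = false;
        int[] observed = new int[1];
        Thread reader = new Thread(() -> {
            while (!ready) {          // (3) volatile read, repeated until true
                Thread.yield();
            }
            observed[0] = data;       // (4) guaranteed to see 42
        });
        Thread writer = new Thread(() -> {
            data = 42;                // (1) plain write
            ready = true;             // (2) volatile write
        });
        reader.start();
        writer.start();
        writer.join();
        reader.join();
        return observed[0];           // safe to read after join()
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(publishAndRead());  // prints 42
    }
}
```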

Note that a happens-before relationship between two operations does not mean that the first operation must actually execute before the second. happens-before only requires that the result of the first operation be visible to the second, and that the first operation be ordered before the second (the first is visible to and ordered before the second). The definition of happens-before is subtle, and a later article explains why it is defined this way.

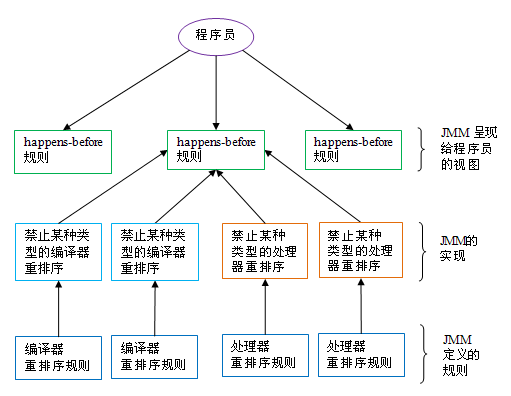

The relationship between happens-before and JMM is shown in the figure below:

As shown in the figure above, one happens-before rule usually corresponds to multiple compiler reordering rules and processor reordering rules. For Java programmers, the happens-before rules are simple and easy to understand. They spare programmers from having to learn the complex reordering rules, and how those rules are implemented, just to understand the memory visibility guarantees that JMM provides.
