HBase + Hadoop Installation and Deployment

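I installed several RedHat Linux guests under VMware and compiled these notes from various online sources. Following the steps in order should give a working installation.

1. Create the user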

groupadd bigdata

useradd -g bigdata hadoop

passwd hadoop

2. Set up the JDK and environment variables

vi /etc/profile

Add:

export JAVA_HOME=/usr/lib/java-1.7.0_07

export CLASSPATH=.

export HADOOP_HOME=/home/hadoop/hadoop

export HBASE_HOME=/home/hadoop/hbase

export HADOOP_MAPRED_HOME=${HADOOP_HOME}

export HADOOP_COMMON_HOME=${HADOOP_HOME}

export HADOOP_HDFS_HOME=${HADOOP_HOME}

export YARN_HOME=${HADOOP_HOME}

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HDFS_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export YARN_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HBASE_CONF_DIR=${HBASE_HOME}/conf

export ZK_HOME=/home/hadoop/zookeeper

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HBASE_HOME/bin:$HADOOP_HOME/sbin:$ZK_HOME/bin:$PATH

source /etc/profile

chmod 777 -R /usr/lib/java-1.7.0_07
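After sourcing /etc/profile it can help to confirm that the variables took effect. The following is a minimal sketch, not part of the original notes; the function name check_env is illustrative, and the list covers only some of the variables exported above.

```shell
#!/bin/sh
# Report which of the variables exported in /etc/profile are unset or empty.
# check_env is an illustrative helper, not part of Hadoop or HBase.
check_env() {
    missing=""
    for name in JAVA_HOME HADOOP_HOME HBASE_HOME ZK_HOME \
                HADOOP_CONF_DIR HBASE_CONF_DIR; do
        # Indirect lookup of the variable called $name (POSIX-compatible).
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            missing="$missing $name"
        fi
    done
    if [ -n "$missing" ]; then
        echo "unset:$missing"
        return 1
    fi
    echo "all set"
}

check_env || true
```

If any name is reported as unset, re-check the export lines in /etc/profile and run source /etc/profile again.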

3. Edit /etc/hosts

vi /etc/hosts

Add:

172.16.254.215   master

172.16.254.216   salve1

172.16.254.217   salve2

172.16.254.218   salve3
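Because the same entries have to be added on every machine, a small script that adds them only once can save repeated manual editing. This is a sketch under assumptions: add_host and HOSTS_FILE are illustrative names, and the default target is a scratch file rather than /etc/hosts so it can be tried without root.

```shell
#!/bin/sh
# Append each "IP hostname" pair to a hosts file only if the hostname is not
# already present. HOSTS_FILE defaults to a scratch file; point it at
# /etc/hosts (as root) to apply the entries for real.
HOSTS_FILE="${HOSTS_FILE:-./hosts.sketch}"

add_host() {
    ip="$1"; name="$2"
    touch "$HOSTS_FILE"
    # Match the hostname as a whole whitespace-delimited field.
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                           END { exit !found }' "$HOSTS_FILE"; then
        printf '%s\t%s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

add_host 172.16.254.215 master
add_host 172.16.254.216 salve1
add_host 172.16.254.217 salve2
add_host 172.16.254.218 salve3
```

Running it a second time leaves the file unchanged, which makes it safe to reuse on a machine that was already partly configured.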

4. Set up passwordless SSH

On the 215 server (master):

su - root

vi /etc/ssh/sshd_config

Make sure it contains the following:

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile      .ssh/authorized_keys

Restart sshd:

service sshd restart

su - hadoop

ssh-keygen -t rsa

cd /home/hadoop/.ssh

cat id_rsa.pub >> authorized_keys

chmod 600 authorized_keys

On 216, 217, and 218, run:

mkdir /home/hadoop/.ssh

chmod 700 /home/hadoop/.ssh

On 215, run (from /home/hadoop/.ssh):

scp id_rsa.pub hadoop@salve1:/home/hadoop/.ssh/

scp id_rsa.pub hadoop@salve2:/home/hadoop/.ssh/

scp id_rsa.pub hadoop@salve3:/home/hadoop/.ssh/

On 216, 217, and 218, run:

cat /home/hadoop/.ssh/id_rsa.pub >> /home/hadoop/.ssh/authorized_keys

chmod 600 /home/hadoop/.ssh/authorized_keys
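Re-running the cat >> step appends the same key again each time. The sketch below wraps the slave-side steps in one function that adds the key only once and applies the permissions used above (700 on the directory, 600 on the file). The name install_key is illustrative and not part of OpenSSH.

```shell
#!/bin/sh
# install_key PUBKEY SSH_DIR
# Adds PUBKEY to SSH_DIR/authorized_keys once and applies the permissions
# used in this guide (700 on the directory, 600 on the file).
install_key() {
    pubkey="$1"; ssh_dir="$2"
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    # -F fixed string, -x whole line, -q quiet: skip keys already present.
    if ! grep -qxF -f "$pubkey" "$ssh_dir/authorized_keys"; then
        cat "$pubkey" >> "$ssh_dir/authorized_keys"
    fi
    chmod 600 "$ssh_dir/authorized_keys"
}
```

On each slave this would be called as install_key /home/hadoop/.ssh/id_rsa.pub /home/hadoop/.ssh. Afterwards, ssh hadoop@salve1 from the master should log in without asking for a password.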

5. Install Hadoop, HBase, and ZooKeeper

su - hadoop

mkdir /home/hadoop/hadoop

mkdir /home/hadoop/hbase

mkdir /home/hadoop/zookeeper

cp -r /home/hadoop/soft/hadoop-2.0.1-alpha/* /home/hadoop/hadoop/

cp -r /home/hadoop/soft/hbase-0.95.0-hadoop2/* /home/hadoop/hbase/

cp -r /home/hadoop/soft/zookeeper-3.4.5/* /home/hadoop/zookeeper/

1) Hadoop configuration

vi /home/hadoop/hadoop/etc/hadoop/hadoop-env.sh

Set:

export JAVA_HOME=/usr/lib/java-1.7.0_07

export HBASE_MANAGES_ZK=true

vi /home/hadoop/hadoop/etc/hadoop/core-site.xml

Add:
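The properties originally listed for core-site.xml did not survive in this copy of the notes. As a hedged sketch only: the test commands later in this guide address HDFS at hdfs://172.16.254.215:9000, so a minimal file would plausibly set fs.defaultFS to the master on port 9000. The CONF_DIR variable and its scratch default are assumptions for illustration.

```shell
#!/bin/sh
# Write a minimal core-site.xml. CONF_DIR defaults to a scratch directory;
# set it to /home/hadoop/hadoop/etc/hadoop to write the real file.
CONF_DIR="${CONF_DIR:-./conf.sketch}"
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Assumed: NameNode on master, port 9000, matching the hdfs:// URLs
       used in the test commands of this guide. -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
</configuration>
EOF
```

Any further properties the original author set here are unknown and are not reconstructed.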

vi /home/hadoop/hadoop/etc/hadoop/slaves

Add (the master does not act as a slave):

salve1

salve2

salve3

vi /home/hadoop/hadoop/etc/hadoop/hdfs-site.xml

Add:
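The hdfs-site.xml properties were also lost from this copy. The following is an illustrative sketch rather than the author's configuration: the property names are standard Hadoop 2.x settings, but the replication factor and both storage paths are assumptions chosen for a three-DataNode cluster.

```shell
#!/bin/sh
# Write a minimal hdfs-site.xml. CONF_DIR defaults to a scratch directory;
# set it to /home/hadoop/hadoop/etc/hadoop to write the real file.
CONF_DIR="${CONF_DIR:-./conf.sketch}"
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Assumed: one replica per DataNode (salve1, salve2, salve3). -->
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <!-- Hypothetical storage paths; pick locations with enough disk space. -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///home/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///home/hadoop/dfs/data</value>
  </property>
</configuration>
EOF
```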

vi /home/hadoop/hadoop/etc/hadoop/yarn-site.xml

Add:

2) HBase configuration

vi /home/hadoop/hbase/conf/hbase-site.xml

Add:
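The hbase-site.xml properties are missing from this copy as well. A plausible minimal version can be inferred from the rest of the guide: the /hbase directory is created in HDFS on the master at port 9000, HBASE_MANAGES_ZK is false, and zoo.cfg lists salve1 to salve3 on client port 2181. Treat the values below as assumptions derived from those steps; CONF_DIR and its scratch default are illustrative.

```shell
#!/bin/sh
# Write a minimal hbase-site.xml. CONF_DIR defaults to a scratch directory;
# set it to /home/hadoop/hbase/conf to write the real file.
CONF_DIR="${CONF_DIR:-./hbase-conf.sketch}"
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/hbase-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Assumed: HBase data lives under /hbase on the master's HDFS. -->
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
  </property>
  <!-- Fully distributed mode with an external ZooKeeper ensemble. -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>salve1,salve2,salve3</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
</configuration>
EOF
```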

vi /home/hadoop/hbase/conf/regionservers

Add:

salve1

salve2

salve3

vi /home/hadoop/hbase/conf/hbase-env.sh

Set:

export JAVA_HOME=/usr/lib/java-1.7.0_07

export HBASE_MANAGES_ZK=false

3) ZooKeeper configuration

vi /home/hadoop/zookeeper/conf/zoo.cfg

Add:

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/home/hadoop/zookeeper/data

clientPort=2181

server.1=salve1:2888:3888

server.2=salve2:2888:3888

server.3=salve3:2888:3888

Copy /home/hadoop/zookeeper/conf/zoo.cfg to /home/hadoop/hbase/.

4) Sync the master to the slaves

scp -r /home/hadoop/hadoop hadoop@salve1:/home/hadoop

scp -r /home/hadoop/hbase hadoop@salve1:/home/hadoop

scp -r /home/hadoop/zookeeper hadoop@salve1:/home/hadoop

scp -r /home/hadoop/hadoop hadoop@salve2:/home/hadoop

scp -r /home/hadoop/hbase hadoop@salve2:/home/hadoop

scp -r /home/hadoop/zookeeper hadoop@salve2:/home/hadoop

scp -r /home/hadoop/hadoop hadoop@salve3:/home/hadoop

scp -r /home/hadoop/hbase hadoop@salve3:/home/hadoop

scp -r /home/hadoop/zookeeper hadoop@salve3:/home/hadoop
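The nine copy commands follow one pattern (three directories to three hosts), so they can be written as a nested loop. This is a sketch: sync_slaves and DRY_RUN are illustrative names, and by default the commands are only printed so the list can be reviewed first.

```shell
#!/bin/sh
# Copy the three install directories to every slave. With DRY_RUN=1 (the
# default here) the scp commands are only printed; set DRY_RUN=0 to run them.
DRY_RUN="${DRY_RUN:-1}"

sync_slaves() {
    for host in salve1 salve2 salve3; do
        for dir in hadoop hbase zookeeper; do
            if [ "$DRY_RUN" = "1" ]; then
                echo scp -r "/home/hadoop/$dir" "hadoop@$host:/home/hadoop"
            else
                scp -r "/home/hadoop/$dir" "hadoop@$host:/home/hadoop" || return 1
            fi
        done
    done
}

sync_slaves
```

The loop relies on the passwordless SSH set up earlier; without it, each scp prompts for the hadoop user's password.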

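Set the ZooKeeper ID on salve1, salve2, and salve3. Each host gets the number from its own server.N line in zoo.cfg; create /home/hadoop/zookeeper/data first if it does not exist.

On salve1:

echo "1" > /home/hadoop/zookeeper/data/myid

On salve2:

echo "2" > /home/hadoop/zookeeper/data/myid

On salve3:

echo "3" > /home/hadoop/zookeeper/data/myid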
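Since each myid must match that host's server.N entry, the value can be read from zoo.cfg instead of being typed by hand. A minimal sketch, assuming the hostname in zoo.cfg is written exactly as passed in; myid_for is an illustrative helper, not a ZooKeeper command.

```shell
#!/bin/sh
# myid_for HOST ZOO_CFG
# Print N from the "server.N=HOST:..." line in ZOO_CFG; fail if HOST is absent.
myid_for() {
    host="$1"; cfg="$2"
    id="$(sed -n "s/^server\.\([0-9][0-9]*\)=$host:.*/\1/p" "$cfg" | head -n 1)"
    [ -n "$id" ] || return 1
    echo "$id"
}
```

On salve2, for example, myid_for salve2 /home/hadoop/zookeeper/conf/zoo.cfg prints 2 with the zoo.cfg shown above. Check that the command succeeds before redirecting its output into /home/hadoop/zookeeper/data/myid, because a failed lookup would otherwise leave an empty file.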

5) Testing

Test Hadoop:

hadoop namenode -format -clusterid clustername

start-all.sh

hadoop fs -ls hdfs://172.16.254.215:9000/

hadoop fs -mkdir hdfs://172.16.254.215:9000/hbase

# hadoop fs -copyFromLocal ./install.log hdfs://172.16.254.215:9000/testfolder

# hadoop fs -ls hdfs://172.16.254.215:9000/testfolder

# hadoop fs -put /usr/hadoop/hadoop-2.0.1-alpha/*.txt hdfs://172.16.254.215:9000/testfolder

# cd /usr/hadoop/hadoop-2.0.1-alpha/share/hadoop/mapreduce

# hadoop jar hadoop-mapreduce-examples-2.0.1-alpha.jar wordcount hdfs://172.16.254.215:9000/testfolder hdfs://172.16.254.215:9000/output

# hadoop fs -ls hdfs://172.16.254.215:9000/output

# hadoop fs -cat hdfs://172.16.254.215:9000/output/part-r-00000

Start ZooKeeper on salve1, salve2, and salve3:

zkServer.sh start

Start HBase:

start-hbase.sh

Enter the HBase shell:

hbase shell

Test HBase:

list

create 'student','name','address'

put 'student','1','name','tom'

get 'student','1'
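The same smoke test can be run without typing into the interactive shell by passing a script file to hbase shell. This is a sketch under assumptions: SCRIPT is a hypothetical scratch path, and the hbase launcher is only invoked if it is found on PATH.

```shell
#!/bin/sh
# Write the smoke-test commands to a script file. SCRIPT is a hypothetical
# scratch path; the trailing "exit" keeps the shell from going interactive.
SCRIPT="${SCRIPT:-./hbase-smoke.rb}"

cat > "$SCRIPT" <<'EOF'
list
create 'student','name','address'
put 'student','1','name','tom'
get 'student','1'
exit
EOF

# Run it only when the hbase launcher is on PATH.
if command -v hbase >/dev/null 2>&1; then
    hbase shell "$SCRIPT"
else
    echo "hbase not found on PATH; wrote $SCRIPT"
fi
```

Running it a second time makes the create step report that the table already exists, so drop the table first if a clean rerun is needed.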


Original article: "Hbase+Hadoop安装部署" (HBase + Hadoop Installation and Deployment). Thanks to the original author for sharing.

핫 AI 도구

Undresser.AI Undress

사실적인 누드 사진을 만들기 위한 AI 기반 앱

AI Clothes Remover

사진에서 옷을 제거하는 온라인 AI 도구입니다.

Undress AI Tool

무료로 이미지를 벗다

Clothoff.io

AI 옷 제거제

Video Face Swap

완전히 무료인 AI 얼굴 교환 도구를 사용하여 모든 비디오의 얼굴을 쉽게 바꾸세요!

인기 기사

뜨거운 도구

메모장++7.3.1

사용하기 쉬운 무료 코드 편집기

SublimeText3 중국어 버전

중국어 버전, 사용하기 매우 쉽습니다.

스튜디오 13.0.1 보내기

강력한 PHP 통합 개발 환경

드림위버 CS6

시각적 웹 개발 도구

SublimeText3 Mac 버전

신 수준의 코드 편집 소프트웨어(SublimeText3)

뜨거운 주제

7690

7690

15

15

1639

1639

14

14

1393

1393

52

52

1287

1287

25

25

1229

1229

29

29

Win11 시스템에서 중국어 언어 팩을 설치할 수 없는 문제에 대한 해결 방법

Mar 09, 2024 am 09:48 AM

Win11 시스템에서 중국어 언어 팩을 설치할 수 없는 문제에 대한 해결 방법

Mar 09, 2024 am 09:48 AM

Win11 시스템에서 중국어 언어 팩을 설치할 수 없는 문제 해결 Windows 11 시스템이 출시되면서 많은 사용자들이 새로운 기능과 인터페이스를 경험하기 위해 운영 체제를 업그레이드하기 시작했습니다. 그러나 일부 사용자는 업그레이드 후 중국어 언어 팩을 설치할 수 없어 경험에 문제가 있다는 사실을 발견했습니다. 이 기사에서는 Win11 시스템이 중국어 언어 팩을 설치할 수 없는 이유에 대해 논의하고 사용자가 이 문제를 해결하는 데 도움이 되는 몇 가지 솔루션을 제공합니다. 원인 분석 먼저 Win11 시스템의 무능력을 분석해 보겠습니다.

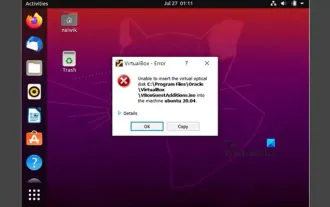

VirtualBox에 게스트 추가 기능을 설치할 수 없습니다

Mar 10, 2024 am 09:34 AM

VirtualBox에 게스트 추가 기능을 설치할 수 없습니다

Mar 10, 2024 am 09:34 AM

OracleVirtualBox의 가상 머신에 게스트 추가 기능을 설치하지 못할 수도 있습니다. Devices>InstallGuestAdditionsCDImage를 클릭하면 아래와 같이 오류가 발생합니다. VirtualBox - 오류: 가상 디스크를 삽입할 수 없습니다. C: 우분투 시스템에 FilesOracleVirtualBoxVBoxGuestAdditions.iso 프로그래밍 이 게시물에서는 어떤 일이 발생하는지 이해합니다. VirtualBox에 게스트 추가 기능을 설치할 수 없습니다. VirtualBox에 게스트 추가 기능을 설치할 수 없습니다. Virtua에 설치할 수 없는 경우

Baidu Netdisk를 성공적으로 다운로드했지만 설치할 수 없는 경우 어떻게 해야 합니까?

Mar 13, 2024 pm 10:22 PM

Baidu Netdisk를 성공적으로 다운로드했지만 설치할 수 없는 경우 어떻게 해야 합니까?

Mar 13, 2024 pm 10:22 PM

바이두 넷디스크 설치 파일을 성공적으로 다운로드 받았으나 정상적으로 설치가 되지 않는 경우, 소프트웨어 파일의 무결성에 문제가 있거나, 잔여 파일 및 레지스트리 항목에 문제가 있을 수 있으므로, 본 사이트에서 사용자들이 주의깊게 확인해 보도록 하겠습니다. Baidu Netdisk가 성공적으로 다운로드되었으나 설치가 되지 않는 문제에 대한 분석입니다. 바이두 넷디스크 다운로드에 성공했지만 설치가 되지 않는 문제 분석 1. 설치 파일의 무결성 확인: 다운로드한 설치 파일이 완전하고 손상되지 않았는지 확인하세요. 다시 다운로드하거나 신뢰할 수 있는 다른 소스에서 설치 파일을 다운로드해 보세요. 2. 바이러스 백신 소프트웨어 및 방화벽 끄기: 일부 바이러스 백신 소프트웨어 또는 방화벽 프로그램은 설치 프로그램이 제대로 실행되지 않도록 할 수 있습니다. 바이러스 백신 소프트웨어와 방화벽을 비활성화하거나 종료한 후 설치를 다시 실행해 보세요.

Linux에 Android 앱을 설치하는 방법은 무엇입니까?

Mar 19, 2024 am 11:15 AM

Linux에 Android 앱을 설치하는 방법은 무엇입니까?

Mar 19, 2024 am 11:15 AM

Linux에 Android 애플리케이션을 설치하는 것은 항상 많은 사용자의 관심사였습니다. 특히 Android 애플리케이션을 사용하려는 Linux 사용자의 경우 Linux 시스템에 Android 애플리케이션을 설치하는 방법을 익히는 것이 매우 중요합니다. Linux에서 직접 Android 애플리케이션을 실행하는 것은 Android 플랫폼에서만큼 간단하지는 않지만 에뮬레이터나 타사 도구를 사용하면 여전히 Linux에서 Android 애플리케이션을 즐겁게 즐길 수 있습니다. 다음은 Linux 시스템에 Android 애플리케이션을 설치하는 방법을 소개합니다.

Ubuntu 24.04에 Podman을 설치하는 방법

Mar 22, 2024 am 11:26 AM

Ubuntu 24.04에 Podman을 설치하는 방법

Mar 22, 2024 am 11:26 AM

Docker를 사용해 본 적이 있다면 데몬, 컨테이너 및 해당 기능을 이해해야 합니다. 데몬은 컨테이너가 시스템에서 이미 사용 중일 때 백그라운드에서 실행되는 서비스입니다. Podman은 Docker와 같은 데몬에 의존하지 않고 컨테이너를 관리하고 생성하기 위한 무료 관리 도구입니다. 따라서 장기적인 백엔드 서비스 없이도 컨테이너를 관리할 수 있는 장점이 있습니다. 또한 Podman을 사용하려면 루트 수준 권한이 필요하지 않습니다. 이 가이드에서는 Ubuntu24에 Podman을 설치하는 방법을 자세히 설명합니다. 시스템을 업데이트하려면 먼저 시스템을 업데이트하고 Ubuntu24의 터미널 셸을 열어야 합니다. 설치 및 업그레이드 프로세스 중에 명령줄을 사용해야 합니다. 간단한

Ubuntu 24.04에서 Ubuntu Notes 앱을 설치하고 실행하는 방법

Mar 22, 2024 pm 04:40 PM

Ubuntu 24.04에서 Ubuntu Notes 앱을 설치하고 실행하는 방법

Mar 22, 2024 pm 04:40 PM

고등학교에서 공부하는 동안 일부 학생들은 매우 명확하고 정확한 필기를 하며, 같은 수업을 받는 다른 학생들보다 더 많은 필기를 합니다. 어떤 사람들에게는 노트 필기가 취미인 반면, 어떤 사람들에게는 중요한 것에 대한 작은 정보를 쉽게 잊어버릴 때 필수입니다. Microsoft의 NTFS 응용 프로그램은 정규 강의 외에 중요한 메모를 저장하려는 학생들에게 특히 유용합니다. 이 기사에서는 Ubuntu24에 Ubuntu 애플리케이션을 설치하는 방법을 설명합니다. Ubuntu 시스템 업데이트 Ubuntu 설치 프로그램을 설치하기 전에 Ubuntu24에서 새로 구성된 시스템이 업데이트되었는지 확인해야 합니다. 우분투 시스템에서 가장 유명한 "a"를 사용할 수 있습니다

Win7 컴퓨터에 Go 언어를 설치하는 자세한 단계

Mar 27, 2024 pm 02:00 PM

Win7 컴퓨터에 Go 언어를 설치하는 자세한 단계

Mar 27, 2024 pm 02:00 PM

Win7 컴퓨터에 Go 언어를 설치하는 세부 단계 Go(Golang이라고도 함)는 Google에서 개발한 오픈 소스 프로그래밍 언어로, 간단하고 효율적이며 뛰어난 동시성 성능을 갖추고 있으며 클라우드 서비스, 네트워크 애플리케이션 및 개발에 적합합니다. 백엔드 시스템. Win7 컴퓨터에 Go 언어를 설치하면 언어를 빠르게 시작하고 Go 프로그램 작성을 시작할 수 있습니다. 다음은 Win7 컴퓨터에 Go 언어를 설치하는 단계를 자세히 소개하고 특정 코드 예제를 첨부합니다. 1단계: Go 언어 설치 패키지를 다운로드하고 Go 공식 웹사이트를 방문하세요.

Win7 시스템에서 Go 언어를 설치하는 방법은 무엇입니까?

Mar 27, 2024 pm 01:42 PM

Win7 시스템에서 Go 언어를 설치하는 방법은 무엇입니까?

Mar 27, 2024 pm 01:42 PM

Win7 시스템에서 Go 언어를 설치하는 것은 비교적 간단한 작업입니다. 성공적으로 설치하려면 다음 단계를 따르세요. 다음은 Win7 시스템에서 Go 언어를 설치하는 방법을 자세히 소개합니다. 1단계: Go 언어 설치 패키지를 다운로드합니다. 먼저 Go 언어 공식 웹사이트(https://golang.org/)를 열고 다운로드 페이지로 들어갑니다. 다운로드 페이지에서 Win7 시스템과 호환되는 설치 패키지 버전을 선택하여 다운로드하세요. 다운로드 버튼을 클릭하고 설치 패키지가 다운로드될 때까지 기다립니다. 2단계: Go 언어 설치