물체 감지에 Yolo V12를 사용하는 방법은 무엇입니까?

YOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy while maintaining real-time processing speeds. This article explores the key innovations in YOLO v12, highlighting how it surpasses the previous versions while minimizing computational costs without compromising detection efficiency.

Table of contents

- What’s New in YOLO v12?

- Key Improvements Over Previous Versions

- Computational Efficiency Enhancements

- YOLO v12 Model Variants

- Let’s compare YOLO v11 and YOLO v12 Models

- Expert Opinions on YOLOv11 and YOLOv12

- Conclusion

What’s New in YOLO v12?

Previously, YOLO models relied on Convolutional Neural Networks (CNNs) for object detection due to their speed and efficiency. However, YOLO v12 makes use of attention mechanisms, a concept widely known and used in Transformer models which allow it to recognize patterns more effectively. While attention mechanisms have originally been slow for real-time object detection, YOLO v12 somehow successfully integrates them while maintaining YOLO’s speed, leading to an Attention-Centric YOLO framework.

Key Improvements Over Previous Versions

1. Attention-Centric Framework

YOLO v12 combines the power of attention mechanisms with CNNs, resulting in a model that is both faster and more accurate. Unlike its predecessors which relied solely on CNNs, YOLO v12 introduces optimized attention modules to improve object recognition without adding unnecessary latency.

2. Superior Performance Metrics

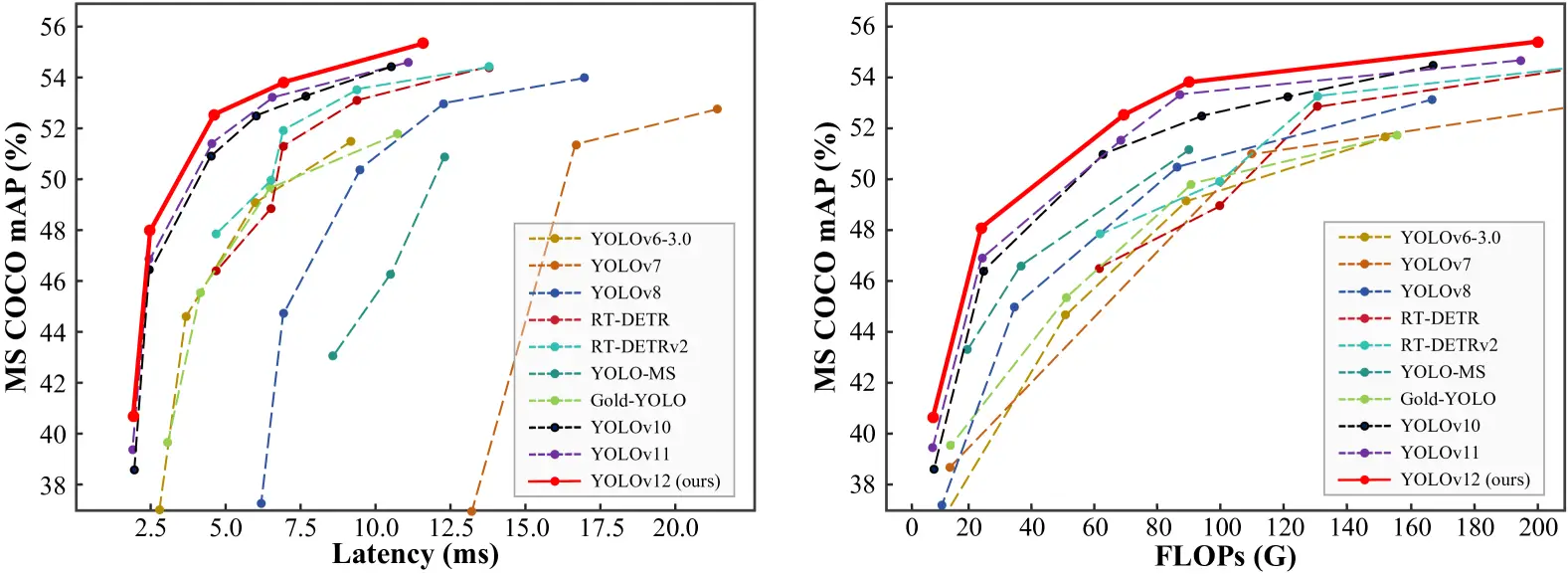

Comparing performance metrics across different YOLO versions and real-time detection models reveals that YOLO v12 achieves higher accuracy while maintaining low latency.

- The mAP (Mean Average Precision) values on datasets like COCO show YOLO v12 outperforming YOLO v11 and YOLO v10 while maintaining comparable speed.

- The model achieves a remarkable 40.6% accuracy (mAP) while processing images in just 1.64 milliseconds on an Nvidia T4 GPU. This performance is superior to YOLO v10 and YOLO v11 without sacrificing speed.

3. Outperforming Non-YOLO Models

YOLO v12 surpasses previous YOLO versions; it also outperforms other real-time object detection frameworks, such as RT-Det and RT-Det v2. These alternative models have higher latency yet fail to match YOLO v12’s accuracy.

Computational Efficiency Enhancements

One of the major concerns with integrating attention mechanisms into YOLO models was their high computational cost (Attention Mechanism) and memory inefficiency. YOLO v12 addresses these issues through several key innovations:

1. Flash Attention for Memory Efficiency

Traditional attention mechanisms consume a large amount of memory, making them impractical for real-time applications. YOLO v12 introduces Flash Attention, a technique that reduces memory consumption and speeds up inference time.

2. Area Attention for Lower Computation Cost

To further optimize efficiency, YOLO v12 employs Area Attention, which focuses only on relevant regions of an image instead of processing the entire feature map. This technique dramatically reduces computation costs while retaining accuracy.

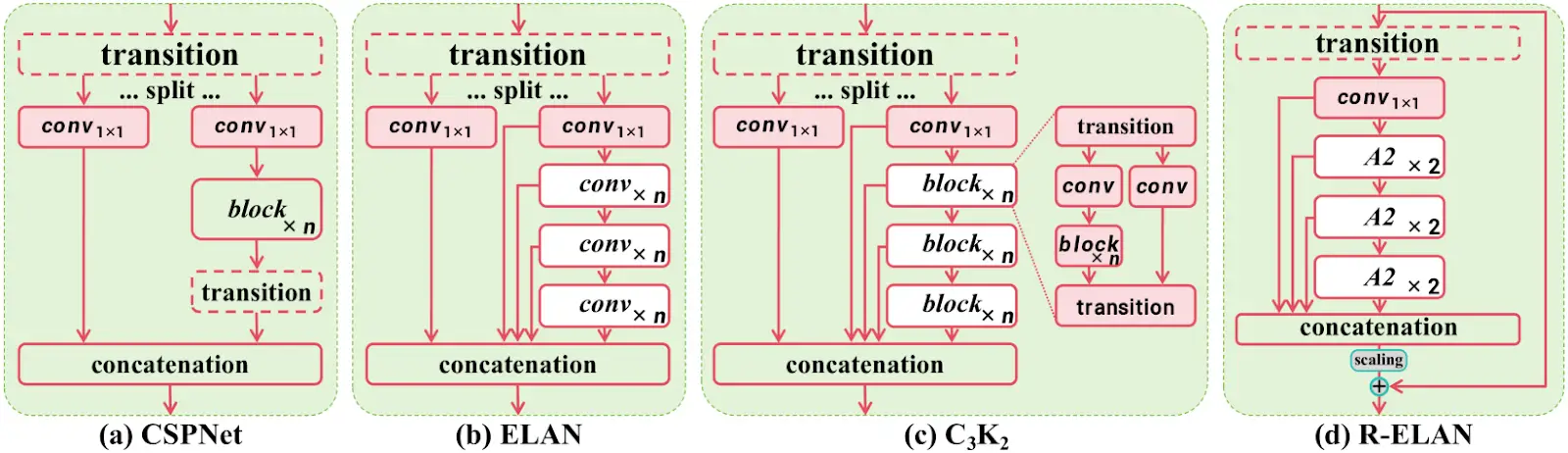

3. R-ELAN for Optimized Feature Processing

YOLO v12 also introduces R-ELAN (Re-Engineered ELAN), which optimizes feature propagation making the model more efficient in handling complex object detection tasks without increasing computational demands.

YOLO v12 Model Variants

YOLO v12 comes in five different variants, catering to different applications:

- N (Nano) & S (Small): Designed for real-time applications where speed is crucial.

- M (Medium): Balances accuracy and speed, suitable for general-purpose tasks.

- L (Large) & XL (Extra Large): Optimized for high-precision tasks where accuracy is prioritized over speed.

Also read:

- A Step-by-Step Introduction to the Basic Object Detection Algorithms (Part 1)

- A Practical Implementation of the Faster R-CNN Algorithm for Object Detection (Part 2)

- A Practical Guide to Object Detection using the Popular YOLO Framework – Part III (with Python codes)

Let’s compare YOLO v11 and YOLO v12 Models

We’ll be experimenting with YOLO v11 and YOLO v12 small models to understand their performance across various tasks like object counting, heatmaps, and speed estimation.

1. Object Counting

YOLO v11

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("highway.mp4")

assert cap.isOpened(), "Error reading video file"

w, h, fps = (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)), int(cap.get(cv2.CAP_PROP_FPS)))

# Define region points

region_points = [(20, 1500), (1080, 1500), (1080, 1460), (20, 1460)] # Lower rectangle region counting

# Video writer (MP4 format)

video_writer = cv2.VideoWriter("object_counting_output.mp4", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Init ObjectCounter

counter = solutions.ObjectCounter(

show=False, # Disable internal window display

region=region_points,

model="yolo11s.pt",

)

# Process video

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

im0 = counter.count(im0)

# Resize to fit screen (optional — scale down for large videos)

im0_resized = cv2.resize(im0, (640, 360)) # Adjust resolution as needed

# Show the resized frame

cv2.imshow("Object Counting", im0_resized)

video_writer.write(im0)

# Press 'q' to exit

if cv2.waitKey(1) & 0xFF == ord('q'):

break

cap.release()

video_writer.release()

cv2.destroyAllWindows()Output

YOLO v12

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("highway.mp4")

assert cap.isOpened(), "Error reading video file"

w, h, fps = (int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)), int(cap.get(cv2.CAP_PROP_FPS)))

# Define region points

region_points = [(20, 1500), (1080, 1500), (1080, 1460), (20, 1460)] # Lower rectangle region counting

# Video writer (MP4 format)

video_writer = cv2.VideoWriter("object_counting_output.mp4", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Init ObjectCounter

counter = solutions.ObjectCounter(

show=False, # Disable internal window display

region=region_points,

model="yolo12s.pt",

)

# Process video

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

im0 = counter.count(im0)

# Resize to fit screen (optional — scale down for large videos)

im0_resized = cv2.resize(im0, (640, 360)) # Adjust resolution as needed

# Show the resized frame

cv2.imshow("Object Counting", im0_resized)

video_writer.write(im0)

# Press 'q' to exit

if cv2.waitKey(1) & 0xFF == ord('q'):

break

cap.release()

video_writer.release()

cv2.destroyAllWindows()Output

2. Heatmaps

YOLO v11

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("mall_arial.mp4")

assert cap.isOpened(), "Error reading video file"

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

# Video writer

video_writer = cv2.VideoWriter("heatmap_output_yolov11.mp4", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# In case you want to apply object counting + heatmaps, you can pass region points.

# region_points = [(20, 400), (1080, 400)] # Define line points

# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360)] # Define region points

# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360), (20, 400)] # Define polygon points

# Init heatmap

heatmap = solutions.Heatmap(

show=True, # Display the output

model="yolo11s.pt", # Path to the YOLO11 model file

colormap=cv2.COLORMAP_PARULA, # Colormap of heatmap

# region=region_points, # If you want to do object counting with heatmaps, you can pass region_points

# classes=[0, 2], # If you want to generate heatmap for specific classes i.e person and car.

# show_in=True, # Display in counts

# show_out=True, # Display out counts

# line_width=2, # Adjust the line width for bounding boxes and text display

)

# Process video

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

im0 = heatmap.generate_heatmap(im0)

im0_resized = cv2.resize(im0, (w, h))

video_writer.write(im0_resized)

cap.release()

video_writer.release()

cv2.destroyAllWindows()Output

YOLO v12

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("mall_arial.mp4")

assert cap.isOpened(), "Error reading video file"

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

# Video writer

video_writer = cv2.VideoWriter("heatmap_output_yolov12.mp4", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# In case you want to apply object counting + heatmaps, you can pass region points.

# region_points = [(20, 400), (1080, 400)] # Define line points

# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360)] # Define region points

# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360), (20, 400)] # Define polygon points

# Init heatmap

heatmap = solutions.Heatmap(

show=True, # Display the output

model="yolo12s.pt", # Path to the YOLO11 model file

colormap=cv2.COLORMAP_PARULA, # Colormap of heatmap

# region=region_points, # If you want to do object counting with heatmaps, you can pass region_points

# classes=[0, 2], # If you want to generate heatmap for specific classes i.e person and car.

# show_in=True, # Display in counts

# show_out=True, # Display out counts

# line_width=2, # Adjust the line width for bounding boxes and text display

)

# Process video

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

im0 = heatmap.generate_heatmap(im0)

im0_resized = cv2.resize(im0, (w, h))

video_writer.write(im0_resized)

cap.release()

video_writer.release()

cv2.destroyAllWindows()Output

3. Speed Estimation

YOLO v11

import cv2

from ultralytics import solutions

import numpy as np

cap = cv2.VideoCapture("cars_on_road.mp4")

assert cap.isOpened(), "Error reading video file"

# Capture video properties

w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

fps = int(cap.get(cv2.CAP_PROP_FPS))

# Video writer

video_writer = cv2.VideoWriter("speed_management_yolov11.mp4", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Define speed region points (adjust for your video resolution)

speed_region = [(300, h - 200), (w - 100, h - 200), (w - 100, h - 270), (300, h - 270)]

# Initialize SpeedEstimator

speed = solutions.SpeedEstimator(

show=False, # Disable internal window display

model="yolo11s.pt", # Path to the YOLO model file

region=speed_region, # Pass region points

# classes=[0, 2], # Optional: Filter specific object classes (e.g., cars, trucks)

# line_width=2, # Optional: Adjust the line width

)

# Process video

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

# Estimate speed and draw bounding boxes

out = speed.estimate_speed(im0)

# Draw the speed region on the frame

cv2.polylines(out, [np.array(speed_region)], isClosed=True, color=(0, 255, 0), thickness=2)

# Resize the frame to fit the screen

im0_resized = cv2.resize(out, (1280, 720)) # Resize for better screen fit

# Show the resized frame

cv2.imshow("Speed Estimation", im0_resized)

video_writer.write(out)

# Press 'q' to exit

if cv2.waitKey(1) & 0xFF == ord('q'):

break

cap.release()

video_writer.release()

cv2.destroyAllWindows()Output

YOLO v12

import cv2

from ultralytics import solutions

import numpy as np

cap = cv2.VideoCapture("cars_on_road.mp4")

assert cap.isOpened(), "Error reading video file"

# Capture video properties

w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

fps = int(cap.get(cv2.CAP_PROP_FPS))

# Video writer

video_writer = cv2.VideoWriter("speed_management_yolov12.mp4", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Define speed region points (adjust for your video resolution)

speed_region = [(300, h - 200), (w - 100, h - 200), (w - 100, h - 270), (300, h - 270)]

# Initialize SpeedEstimator

speed = solutions.SpeedEstimator(

show=False, # Disable internal window display

model="yolo12s.pt", # Path to the YOLO model file

region=speed_region, # Pass region points

# classes=[0, 2], # Optional: Filter specific object classes (e.g., cars, trucks)

# line_width=2, # Optional: Adjust the line width

)

# Process video

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

# Estimate speed and draw bounding boxes

out = speed.estimate_speed(im0)

# Draw the speed region on the frame

cv2.polylines(out, [np.array(speed_region)], isClosed=True, color=(0, 255, 0), thickness=2)

# Resize the frame to fit the screen

im0_resized = cv2.resize(out, (1280, 720)) # Resize for better screen fit

# Show the resized frame

cv2.imshow("Speed Estimation", im0_resized)

video_writer.write(out)

# Press 'q' to exit

if cv2.waitKey(1) & 0xFF == ord('q'):

break

cap.release()

video_writer.release()

cv2.destroyAllWindows()Output

Also Read: Top 30+ Computer Vision Models For 2025

Expert Opinions on YOLOv11 and YOLOv12

Muhammad Rizwan Munawar — Computer Vision Engineer at Ultralytics

“YOLOv12 introduces flash attention, which enhances accuracy, but it requires careful CUDA setup. It’s a solid step forward, especially for complex detection tasks, though YOLOv11 remains faster for real-time needs. In short, choose YOLOv12 for accuracy and YOLOv11 for speed.”

Linkedin Post – Is YOLOv12 really a state-of-the-art model? ?

Muhammad Rizwan, recently tested YOLOv11 and YOLOv12 side by side to break down their real-world performance. His findings highlight the trade-offs between the two models:

- Frames Per Second (FPS): YOLOv11 maintains an average of 40 FPS, while YOLOv12 lags behind at 30 FPS. This makes YOLOv11 the better choice for real-time applications where speed is critical, such as traffic monitoring or live video feeds.

- Training Time: YOLOv12 takes about 20% longer to train than YOLOv11. On a small dataset with 130 training images and 43 validation images, YOLOv11 completed training in 0.009 hours, while YOLOv12 needed 0.011 hours. While this might seem minor for small datasets, the difference becomes significant for larger-scale projects.

- Accuracy: Both models achieved similar accuracy after fine-tuning for 10 epochs on the same dataset. YOLOv12 didn’t dramatically outperform YOLOv11 in terms of accuracy, suggesting the newer model’s improvements lie more in architectural enhancements than raw detection precision.

- Flash Attention: YOLOv12 introduces flash attention, a powerful mechanism that speeds up and optimizes attention layers. However, there’s a catch — this feature isn’t natively supported on the CPU, and enabling it with CUDA requires careful version-specific setup. For teams without powerful GPUs or those working on edge devices, this can become a roadblock.

The PC specifications used for testing:

- GPU: NVIDIA RTX 3050

- CPU: Intel Core-i5-10400 @2.90GHz

- RAM: 64 GB

The model specifications:

- Model = YOLO11n.pt and YOLOv12n.pt

- Image size = 640 for inference

Conclusion

YOLO v12 marks a significant leap forward in real-time object detection, combining CNN speed with Transformer-like attention mechanisms. With improved accuracy, lower computational costs, and a range of model variants, YOLO v12 is poised to redefine the landscape of real-time vision applications. Whether for autonomous vehicles, security surveillance, or medical imaging, YOLO v12 sets a new standard for real-time object detection efficiency.

What’s Next?

- YOLO v13 Possibilities: Will future versions push the attention mechanisms even further?

- Edge Device Optimization: Can Flash Attention or Area Attention be optimized for lower-power devices?

To help you better understand the differences, I’ve attached some code snippets and output results in the comparison section. These examples illustrate how both YOLOv11 and YOLOv12 perform in real-world scenarios, from object counting to speed estimation and heatmaps. I’m excited to see how you guys perceive this new release! Are the improvements in accuracy and attention mechanisms enough to justify the trade-offs in speed? Or do you think YOLOv11 still holds its ground for most applications?

위 내용은 물체 감지에 Yolo V12를 사용하는 방법은 무엇입니까?의 상세 내용입니다. 자세한 내용은 PHP 중국어 웹사이트의 기타 관련 기사를 참조하세요!

핫 AI 도구

Undresser.AI Undress

사실적인 누드 사진을 만들기 위한 AI 기반 앱

AI Clothes Remover

사진에서 옷을 제거하는 온라인 AI 도구입니다.

Undress AI Tool

무료로 이미지를 벗다

Clothoff.io

AI 옷 제거제

Video Face Swap

완전히 무료인 AI 얼굴 교환 도구를 사용하여 모든 비디오의 얼굴을 쉽게 바꾸세요!

인기 기사

뜨거운 도구

메모장++7.3.1

사용하기 쉬운 무료 코드 편집기

SublimeText3 중국어 버전

중국어 버전, 사용하기 매우 쉽습니다.

스튜디오 13.0.1 보내기

강력한 PHP 통합 개발 환경

드림위버 CS6

시각적 웹 개발 도구

SublimeText3 Mac 버전

신 수준의 코드 편집 소프트웨어(SublimeText3)

Meta Llama 3.2- 분석 Vidhya를 시작합니다

Apr 11, 2025 pm 12:04 PM

Meta Llama 3.2- 분석 Vidhya를 시작합니다

Apr 11, 2025 pm 12:04 PM

메타의 라마 3.2 : 멀티 모달 및 모바일 AI의 도약 Meta는 최근 AI에서 강력한 비전 기능과 모바일 장치에 최적화 된 가벼운 텍스트 모델을 특징으로하는 AI의 상당한 발전 인 Llama 3.2를 공개했습니다. 성공을 바탕으로 o

10 생성 AI 코드의 생성 AI 코딩 확장 대 코드를 탐색해야합니다.

Apr 13, 2025 am 01:14 AM

10 생성 AI 코드의 생성 AI 코딩 확장 대 코드를 탐색해야합니다.

Apr 13, 2025 am 01:14 AM

이봐, 코딩 닌자! 하루 동안 어떤 코딩 관련 작업을 계획 했습니까? 이 블로그에 더 자세히 살펴보기 전에, 나는 당신이 당신의 모든 코딩 관련 문제에 대해 생각하기를 원합니다. 완료? - ’

직원에게 AI 전략 판매 : Shopify CEO의 선언문

Apr 10, 2025 am 11:19 AM

직원에게 AI 전략 판매 : Shopify CEO의 선언문

Apr 10, 2025 am 11:19 AM

Shopify CEO Tobi Lütke의 최근 메모는 AI 숙련도가 모든 직원에 대한 근본적인 기대를 대담하게 선언하여 회사 내에서 중요한 문화적 변화를 표시합니다. 이것은 도망가는 트렌드가 아닙니다. 그것은 p에 통합 된 새로운 운영 패러다임입니다

AV 바이트 : Meta ' S Llama 3.2, Google의 Gemini 1.5 등

Apr 11, 2025 pm 12:01 PM

AV 바이트 : Meta ' S Llama 3.2, Google의 Gemini 1.5 등

Apr 11, 2025 pm 12:01 PM

이번 주 AI 환경 : 발전의 회오리 바람, 윤리적 고려 사항 및 규제 토론. OpenAi, Google, Meta 및 Microsoft와 같은 주요 플레이어

GPT-4O vs Openai O1 : 새로운 OpenAI 모델은 과대 광고 가치가 있습니까?

Apr 13, 2025 am 10:18 AM

GPT-4O vs Openai O1 : 새로운 OpenAI 모델은 과대 광고 가치가 있습니까?

Apr 13, 2025 am 10:18 AM

소개 OpenAi는 기대가 많은 "Strawberry"아키텍처를 기반으로 새로운 모델을 출시했습니다. O1로 알려진이 혁신적인 모델은 추론 기능을 향상시켜 문제를 통해 생각할 수 있습니다.

비전 언어 모델 (VLMS)에 대한 포괄적 인 안내서

Apr 12, 2025 am 11:58 AM

비전 언어 모델 (VLMS)에 대한 포괄적 인 안내서

Apr 12, 2025 am 11:58 AM

소개 생생한 그림과 조각으로 둘러싸인 아트 갤러리를 걷는 것을 상상해보십시오. 이제 각 작품에 질문을하고 의미있는 대답을 얻을 수 있다면 어떨까요? “어떤 이야기를하고 있습니까?

SQL에서 열을 추가하는 방법? - 분석 Vidhya

Apr 17, 2025 am 11:43 AM

SQL에서 열을 추가하는 방법? - 분석 Vidhya

Apr 17, 2025 am 11:43 AM

SQL의 Alter Table 문 : 데이터베이스에 열을 동적으로 추가 데이터 관리에서 SQL의 적응성이 중요합니다. 데이터베이스 구조를 즉시 조정해야합니까? Alter Table 문은 솔루션입니다. 이 안내서는 Colu를 추가합니다

AI Index 2025 읽기 : AI는 친구, 적 또는 부조종사입니까?

Apr 11, 2025 pm 12:13 PM

AI Index 2025 읽기 : AI는 친구, 적 또는 부조종사입니까?

Apr 11, 2025 pm 12:13 PM

Stanford University Institute for Human-Oriented Intificial Intelligence가 발표 한 2025 인공 지능 지수 보고서는 진행중인 인공 지능 혁명에 대한 훌륭한 개요를 제공합니다. 인식 (무슨 일이 일어나고 있는지 이해), 감사 (혜택보기), 수용 (얼굴 도전) 및 책임 (우리의 책임 찾기)의 네 가지 간단한 개념으로 해석합시다. 인지 : 인공 지능은 어디에나 있고 빠르게 발전하고 있습니다 인공 지능이 얼마나 빠르게 발전하고 확산되고 있는지 잘 알고 있어야합니다. 인공 지능 시스템은 끊임없이 개선되어 수학 및 복잡한 사고 테스트에서 우수한 결과를 얻고 있으며 1 년 전만해도 이러한 테스트에서 비참하게 실패했습니다. AI 복잡한 코딩 문제 또는 대학원 수준의 과학적 문제를 해결한다고 상상해보십시오-2023 년 이후