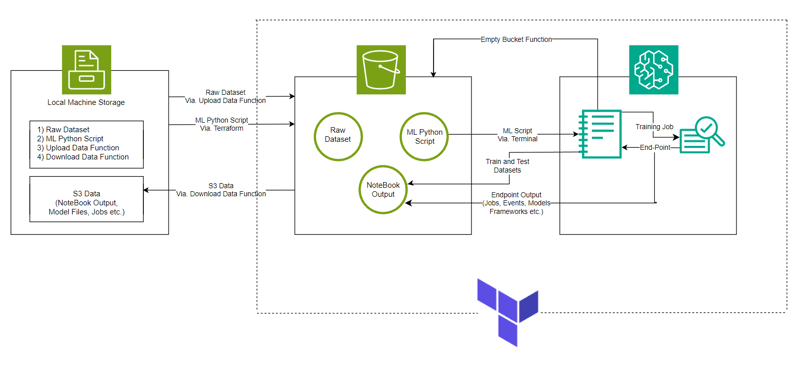

A machine learning model built with AWS SageMaker and its Python SDK for classifying HDFS logs, with Terraform automating the infrastructure setup.

Link: GitHub

Languages: HCL (Terraform), Python

    import boto3
    import sagemaker
    from sagemaker.amazon.amazon_estimator import get_image_uri

    # Looks up the XGBoost image URI for the current region. Specify the repo_version depending on preference.
    container = get_image_uri(boto3.Session().region_name,
                              'xgboost',
                              repo_version='1.0-1')

    hyperparameters = {
        "max_depth": "5",  # Maximum depth of a tree. Higher means more complex models but a greater risk of overfitting.
        "eta": "0.2",  # Learning rate. Lower values make learning slower but more conservative.
        "gamma": "4",  # Minimum loss reduction required to make a further partition on a leaf node. Controls the model's complexity.
        "min_child_weight": "6",  # Minimum sum of instance weight (hessian) needed in a child. Higher values help prevent overfitting.
        "subsample": "0.7",  # Fraction of training rows sampled for each boosting round. Reduces overfitting.
        "objective": "binary:logistic",  # Learning task and objective. binary:logistic is for binary classification and outputs a probability.
        "num_round": 50  # Number of boosting rounds, i.e. how many trees are built in sequence.
    }

    # A SageMaker estimator that calls the xgboost-container.
    # output_path, s3_input_train and s3_input_test are defined elsewhere in the notebook.
    # The train_* argument names come from SageMaker Python SDK v1; v2 renames them to
    # instance_count, instance_type, volume_size, use_spot_instances, max_run and max_wait.
    estimator = sagemaker.estimator.Estimator(
        image_uri=container,  # Points to the XGBoost container retrieved above. Tells SageMaker which algorithm container to use.
        hyperparameters=hyperparameters,  # Passes the hyperparameters defined above to the training job.
        role=sagemaker.get_execution_role(),  # IAM role that SageMaker assumes during training. It grants access to AWS resources such as S3.
        train_instance_count=1,  # Number of training instances. Here, a single instance.
        train_instance_type='ml.m5.large',  # Instance type used for training. ml.m5.large is a general-purpose instance balancing compute, memory, and network resources.
        train_volume_size=5,  # Size of the storage volume attached to the training instance, in GB.
        output_path=output_path,  # S3 location where the model artifacts and training output are saved.
        train_use_spot_instances=True,  # Uses spot instances (spare EC2 capacity), which can be significantly cheaper than on-demand instances.
        train_max_run=300,  # Maximum runtime of the training job in seconds (5 minutes).
        train_max_wait=600)  # Maximum total time in seconds (10 minutes), including time spent waiting for spot capacity.

    # Train on the 'train' channel and evaluate on the 'validation' channel.
    estimator.fit({'train': s3_input_train, 'validation': s3_input_test})

    # Deploy the trained model to a real-time endpoint.
    xgb_predictor = estimator.deploy(initial_instance_count=1, instance_type='ml.m5.large')
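To make the `binary:logistic` objective more concrete, here is a minimal sketch of how a raw score becomes a probability and then a class label. The function names, the sample margins and the 0.5 threshold are illustrative assumptions and are not taken from the project's notebook.

```python
import math


def sigmoid(margin: float) -> float:
    """Map a raw boosting score (margin) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-margin))


def to_label(probability: float, threshold: float = 0.5) -> int:
    """Turn a probability into a binary class label (threshold is an assumption)."""
    return 1 if probability >= threshold else 0


# Hypothetical raw scores for three records.
margins = [-2.0, 0.0, 3.0]
probabilities = [sigmoid(m) for m in margins]
labels = [to_label(p) for p in probabilities]

for margin, probability, label in zip(margins, probabilities, labels):
    print(f"margin={margin:+.1f} probability={probability:.3f} label={label}")
```

A different threshold may suit the data better when the two classes are imbalanced, so 0.5 should be treated as a starting point rather than a fixed choice.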

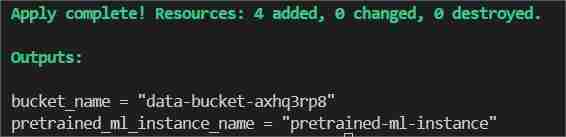

In the terminal, type/paste terraform init to initialize the backend.

Then type/paste terraform plan to view the plan, or simply terraform validate to make sure there are no errors.

Finally, in the terminal, type/paste terraform apply --auto-approve
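The same command sequence could also be driven from a script. The sketch below is one possible way to do that, assuming the `terraform` binary is on the PATH; the helper names `terraform_steps` and `run_steps` are hypothetical and not part of the project.

```python
import subprocess
from typing import List


def terraform_steps(auto_approve: bool = True) -> List[List[str]]:
    """Build the Terraform commands in the order described above."""
    apply_cmd = ["terraform", "apply"]
    if auto_approve:
        # Skips the interactive confirmation prompt.
        apply_cmd.append("--auto-approve")
    return [
        ["terraform", "init"],
        ["terraform", "validate"],
        ["terraform", "plan"],
        apply_cmd,
    ]


def run_steps(steps: List[List[str]], workdir: str = ".") -> None:
    """Run each command in workdir and stop at the first failure."""
    for cmd in steps:
        subprocess.run(cmd, cwd=workdir, check=True)


# Only print the commands here; call run_steps(terraform_steps()) to execute them.
for cmd in terraform_steps():
    print(" ".join(cmd))
```

Passing `check=True` makes a failed `init` or `validate` raise an exception, so `apply` is never reached with a broken configuration.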

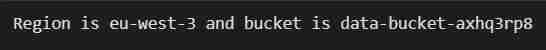

This will show two outputs, one named bucket_name and the other pretrained_ml_instance_name (the 3rd resource is the variable name given to the bucket, since it is a global resource).
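If the two output values are needed in a script, they can be read from the JSON printed by `terraform output -json`. The sketch below parses a hard-coded sample of that JSON shape; the sample values and the helper name `parse_terraform_outputs` are placeholders, not the project's real output.

```python
import json
from typing import Any, Dict


def parse_terraform_outputs(output_json: str) -> Dict[str, Any]:
    """Reduce `terraform output -json` text to a {name: value} mapping."""
    raw = json.loads(output_json)
    return {name: entry["value"] for name, entry in raw.items()}


# Hypothetical sample; real values come from running `terraform output -json`.
SAMPLE_OUTPUT = """
{
  "bucket_name": {"sensitive": false, "type": "string", "value": "example-bucket-name"},
  "pretrained_ml_instance_name": {"sensitive": false, "type": "string", "value": "example-instance-name"}
}
"""

outputs = parse_terraform_outputs(SAMPLE_OUTPUT)
print(outputs["bucket_name"])
print(outputs["pretrained_ml_instance_name"])
```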

and change it to the path where the project directory is located, then save it.

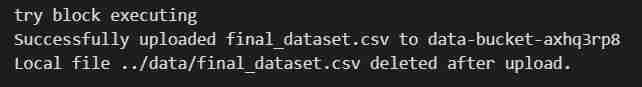

to upload the dataset to the S3 bucket.

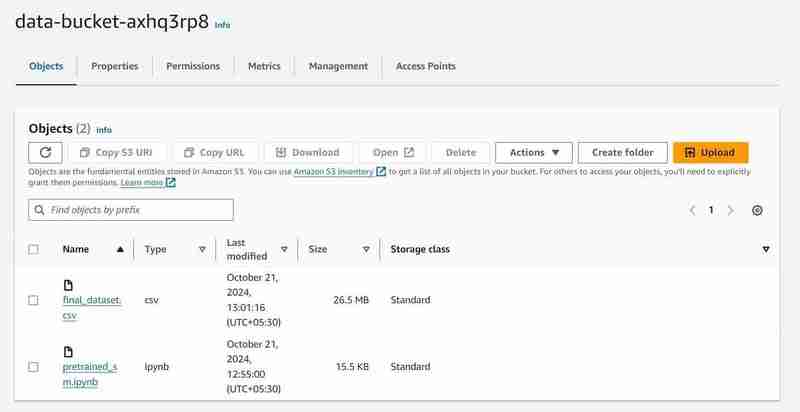

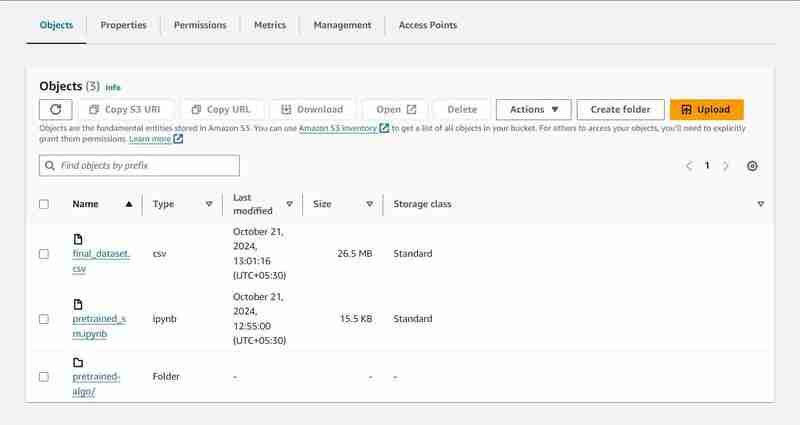

S3 bucket named 'data-bucket-' with 2 objects uploaded: the dataset and the pretrained_sm.ipynb file containing the model code.
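The upload can also be checked programmatically instead of through the console. The sketch below lists object keys through any client that exposes boto3's `list_objects_v2` call; it uses a small fake client so it runs without AWS credentials. The bucket name `data-bucket-example` and the key `dataset.csv` are hypothetical.

```python
from typing import Any, List


def list_object_keys(s3_client: Any, bucket: str) -> List[str]:
    """Return the keys of the objects in bucket (first page of results only)."""
    response = s3_client.list_objects_v2(Bucket=bucket)
    # S3 omits "Contents" when the bucket is empty.
    return [obj["Key"] for obj in response.get("Contents", [])]


class FakeS3Client:
    """Stand-in for boto3.client("s3") so the sketch runs without AWS access."""

    def __init__(self, keys: List[str]) -> None:
        self._keys = keys

    def list_objects_v2(self, Bucket: str) -> dict:
        return {
            "KeyCount": len(self._keys),
            "Contents": [{"Key": key} for key in self._keys],
        }


# Hypothetical bucket name and dataset key, for illustration only.
client = FakeS3Client(["dataset.csv", "pretrained_sm.ipynb"])
keys = list_object_keys(client, "data-bucket-example")
print(keys)
```

Against a real account, `boto3.client("s3")` would be passed in place of `FakeS3Client`.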

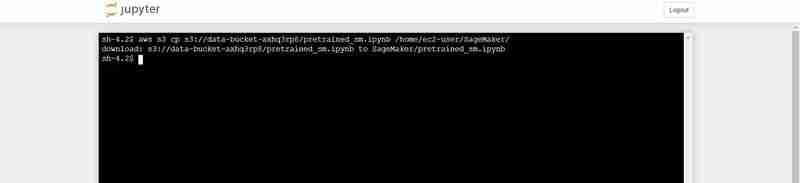

Terminal command to upload pretrained_sm.ipynb from S3 to the Jupyter Notebook environment

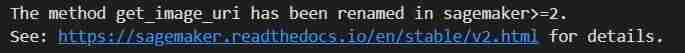

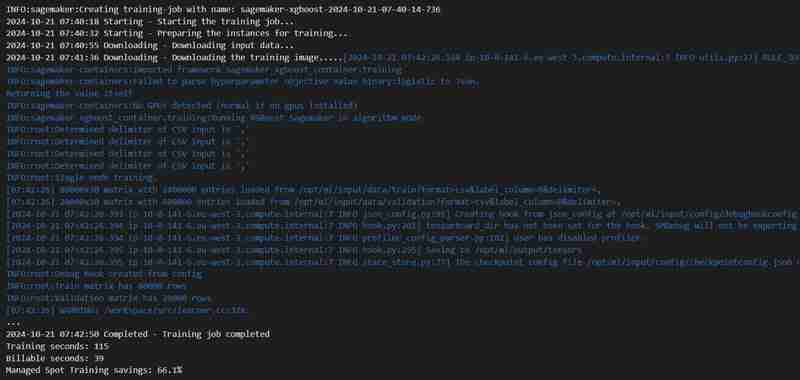

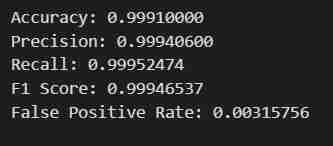

Output of the code cell execution

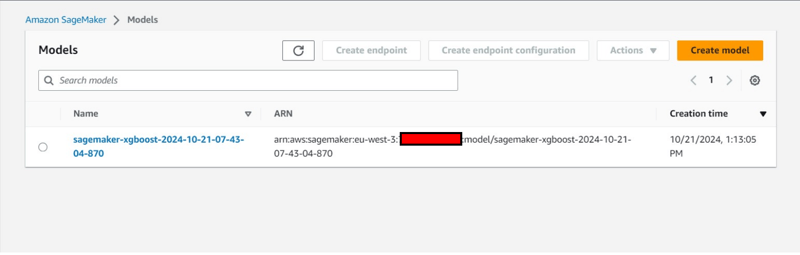

Execution of the 8th cell

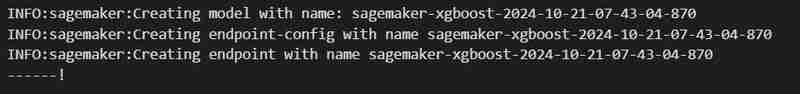

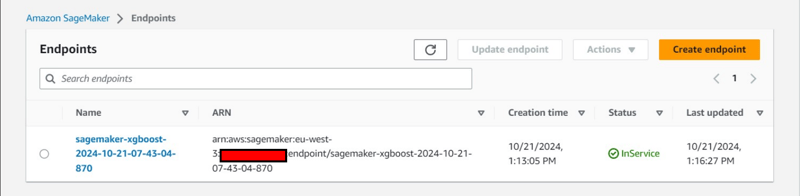

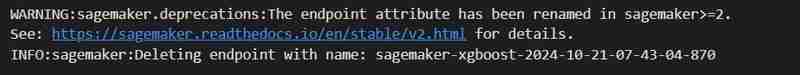

Execution of the 23rd cell

Execution of the 24th code cell

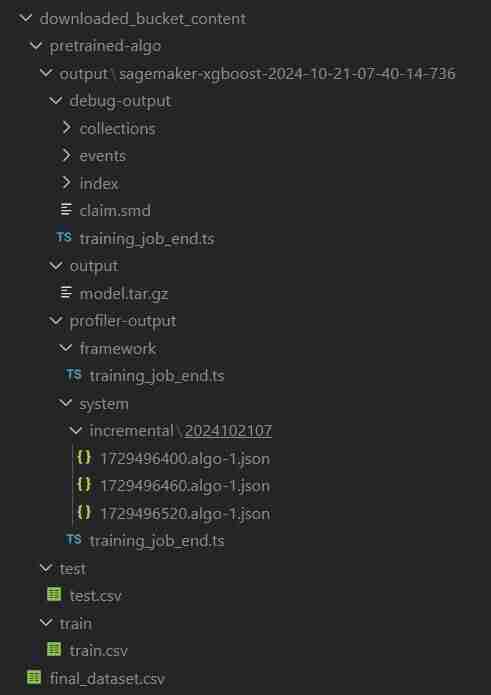

Additional Console Observations:

ClassiSage/downloaded_bucket_content

ClassiSage/.terraform

ClassiSage/ml_ops/__pycache__

ClassiSage/.terraform.lock.hcl

ClassiSage/terraform.tfstate

ClassiSage/terraform.tfstate.backup

NOTE:

If you liked the idea and implementation of this machine learning project, which uses AWS S3 and SageMaker for HDFS log classification and Terraform for IaC (infrastructure setup automation), please consider liking this post and starring the project repository on GitHub after checking it out.
