Q&A: Even More Deadly Mistakes of MySQL Development

On Wednesday I gave a presentation on “How to Avoid Even More Common (but Deadly) MySQL Development Mistakes” for Percona MySQL Webinars. If you missed it, you can still register to view the recording and my slides.

Thanks to everyone who attended, and especially to folks who asked the great questions. I answered as many as we had time for during the session, but here are all the questions with my complete answers:

Q: Disk bandwidth also not infinite

Indeed, you’re right!

We discussed in the webinar the impact on network bandwidth from using column wildcards in queries like SELECT *, but it’s also possible that using SELECT * can impact disk operations. Varchar, Blob, or Text columns can be stored on extra pages in the database, and if you include those columns in your query needlessly, it can cause the storage engine to do a lot of seeks and page reads unnecessarily.

For more details on string storage in InnoDB, see Peter Zaitsev’s blog on Blob Storage in InnoDB.
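As a minimal sketch of the difference, assume a hypothetical `articles` table (not from the webinar) with a long `body` column. The table name, column names, and search value are all made up for illustration.

```sql
-- Hypothetical table for illustration only.
CREATE TABLE articles (
  id     INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
  title  VARCHAR(200) NOT NULL,
  author VARCHAR(100) NOT NULL,
  body   LONGTEXT     NOT NULL,  -- long values may be stored on extra pages
  KEY idx_author (author)
) ENGINE=InnoDB;

-- Requests every column, including body, so the storage engine may
-- have to read the extra pages for each matching row.
SELECT * FROM articles WHERE author = 'bill';

-- Requests only the columns the application needs, so the long body
-- values need not be read or sent over the network.
SELECT id, title FROM articles WHERE author = 'bill';
```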

Q: How many tables can be joined in a single query? What is the optimal number of joins?

The MySQL documentation gives a limit of 61 table references in a single join. This limits how many JOIN operations you can do, and also limits the number of UNIONs. Actually you can go over this limit if your JOIN or UNION doesn’t reference any tables, that is, if it creates a derived table of one row of expressions.

If you do join a lot of tables (or even self-join the same table many times), you’re likely to hit a practical scaling limit long before you reach 61 table references. The practical limit in your case depends on many factors, including the size of the tables, the data types, the type of join expressions in your queries, and your physical server’s capabilities. It’s not a fixed limit I can cite for you.

If you think you need dozens of table references in a single query, you should probably step back and reconsider your database design or your query design.

I often see this type of question (“what is the limit on the number of joins?”) when people try to use key/value tables, also called Entity-Attribute-Value, and they’re trying to pivot attributes from rows into columns, as if the table were stored in a conventional way with one column per attribute. This is a broken design for many reasons, and the scalability of many-way joins is just one problem with it.
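To illustrate why this design runs into join limits, here is a sketch of the pivot query it forces. The `product_attributes` table and its attribute names are hypothetical, chosen only to show the shape of the problem.

```sql
-- Hypothetical key/value (EAV) table for illustration only.
CREATE TABLE product_attributes (
  product_id INT UNSIGNED NOT NULL,
  attr_name  VARCHAR(50)  NOT NULL,
  attr_value VARCHAR(255),
  PRIMARY KEY (product_id, attr_name)
) ENGINE=InnoDB;

-- Pivoting three attributes into columns takes three table references.
-- Every additional attribute adds another self-join.
SELECT c.product_id,
       c.attr_value AS color,
       m.attr_value AS material,
       w.attr_value AS weight
FROM product_attributes AS c
LEFT JOIN product_attributes AS m
       ON m.product_id = c.product_id AND m.attr_name = 'material'
LEFT JOIN product_attributes AS w
       ON w.product_id = c.product_id AND w.attr_name = 'weight'
WHERE c.attr_name = 'color';
```

With a conventional table that has one column per attribute, the same result would be a single-table SELECT with no joins at all.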

Q: How many indexes can be created in a single table? Any limitation? What is the optimal number of indexes?

All MySQL storage engines support at least 16 indexes per table.

As for the optimal number of indexes, I don’t pay attention to the count (as long as it stays within the storage engine’s maximum). I try to make sure I have the right indexes for my queries. If you put an arbitrary cap of, for example, 8 or 10 indexes on a given table, then you might be running queries that lack a needed index, and the unnecessary extra cost of running those queries is probably greater than the cost of maintaining the one extra index they need.

That said, there are cases where you have such variation in query types that there’s no way to have optimal indexes to cover every possible case. Given that you can have multi-column indexes, and multi-column indexes with columns in different orders, there are at least n-factorial possible indexes on a table with n columns.

Q: There is a table with 3 columns: id (int), user_id (int), day (date). There is a high chance the same user_id will ‘exist’ for every day. I read data by “where user_id = some_id” (very high throughput) and delete all entries once a day by cron using “where sent_date = '2014-01-01'”. With approx. 6M rows per day, deletion is pretty painful. Will partitioning by column ‘day’ help me delete those bulks faster? If yes, how much faster? How much will it slow down SELECTs? Not all entries are deleted, only entries for some specific old day, e.g. “WHERE day = '1 week ago'”.

Range partitioning by date would give you the opportunity to ALTER TABLE…DROP PARTITION, so you could remove all data for a given date very quickly, much faster than deleting millions of rows. The performance of DROP PARTITION is like that of DROP TABLE, because each partition is physically stored like a separate table.

Searching for “where user_id = ?” would not be able to take advantage of partition pruning, but it would still be able to use an index on user_id. And if you drop old partitions, the benefit of searching a smaller table could be a good tradeoff.
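Here is a minimal sketch of what that could look like for the table in the question. The table name `user_days`, the partition names, and the index name are assumptions, and the dates are only examples. Note that MySQL requires the partitioning column to be part of every unique key, so the primary key has to include `day`.

```sql
-- Hypothetical table name; the columns follow the question.
CREATE TABLE user_days (
  id      INT UNSIGNED NOT NULL AUTO_INCREMENT,
  user_id INT UNSIGNED NOT NULL,
  day     DATE         NOT NULL,
  -- The partitioning column must appear in every unique key,
  -- including the primary key.
  PRIMARY KEY (id, day),
  KEY idx_user_id (user_id)
) ENGINE=InnoDB
PARTITION BY RANGE COLUMNS (day) (
  PARTITION p20140101 VALUES LESS THAN ('2014-01-02'),
  PARTITION p20140102 VALUES LESS THAN ('2014-01-03'),
  PARTITION p20140103 VALUES LESS THAN ('2014-01-04')
);

-- Add a partition for each new day before its rows arrive.
ALTER TABLE user_days ADD PARTITION (
  PARTITION p20140104 VALUES LESS THAN ('2014-01-05')
);

-- Remove one whole day of rows without a row-by-row DELETE.
ALTER TABLE user_days DROP PARTITION p20140101;

-- No partition pruning here, because the WHERE clause does not mention
-- day, but each partition can still be searched through idx_user_id.
SELECT id, user_id, day FROM user_days WHERE user_id = 42;
```

A cron job would need to run the ADD PARTITION step ahead of time, since an insert for a day with no matching partition fails in this layout.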

Q: Regarding 20% selectivity as a threshold for the optimizer preferring a table-scan to an index lookup – is that a tunable?

No, it’s not tunable; it’s a fixed behavior of the query optimizer. If you search for a value and the optimizer estimates that more than about 20% of rows contain that value, it will bypass the index and just do a table-scan.

This is for the same reason that the index of a book doesn’t contain very common words: the list of pages that word appears on would be too long, and flipping back and forth from the back of the book to each listed page would actually be more work than just reading the book.

Also keep in mind my figure of 20% is approximate. Your results may vary. This is not a magic threshold in the source code, it’s just a tendency I have observed.
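You can observe this tendency on your own data with EXPLAIN. The sketch below assumes a hypothetical `tickets` table in which most rows have status 'open' and very few have status 'escalated'. The plans mentioned in the comments are what I would expect with that distribution, not guaranteed output.

```sql
-- Hypothetical table for illustration only.
CREATE TABLE tickets (
  id      INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
  status  VARCHAR(20)  NOT NULL,
  summary VARCHAR(200) NOT NULL,
  KEY idx_status (status)
) ENGINE=InnoDB;

-- Common value: the optimizer is likely to report type: ALL
-- (a table-scan) even though idx_status exists.
EXPLAIN SELECT id, summary FROM tickets WHERE status = 'open';

-- Rare value: the optimizer is likely to report type: ref
-- with key: idx_status.
EXPLAIN SELECT id, summary FROM tickets WHERE status = 'escalated';

-- The threshold itself is not a setting, but an index hint lets you
-- override the optimizer's choice for one query at a time.
EXPLAIN SELECT id, summary FROM tickets FORCE INDEX (idx_status)
WHERE status = 'open';
```

Forcing the index is rarely faster for a very common value, for the reason the book analogy describes, so measure before keeping a hint in application code.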

Q: Regarding generating synthetic test data, it sounds like a pretty easy perl script to write.

Yes, it might be easy to do that for one given table. But every table is different, and you might have hundreds of tables in dozens of applications to generate test data for. You might also want to vary the distribution of data values from one test to another.

Writing a test-data generator for one particular case is easy, so you might reasonably do it as a one-off task. Writing a general-purpose test-data generator that you can use for many cases is more work.

Q: Would love to have the set of URLs cited in the presentation without having to go back and mine them out of the presentation.

Open source message queues:

- Redis (Resque)

- OpenMQ

- ActiveMQ

- RabbitMQ

- Gearman

- Beanstalk

- Kafka

- Kestrel

MySQL Performance Blog articles:

- Exploring Message Brokers

- Kiss Kiss Kiss (keep it simple)

- Why you don’t want to shard

Open source test-data generator:

- Databene Benerator

Load-testing tools for web applications:

- JMeter

- Siege

Load-testing tools to replay query logs:

- Percona Playback

- pt-log-player

Further reading for implementing business rules:

- Real-World Rules Engines

- The Enterprise Rules Engine (a warning)

- Drools, the business logic integration platform

Q: How to best use mysql query cache?

Any cache is best used if you read from it many times for each time you write to it. So we’d like to estimate the average ratio of query cache reads to writes, to estimate how much leverage it’s giving us.

mysql> SHOW GLOBAL STATUS LIKE 'Qcache%';

Check the value of Qcache_hits (cases when a query result was read from the query cache) relative to Qcache_inserts (cases when the desired query result was not in the cache, so the query had to be run and its result stored in the cache). I like to see a ratio of 1000% or more (i.e. 10:1 hits to inserts).

If you have a poor ratio, for example less than 1:1 or less than 100%, then consider disabling the query cache, because it may be costing more to maintain it than the performance benefit it’s giving you.

Keep in mind that this is only a guideline, because the calculation I described is only an average. It could be that the queries served by the query cache are very expensive, so using the cached result is a great benefit even if it accounts for a small number of hits. The only way to be certain is to load-test your application under your load, and compare overall performance results with the query cache enabled or disabled, and at different sizes.
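If you would rather have the server compute the ratio, here is a sketch that assumes MySQL 5.7, where status counters are exposed in performance_schema.global_status. On 5.6 and earlier, the same columns are available in information_schema.GLOBAL_STATUS. The query cache was removed in MySQL 8.0, so none of this applies there. The column aliases are my own.

```sql
-- Ratio of query cache hits to inserts, as a percentage.
-- NULLIF avoids a division-by-zero error when nothing has been inserted yet.
SELECT h.VARIABLE_VALUE AS hits,
       i.VARIABLE_VALUE AS inserts,
       ROUND(100 * h.VARIABLE_VALUE / NULLIF(i.VARIABLE_VALUE, 0)) AS hit_to_insert_pct
FROM performance_schema.global_status AS h
JOIN performance_schema.global_status AS i
  ON i.VARIABLE_NAME = 'Qcache_inserts'
WHERE h.VARIABLE_NAME = 'Qcache_hits';
```

Both counters accumulate since the last server restart, so the result is a long-run average and says nothing about which queries benefit.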

Q: How to detect when too much indexes start to affect performance?

Some people are reluctant to create indexes because they have been warned that indexes require synchronous updates when you INSERT, UPDATE, or DELETE rows. Some people also make the generalization that indexes harm writes but benefit reads. Neither of these is true.

Your DML operations aren’t really updating indexes in real time. InnoDB includes a feature called change buffering, which defers index updates. The change buffer is gradually merged into the index over time. That way, InnoDB can handle a big spike in traffic without it hurting throughput as much. You can monitor how much content in the change buffer remains to be merged:

mysql> SHOW GLOBAL STATUS LIKE 'Innodb_ibuf_size';

It’s also not accurate that indexes hurt writes. UPDATE and DELETE statements usually have a WHERE clause, to apply the changes to particular rows. These conditions use indexes to reduce the examined rows, just like in SELECT statements. But in UPDATE and DELETE statements, it’s even more important to use indexes, because otherwise the statement has to lock a lot of rows to ensure it locks the rows you’re changing.

So I generally say, don’t avoid indexes based only on the number of indexes you have, just make sure your indexes are being employed by the queries you run, and drop indexes that aren’t used. Here are a couple of past blog posts that show how to do this:

- Find unused indexes

- Quickly finding unused indexes (and estimating their size)
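As a rough alternative to the approaches in those posts, and not the method they describe, the following sketch assumes MySQL 5.6 or later with the Performance Schema enabled. It lists secondary indexes that have recorded no I/O since the server started.

```sql
-- Secondary indexes with no recorded reads or writes since startup.
-- Rows with index_name IS NULL represent access without any index,
-- so they are excluded, as is the primary key.
SELECT object_schema, object_name, index_name
FROM performance_schema.table_io_waits_summary_by_index_usage
WHERE index_name IS NOT NULL
  AND index_name <> 'PRIMARY'
  AND count_star = 0
  AND object_schema NOT IN ('mysql', 'performance_schema')
ORDER BY object_schema, object_name, index_name;
```

The counters reset when the server restarts, so let a full cycle of your workload run, including any weekly or monthly jobs, before treating an index on this list as safe to drop.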

Thanks again for attending my webinar! Here are some more tips:

- Check out upcoming Percona Training classes in North America and Europe.

- Join Percona and the MySQL community at our Percona Live.

- Watch more webinars from Percona in the future!

Alat AI Hot

Undresser.AI Undress

Apl berkuasa AI untuk mencipta foto bogel yang realistik

AI Clothes Remover

Alat AI dalam talian untuk mengeluarkan pakaian daripada foto.

Undress AI Tool

Gambar buka pakaian secara percuma

Clothoff.io

Penyingkiran pakaian AI

Video Face Swap

Tukar muka dalam mana-mana video dengan mudah menggunakan alat tukar muka AI percuma kami!

Artikel Panas

Alat panas

Notepad++7.3.1

Editor kod yang mudah digunakan dan percuma

SublimeText3 versi Cina

Versi Cina, sangat mudah digunakan

Hantar Studio 13.0.1

Persekitaran pembangunan bersepadu PHP yang berkuasa

Dreamweaver CS6

Alat pembangunan web visual

SublimeText3 versi Mac

Perisian penyuntingan kod peringkat Tuhan (SublimeText3)

Topik panas

1392

1392

52

52

Bilakah imbasan jadual penuh lebih cepat daripada menggunakan indeks di MySQL?

Apr 09, 2025 am 12:05 AM

Bilakah imbasan jadual penuh lebih cepat daripada menggunakan indeks di MySQL?

Apr 09, 2025 am 12:05 AM

Pengimbasan jadual penuh mungkin lebih cepat dalam MySQL daripada menggunakan indeks. Kes -kes tertentu termasuk: 1) jumlah data adalah kecil; 2) apabila pertanyaan mengembalikan sejumlah besar data; 3) Apabila lajur indeks tidak selektif; 4) Apabila pertanyaan kompleks. Dengan menganalisis rancangan pertanyaan, mengoptimumkan indeks, mengelakkan lebih banyak indeks dan tetap mengekalkan jadual, anda boleh membuat pilihan terbaik dalam aplikasi praktikal.

Terangkan keupayaan carian teks penuh InnoDB.

Apr 02, 2025 pm 06:09 PM

Terangkan keupayaan carian teks penuh InnoDB.

Apr 02, 2025 pm 06:09 PM

Keupayaan carian teks penuh InnoDB sangat kuat, yang dapat meningkatkan kecekapan pertanyaan pangkalan data dan keupayaan untuk memproses sejumlah besar data teks. 1) InnoDB melaksanakan carian teks penuh melalui pengindeksan terbalik, menyokong pertanyaan carian asas dan maju. 2) Gunakan perlawanan dan terhadap kata kunci untuk mencari, menyokong mod boolean dan carian frasa. 3) Kaedah pengoptimuman termasuk menggunakan teknologi segmentasi perkataan, membina semula indeks dan menyesuaikan saiz cache untuk meningkatkan prestasi dan ketepatan.

Bolehkah saya memasang mysql pada windows 7

Apr 08, 2025 pm 03:21 PM

Bolehkah saya memasang mysql pada windows 7

Apr 08, 2025 pm 03:21 PM

Ya, MySQL boleh dipasang pada Windows 7, dan walaupun Microsoft telah berhenti menyokong Windows 7, MySQL masih serasi dengannya. Walau bagaimanapun, perkara berikut harus diperhatikan semasa proses pemasangan: Muat turun pemasang MySQL untuk Windows. Pilih versi MySQL yang sesuai (komuniti atau perusahaan). Pilih direktori pemasangan yang sesuai dan set aksara semasa proses pemasangan. Tetapkan kata laluan pengguna root dan simpan dengan betul. Sambung ke pangkalan data untuk ujian. Perhatikan isu keserasian dan keselamatan pada Windows 7, dan disyorkan untuk menaik taraf ke sistem operasi yang disokong.

Perbezaan antara indeks kluster dan indeks bukan clustered (indeks sekunder) di InnoDB.

Apr 02, 2025 pm 06:25 PM

Perbezaan antara indeks kluster dan indeks bukan clustered (indeks sekunder) di InnoDB.

Apr 02, 2025 pm 06:25 PM

Perbezaan antara indeks clustered dan indeks bukan cluster adalah: 1. Klustered Index menyimpan baris data dalam struktur indeks, yang sesuai untuk pertanyaan oleh kunci dan julat utama. 2. Indeks Indeks yang tidak berkumpul indeks nilai utama dan penunjuk kepada baris data, dan sesuai untuk pertanyaan lajur utama bukan utama.

Mysql: Konsep mudah untuk pembelajaran mudah

Apr 10, 2025 am 09:29 AM

Mysql: Konsep mudah untuk pembelajaran mudah

Apr 10, 2025 am 09:29 AM

MySQL adalah sistem pengurusan pangkalan data sumber terbuka. 1) Buat Pangkalan Data dan Jadual: Gunakan perintah Createdatabase dan Createtable. 2) Operasi Asas: Masukkan, Kemas kini, Padam dan Pilih. 3) Operasi lanjutan: Sertai, subquery dan pemprosesan transaksi. 4) Kemahiran Debugging: Semak sintaks, jenis data dan keizinan. 5) Cadangan Pengoptimuman: Gunakan indeks, elakkan pilih* dan gunakan transaksi.

Hubungan antara pengguna dan pangkalan data MySQL

Apr 08, 2025 pm 07:15 PM

Hubungan antara pengguna dan pangkalan data MySQL

Apr 08, 2025 pm 07:15 PM

Dalam pangkalan data MySQL, hubungan antara pengguna dan pangkalan data ditakrifkan oleh kebenaran dan jadual. Pengguna mempunyai nama pengguna dan kata laluan untuk mengakses pangkalan data. Kebenaran diberikan melalui perintah geran, sementara jadual dibuat oleh perintah membuat jadual. Untuk mewujudkan hubungan antara pengguna dan pangkalan data, anda perlu membuat pangkalan data, membuat pengguna, dan kemudian memberikan kebenaran.

Bolehkah Mysql dan Mariadb wujud bersama

Apr 08, 2025 pm 02:27 PM

Bolehkah Mysql dan Mariadb wujud bersama

Apr 08, 2025 pm 02:27 PM

MySQL dan Mariadb boleh wujud bersama, tetapi perlu dikonfigurasikan dengan berhati -hati. Kuncinya adalah untuk memperuntukkan nombor port dan direktori data yang berbeza untuk setiap pangkalan data, dan menyesuaikan parameter seperti peruntukan memori dan saiz cache. Konfigurasi sambungan, konfigurasi aplikasi, dan perbezaan versi juga perlu dipertimbangkan dan perlu diuji dengan teliti dan dirancang untuk mengelakkan perangkap. Menjalankan dua pangkalan data secara serentak boleh menyebabkan masalah prestasi dalam situasi di mana sumber terhad.

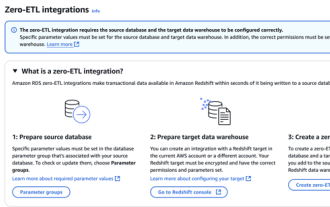

Integrasi RDS MySQL dengan Redshift Zero ETL

Apr 08, 2025 pm 07:06 PM

Integrasi RDS MySQL dengan Redshift Zero ETL

Apr 08, 2025 pm 07:06 PM

Penyederhanaan Integrasi Data: AmazonRDSMYSQL dan Integrasi Data Integrasi Zero ETL Redshift adalah di tengah-tengah organisasi yang didorong oleh data. Proses tradisional ETL (ekstrak, menukar, beban) adalah kompleks dan memakan masa, terutamanya apabila mengintegrasikan pangkalan data (seperti Amazonrdsmysql) dengan gudang data (seperti redshift). Walau bagaimanapun, AWS menyediakan penyelesaian integrasi ETL sifar yang telah mengubah keadaan ini sepenuhnya, menyediakan penyelesaian yang mudah, hampir-sebenar untuk penghijrahan data dari RDSMYSQL ke redshift. Artikel ini akan menyelam ke integrasi RDSMYSQL Zero ETL dengan redshift, menjelaskan bagaimana ia berfungsi dan kelebihan yang dibawa kepada jurutera dan pemaju data.