This article describes how to block crawlers at the nginx level, based on a real incident. I hope it is helpful.

Foreword:

Recently, the memory usage of my server spiked sharply during a certain period of time. At first I assumed normal business traffic was the cause, but the problem persisted even after I upgraded the server's memory. (I was lazy here and did not look for the root cause at first; I simply assumed the business volume had grown.)

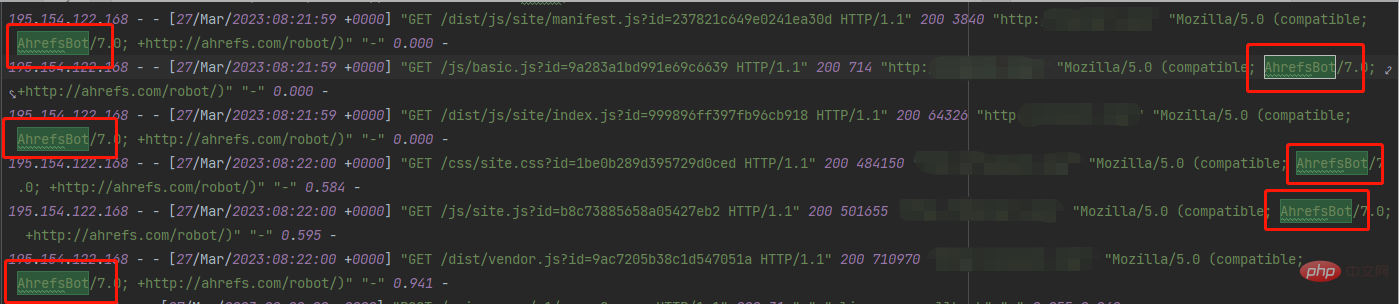

I then checked the nginx logs and found some unusual requests:

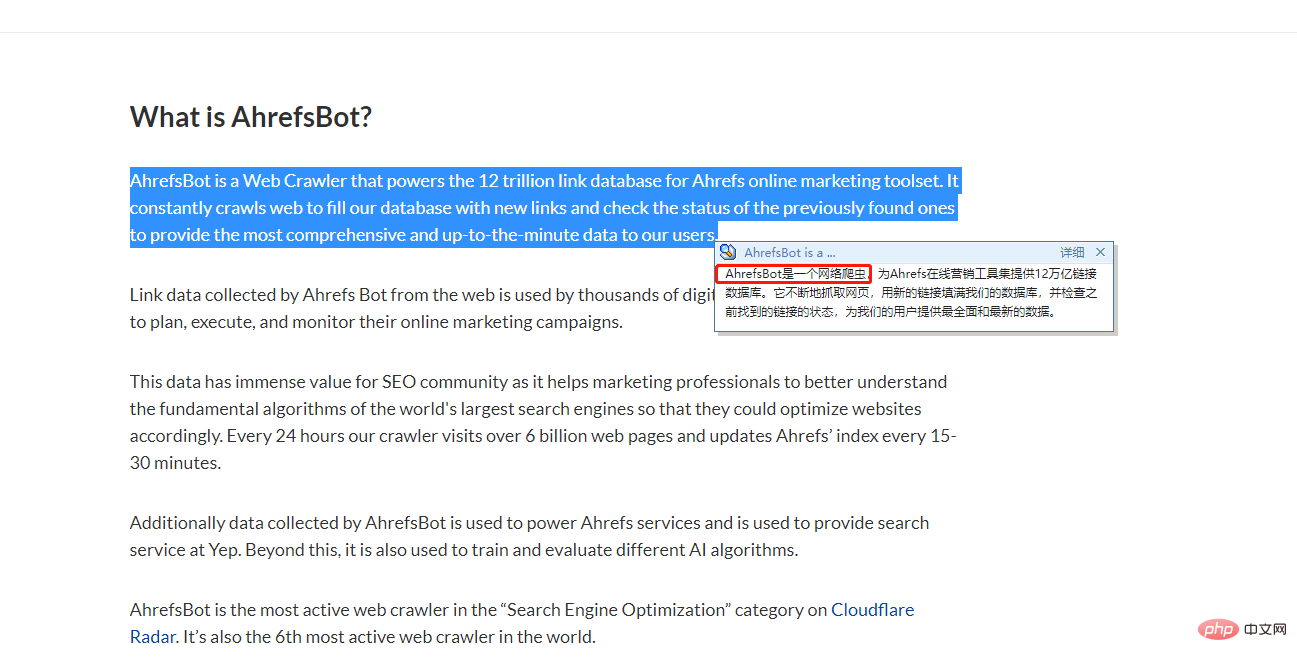

What is this? Out of curiosity I looked it up right away: it was a crawler, and it had nearly brought my server down.

Here is how I fixed it:

Nginx-level solution

Although it is a crawler, it does not disguise itself: every request carries a User-Agent header, and it is always the same one, so it is easy to block. Here is the configuration (I run nginx in Docker):

1. docker-compose

    version: '3'
    services:
      d_nginx:
        container_name: c_nginx
        env_file:
          - ./env_files/nginx-web.env
        image: nginx:1.20.1-alpine
        ports:
          - '80:80'
          - '81:81'
          - '443:443'
        links:
          - d_php
        volumes:
          - ./nginx/conf:/etc/nginx/conf.d
          - ./nginx/nginx.conf:/etc/nginx/nginx.conf
          - ./nginx/agent-deny.conf:/etc/nginx/agent-deny.conf
          - ./nginx/certs:/etc/nginx/certs
          - ./nginx/logs:/var/log/nginx/
          - ./www:/var/www/html

2. Directory structure

    nginx/
        nginx.conf
        agent-deny.conf
        conf/
            xxxx01_server.conf
            xxxx02_server.conf

3. agent-deny.conf

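    # Return 404 for Scrapy and AhrefsBot (case-insensitive match)
    if ($http_user_agent ~* (Scrapy|AhrefsBot)) {
        return 404;
    }

    # Return 403 for an empty User-Agent or the exact AhrefsBot/7.0 string.
    # Parentheses, dots and the plus sign are escaped so they match literally.
    if ($http_user_agent ~ "Mozilla/5\.0 \(compatible; AhrefsBot/7\.0; \+http://ahrefs\.com/robot/\)|^$") {
        return 403;
    }

4. Then include this agent-deny.conf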

    server {
        include /etc/nginx/agent-deny.conf;

        listen 80;
        server_name localhost;
        root /var/www/html/xxxxx/public;
        index index.php;

        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header REMOTE-HOST $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # Maximum upload size allowed from clients
        client_max_body_size 300M;

        # Client body buffer size. If it is too small, nginx cannot handle the
        # body in memory and writes a temporary file instead, which increases I/O.
        # By default this is 8k on 32-bit systems and 16k on 64-bit systems.
        #client_body_buffer_size 5M;
        # After setting this parameter, server memory fluctuated a lot. Since
        # client uploads cannot be controlled, I decided not to set it.

        # This directive disables nginx buffering and stores the request body in
        # a temporary file. The file contains plain-text data. It can be used in
        # the http, server and location blocks.
        # Possible values:
        # off:   disables writing to a file
        # clean: the request body is written to a file, which is deleted after the request is processed
        # on:    the request body is written to a file, which is not deleted after the request is processed
        client_body_in_file_only clean;

        # Client request body timeout
        client_body_timeout 600s;

        location /locales {
            break;
        }

        location / {
            # Block GET requests that download the .htaccess file
            if ($request_uri = '/.htaccess') {
                return 404;
            }

            # Block GET requests that download the .gitignore file
            if ($request_uri = '/storage/.gitignore') {
                return 404;
            }

            # Block GET requests that download the web.config file
            if ($request_uri = '/web.config') {
                return 404;
            }

            try_files $uri $uri/ /index.php?$query_string;
        }

        location /oauth/token {
            # Block GET requests to /oauth/token
            if ($request_method = 'GET') {
                return 404;
            }

            try_files $uri $uri/ /index.php?$query_string;
        }

        location /other/de {
            proxy_pass http://127.0.0.1/oauth/;
            rewrite ^/other/de(.*)$ https://www.baidu.com permanent;
        }

        location ~ \.php$ {
            try_files $uri /index.php =404;
            fastcgi_split_path_info ^(.+\.php)(/.+)$;
            fastcgi_pass d_php:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_connect_timeout 300s;
            fastcgi_send_timeout 300s;
            fastcgi_read_timeout 300s;
            include fastcgi_params;

            #add_header 'Access-Control-Allow-Origin' '*';
            #add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS, PUT, DELETE';
            #add_header 'Access-Control-Allow-Headers' 'DNT,X-Mx-ReqToken,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Authorization,token';
        }
    }

Add this include to each service so that every AhrefsBot request is intercepted.
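As a possible alternative to the `if` blocks in agent-deny.conf, the same User-Agent check can be sketched with nginx's `map` directive, which keeps the pattern list in one place. This is only a minimal sketch under assumptions: the variable name `$deny_agent` is made up for illustration, the `map` block must sit in the `http` context (for example in nginx.conf), and it returns 403 for every match rather than the 404/403 split used above.

```nginx
# Goes in the http context. $deny_agent is a hypothetical variable name.
map $http_user_agent $deny_agent {
    default       0;
    ""            1;   # empty User-Agent
    ~*Scrapy      1;   # case-insensitive regex match
    ~*AhrefsBot   1;
}

server {
    listen 80;
    server_name localhost;

    # "0" and the empty string count as false, so only flagged agents are rejected
    if ($deny_agent) {
        return 403;
    }
}
```

With this layout, adding another crawler means adding one line to the `map` block instead of editing a regular expression in every included file.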

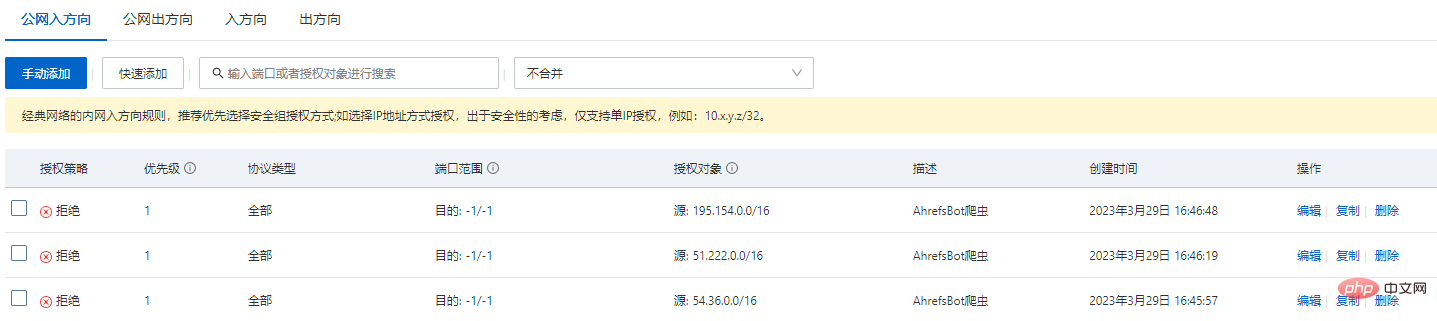

Alibaba Cloud Security Group Interception

Analyzing the logs also showed that the requests came from only a few IP ranges, so as an extra layer of protection I also blocked them in the Alibaba Cloud security group. (This takes effect the fastest and works the best; you get what you pay for.)

IP ranges:

54.36.0.0 51.222.0.0 195.154.0.0

I blocked these directly in the inbound rules for public network access.
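Where a cloud security group is not available, a similar IP-based block could be approximated in nginx itself with the `geo` directive. The sketch below is an assumption-laden illustration, not the setup used in this article: the variable name `$deny_ip` is hypothetical, the two CIDR ranges are documentation placeholders that must be replaced with the real ranges (including prefix lengths) taken from your own logs, and it assumes nginx sees the real client address in `$remote_addr`.

```nginx
# Goes in the http context. $deny_ip is a hypothetical variable name.
# geo matches against $remote_addr by default.
geo $deny_ip {
    default           0;
    192.0.2.0/24      1;   # placeholder range, replace with a real one
    198.51.100.0/24   1;   # placeholder range, replace with a real one
}

server {
    listen 80;
    server_name localhost;

    # Reject requests whose source address falls in a listed range
    if ($deny_ip) {
        return 403;
    }
}
```

Unlike a security group rule, this still lets the connection reach nginx, so it saves application work but not network traffic.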
