
## 1. Why is the flash sale business difficult?

1) In an IM system such as QQ or Weibo, everyone reads their own data (friend list, group list, personal information);

2) In a Weibo system, everyone reads the data of the people they follow: one person reads the data of many people;

3) In a flash sale system, there is only one copy of the inventory, and everyone reads and writes it at the same concentrated moment: many people read one piece of data.

For example, Xiaomi holds a phone flash sale every Tuesday. There may be only 10,000 phones, but the instantaneous traffic can reach millions or tens of millions of requests. Another example is grabbing tickets on 12306: tickets are limited, there is a single inventory, instantaneous traffic is huge, and everyone reads the same inventory. Read-write conflicts and lock contention are severe, and that is what makes the flash sale business hard. So how do we optimize the architecture of a flash sale business?

## 2. Optimization direction

There are two optimization directions (I will cover both today):

(1) Intercept requests as far upstream in the system as possible (do not let lock conflicts reach the database). Traditional flash sale systems fail because requests overwhelm the back-end data layer: read-write lock conflicts are severe, concurrency is high, responses are slow, and almost every request times out. The traffic is huge, but the effective traffic that turns into successful orders is tiny. Take 12306 as an example: a train has only 2,000 tickets. If 2 million people come to buy them, almost no one succeeds, and the request efficiency is close to 0.

(2) Make full use of the cache. Ticket flash sales are a typical read-heavy, write-light scenario. Most requests are train queries and ticket queries; only placing an order and paying are write requests. A train has only 2,000 tickets and 2 million people come to buy them, so at most 2,000 people place orders successfully while everyone else only queries the inventory. Writes are just 0.1% of traffic and reads 99.9%, which makes this very well suited to cache optimization. Next, let's look at the "intercept requests as far upstream as possible" method and the "caching" method in detail.

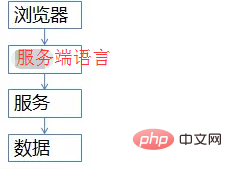

## 3. Common flash sale architecture

A typical site architecture looks basically like this (no deceptive architecture diagrams here):

(1) Browser layer: the top layer, which executes some JS code;

(2) Server side (site layer): accesses back-end data and returns HTML pages to the browser;

(3) Service layer (web server): hides the underlying data details from upstream layers and provides data access;

(4) Data layer: the final inventory lives here; MySQL is a typical example (with a cache in front of it, of course).

Simple as this diagram is, it captures the architecture of a high-traffic, high-concurrency flash sale business well. Keep it in mind.

How to optimize each level will be analyzed in detail below.

## 4. Optimization details at each level

Layer 1: how to optimize the client (browser layer, APP layer)

A question for you: everyone has played WeChat's shake-to-grab-red-envelopes, right?

Every time you shake, is a request sent to the back end? Think back to placing an order for tickets: after you click "Query", the system stalls and the progress bar creeps forward. As a user, you instinctively click "Query" again, right? You keep clicking, click, click... Does it help? It only raises the system load for nothing. If a user clicks 5 times, 80% of those requests are redundant. How do we fix this?

(a) At the product level, once the user clicks "Query" or "Purchase Tickets", the button is grayed out so the user cannot submit the request again;

(b) At the JS level, the user may submit only one request every x seconds.

The same can be done at the APP level. However frantically you shake WeChat, the client actually sends a request to the back end only once every x seconds. This is what "intercept requests as far upstream in the system as possible" means: the further upstream, the better. Blocking at the browser and APP layers can stop 80% of requests. This method only stops ordinary users (though 99% of users are ordinary); it cannot stop the high-end programmers among them. Capture the packets with Firebug and anyone can see exactly what the HTTP request looks like, and JS cannot stop a programmer from writing a for loop that calls the HTTP interface. How do we handle those requests?

Layer 2: request interception at the server level

How do we intercept? How do we stop programmers' for-loop calls? What should deduplication be keyed on? IP? cookie-id? ... That gets complicated. This kind of business requires login, so just use the uid. At the server level, count and deduplicate requests per uid. The counts do not even need to be stored centrally; they can live directly in each server's memory (the count will be inaccurate, but this is the simplest approach). Letting a uid through only once every 5 seconds blocks 99% of for-loop requests.
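A minimal sketch of the per-uid, in-memory limit described above, assuming a single server process and a 5-second window. The class name `UidRateLimiter` and the injectable clock are illustrative, not from the original. The dictionary grows without bound, so a real version would also need to evict stale uids.

```python
import time


class UidRateLimiter:
    """Per-uid limiter kept in one server's local memory (not shared across servers).

    Lets at most one request per `window` seconds through for each uid.
    """

    def __init__(self, window=5.0, clock=time.monotonic):
        self.window = window
        self.clock = clock        # injectable clock so the behaviour can be tested
        self.last_pass = {}       # uid -> time of the last request let through

    def allow(self, uid):
        now = self.clock()
        last = self.last_pass.get(uid)
        if last is not None and now - last < self.window:
            return False          # too soon: the caller serves the cached page instead
        self.last_pass[uid] = now
        return True
```

Because each server keeps its own dictionary, the limit is per machine, which is exactly the inaccuracy the text accepts for the sake of simplicity.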

Only one request passes every 5 seconds, so what about the others? They get the page cache. For the same uid, limit the access frequency and cache the page: all requests that reach the server within x seconds get the same page back. Likewise, queries for the same item, such as a train number, are page-cached, and all requests arriving within x seconds get the same page. This kind of rate limiting both keeps the user experience good (no 404 is returned) and keeps the system robust (the page cache intercepts requests at the server).

The page cache does not have to guarantee that all servers return identical pages; it can simply live in each site's own memory. The advantage is simplicity; the drawback is that HTTP requests land on different servers, which may return different ticket data. That covers request interception and cache optimization at the server level.
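The page cache above can be sketched as a per-server dictionary with a short TTL, keyed by uid or by the query (for example a train number). `render` is a hypothetical function that builds the page from back-end data; the class name and TTL value are assumptions. Because each server keeps its own copy, different servers may return different pages, which is the trade-off just described.

```python
import time


class PageCache:
    """Short-lived page cache: every request for the same key within `ttl`
    seconds gets the same rendered page, without calling the back end again."""

    def __init__(self, ttl, render, clock=time.monotonic):
        self.ttl = ttl
        self.render = render      # hypothetical: builds the page for a key from back-end data
        self.clock = clock
        self.entries = {}         # key -> (expires_at, page)

    def get(self, key):
        now = self.clock()
        hit = self.entries.get(key)
        if hit is not None and now < hit[0]:
            return hit[1]         # served from memory; no back-end call
        page = self.render(key)
        self.entries[key] = (now + self.ttl, page)
        return page
```

The same cache answers Question 3 below: if the key is the search term, user B gets the page rendered for user A's identical search.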

This approach stops programmers who send HTTP requests in a for loop. But some high-end programmers (hackers) control 100,000 zombie machines ("broilers"), hold 100,000 uids, and send requests all at once (set aside real-name registration for now; Xiaomi does not require real names to buy phones). What now? The server's per-uid rate limit can no longer stop them.

Layer 3: interception at the service layer (whatever happens, don't let requests reach the database)

How does the service layer intercept? At the service layer I know perfectly well that Xiaomi has only 10,000 phones and that a train has only 2,000 tickets. What is the point of sending 100,000 requests to the database? Exactly: a request queue!

For write requests, build a request queue and pass only a limited number of write requests (placing orders, paying, and similar write operations) to the data layer at a time:

10,000 phones: only 10,000 order requests go to the DB

3,000 train tickets: only 3,000 order requests go to the DB

If they all succeed, release the next batch. If the inventory runs out, every write request still in the queue returns "Sold Out".

How do we optimize read requests? Let the cache absorb them: whether memcached or redis, a single machine handling 100,000 reads per second should be no problem. With this kind of rate limiting, only a very small number of write requests and a very small number of read cache misses reach the data layer; 99.9% of requests are blocked.

Of course, business rules can be optimized too. Recall what 12306 has done: time-shared, segmented ticket sales. Tickets used to go on sale at 10 o'clock; now they go on sale at 8:00, 8:30, 9:00..., with a batch released every half hour, spreading the traffic evenly.

Second, optimize data granularity. When you query remaining tickets, do you really care whether there are 58 or 26 left? In fact you only care whether there are tickets or not. When traffic is heavy, just cache a coarse-grained "tickets available" / "sold out" flag.

Third, make some business logic asynchronous, for example by separating the order business from the payment business. All of these optimizations are tied to the business. As I have said before, "any architecture design divorced from the business is nonsense": architecture optimization must target the business.
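To illustrate the data-granularity point (the second item above), here is a sketch that keeps the exact count privately but publishes only "available" or "sold out". The class name and the `version` counter are assumptions: the counter changes only when the coarse flag flips, so pages cached from the flag stay valid through almost the whole sale instead of going stale on every sale.

```python
class CoarseStockView:
    """Exact stock kept privately; readers only ever see a coarse flag."""

    def __init__(self, stock):
        self._stock = stock
        self.version = 0          # bumped only when the published flag changes

    def flag(self):
        return "available" if self._stock > 0 else "sold out"

    def sell(self, n=1):
        before = self.flag()
        self._stock = max(0, self._stock - n)
        if self.flag() != before:
            self.version += 1     # only now do cached pages need rebuilding
```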

Layer 4: finally, the database layer

The browser blocks 80% of requests, the server blocks 99.9% and caches pages, and the service layer adds a write request queue and a data cache, so every request that reaches the database layer is under control. The DB is under almost no pressure; it can take a leisurely stroll, and a single machine can handle the load. Again: the inventory is limited and Xiaomi's production capacity is limited, so sending so many requests to the database is pointless.

If everything is passed through to the database, 1 million orders are placed, 0 succeed, and the request efficiency is 0%. If only 3,000 requests get through, all of them succeed, and the request efficiency is 100%.

## 5. Summary

The description above should be clear enough, so there is little to summarize. For flash sale systems, let me repeat the two architecture optimization ideas from my own experience:

(1) Intercept requests as far upstream in the system as possible (the further upstream, the better);

(2) For read-heavy, write-light workloads, use caching heavily (let the cache absorb the read pressure);

Browser and APP: rate limiting

Server: rate limiting by uid, plus page caching

Service layer (web server): write request queues that control traffic according to the business, plus data caching

Data layer: a leisurely stroll in the courtyard

And: optimize based on the business

## 6. Q&A

Question 1. In your architecture the greatest pressure actually falls on the server. Suppose there really are 10 million valid requests; it is hardly possible to limit the number of connections, so how is this pressure handled?

Answer: Per-second concurrency is unlikely to reach 10 million. Even assuming it does, there are two solutions:

(1) The server (site) layer can be scaled out by adding machines; at worst, bring in 1,000 machines.

(2) If there still aren't enough machines, drop requests, for example 50% of them (returning "please try again later" immediately). The principle is to protect the system rather than let every user fail.
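Dropping a fixed share of requests can be sketched as a random admission check. The function name and the injectable random source are assumptions; rejected callers would get an immediate "please try again later" instead of a timeout.

```python
import random


def admit(drop_ratio, rng=random.random):
    """Randomly shed load: reject roughly `drop_ratio` of incoming requests.

    rng() returns a float in [0.0, 1.0), so drop_ratio=0.0 admits everything
    and drop_ratio=1.0 rejects everything.
    """
    return rng() >= drop_ratio
```

Random shedding spreads the rejections across all users rather than starving a particular group; the ratio would be raised or lowered according to how overloaded the servers are.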

Question 2. "Control 100,000 zombie machines, hold 100,000 uids, and send requests at the same time": how is this solved?

Answer: As described above, with write request queue control at the service layer (web server).

Question 3: Can the cache that limits access frequency also be used for search? For example, if user A searches for "mobile phone" and user B then searches for "mobile phone", will B get the page cached from A's search?

Answer: Yes. This technique is also common for "dynamic" operations campaign pages, for example when an app push for a campaign goes out to 40 million users in a short time, the campaign page is page-cached.

Question 4: What if queue processing fails? What if zombie machines flood the queue?

Answer: If processing fails, the order is returned as failed and the user is asked to try again. Queues are very cheap, so flooding one is hard. In the worst case, once a certain number of requests are queued, subsequent requests directly return "no tickets" (if 1 million requests are already waiting in the queue, there is no point accepting more).
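The "stop accepting once the queue holds enough" rule can be sketched with the standard library's bounded `queue.Queue`. The class name and capacity are assumptions; `put_nowait` raises `queue.Full` instead of blocking, so extra requests get an answer immediately.

```python
import queue


class BoundedOrderQueue:
    """Order queue with a hard cap: once `capacity` requests are waiting,
    new requests are answered "no tickets" instead of being queued."""

    def __init__(self, capacity):
        self.q = queue.Queue(maxsize=capacity)

    def submit(self, request):
        try:
            self.q.put_nowait(request)
            return "queued"
        except queue.Full:
            return "no tickets"
```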

Question 5: If the server does the filtering, is each uid's request count stored separately in each site's memory? If so, how do you handle a server cluster where the load balancer sends the same user's requests to different servers? Or should server-side filtering happen before load balancing?

Answer: It can be kept in memory. In that case each server limits a uid to one request per 5 seconds, so globally (assuming 10 machines) the actual limit is 10 requests per 5 seconds. Solutions:

1) Tighten the limit (this is the recommended and simplest solution);

2) Do layer-7 load balancing at the nginx layer so that a uid's requests land on the same machine as much as possible.
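Option 2 amounts to routing by a stable hash of the uid. In nginx this is usually configured with the upstream `hash` directive; the Python below only mimics the idea, and `pick_server` and the server names are hypothetical. Plain modulo hashing is used for brevity; it reassigns most uids when the server list changes, which consistent hashing avoids.

```python
import hashlib


def pick_server(uid, servers):
    """Always map the same uid to the same server, so that server's in-memory
    uid limiter sees all of that user's requests."""
    digest = hashlib.md5(str(uid).encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```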

Question 6: If the service layer (web server) does the filtering, is there one unified queue for the whole service layer, or one queue per service instance? If the queue is unified, do requests submitted by each server need lock control before they enter the queue?

Answer: You don't need a unified queue. Each service instance simply lets through a smaller number of requests (total tickets / number of service instances). It's that simple; a unified queue just adds complexity.
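The "total tickets / number of service instances" rule, sketched so that the per-instance quotas still add up to the total when the division is not exact. The function name is illustrative.

```python
def per_instance_quota(total_tickets, instances):
    """Split inventory into per-instance admission quotas so each service
    instance can run its own local queue without a global lock."""
    base, extra = divmod(total_tickets, instances)
    # The first `extra` instances take one extra unit each.
    return [base + (1 if i < extra else 0) for i in range(instances)]
```

For example, 3,000 tickets over 4 instances gives each instance a quota of 750.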

Question 7: After the flash sale, when orders are paid, or when reservations are cancelled because they were never paid, how is the remaining inventory controlled and updated in a timely way?

Answer: The database keeps a status, "unpaid". If an order exceeds the time limit, for example 45 minutes, its inventory is restored (known as "returning to the warehouse"). The lesson for ticket grabbers: try again 45 minutes after the flash sale starts; there may be tickets again~
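A sketch of the "return to the warehouse" sweep, using an in-memory list as a hypothetical stand-in for the orders table and its status column. The 45-minute timeout comes from the answer above; the `Order` fields and function name are assumptions. A periodic job would call this; against a real database, the status change and the stock increment would belong in one transaction.

```python
from dataclasses import dataclass

PAYMENT_TIMEOUT = 45 * 60  # seconds: the 45-minute window mentioned above


@dataclass
class Order:
    order_id: str
    quantity: int
    created_at: float          # seconds, same clock as `now`
    status: str = "unpaid"     # "unpaid" -> "paid" or "expired"


def return_expired_to_stock(orders, stock, now, timeout=PAYMENT_TIMEOUT):
    """Expire unpaid orders older than `timeout` and add their quantity back
    to stock. Returns the new stock level."""
    for order in orders:
        if order.status == "unpaid" and now - order.created_at >= timeout:
            order.status = "expired"
            stock += order.quantity
    return stock
```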

Question 8: Different users browsing the same product may see completely different inventory from different cache instances. How do you keep the cache data consistent, or are dirty reads allowed?

Answer: With the current design, requests land on different sites and the data may be inconsistent (the page caches differ). That is acceptable in this business scenario. The real data at the database level is correct.

Question 9: Even with the business optimization "3,000 train tickets, only 3,000 order requests go to the DB", won't these 3,000 orders cause congestion?

Answer: (1) The database can handle 3,000 write requests; (2) the data can be split; (3) if 3,000 is too much, the service layer (web server) can limit the number of concurrent connections based on stress-test results. 3,000 is just an example.
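Point (3) can be sketched with a semaphore that caps concurrent DB writes. The class name, timeout, and messages are assumptions; `max_concurrent` would come from the stress test. Callers that cannot get a slot in time fail fast rather than piling up on the database.

```python
import threading


class DbConcurrencyLimiter:
    """Caps how many write requests talk to the database at once."""

    def __init__(self, max_concurrent):
        self.slots = threading.BoundedSemaphore(max_concurrent)

    def run(self, write, timeout=0.1):
        # Wait at most `timeout` seconds for a free slot, then give up.
        if not self.slots.acquire(timeout=timeout):
            return "busy, try again"
        try:
            return write()
        finally:
            self.slots.release()
```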

Question 10: If background processing fails at the server or the service layer (web server), should the failed batch be replayed, or simply discarded?

Answer: Don't replay it. Return "query failed" or "order failed" to the user. One architectural design principle is "fail fast".

Question 11. For flash sales in large systems such as 12306, where many flash sales run at the same time, how is the traffic divided?

Answer: Vertical splitting.

Question 12. One more question: is this process synchronous or asynchronous? If synchronous, responses will be slow. If asynchronous, how do you make sure the result is returned to the correct requester?

Answer: At the user level it is definitely synchronous (the user's HTTP request is held open); the service layer (web server) can be either synchronous or asynchronous.

Question 13. From the flash sale group: at what stage should inventory be deducted? If placing an order locks inventory, what if a large number of malicious users place orders to lock inventory and never pay?

Answer: Write volume at the database level is very low. At worst, the orders go unpaid; once the time limit passes, the stock is "returned to the warehouse", as mentioned before.

Tips: things worth paying attention to

1. Deploy separately from the main site (put the flash sale servers and the mall servers on different machines, so that a flash sale crash does not make the mall unreachable);

2. Monitor more: take monitoring seriously and have someone keep an eye on it.

Key points of a flash sale:

1. High availability: dual-active

2. High concurrency: load balancing, security filtering

Design ideas:

1. Static pages: CDN (use a ready-made one from a major provider), URL hiding, page compression, caching mechanism

2. Dynamic pages: queuing, asynchronous processing, qualification rush

Other suggestions:

1. Baidu's suggestions: opcode cache, CDN, larger server instances

2. Alibaba's suggestions: cloud monitoring, Cloud Shield, ECS, OSS, RDS, CDN

How to use a CDN:

1. Upload static resources (images, JS, CSS, etc.) to the CDN;

2. Drawback: note that the CDN does not refresh immediately after an update, so you need to push the changes.

Understand the current environment and situation:

1. Users: a huge number, both normal users and bad actors (CDN acceleration also diverts traffic, because each user accesses the nearest CDN node);

2. Regions: users all over the country (even a 1-2 s delay is too much, because the flash sale may be over within 1 s; a CDN lets users reach the nearest node)

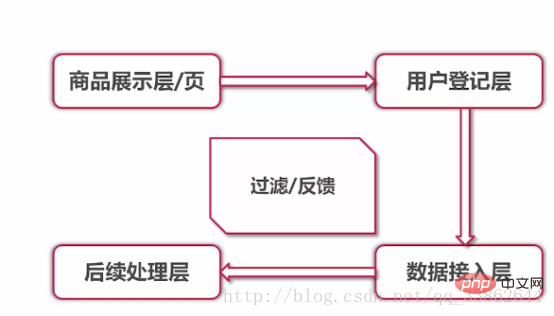

3. Business process: product display and registration at the front end; data access and data processing at the back end

Add a page before the flash sale to divert traffic and promote other products.

## Product display layer architecture

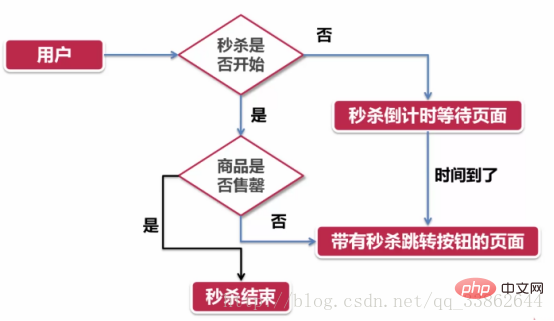

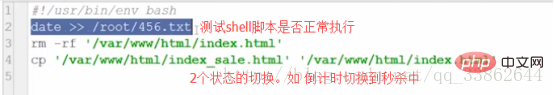

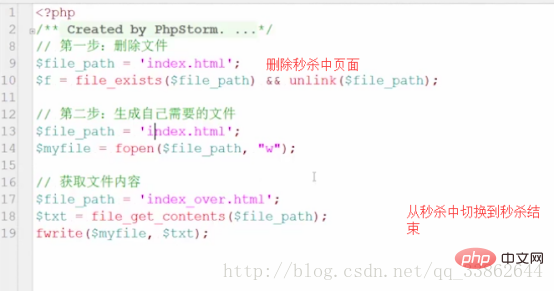

The page has 3 states:

1. Product display: countdown page

2. Flash sale in progress: click to enter the flash sale page

3. Flash sale over: tell the user the activity has ended

New idea: think about the problem along a timeline.

Assume what we see is the flash sale in progress; earlier on the timeline is the countdown, later is the end.
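The timeline view translates directly into a function that picks one of the three page states from the current time. The function name and state labels are illustrative; `start` and `end` would be the configured sale window.

```python
from datetime import datetime


def page_state(now, start, end):
    """Choose which of the three page states to show, purely from the timeline."""
    if now < start:
        return "countdown"      # product display with a countdown
    if now < end:
        return "in progress"    # link to the flash sale page
    return "ended"              # tell the user the activity is over
```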

The following picture shows the problem from the user's perspective:

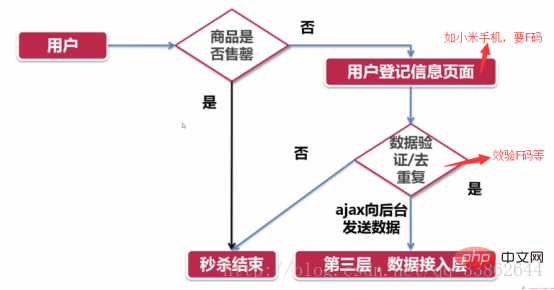

## User registration layer architecture

## $.cookie encapsulation


Code:


How do we calculate the time from layer 2 to layer 3?

We need the total delay that layer 2 sending data to layer 3 incurs from network transmission, DNS resolution, and similar factors, so that we can estimate how many servers to provision.
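One common way to turn a measured delay into a server count is Little's law (requests in flight = arrival rate x time each request spends in the system). This is a swapped-in estimate rather than the author's formula; the function name and per-server concurrency figure are assumptions, and real inputs would come from measurement and stress tests.

```python
import math


def servers_needed(peak_rps, latency_s, concurrency_per_server):
    """Rough capacity estimate via Little's law.

    peak_rps               -- expected peak requests per second
    latency_s              -- measured layer-2 -> layer-3 delay in seconds
    concurrency_per_server -- requests one server can hold in flight
    """
    in_flight = peak_rps * latency_s
    return math.ceil(in_flight / concurrency_per_server)
```

For example, 10,000 requests per second with a 0.5 s delay keeps about 5,000 requests in flight, which needs 5 servers that can each hold 1,000.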

Summary in 2 sentences:


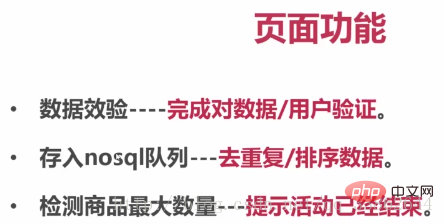

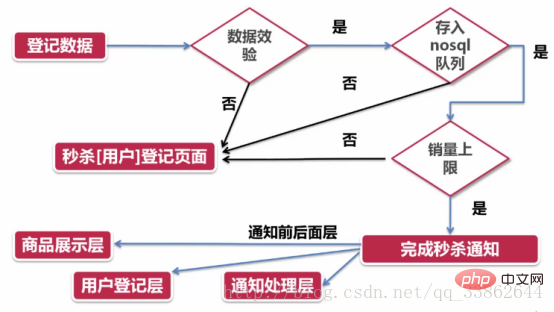

1. If a request fails at a key checkpoint, return it directly to the previous layer (the user registration layer);

2. If it passes the key checkpoint, notify the other layers.
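The article gives only a one-line hint for how a passing request is recognized by the other layers ("encryption and decryption similar to a Microsoft serial number"). One way to get a comparable effect, a pass that the next layer can check but a client cannot forge, is an HMAC-signed token. This is a swapped-in technique, not the author's scheme; the secret, token format, and function names are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret shared only by the layers that must trust each other.
SECRET = b"hypothetical-shared-secret"


def _sign(uid, sale_id, secret):
    msg = f"{uid}:{sale_id}".encode()
    # Truncated to 16 hex chars to keep the pass short, serial-number style.
    return hmac.new(secret, msg, hashlib.sha256).hexdigest()[:16]


def issue_pass(uid, sale_id, secret=SECRET):
    """Issued by a layer once the request passes its key checkpoint."""
    return f"{uid}:{sale_id}:{_sign(uid, sale_id, secret)}"


def verify_pass(token, secret=SECRET):
    """Checked by the next layer; forged or altered tokens are rejected."""
    try:
        uid, sale_id, sig = token.rsplit(":", 2)
    except ValueError:
        return False
    return hmac.compare_digest(sig, _sign(uid, sale_id, secret))
```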

Effect: encryption and decryption similar to a Microsoft serial number

Queue: an ordered collection (sorted set) in Redis

Upper limit: technical flag
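The sorted-set queue noted above can be sketched in memory to show the idea. Each uid is added once with its arrival time as the score (what `ZADD` with the `NX` option does in Redis), and the first N members by score (what `ZRANGE key 0 N-1` returns) win the right to buy. The class and method names are hypothetical; a real deployment would issue those Redis commands instead.

```python
import bisect


class ArrivalQueue:
    """In-memory stand-in for a Redis sorted set used as the order queue."""

    def __init__(self):
        self._items = []      # sorted list of (score, uid)
        self._seen = set()

    def add(self, uid, score):
        # Ignore repeat submissions from the same uid, as ZADD NX would.
        if uid in self._seen:
            return False
        self._seen.add(uid)
        bisect.insort(self._items, (score, uid))
        return True

    def winners(self, limit):
        """The `limit` earliest arrivals, like ZRANGE key 0 limit-1."""
        return [uid for _, uid in self._items[:limit]]
```

Setting `limit` to the inventory size gives the "upper limit" cut-off: everyone ranked after it is told the sale is over.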

## Data processing layer

The above is the detailed content of Share ideas for implementing the PHP flash sale function. For more information, please follow other related articles on the PHP Chinese website!