描述问题:

目前想用SQL语句来查询一个表(50万条),并将表内昵称为空的用户Openid拿出来,每百条查询一次。环境MySQL。

问题关键:

一次性取出,分百条查询,也会给服务器造成很大压力(*1),能不能让SQL查询出100条符合结果的记录,并返回最后一条的ID?

拓展:

除了我这种想法,业界有没有一个合适的方案?或者关于SQL大容量查询 的一些书籍。

*1:首先,我的服务器是小水管,50万条已经相当重了。其次,就算我现在扩展服务器配置,假设某天达到了1亿条,也会成为很大的负载压力。

表结构:

id openid nickname avatar

部分openid的nickname或avatar(头像)是空的,想要每次查出100个nickname或avatar(头像)为空的openid,并调用微信接口,将获取到的信息插入。

This question is a bit strange. Do you want to return 100 pieces of data? Or should we return the last

IDamong these 100 pieces of data?First of all, 500,000 pieces of data is not too much, unless the performance of the machine where the server is deployed is very poor, so the query speed should not be very slow (unless you write very complex or low-performance SQL). It is indeed possible to retrieve it in multiple times. Reduce database pressure, but increase network transmission pressure.

In addition, the solution for 50W table data is completely different from the solution for 1E table data, so don’t assume that one solution can be solved once and for all.

Attached SQL:

Since

ORis used, it is estimated that the indexes ofnicknameandavatarare not needed, or you can add a field calledcompletedto indicate whether the record has been completed (i.e.nicknameandavatarare not empty, this field is maintained when the program inserts data), so that your query SQL can avoidORconnections.Build indexes on all the fields that need to be queried. In your current scenario, there will be no pressure on tens of millions of data

1.Has the index been added?

2. Optimize SQL

500,000 data is really not a lot.

I don’t understand what function you want to achieve. It’s meaningless to find 100 items but only return one column of the last one. It’s just 500,000 data. If you have to implement an indexed write storage process, it’s almost the same.

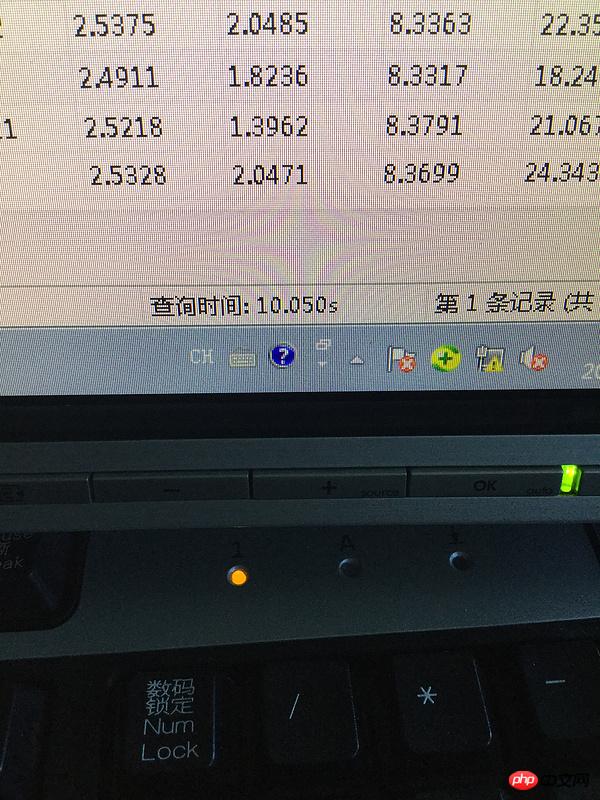

A table with 480w rows checks for qualified columns and returns all the entire rows. It only takes 10 seconds. Your problem may be that there are too many qualified rows and there is insufficient memory when outputting. You You can write a cursor to insert the results one by one into a new table, which is just slower.

A table with 480w rows checks for qualified columns and returns all the entire rows. It only takes 10 seconds. Your problem may be that there are too many qualified rows and there is insufficient memory when outputting. You You can write a cursor to insert the results one by one into a new table, which is just slower.

Add an index, or write the database to the cache regularly

It’s boring to be like this and it’s ruining my reputation. If the result is not ideal, we can discuss it. Just delete it.