WAIT: synchronous replication for Redis

Redis unstable has a new command called "WAIT". Such a simple name is indeed the incarnation of a simple feature consisting of less than 200 lines of code, but providing an interesting way to change the default behavior of Redis replication.

The feature was extremely easy to implement because of previous work. WAIT was basically a direct consequence of the new Redis replication design (that started with Redis 2.8). The feature itself is in a form that respects the design of Redis, so it is relatively different from other implementations of synchronous replication, both at the API level and in the degree of consistency it is able to ensure.

Replication: synchronous or not?
===

Replication is one of the main concepts of distributed systems. For some state to be durable even when processes fail or when network partitions make processes unable to communicate, we are forced to use a simple but effective strategy: keeping the same information “replicated” across different processes (database nodes).

Every kind of system featuring strong consistency will use a form of replication called synchronous replication. It means that before some new data is considered “committed”, a node requires acknowledgment from other nodes that the information was received. The node initially proposing the new state, when the acknowledgment is received, will consider the information committed and will reply to the client that everything went OK.

There is a price to pay for this safety: latency. Systems implementing strong consistency are unable to reply to the client before receiving enough acknowledgments. How many acks are “enough”? This depends on the kind of system you are designing. For example, in the case of strongly consistent systems that are available as long as the majority of nodes are working, the majority of the nodes should reply back before some data is considered to be committed. The result is that the latency is bound to the slowest node that replies as the (N/2+1)th node. Slower nodes can be ignored once the majority is reached.
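To make the arithmetic concrete, here is a minimal Ruby sketch. The helper names `majority` and `commit_latency` and the latency figures are made up for illustration, and the sketch assumes the list covers all N nodes, including the proposing one (which acknowledges itself in 0 ms).

```ruby
# Smallest number of nodes that forms a majority of n nodes.
def majority(n)
  n / 2 + 1
end

# Given the time (in ms) each of the N nodes takes to acknowledge,
# the write commits when the (N/2+1)th fastest acknowledgment arrives.
def commit_latency(ack_latencies_ms)
  ack_latencies_ms.sort[majority(ack_latencies_ms.size) - 1]
end

# Hypothetical 5-node system: the proposer itself (0 ms) plus four peers.
latencies = [0, 2, 3, 40, 250]
puts majority(latencies.size)   # 3
puts commit_latency(latencies)  # 3: the two slowest nodes are ignored
```

With these numbers the 250 ms node never affects the commit time, which is the point of requiring only a majority.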

Asynchronous replication
===

This is why asynchronous replication exists: in this alternative model we reply to the client confirming its write BEFORE we get acknowledgments from other nodes.

If you think about it from the point of view of CAP, it is as if the node *pretends* that there is a partition and can’t talk with the other nodes. The information will eventually be replicated at a later time, exactly like an eventually consistent DB would do during a partition. Usually this later time will be a few hundred microseconds later, but if the node receiving the write fails after it already sent the reply to the client but before propagating the write, the write is lost forever even though it was acknowledged.

Redis uses asynchronous replication by default: Redis is designed for performance and low, easy to predict latency. However, when possible it is nice for a system to be able to adapt consistency guarantees depending on the kind of write, so some form of synchronous replication could be handy even for Redis.
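The failure window described above can be illustrated with a toy model. This is only a sketch: `AsyncMaster` is an invented class, the replica is a plain Hash, and the "crash" is simply the point where the script stops calling `propagate`.

```ruby
# Toy model of asynchronous replication: the master replies to the client
# first and ships the write to the replica later.
class AsyncMaster
  attr_reader :data

  def initialize(replica)
    @replica = replica  # a plain Hash standing in for a replica's dataset
    @data = {}
    @pending = []       # writes already acknowledged but not yet propagated
  end

  # Apply the write locally and reply OK right away.
  def set(key, value)
    @data[key] = value
    @pending << [key, value]
    "OK"
  end

  # Runs "a little later": push the queued writes to the replica.
  def propagate
    @pending.each { |key, value| @replica[key] = value }
    @pending.clear
  end
end

replica = {}
master = AsyncMaster.new(replica)
master.set("a", "1")
master.propagate              # normal case: the replica catches up
reply = master.set("b", "2")  # the client already got its reply...
# ...and the master crashes here, before propagate runs again.
puts reply                    # OK
puts replica.key?("a")        # true
puts replica.key?("b")        # false: an acknowledged write that is lost
```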

WAIT: call me synchronous if you want.
===

The way I depicted synchronous replication above makes it sound simpler than it actually is.

The reality is that synchronous replication is usually “transactional”, so that a write is propagated to the majority of nodes (or *all* the nodes with some older algorithms), or to none. In one way or another, not only must the node proposing the state change wait for a reply from the majority, but the other nodes also need to wait for the proposing node to consider the operation committed in order to, in turn, commit the change. Basically, replicas require some mechanism to collect the write without applying it.

This means that nothing else happens during this process: everything is blocked for the current operation, and only later can you process a new one, and so forth.

Because synchronous replication can be very costly, maybe we can do with a bit less? We want a way to make sure some write propagated across the replicas, but at the same time we want other clients to go at light speed as usual, sending commands and receiving replies. Clients waiting in a synchronous write should never block any other client doing other synchronous or non-synchronous work.

There is a tradeoff you can make: WAIT does not allow you to roll back an operation that was not propagated to enough slaves. It merely offers a way to inform the client about what happened.

The information, specifically, is the number of replicas that your write was able to reach, all encapsulated into a simple to use blocking command.

This is how it works:

    redis 127.0.0.1:9999> set foo bar
    OK
    redis 127.0.0.1:9999> incr mycounter
    (integer) 1
    redis 127.0.0.1:9999> wait 5 100
    (integer) 7

Basically you can send any number of commands, and they’ll be executed and replicated as usual.

As soon as you call WAIT, however, the client blocks until all the writes above are successfully replicated to the specified number of replicas (5 in the example), unless the timeout is reached first (100 milliseconds in the example).

Once one of the two limits is reached, that is, the master replicated to 5 replicas or the timeout expired, the command returns, sending as reply the number of replicas reached. If the return value is less than the number of replicas we specified, the request timed out; otherwise the above commands were successfully replicated to at least the specified number of replicas.

In practical terms this means that you have to deal with the condition in which the command was accepted by fewer replicas than you specified in the amount of time you specified. More about that later.

How does it work?
===

The WAIT implementation is surprisingly simple. The first thing I did was to take the blocking code of BLPOP and other similar operations and make it a generic primitive of the Redis internal API, so now implementing blocking commands is much simpler.

The rest of the implementation was trivial because 2.8 introduced the concept of master replication offset, that is, a global offset that we increment every time we send something to the slaves. All the slaves receive exactly the same stream of data, and remember the offset processed so far as well.

This is very useful for partial resynchronization, as you can guess, but moreover slaves acknowledge to the master, every second, the replication offset processed so far, so the master has some idea about how much they processed.

Every second sucks, right? We can’t base WAIT on information available only once per second. So when WAIT is used by a client, it sets a flag, so that all the WAIT callers in a given event loop iteration are grouped together, and before entering the event loop again we send a REPLCONF GETACK command into the replication stream. Slaves will reply ASAP with a new ACK.

As soon as the ACKs received from slaves are enough to unblock some client, we do it. Otherwise we unblock the client on timeout.

Not considering the refactoring needed for the blocking operations, which is technically not part of the WAIT implementation, all the code is about 170 lines, so it is very easy to understand and modify, with almost zero effect on the rest of the code base.
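The bookkeeping just described can be sketched as a toy model in Ruby. This is not the Redis source: the class `WaitMaster` and its method names are invented for illustration, offsets are plain integers, and, as a simplification, a WAIT caller always blocks until the next ACK or timeout.

```ruby
# Toy model of the offset/ACK bookkeeping behind WAIT (names are hypothetical).
class WaitMaster
  attr_reader :offset

  def initialize(replica_names)
    @offset = 0                                            # master replication offset
    @acked = Hash[replica_names.map { |name| [name, 0] }]  # last offset acked per slave
    @waiters = []                                          # blocked WAIT callers
  end

  # Every write sent into the replication stream advances the offset.
  def write(bytes)
    @offset += bytes
  end

  # A client calls WAIT: remember the offset its writes reached.
  def wait(client, numreplicas)
    @waiters << { client: client, target: @offset, need: numreplicas }
  end

  # A slave replied to REPLCONF GETACK with the offset it has processed.
  # Returns the unblocked callers as [client, replicas_reached] pairs.
  def ack(replica, offset)
    @acked[replica] = offset
    ready, @waiters = @waiters.partition { |w| acks_for(w[:target]) >= w[:need] }
    ready.map { |w| [w[:client], acks_for(w[:target])] }
  end

  # On timeout the caller is unblocked anyway, with the count reached so far.
  def timeout(client)
    waiter = @waiters.find { |w| w[:client] == client }
    return nil unless waiter
    @waiters.delete(waiter)
    acks_for(waiter[:target])
  end

  private

  # Number of slaves whose acknowledged offset covers the target offset.
  def acks_for(target)
    @acked.count { |_, acked_offset| acked_offset >= target }
  end
end

master = WaitMaster.new(%w[r1 r2 r3])
master.write(100)
master.wait(:client_a, 2)
p master.ack("r1", 100)      # [] (one slave is not enough yet)
p master.ack("r2", 100)      # [[:client_a, 2]]
master.write(50)
master.wait(:client_b, 3)
master.ack("r1", 150)
p master.timeout(:client_b)  # 1
```

The second caller shows the timeout path: only one slave has acknowledged offset 150, so the reply is 1 rather than the 3 that was asked for.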

Living with the indetermination
===

WAIT does not offer any “transactional” feature to commit a write to N nodes or to none, but it provides information about the degree of durability we achieved with our write, in an easy to use form that does not slow down operations of other clients.

How does this improve consistency in Redis land? Let’s look at the following pseudo code:

    def save_payment(payment_id)
        redis.set(payment_id, "confirmed")
    end

We can imagine that the function save_payment is called every time a user pays for something, and we want to store this information in our database. Now imagine that there are a number of clients processing payments, so the function above gets called again and again.

In Redis 2.6 if there was an issue communicating with the replicas while running the above code, it was impossible to sense the problem in time. The master failing could result in replicas missing a number of writes.

In Redis 2.8 this was improved by providing options to stop accepting writes if there are problems communicating with replicas. Redis can check if there are at least N replicas that appear to be alive (pinging back the master) in this setup. With this option we improved the consistency guarantees a bit: now there is a maximum window during which it is possible to write to a master that is not backed by enough replicas.

With WAIT we can finally considerably improve how safe our writes are, since I can modify the code in the following way:

    def save_payment(payment_id)
        redis.set(payment_id, "confirmed")
        # WAIT for 3 replicas, with a 1000 ms timeout.
        if redis.wait(3, 1000) >= 3 then
            return true
        else
            return false
        end
    end

In the above version of the program we finally gained some information about what happened to the write. Even if we did not actually change the outcome of the write, we are now able to report this information back to the caller.

However, what should we do if wait returns less than 3? Maybe we could try to revert our write by sending redis.del(payment_id)? Or we could try to set the value again in order to succeed the next time?

With the above code we are exposing our system to too much trouble. If at a given moment only two slaves are accepting writes, all the transactions will have to deal with this inconsistency, however it is handled. There is a better thing we can do: modify the code so that it does not actually set a value, but keeps a list of events about the transaction, using Redis lists:

    def save_payment(payment_id)
        redis.rpush(payment_id, "in progress") # Return false on exception
        if redis.wait(3, 1000) >= 3 then
            redis.rpush(payment_id, "confirmed") # Return false on exception
            if redis.wait(3, 1000) >= 3 then
                return true
            else
                # Best-effort attempt to mark the payment as aborted.
                redis.rpush(payment_id, "cancelled")
                return false
            end
        else
            return false
        end
    end

Here we push an “in progress” state into the list for this payment ID before actually confirming it. If we can’t reach enough replicas we abort the payment, and it will not have the “confirmed” element. In this way, if there are only two replicas getting writes, the transactions will fail one after the other. The only clients that will have to deal with inconsistencies are the clients that are able to propagate “in progress” to 3 or more replicas but are not able to do the same with the “confirmed” write. In the above code we try to deal with this issue with a best-effort “cancelled” write; however, there is still the possibility of a race condition:

1) We send “in progress”

2) We send “confirmed”, it only reaches 2 slaves.

3) We send “cancelled” but at this point the master crashed and a failover elected one of the slaves.

So in the above case we returned a failed transaction while actually the “confirmed” state was written.
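To see why this matters to whoever reads the list later, here is a small sketch of how a reader might interpret the events. The helper `payment_status` is hypothetical, and the rule that “cancelled” takes precedence over “confirmed” is an assumption of this sketch, not something the code above enforces.

```ruby
# Classify a payment from its event list (for example the elements
# returned by LRANGE on the payment key).
def payment_status(events)
  return :cancelled   if events.include?("cancelled")
  return :confirmed   if events.include?("confirmed")
  return :in_progress if events.include?("in progress")
  :unknown
end

# Normal aborted payment: the best-effort "cancelled" write arrived.
p payment_status(["in progress", "confirmed", "cancelled"])  # :cancelled

# The race above: "cancelled" never reached the promoted slave, so a
# reader sees a confirmed payment although save_payment returned false.
p payment_status(["in progress", "confirmed"])               # :confirmed
```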

You can do different things to deal better with this kind of issue: mark the transaction as “broken” in a strongly consistent and highly available system like Zookeeper, write a log in the local client, put it in the hands of a message queue that is designed with some redundancy, and so forth.

Synchronous replication and failover: two close friends
===

Synchronous replication is important per se because it means there are N copies of this data around. However, to really exploit the improved data safety, we need to make sure that when a master node fails and a slave is elected, we get the best slave.

The new implementation of Sentinel already elects the slave with the best replication offset available, assuming it publishes its replication offset via INFO (that is, it must be Redis 2.8 or greater), so a good setup can be to run an odd number of Redis nodes, with a Redis Sentinel installed on every node, and use synchronous replication to write to the majority of nodes. As long as the majority of the nodes is available, a Sentinel will be able to win the election and elect a slave with the most updated data.

Redis Cluster is currently not able to elect the slave with the best replication offset, but will be able to do that before the stable release. It is also conceivable that Redis Cluster will have an option to only promote a slave if the majority of replicas for a given hash slot are reachable.

I just scratched the surface of synchronous replication, but I believe that this is a building block that we Redis users will be able to exploit in the future to stretch Redis capabilities to cover new needs for which Redis was traditionally felt to be inadequate.
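The selection rule and the majority argument can be sketched in a few lines of Ruby. The `Slave` struct, the node names, and the offsets are invented for illustration, and the sketch looks only at the replication offset, while a real election may weigh other criteria as well.

```ruby
Slave = Struct.new(:name, :repl_offset)

# Pick the slave that processed the largest part of the replication stream.
def best_slave(slaves)
  slaves.max_by(&:repl_offset)
end

# Hypothetical 5-node setup: 1 master (now failed) plus 4 slaves.
# A write confirmed by "WAIT 2" lives on master + 2 slaves = 3 nodes,
# which is a majority of 5.
slaves = [
  Slave.new("s1", 1500),  # received the last write
  Slave.new("s2", 1500),  # received the last write
  Slave.new("s3", 1420),
  Slave.new("s4", 1380),
]

# Three reachable slaves are needed for a majority of 5 without the master,
# so at least one of the two up-to-date slaves is always among them.
reachable = slaves.reject { |slave| slave.name == "s1" }
puts best_slave(reachable).name  # s2
```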

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

Video Face Swap

使用我們完全免費的人工智慧換臉工具,輕鬆在任何影片中換臉!

熱門文章

熱工具

記事本++7.3.1

好用且免費的程式碼編輯器

SublimeText3漢化版

中文版,非常好用

禪工作室 13.0.1

強大的PHP整合開發環境

Dreamweaver CS6

視覺化網頁開發工具

SublimeText3 Mac版

神級程式碼編輯軟體(SublimeText3)

F5刷新金鑰在Windows 11中不起作用

Mar 14, 2024 pm 01:01 PM

F5刷新金鑰在Windows 11中不起作用

Mar 14, 2024 pm 01:01 PM

您的Windows11/10PC上的F5鍵是否無法正常運作? F5鍵通常用於刷新桌面或資源管理器或重新載入網頁。然而,我們的一些讀者報告說,F5鍵正在刷新他們的計算機,並且無法正常工作。如何在Windows11中啟用F5刷新?要刷新您的WindowsPC,只需按下F5鍵即可。在某些筆記型電腦或桌上型電腦上,您可能需要按下Fn+F5組合鍵才能完成刷新操作。為什麼F5刷新不起作用?如果按下F5鍵無法刷新您的電腦或在Windows11/10上遇到問題,可能是因為功能鍵被鎖定。其他潛在原因包括鍵盤或F5鍵

Java中sleep和wait方法有什麼差別

May 06, 2023 am 09:52 AM

Java中sleep和wait方法有什麼差別

May 06, 2023 am 09:52 AM

一、sleep和wait方法的區別根本區別:sleep是Thread類別中的方法,不會馬上進入運行狀態,wait是Object類別中的方法,一旦一個物件呼叫了wait方法,必須要採用notify()和notifyAll ()方法喚醒該程序釋放同步鎖定:sleep會釋放cpu,但是sleep不會釋放同步鎖定的資源,wait會釋放同步鎖定資源使用範圍:sleep可以在任何地方使用,但wait只能在synchronized的同步方法或是程式碼區塊中使用異常處理:sleep需要捕獲異常,而wait不需要捕獲異常二、wa

win7按f8怎麼一鍵還原電腦系統

Jul 13, 2023 pm 12:17 PM

win7按f8怎麼一鍵還原電腦系統

Jul 13, 2023 pm 12:17 PM

在Windows7中電腦的修復和恢復功能得到了加強和改進,當我們的電腦發生故障或需要恢復備份時,可以透過在啟動時按下F8鍵啟動Windows的“高級啟動選項”,然後進行還原系統,下面就來看看如何操作吧。 1、首先單擊Windows開始圖標,在“搜尋程式和文件”輸入框中鍵入“cmd”,在搜尋結果中用滑鼠右鍵單擊“cmd.exe”,並在彈出的列表中單擊“以管理員身份運作」。 2、然後,在開啟的命令列環境下鍵入“reagentc/info”,並按下“回車”鍵。之後會出現WindowsRE的相關資訊。如

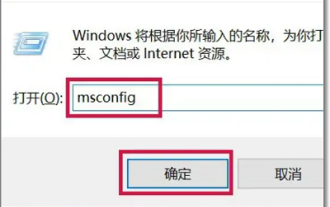

無法使用F8鍵進入win10安全模式

Jan 03, 2024 pm 07:16 PM

無法使用F8鍵進入win10安全模式

Jan 03, 2024 pm 07:16 PM

很多用戶在操作電腦的時候發現按F8卻無法進入到安全模式裡面,我們可以進入群組原則裡面進行修改和調整,具體的方法還是很簡單的,只需要跟著下面的步驟來操作就可以了。 win10按f8無法進入安全模式1、按下win+R,然後輸入“MSConfig”2、點擊上面的“引導”,再點擊“安全引導”3、彈出對話框裡面,你點擊重新啟動就可以進入安全模式了。 4.如果你不進入安全模式的話,那就回去點擊「正常啟動」就好了。

MySQL同步資料Replication如何實現

May 26, 2023 pm 03:22 PM

MySQL同步資料Replication如何實現

May 26, 2023 pm 03:22 PM

MySQL提供了Replication功能,可以實現將一個資料庫的資料同步到多台其他資料庫。前者通常稱之為主庫(master),後者則被稱為從庫(slave)。 MySQL複製過程採用非同步方式,但延遲非常小,秒級同步。一、同步複製資料基本原理1.在主庫上發生的資料變化記錄到二進位日誌Binlog2.從庫的IO線程將主庫的Binlog複製到自己的中繼日誌Relaylog3.從庫的SQL線程通過讀取、重播中繼日誌實作資料複製MySQL的複製有三種模式:StatementLevel、RowLevel、Mi

Java中如何使用wait和notify實現線程間的通信

Apr 22, 2023 pm 12:01 PM

Java中如何使用wait和notify實現線程間的通信

Apr 22, 2023 pm 12:01 PM

一.為什麼需要線程通信線程是並發並行的執行,表現出來是線程隨機執行,但是我們在實際應用中對線程的執行順序是有要求的,這就需要用到線程通信線程通信為什麼不使用優先權來來解決執行緒的運行順序?總的優先權是由執行緒pcb中的優先權資訊和執行緒等待時間共同決定的,所以一般開發中不會依賴優先權來表示執行緒的執行順序看下面這樣的一個場景:麵包房的例子來描述生產者消費者模型有一個麵包房,裡面有麵包師傅和顧客,對應我們的生產者和消費者,而麵包房有一個庫存用來存放麵包,當庫存滿了之後就不在生產,同時消費者也在購買麵包,當

深入理解Java多執行緒程式設計:進階應用wait和notify方法

Dec 20, 2023 am 08:10 AM

深入理解Java多執行緒程式設計:進階應用wait和notify方法

Dec 20, 2023 am 08:10 AM

Java中的多執行緒程式設計:掌握wait和notify的高階用法引言:多執行緒程式設計是Java開發中常見的技術,面對複雜的業務處理和效能最佳化需求,合理利用多執行緒可以大幅提高程式的運作效率。在多執行緒程式設計中,wait和notify是兩個重要的關鍵字,用來實現執行緒間的協調和通訊。本文將介紹wait和notify的高階用法,並提供具體的程式碼範例,以幫助讀者更好地理解和應用

探究Java中物件方法wait和notify的內部實作機制

Dec 20, 2023 pm 12:47 PM

探究Java中物件方法wait和notify的內部實作機制

Dec 20, 2023 pm 12:47 PM

深入理解Java中的物件方法:wait和notify的底層實作原理,需要具體程式碼範例Java中的物件方法wait和notify是用於實作執行緒間通訊的關鍵方法,它們的底層實作原理涉及Java虛擬機的監視器機制。本文將深入探討這兩種方法的底層實作原理,並提供具體的程式碼範例。首先,我們來了解wait和notify的基本用途。 wait方法的作用是使目前執行緒釋放對象