作者| 康乃爾大學杜沅豈

編輯| ScienceAI

隨著AI for Science 受到越來越多的關注,人們更加關心AI 如何解決一系列科學問題並且可以被成功借鑒到其他相近的領域。

AI 與小分子藥物發現是其中一個非常有代表性和很早被探索的領域。分子發現是一個非常困難的組合優化問題(由於分子結構的離散性)並且搜索空間非常龐大與崎嶇,同時驗證搜索到的分子屬性又十分困難,通常需要昂貴的實驗,至少是至少是模擬計算、量子化學的方法來提供回饋。

隨著機器學習的高速發展和得益於早期的探索(包括構建了簡單可用的優化目標與效果衡量方法),大量的算法被研發,包括組合優化,搜索,採樣算法(遺傳算法、蒙特卡洛樹搜尋、強化學習、生成流模型/GFlowNet,馬可夫鏈蒙特卡洛等),與連續最佳化演算法,貝葉斯最佳化,基於梯度的最佳化等。同時現有較完整的演算法衡量基準,較客觀且公平的比較方式,也為開發機器學習演算法開拓了廣闊的空間。

近日,康乃爾大學、劍橋大學和洛桑聯邦理工學院(EPFL)的研究人員在《Nature Machine Intelligence》發表了題為《Machine learning-aided generative molecular design》的綜述文章。

論文連結:https://www.nature.com/articles/s42256-024-00843-5

這篇綜述回顧了機器學習在生成式分子設計中的應用。藥物發現和開發需要優化分子以滿足特定的理化性質和生物活性。然而,由於搜尋空間巨大且最佳化函數不連續,傳統方法既昂貴又容易失敗。機器學習透過結合分子生成和篩選步驟,進而加速早期藥物發現過程。

圖示:生成式 ML 輔助分子設計流程。

生成性分子設計任務

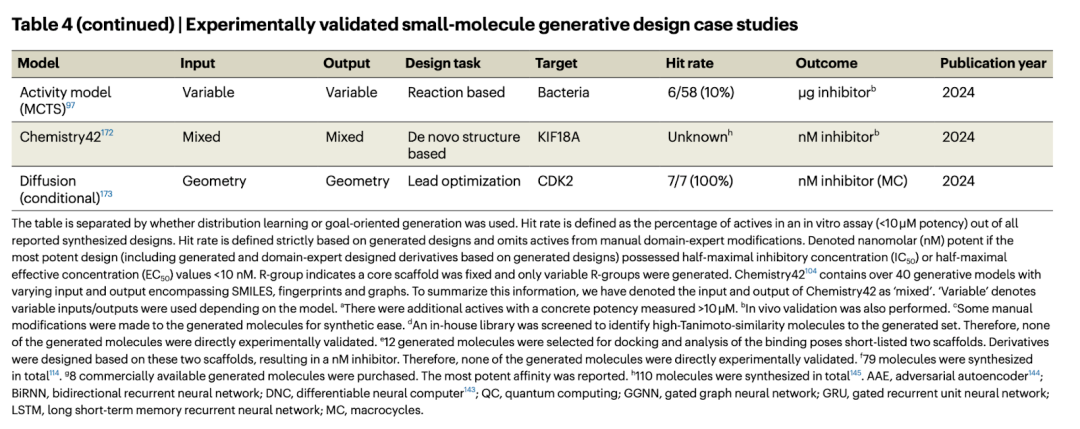

生成性分子設計可分為兩大範式:分佈學習和目標導向生成,其中目標導向生成可以進一步分為條件生成和分子優化。每種方法的適用性取決於特定任務和所涉及的資料。

分佈學習 (distribution learning)

條件產生 (conditional generation)

分子優化 (molecule optimization)

Illustrations: Illustrations of generation tasks, generation strategies, and molecular characterization.

Molecular generation process

Molecular generation is a complex process including many different combination units. We list the representative work in the figure below and introduce the representative units of each part.

Molecular Representation

When developing molecularly generated neural architectures, it is first necessary to determine machine-readable input and output representations of the molecular structure. The input representation helps inject appropriate inductive biases into the model, while the output representation determines the optimized search space for the molecule. The representation type determines the applicability of the generation method, for example, discrete search algorithms can only be applied to combinatorial representations such as graphs and strings.

While various input representations have been studied, the trade-offs between representation types and the neural architectures that encode them are not yet clear. Representation transformations between molecules are not necessarily bijective; for example, density maps and fingerprints cannot uniquely identify molecules, and further techniques are needed to solve this non-trivial mapping problem. Common molecular representations include strings, two-dimensional topological graphs, and three-dimensional geometric graphs.

Representation granularity is another consideration in generative model design. Typically, methods utilize atoms or molecular fragments as basic building blocks during generation. Fragment-based representation refines molecular structures into larger units containing groups of atoms, carrying hierarchical information such as functional group identification, thereby aligning with traditional fragment-based or pharmacophore drug design approaches.

Generative methods

Deep generative models are a class of methods that estimate the probability distribution of data and sample from a learning distribution (also called distribution learning). These include variational autoencoders, generative adversarial networks, normalizing flows, autoregressive models, and diffusion models. Each of these generation methods has its use cases, pros and cons, and the choice depends on the required task and data characteristics.

Generation strategy

Generation strategy refers to the way the model outputs the molecular structure, which can generally be divided into one-time generation, sequential generation or iterative improvement.

One-shot generation: One-shot generation generates the complete molecular structure in a single forward pass of the model. This approach often struggles to generate realistic and reasonable molecular structures with high accuracy. Furthermore, one-shot generation often cannot satisfy explicit constraints, such as valence constraints, which are crucial to ensure the accuracy and validity of the generated structure.

Sequential Generation: Sequential generation builds a molecular structure through a series of steps, usually by atoms or fragments. Valence constraints can be easily injected into sequential generation, thereby improving the quality of the generated molecules. However, the main limitation of sequential generation is that the order of generated trajectories needs to be defined during training and is slower in inference.

Iterative improvement: Iterative improvement adjusts the prediction by predicting a series of updates, circumventing the difficulties in one-shot generation methods. For example, the cyclic structure module in AlphaFold2 successfully refined the backbone framework, an approach that inspired related molecule generation strategies. Diffusion modeling is a common technique that generates new data through a series of noise reduction steps. Currently, diffusion models have been applied to a variety of molecule generation problems, including conformational generation, structure-based drug design, and linker design.

Optimization strategy

Combination optimization: For the combinatorial encoding of molecules (pictures or strings), techniques in the field of combinatorial optimization can be directly applied.

Continuous Optimization: Molecules can be represented or encoded in continuous domains, such as point clouds and geometric maps in Euclidean space, or deep generative models that encode discrete data in continuous latent space.

Evaluation of Generative Machine Learning Models

Evaluating generative models requires computational evaluation and experimental verification. Standard metrics include effectiveness, uniqueness, novelty, etc. Multiple metrics should be considered when evaluating a model to fully assess build performance.

Experimental verification

The generated molecules must be explicitly verified through wet experiments, in contrast to existing research that focuses primarily on computational contributions. While generative models are not without weaknesses, the disconnect between predictions and experiments is also due to the expertise, expense, and lengthy testing cycles required to conduct such validations.

Generating model laws

Most studies reporting experimental validation use RNN and/or VAE, with SMILES as the operating object. We summarize four main observations:

Future Directions

Although machine learning algorithms have brought hope to small molecule drug discovery, there are still more challenges and opportunities to face.

Challenge

Opportunity

Author: Du Yuanqi, a second-year doctoral student in the Department of Computer Science at Cornell University. His main research interests include geometric deep learning, probabilistic models, sampling, search, optimization problems, interpretability, and applications in the field of molecular exploration. For specific information, please see: https://yuanqidu.github.io/.

以上是AI小分子藥物發現的「百科全書」,康乃爾、劍橋、EPFL等研究者綜述登Nature子刊的詳細內容。更多資訊請關注PHP中文網其他相關文章!