TPAMI 2024 | ProCo: Long-Tailed Contrastive Learning with Infinite Contrastive Pairs

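AIxiv is the column through which this site publishes academic and technical content. Over the past few years, the AIxiv column has received and reported on more than 2,000 pieces of work from top laboratories at major universities and companies worldwide, effectively promoting academic exchange and dissemination. If you have excellent work to share, you are welcome to submit it or contact us for coverage. Submission emails: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com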

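The first author of this paper, Chaoqun Du, is a direct-entry PhD student (class of 2020) in the Department of Automation at Tsinghua University, advised by Associate Professor Gao Huang. He previously received a Bachelor of Science from the Department of Physics at Tsinghua University. His research focuses on model generalization and robustness under different data distributions, such as long-tailed learning, semi-supervised learning and transfer learning, and he has published several papers in leading international journals and conferences such as TPAMI and ICML.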

Personal homepage: https://andy-du20.github.io

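This article introduces a paper from Tsinghua University on long-tailed visual recognition: Probabilistic Contrastive Learning for Long-Tailed Visual Recognition. The paper has been accepted by TPAMI 2024, and the code is open source.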

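The work focuses on applying contrastive learning to long-tailed visual recognition. It proposes ProCo, a new long-tailed contrastive learning method that modifies the contrastive loss so that contrastive learning can use an infinite number of contrastive pairs, effectively removing the inherent dependence of supervised contrastive learning [1] on the batch (memory bank) size. Beyond long-tailed visual classification, the method was also evaluated on long-tailed semi-supervised learning, long-tailed object detection and balanced datasets, with significant performance gains.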

Paper link: https://arxiv.org/pdf/2403.06726


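Project link: https://githubo.com/LeapLabTH

The success of contrastive learning in self-supervised learning demonstrates its effectiveness for learning visual representations. A core factor affecting the performance of contrastive learning is the number of contrastive pairs, which lets the model learn from more negative samples; in the two most representative methods, SimCLR [2] and MoCo [3], it corresponds to the batch size and the memory bank size, respectively. In long-tailed visual recognition, however, class imbalance causes the gain from adding contrastive pairs to diminish sharply, because most contrastive pairs are formed from head-class samples and rarely cover the tail classes. For example, on the long-tailed ImageNet dataset, with the common batch (memory bank) sizes of 4096 and 8192, on average 212 and 89 classes, respectively, have fewer than one sample per batch (memory bank).

The core idea of ProCo is therefore: on a long-tailed dataset, model the distribution of each class, estimate its parameters, and sample from it to construct contrastive pairs, which guarantees that every class is covered. Furthermore, as the number of samples tends to infinity, the expected contrastive loss can be derived rigorously in closed form and used directly as the optimization objective, which avoids inefficient sampling of contrastive pairs and enables contrastive learning with an infinite number of contrastive pairs. Realizing this idea, however, raises several difficulties: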

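- How to model the distribution of each class.
- How to estimate the distribution parameters efficiently, especially for tail classes with few samples.
- How to ensure that a closed-form expression for the expected contrastive loss exists and is computable.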

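In fact, all of these problems can be addressed with a single probabilistic model: choose a simple and effective probability distribution to model the features, so that its parameters can be estimated efficiently by maximum likelihood and the expected contrastive loss can be computed in closed form.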

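Since contrastive learning features lie on the unit hypersphere, a feasible choice is to model them with the von Mises-Fisher (vMF) distribution on the sphere (roughly the spherical analogue of a normal distribution). The maximum likelihood estimate of the vMF parameters has an approximate closed form that depends only on the first-order moment of the features, so the parameters can be estimated efficiently and the expected contrastive loss can be derived rigorously, enabling contrastive learning with an infinite number of contrastive pairs.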

Figure 1: ProCo estimates the distribution of each class from the features of different batches. By sampling an infinite number of samples, it obtains the closed-form expected contrastive loss, effectively eliminating the inherent dependence of supervised contrastive learning on the batch size (memory bank size).

Details of the method

The ProCo method is described below in four parts: distribution assumption, parameter estimation, optimization objective and theoretical analysis.

Distribution Assumption

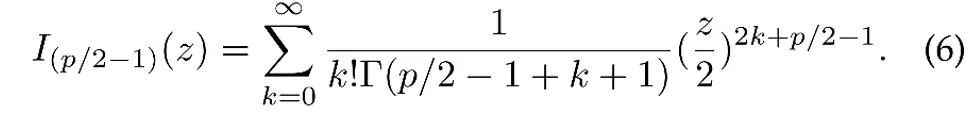

As mentioned above, features in contrastive learning are constrained to the unit hypersphere. It can therefore be assumed that they follow a von Mises-Fisher (vMF) distribution, whose probability density function is:

$$f_p(z;\mu,\kappa) = C_p(\kappa)\, e^{\kappa \mu^\top z}, \qquad C_p(\kappa) = \frac{\kappa^{p/2-1}}{(2\pi)^{p/2}\, I_{p/2-1}(\kappa)}$$

where z is a p-dimensional unit feature vector, I_{p/2-1} is the modified Bessel function of the first kind of order p/2-1, μ is the mean direction of the distribution, and κ is the concentration parameter, which controls how concentrated the distribution is: the larger κ, the more tightly samples cluster around the mean direction; when κ = 0, the vMF distribution degenerates into the uniform distribution on the sphere.
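To make the normalizing constant concrete, here is a minimal Python sketch (standard library only) that evaluates log C_p(κ) for the vMF density above. Evaluating the Bessel function by its power series, and the helper names `log_bessel_iv`, `vmf_log_normalizer` and `vmf_log_density`, are illustrative choices of ours, not part of the ProCo code; for very large κ in high dimensions, a dedicated routine such as `scipy.special.ive` would be more robust.

```python
import math


def log_bessel_iv(nu: float, x: float) -> float:
    """log I_nu(x) for x > 0 and nu > -1, from the power series
    I_nu(x) = sum_k (x/2)^(2k+nu) / (k! * Gamma(k+nu+1)), accumulated in log space."""
    n_terms = int(x) + 60  # terms peak around k ~ x/2 (or earlier) and shrink quickly after
    half_log = math.log(x / 2.0)
    logs = [(2 * k + nu) * half_log - math.lgamma(k + 1) - math.lgamma(k + nu + 1)
            for k in range(n_terms)]
    top = max(logs)
    return top + math.log(sum(math.exp(t - top) for t in logs))


def vmf_log_normalizer(p: int, kappa: float) -> float:
    """log C_p(kappa), where C_p(kappa) = kappa^(p/2-1) / ((2*pi)^(p/2) * I_{p/2-1}(kappa))."""
    if kappa == 0.0:
        # kappa -> 0 limit: uniform density 1 / area(S^{p-1}), area = 2 * pi^(p/2) / Gamma(p/2)
        return math.lgamma(p / 2) - math.log(2.0) - (p / 2) * math.log(math.pi)
    nu = p / 2 - 1
    return nu * math.log(kappa) - (p / 2) * math.log(2.0 * math.pi) - log_bessel_iv(nu, kappa)


def vmf_log_density(z, mu, kappa):
    """log f_p(z; mu, kappa) = log C_p(kappa) + kappa * mu^T z for unit vectors z and mu."""
    dot = sum(m * zi for m, zi in zip(mu, z))
    return vmf_log_normalizer(len(z), kappa) + kappa * dot


if __name__ == "__main__":
    print(vmf_log_normalizer(3, 2.0))
    print(vmf_log_density((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), 2.0))
```

For p = 3 the result can be checked against the closed form C_3(κ) = κ / (4π sinh κ), and at κ = 0 it reduces to the uniform density 1/(4π), matching the degenerate case described above.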

Parameter estimation

Based on this assumption, the overall distribution of the data features is a mixture of vMF distributions, with one vMF component per class:

$$P(z) = \sum_{y=1}^{K} \pi_y\, f_p(z;\mu_y,\kappa_y)$$

where K is the number of classes and the parameter π_y is the prior probability of class y, corresponding to its frequency in the training set. The mean direction μ_y and concentration parameter κ_y of each class's feature distribution are estimated by maximum likelihood.
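The mixture model can be sketched as follows. To keep the example short, it assumes p = 3, where C_3(κ) = κ / (4π sinh κ) has a closed form, and it takes the priors π_y as empirical label frequencies, as described above. All function names are hypothetical.

```python
import math
from collections import Counter


def log_c3(kappa: float) -> float:
    """log C_3(kappa) for the vMF density C_3(kappa) * exp(kappa * mu.z) on S^2 (p = 3 only)."""
    if kappa == 0.0:
        return -math.log(4.0 * math.pi)  # uniform density on the sphere
    log_sinh = kappa + math.log1p(-math.exp(-2.0 * kappa)) - math.log(2.0)  # stable log(sinh)
    return math.log(kappa) - math.log(4.0 * math.pi) - log_sinh


def class_priors(labels):
    """pi_y = frequency of class y among the training labels."""
    counts = Counter(labels)
    n = len(labels)
    return {y: c / n for y, c in counts.items()}


def mixture_log_density(z, priors, mus, kappas):
    """log sum_y pi_y * f_3(z; mu_y, kappa_y), evaluated with log-sum-exp."""
    terms = []
    for y, pi_y in priors.items():
        dot = sum(m * zi for m, zi in zip(mus[y], z))
        terms.append(math.log(pi_y) + log_c3(kappas[y]) + kappas[y] * dot)
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))


if __name__ == "__main__":
    priors = class_priors([0, 0, 0, 1])  # {0: 0.75, 1: 0.25}
    mus = {0: (0.0, 0.0, 1.0), 1: (1.0, 0.0, 0.0)}
    kappas = {0: 4.0, 1: 2.0}
    print(mixture_log_density((0.6, 0.0, 0.8), priors, mus, kappas))
```

Working in log space with log-sum-exp keeps the evaluation stable when κ is large, where the individual component densities would otherwise overflow or underflow.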

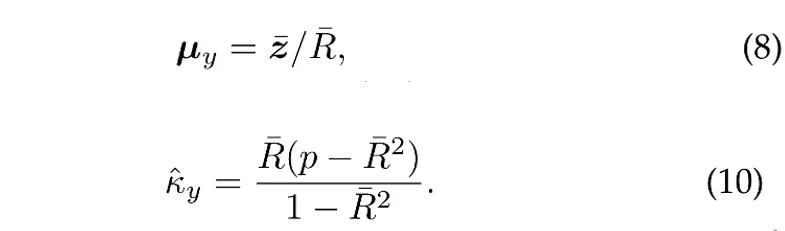

Assuming that N independent unit vectors z_1, ..., z_N are sampled from the vMF distribution of class y, the (approximate) maximum likelihood estimates [4] of the mean direction and concentration parameter satisfy:

$$\hat{\mu}_y = \frac{\bar{z}}{\bar{R}}, \qquad A_p(\hat{\kappa}_y) = \frac{I_{p/2}(\hat{\kappa}_y)}{I_{p/2-1}(\hat{\kappa}_y)} = \bar{R}, \qquad \hat{\kappa}_y \approx \frac{\bar{R}\,(p - \bar{R}^2)}{1 - \bar{R}^2}$$

where z̄ = (1/N) Σ_i z_i is the sample mean and R̄ = ‖z̄‖₂ is its norm. In addition, to make use of historical samples, ProCo estimates these statistics online, which allows the parameters of tail classes to be estimated effectively.
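The estimation step can be sketched in a few lines. This is a minimal illustration, assuming that the online estimate is a count-weighted running mean of each class's unit features (the paper's exact online scheme may differ), followed by the approximate closed-form estimates of μ_y and κ_y given above. `OnlineFirstMoment` and `vmf_params_from_mean` are hypothetical names.

```python
import math


class OnlineFirstMoment:
    """Running (count-weighted) mean of one class's unit feature vectors.

    Hypothetical helper: assumes a plain running-mean update of the first moment.
    """

    def __init__(self, dim: int):
        self.count = 0
        self.mean = [0.0] * dim

    def update(self, batch):
        """Merge the features of this class found in the current batch."""
        m = len(batch)
        if m == 0:
            return  # class absent from this batch: keep the historical estimate
        batch_mean = [sum(v[d] for v in batch) / m for d in range(len(self.mean))]
        total = self.count + m
        self.mean = [(self.count * old + m * new) / total
                     for old, new in zip(self.mean, batch_mean)]
        self.count = total


def vmf_params_from_mean(z_bar):
    """Approximate MLE: mu = z_bar / R_bar, kappa ~ R_bar * (p - R_bar^2) / (1 - R_bar^2)."""
    p = len(z_bar)
    r_bar = math.sqrt(sum(c * c for c in z_bar))
    if not 0.0 < r_bar < 1.0:
        raise ValueError("the kappa approximation needs 0 < R_bar < 1")
    mu = [c / r_bar for c in z_bar]
    kappa = r_bar * (p - r_bar ** 2) / (1.0 - r_bar ** 2)
    return mu, kappa


if __name__ == "__main__":
    est = OnlineFirstMoment(dim=3)
    est.update([[1.0, 0.0, 0.0]])
    est.update([])                 # tail class missing from this batch
    est.update([[0.0, 1.0, 0.0]])
    print(est.mean, vmf_params_from_mean(est.mean))
```

Because only the running mean and a sample count are stored per class, a tail class keeps accumulating statistics across iterations instead of depending on the few samples that happen to appear in a single batch.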

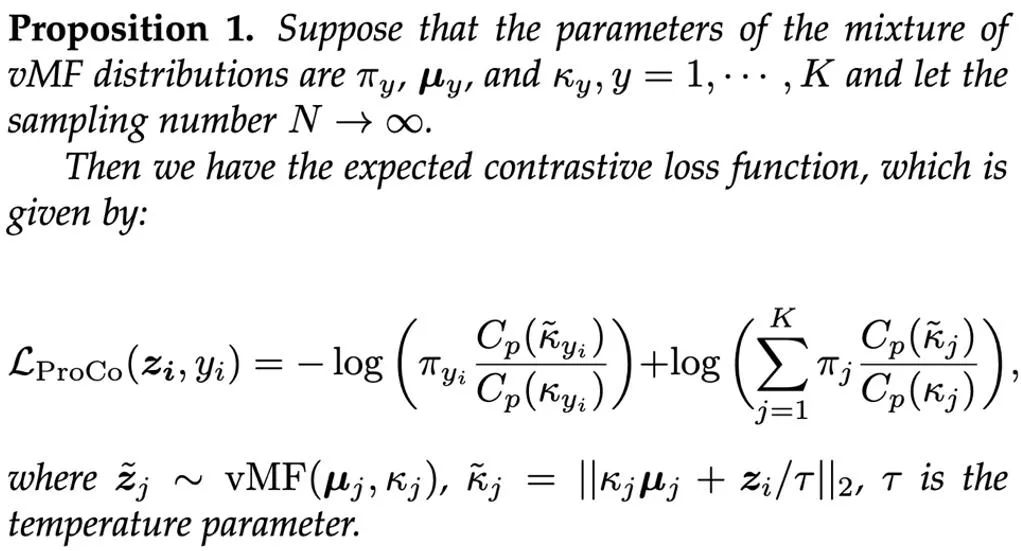

Optimization objective

Given the estimated parameters, a straightforward approach is to sample from the vMF mixture to construct contrastive pairs. However, drawing a large number of samples from vMF distributions at every training iteration is inefficient. This work therefore extends the number of samples to infinity in theory, rigorously derives the closed-form expression of the expected contrastive loss, and uses it directly as the optimization objective.
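The key identity behind such a closed-form objective is that, for z' drawn from vMF(μ, κ), E[exp(zᵀz'/τ)] = C_p(κ) / C_p(κ̃) with κ̃ = ‖κμ + z/τ‖₂; it follows from folding z/τ into the vMF exponent and integrating the resulting unnormalized vMF density. The sketch below checks this numerically in the p = 3 case, where C_3(κ) = κ / (4π sinh κ). It assumes μ = e₃ so that exact samples can be drawn by inverting the CDF of the third coordinate. It illustrates the expectation only, not ProCo's full loss, and the function names are hypothetical.

```python
import math
import random


def log_sinh(x: float) -> float:
    """Numerically stable log(sinh(x)) for x > 0."""
    return x + math.log1p(-math.exp(-2.0 * x)) - math.log(2.0)


def closed_form_expectation(a, mu, kappa):
    """E[exp(a . z')] for z' ~ vMF(mu, kappa) on S^2, computed as C_3(kappa) / C_3(kappa_tilde).

    With C_3(k) = k / (4*pi*sinh k), the ratio is (kappa / sinh kappa) * (sinh kt / kt),
    where kt = ||kappa * mu + a||.
    """
    v = [kappa * m + ai for m, ai in zip(mu, a)]
    kappa_tilde = math.sqrt(sum(c * c for c in v))
    log_ratio = (math.log(kappa) - log_sinh(kappa)) - (math.log(kappa_tilde) - log_sinh(kappa_tilde))
    return math.exp(log_ratio)


def sample_vmf_e3(kappa, rng):
    """Draw one exact sample from vMF(e3, kappa) on S^2 (mean direction fixed to e3)."""
    u = rng.random()
    # Inverse CDF of w = z'_3, whose density on [-1, 1] is proportional to exp(kappa * w).
    w = 1.0 + math.log(u + (1.0 - u) * math.exp(-2.0 * kappa)) / kappa
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - w * w))
    return (r * math.cos(phi), r * math.sin(phi), w)


def monte_carlo_expectation(a, kappa, n, seed=0):
    """Estimate E[exp(a . z')] by averaging over n samples z' ~ vMF(e3, kappa)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        z = sample_vmf_e3(kappa, rng)
        total += math.exp(sum(ai * zi for ai, zi in zip(a, z)))
    return total / n


if __name__ == "__main__":
    tau = 0.5
    anchor = (0.6, 0.0, 0.8)               # unit-norm anchor feature z
    a = tuple(c / tau for c in anchor)     # a = z / tau
    e3 = (0.0, 0.0, 1.0)
    print(closed_form_expectation(a, e3, 5.0))
    print(monte_carlo_expectation(a, 5.0, 100_000))
```

The two printed values should agree up to Monte Carlo error, and the closed form costs a single norm and a few logarithms instead of many sampled pairs. In ProCo, class-wise expectations of this kind, together with the priors π_y, take the place of sums over sampled contrastive pairs; the exact form of the resulting loss is given in the paper.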

By introducing an additional feature branch (representation learning based on this optimization goal) during the training process, this branch can be trained together with the classification branch and will not increase since only the classification branch is needed during inference Additional computational cost. The weighted sum of the losses of the two branches is used as the final optimization goal, and α=1 is set in the experiment. Finally, the overall process of the ProCo algorithm is as follows: Theoretical analysis

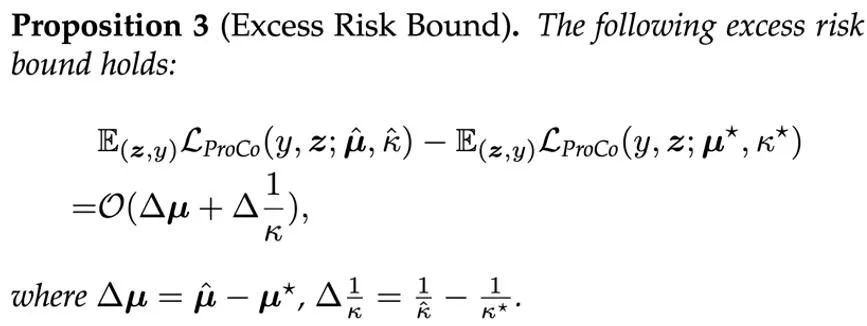

In order to further analyze the To theoretically verify the effectiveness of the ProCo method, the researchers analyzed its generalization error bound and excess risk bound. To simplify the analysis, it is assumed here that there are only two categories, namely y∈{-1,+1}. The analysis shows that the generalization error bound is mainly controlled by the number of training samples and the variance of the data distribution. This finding is consistent with The theoretical analysis of related work [6][7] is consistent, ensuring that ProCo loss does not introduce additional factors and does not increase the generalization error bound, which theoretically guarantees the effectiveness of this method.

Furthermore, the method relies on certain assumptions about the feature distribution and on parameter estimates. To evaluate how these affect model performance, the researchers also analyzed the excess risk bound of the ProCo loss, which measures the gap between the expected risk using the estimated parameters and the Bayes-optimal risk, i.e., the expected risk under the true distribution parameters.

The analysis shows that the excess risk of the ProCo loss is mainly controlled by the first-order term of the parameter estimation error.

Experimental results

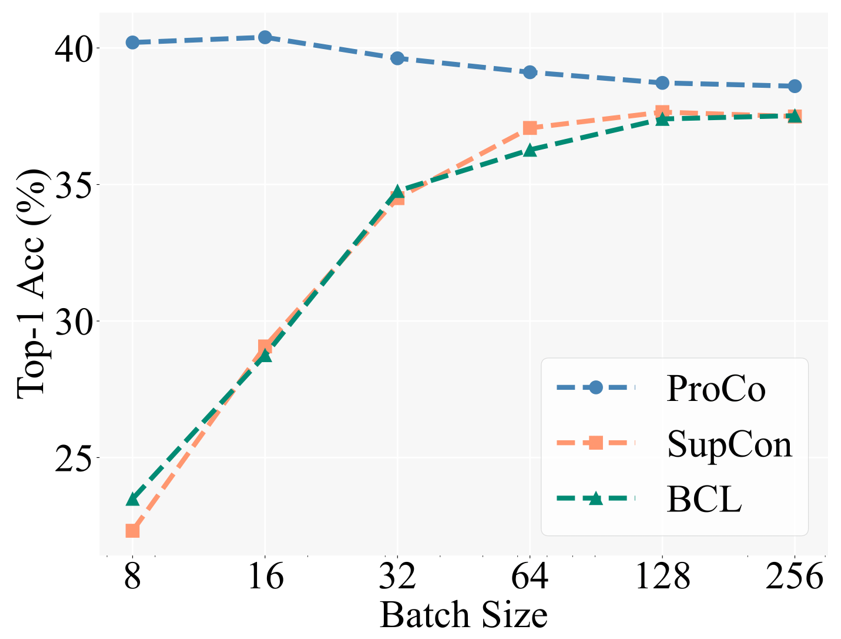

To verify the core motivation, the researchers first compared different contrastive learning methods under different batch sizes. The baselines include Balanced Contrastive Learning (BCL) [5], another SCL-based method improved for long-tailed recognition. The experimental setup follows the two-stage training strategy of Supervised Contrastive Learning (SCL): the representation is first trained with the contrastive loss only, and then a linear classifier is trained on the frozen backbone for testing.

The figure shows the results on CIFAR100-LT with imbalance factor 100 (IF100). The performance of BCL and SupCon is clearly limited by the batch size, whereas ProCo, by introducing the feature distribution of each class, effectively removes SupCon's dependence on the batch size and achieves the best performance at every batch size.

The researchers also ran experiments on long-tailed recognition, long-tailed semi-supervised learning, long-tailed object detection and balanced datasets. Here we mainly present the results on the large-scale long-tailed datasets ImageNet-LT and iNaturalist2018. First, under a 90-epoch training schedule, ProCo improves over similar methods that enhance contrastive learning by at least 1% on both datasets and with both backbones.

Further results show that ProCo also benefits from a longer training schedule. Under a 400-epoch schedule, ProCo achieves SOTA performance on iNaturalist2018, and the researchers also verified that it can be combined with other non-contrastive-learning methods, such as distillation (NCL).

References

- [2] "A simple framework for contrastive learning of visual representations," in International Conference on Machine Learning (ICML), PMLR, 2020.
- [4] S. Sra, "A short note on parameter approximation for von Mises-Fisher distributions: and a fast implementation of I_s(x)," Computational Statistics, 2012.
- [5] J. Zhu, et al., "Balanced contrastive learning for long-tailed visual recognition," in CVPR, 2022.
- [6] W. Jitkrittum, et al., "ELM: Embedding and logit margins for long-tail learning," arXiv preprint, 2022.
- [7] A. K. Menon, et al., "Long-tail learning via logit adjustment," in ICLR, 2021.
