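ICML 2024 | Human-object interaction images now understand your prompts better: Peking University proposes a semantic-aware human-object interaction image generation framework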

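AIxiv is this site's column for academic and technical content. Over the past several years, the AIxiv column has received and covered more than 2,000 pieces of work from top laboratories at universities and companies around the world, effectively promoting academic exchange and dissemination. If you have excellent work to share, you are welcome to submit it or contact us for coverage. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com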

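Human-object interaction (HOI) image generation means producing images that satisfy a text description and depict a person interacting with an object, with the image as realistic and as semantically faithful as possible. Text-to-image models have made remarkable progress in generating realistic images in recent years, but they still struggle to produce high-fidelity images whose main content is a human-object interaction. The difficulty comes from two sources. First, the complexity and diversity of human poses make it hard to generate plausible people. Second, unreliable generation in the interaction boundary region (the region richest in interaction semantics) can leave the interaction semantics under-expressed.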

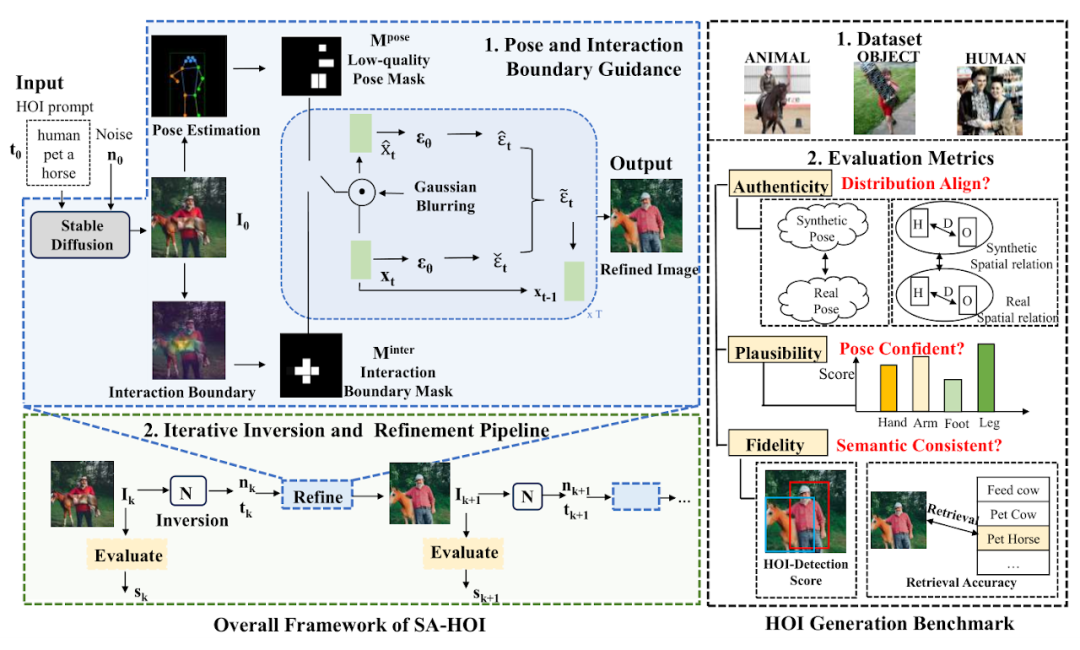

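To address these problems, a research team from Peking University proposes a pose- and interaction-aware HOI image generation framework (SA-HOI). It uses the generation quality of the human pose and information about the interaction boundary region to guide the denoising process, producing more plausible and more realistic HOI images. To evaluate the quality of generated images thoroughly, the team also proposes a comprehensive HOI image generation benchmark.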

Paper link: https://proceedings.mlr.press/v235/xu24e.html

Project homepage: https://sites.html

Source code: https://github.com/XZPKU/SA-HOI-

Lab homepage: http://www.wict.pku.edu.cn/mipl

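SA-HOI is a semantic-aware HOI image generation method. It improves the overall quality of generated HOI images and reduces generation artifacts from two angles: human pose and interaction semantics. By incorporating image inversion, it forms an iterative inversion and refinement pipeline that lets the generated image correct itself step by step and improve in quality.

In the paper, the team also proposes the first HOI image generation benchmark covering human-object, human-animal, and human-human interactions, and designs evaluation metrics specifically for HOI image generation. Extensive experiments show that the method outperforms existing diffusion-based image generation methods on both the HOI-specific metrics and conventional image generation metrics.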

Method

The method proposed in the paper is shown in Figure 1 and consists of two main designs: Pose and Interaction Guidance (PIG) and the Iterative Inversion and Refinement Pipeline (IIR).

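In PIG, given an HOI text description and a noise input, Stable Diffusion [2] first generates an initial image. A pose detector [3] then extracts the human joint positions and their confidence scores, from which a pose mask is built to highlight regions of low pose quality.

For interaction guidance, a segmentation model locates the interaction boundary region, yielding keypoints and their confidence scores. The interaction region is highlighted in an interaction mask to strengthen the semantics expressed at the interaction boundary. At every denoising step, the pose mask and the interaction mask act as constraints for refining the highlighted regions, which reduces the generation artifacts in those regions. In addition, IIR uses an image inversion model N to extract the noise n and the text-description embedding t from an image that needs further refinement, applies PIG to refine that image again, and scores the refined image with a quality evaluator Q, repeating this cycle to improve image quality step by step.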

姿態與互動指導

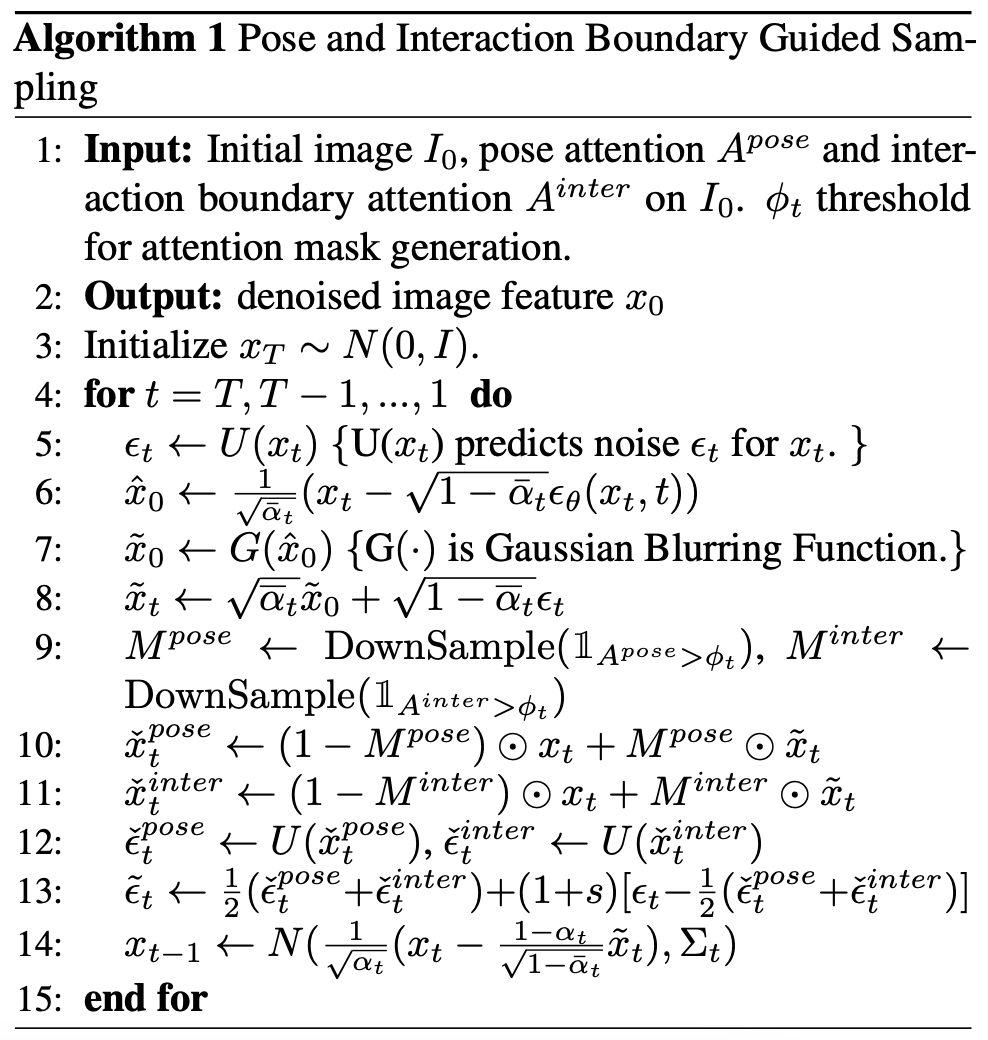

圖 2:姿勢與互動指導取樣偽代碼

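Figure 2 gives the pseudocode for pose- and interaction-guided sampling. At each denoising step, the predicted noise ϵt and an intermediate reconstruction are first obtained following the design of Stable Diffusion. A Gaussian blur G is then applied to the intermediate reconstruction to obtain degraded latent features, and the information in those latent features is fed back into the denoising process.

The pose and interaction masks are used to produce these degraded latent features, in which regions of low pose quality are emphasized, guiding the model to reduce distorted generation in those regions. To steer the model toward improving low-quality regions, regions with low pose scores are highlighted with a Gaussian attention centered on each joint, where x and y are per-pixel image coordinates, H and W are the image size, and σ is the variance of the Gaussian distribution. Combining the attention of all joints forms the final attention map, which a threshold then converts into a mask.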
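As a rough illustration of the pose attention and mask described above, the NumPy sketch below places a Gaussian on each joint, combines them, and thresholds the result. The function names, the `(1 - score)` weighting, and the use of a per-pixel maximum to combine joints are assumptions made for this sketch; the paper's exact formula may differ.

```python
import numpy as np

def pose_attention(joints, scores, height, width, sigma=8.0):
    """Combine per-joint Gaussian attention maps into one (H, W) map.

    joints: iterable of (x, y) pixel coordinates, one per joint.
    scores: detector confidences in [0, 1], one per joint.
    sigma:  standard deviation of each Gaussian (sigma**2 is the variance).
    Assumption: each Gaussian is weighted by (1 - score) so low-confidence
    joints stand out, and joints are combined with a per-pixel maximum.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    attention = np.zeros((height, width), dtype=np.float64)
    for (jx, jy), s in zip(joints, scores):
        dist2 = (xs - jx) ** 2 + (ys - jy) ** 2
        gauss = np.exp(-dist2 / (2.0 * sigma ** 2))
        attention = np.maximum(attention, (1.0 - s) * gauss)
    return attention

def attention_to_mask(attention, threshold):
    """Binarize the attention map; `threshold` plays the role of the
    per-time-step threshold mentioned in the text."""
    return attention > threshold
```

For example, a joint with confidence 0.2 yields a peak attention of 0.8 at its location, so it survives a threshold of 0.5, while a joint with confidence 0.9 does not.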

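ϕt denotes the threshold used to generate the mask at time step t. Similarly, for interaction guidance, the authors use a segmentation model to obtain the object's outer contour points O and the human joint points C, compute the distance matrix D between the person and the object, and sample the keypoints of the interaction boundary from it. The interaction attention and mask are then produced in the same way as for pose guidance and applied when computing the final predicted noise.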
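The distance-matrix step can be sketched as follows. The text does not state how keypoints are sampled from D, so the rule used here (take the k closest joint/contour pairs and use their midpoints) is purely an assumption for illustration, as are the function and parameter names.

```python
import numpy as np

def interaction_keypoints(contour, joints, k=3):
    """Return the joint-to-contour distance matrix and k boundary keypoints.

    contour: (M, 2) object outer-contour points O as (x, y).
    joints:  (K, 2) human joint points C as (x, y).
    Assumed sampling rule: midpoints of the k closest (joint, contour) pairs.
    """
    contour = np.asarray(contour, dtype=np.float64)
    joints = np.asarray(joints, dtype=np.float64)
    # dist[i, j] is the Euclidean distance between joint i and contour point j.
    diff = joints[:, None, :] - contour[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    # Indices of the k smallest entries of the flattened distance matrix.
    flat = np.argsort(dist, axis=None)[:k]
    joint_idx, contour_idx = np.unravel_index(flat, dist.shape)
    keypoints = (joints[joint_idx] + contour[contour_idx]) / 2.0
    return dist, keypoints
```

The resulting keypoints could then be passed through the same Gaussian-attention-and-threshold procedure used for pose guidance.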

Iterative Inversion and Refinement Pipeline

To assess the quality of generated images on the fly, the authors introduce a quality evaluator Q to guide the iteration. For the image from round k, Q is used to obtain its quality score, and the image for the next round is then generated from the current one. To preserve the main content of the image after refinement, the corresponding noise is needed as the starting point for denoising.

Such noise is not readily available, so the image inversion method N is introduced to obtain the image's noise latent and text embedding. These are fed to PIG to generate the refined result.

Comparing the quality scores of consecutive rounds determines whether to continue. When the difference between the two scores falls below the threshold θ, the process is considered to have corrected the image sufficiently, so refinement stops and the image with the highest quality score is output.
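The control flow of this loop can be sketched in a few lines. Here `invert`, `refine`, and `evaluate` are hypothetical callables standing in for the inversion method N, PIG, and the evaluator Q; `max_rounds` is an extra safety cap that the text does not mention.

```python
def iterative_refinement(image, invert, refine, evaluate, theta=0.01, max_rounds=5):
    """Refine `image` until the quality score stops changing by more than theta.

    invert(image)          -> (noise, text_embedding)   # stands in for N
    refine(noise, text)    -> refined image             # stands in for PIG
    evaluate(image)        -> float quality score       # stands in for Q
    Returns the highest-scoring image seen and its score.
    """
    best_image, best_score = image, evaluate(image)
    prev_score = best_score
    current = image
    for _ in range(max_rounds):
        noise, text_embedding = invert(current)
        current = refine(noise, text_embedding)
        score = evaluate(current)
        if score > best_score:
            best_image, best_score = current, score
        # Stop once consecutive rounds differ by less than the threshold.
        if abs(score - prev_score) < theta:
            break
        prev_score = score
    return best_image, best_score
```

Tracking the best image separately from the current one matters because a refinement round is not guaranteed to raise the score.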

Human-object interaction image generation benchmark (dataset + evaluation metrics)

Because no existing models or benchmarks were designed for the HOI image generation task, the authors collected and assembled an HOI image generation benchmark. It comprises a dataset of real HOI images spanning 150 interaction categories and several evaluation metrics tailored to HOI image generation.

The dataset is filtered from the open-source HOI detection dataset HICO-DET [5] to obtain 150 interaction categories covering three scenarios: human-object, human-animal, and human-human. In total, 5k real HOI images were collected as the reference dataset for evaluating the quality of generated HOI images.

To better evaluate generated HOI images, the authors designed several HOI-specific criteria that assess the images from three perspectives: authenticity, plausibility, and fidelity. For authenticity, they introduce the pose distribution distance and the person-object distance distribution to measure how close the generated results are to real images: the closer the two are in a distributional sense, the better the quality. For plausibility, the pose confidence score is computed to measure how credible and reasonable the generated human joints are. For fidelity, an HOI detection task and an image-text retrieval task are used to evaluate the semantic consistency between the generated image and the input text.
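As one small illustration, a pose confidence score could be aggregated as below. The text does not say how scores are aggregated, so averaging per image and then across images, and skipping images with no detected joints, are assumptions of this sketch rather than the benchmark's definition.

```python
import numpy as np

def pose_confidence_score(per_image_scores):
    """Average joint confidence over a set of generated images.

    per_image_scores: list of sequences, one per image, each holding the
    detector confidence of every joint found in that image.
    Assumption: images with no detected joints are skipped, not scored as 0.
    """
    per_image = [float(np.mean(s)) for s in per_image_scores if len(s) > 0]
    if not per_image:
        return 0.0
    return float(np.mean(per_image))
```

A higher value would indicate that a pose detector finds the generated joints more credible.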

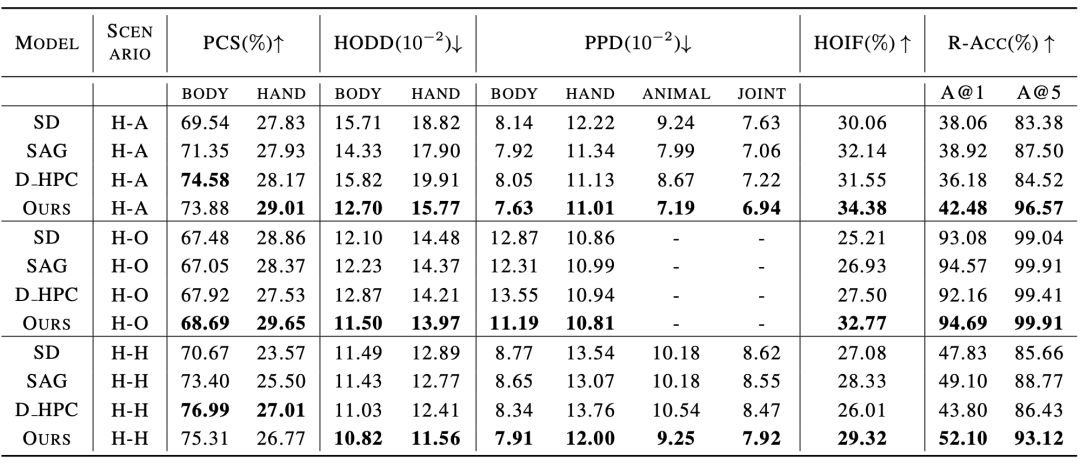

Experimental results

Comparison with existing methods

Table 1 and Table 2 compare performance against existing methods on the HOI image generation metrics and on conventional image generation metrics, respectively.

Table 2: Comparison with existing methods on conventional image generation metrics

The authors also conducted a subjective evaluation, inviting a number of users to rate the results on human body quality, object appearance, interaction semantics, and overall quality. The results indicate that SA-HOI better matches human preferences on every criterion.

Table 3: Subjective evaluation results compared with existing methods

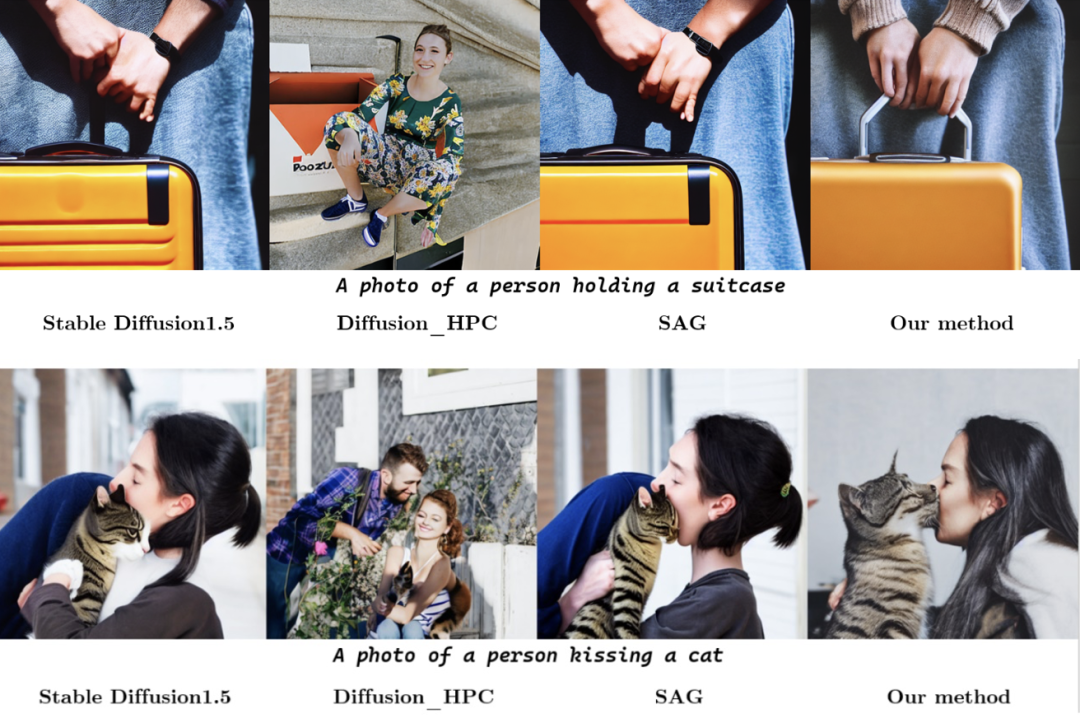

For the qualitative experiments, the figure compares the results of different methods for the same HOI category description. In the upper group of images, the model using the new method accurately expresses the semantics of "kissing", and the generated human poses are more plausible. In the lower group, the method also alleviates the human body distortion present in other methods, and it strengthens the semantics of "taking the suitcase" by generating the suitcase's handle in the region where the hand interacts with the suitcase. The results are therefore better than those of other methods in both human pose and interaction semantics.

References:

[1] Rombach, R., Blattmann, A., Lorenz, D., Esser, P., and Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 10684–10695, June 2022.

[2] HuggingFace, 2022. URL https://huggingface.co/CompVis/stable-diffusion-v1-4.

[3] Chen, K., Wang, J., Pang, J., Cao, Y., Xiong, Y., Li, X., Sun, S., Feng, W., Liu, Z., Xu, J., Zhang, Z., Cheng, D., Zhu, C., Cheng, T., Zhao, Q., Li, B., Lu, X., Zhu, R., Wu, Y., Dai, J., Wang, J., Shi, J., Ouyang, W., Loy, C. C., and Lin, D. MMDetection: Open MMLab detection toolbox and benchmark. arXiv preprint arXiv:1906.07155, 2019.

[4] Mokady, R., Hertz, A., Aberman, K., Pritch, Y., and Cohen-Or, D. Null-text inversion for editing real images using guided diffusion models. arXiv preprint arXiv:2211.09794, 2022.

[5] Chao, Y.-W., Wang, Z., He, Y., Wang, J., and Deng, J. HICO: A benchmark for recognizing human-object interactions in images. In Proceedings of the IEEE International Conference on Computer Vision, 2015.
