Wait a minute...

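We've all been there: we're busy trying to get to production, and choosing a deployment platform involves many factors. Emmmm, yes, we'll go with AWS. Once we've settled on a platform, we can usually weigh our options on factors such as architecture, cost, reliability, scalability, availability, and feasibility. Guess what!!! This tutorial won't cover reliability, scalability, availability, or feasibility, because AWS is trustworthy on all of them. Instead, we'll identify the pros and cons of a few architectures for a Django application.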

Before we continue, let's go over a few prerequisites so that we fully understand what's going on.

:) All the code covered in this tutorial will be available as open source. Feel free to leave your mark.

Before continuing, you should be familiar with the following:

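Caching:

Caching is a technique for temporarily storing frequently accessed data in a fast-access location, which reduces the time needed to retrieve that data. In AWS, caching improves application performance and scalability by minimizing the load on primary databases and APIs, which speeds up response times for end users.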

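We cache to improve efficiency, reduce latency, and lower costs. By storing data closer to the application, caching reduces the frequency of database queries, network traffic, and compute load. This speeds up data retrieval, improves the user experience, and optimizes resource usage, all of which are critical for high-traffic applications.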
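To make the idea concrete, here is a minimal cache-aside sketch in plain Python: look in the cache first, and only on a miss call the slow source and store the result. The `TTLCache` class is a hypothetical in-memory stand-in for a real cache such as ElastiCache/Redis, and `slow_query` is a hypothetical stand-in for a database call; neither is part of the tutorial's project.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after `ttl` seconds (stand-in for Redis)."""

    def __init__(self, ttl: float) -> None:
        self.ttl = ttl
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            # The entry is stale: drop it and report a miss.
            del self._store[key]
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (time.monotonic() + self.ttl, value)


def cached_fetch(cache: TTLCache, key: str, loader: Callable[[], Any]) -> Any:
    """Cache-aside: return the cached value, or load it from the slow source and cache it."""
    value = cache.get(key)
    if value is None:
        value = loader()
        cache.set(key, value)
    return value


calls = 0


def slow_query() -> str:
    """Hypothetical stand-in for an expensive database query."""
    global calls
    calls += 1
    time.sleep(0.1)
    return "report-data"


cache = TTLCache(ttl=60)
print(cached_fetch(cache, "report", slow_query))  # first call hits the "database"
print(cached_fetch(cache, "report", slow_query))  # second call is served from the cache
print(calls)  # 1
```

Only the first call pays the cost of `slow_query`; in a real deployment the dictionary would be replaced by a shared cache so that every application instance benefits.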

EC2:

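EC2 stands for Elastic Compute Cloud: virtual servers running in AWS data centers. In other words, an EC2 instance is a virtual machine that you rent from AWS. With all the available features, you can get one for a very low monthly fee through pay-as-you-go pricing.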

AWS App Runner:

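A fully managed service that simplifies running and scaling web applications and APIs, letting developers deploy quickly from a source code repository or a container image without managing infrastructure.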

Celery and Django Celery:

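Celery is an open-source distributed task queue for real-time processing in Python. Django Celery integrates Celery with the Django framework, enabling asynchronous task execution, periodic tasks, and background job management inside Django applications. The use cases vary: messaging services (SMS, email), scheduled jobs (cron), and background data-processing tasks such as data aggregation, machine learning model training, or file processing.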
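Celery needs a broker (such as Redis) and a running worker process, so as a self-contained illustration of the underlying idea, here is a toy task queue built only on Python's standard library: the caller enqueues a job and gets an id back immediately, while a background worker thread executes it and stores the result. This is not Celery's API; `delay`, `worker`, `task_queue`, and `results` are hypothetical names chosen to mirror Celery's broker and result-backend roles.

```python
import queue
import threading
import uuid
from typing import Any, Callable, Dict, Tuple

# The "broker": a FIFO of (task_id, function, args) messages.
task_queue: "queue.Queue[Tuple[str, Callable[..., Any], tuple]]" = queue.Queue()
# The "result backend": task_id -> return value.
results: Dict[str, Any] = {}


def delay(func: Callable[..., Any], *args: Any) -> str:
    """Enqueue a call and return its id at once (similar in spirit to Celery's task.delay())."""
    task_id = str(uuid.uuid4())
    task_queue.put((task_id, func, args))
    return task_id


def worker() -> None:
    """Consume messages forever, storing each result under its task id."""
    while True:
        task_id, func, args = task_queue.get()
        try:
            results[task_id] = func(*args)
        finally:
            task_queue.task_done()


def add(x: int, y: int) -> int:
    return x + y


threading.Thread(target=worker, daemon=True).start()

task_id = delay(add, 4, 6)  # returns immediately with an id
task_queue.join()           # block until the worker has processed every queued task
print(results[task_id])     # 10
```

In Celery, the queue lives in the broker and the worker runs as a separate process (possibly on another machine), which is what lets the web server return a response without waiting for the job.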

Amazon RDS (Relational Database Service):

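A managed database service that simplifies setting up, operating, and scaling relational databases in the cloud. It supports several database engines, including MySQL, PostgreSQL, Oracle, and SQL Server, and provides automated backups, patching, and high availability, freeing users from database administration tasks.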

Let's look at the structure of the application and at how each deployment setup behaves.

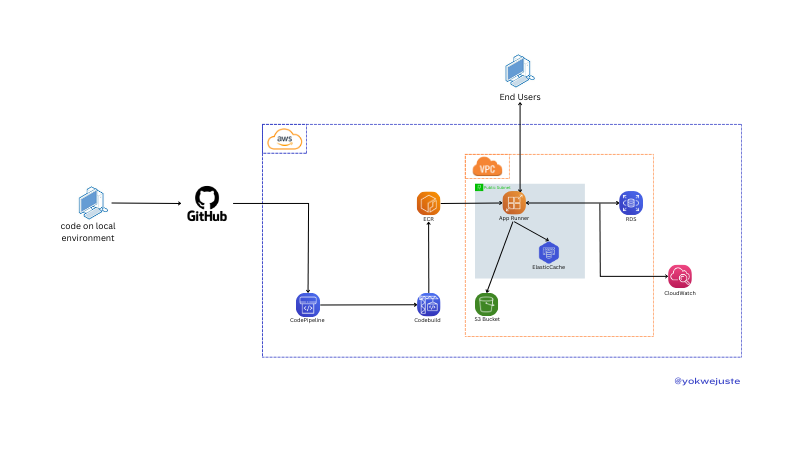

Deployment setup using AWS App Runner (ECR)

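We push the code to GitHub, which triggers a CodePipeline workflow. CodePipeline uses CodeBuild to build a Docker image, which is stored in Elastic Container Registry (ECR) for versioning. This tutorial skips the Virtual Private Cloud (VPC) configuration. We keep track of the application's health by continuously monitoring its logs with CloudWatch. Another benefit is that the project can quickly be configured to use Postgres on AWS RDS, and S3 for static files.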
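As a minimal sketch of the RDS part, assuming the connection details are exposed as environment variables named `DB_NAME`, `DB_USER`, `DB_PASSWORD`, `DB_HOST`, and `DB_PORT` (hypothetical names, not taken from the tutorial's repository), `settings.py` could build Django's `DATABASES` setting as shown below. A PostgreSQL driver such as `psycopg2` must be installed for Django's `postgresql` backend to work.

```python
import os
from typing import Dict, Mapping


def rds_database_settings(env: Mapping[str, str] = os.environ) -> Dict[str, Dict[str, str]]:
    """Build Django's DATABASES setting for PostgreSQL on RDS from (hypothetical) env variables."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env["DB_NAME"],
            "USER": env["DB_USER"],
            "PASSWORD": env["DB_PASSWORD"],
            # The RDS instance endpoint shown in the RDS console.
            "HOST": env["DB_HOST"],
            # PostgreSQL listens on 5432 unless configured otherwise.
            "PORT": env.get("DB_PORT", "5432"),
        }
    }
```

In `settings.py`, this would be used as `DATABASES = rds_database_settings()`. Reading the credentials from the environment keeps them out of the image stored in ECR.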

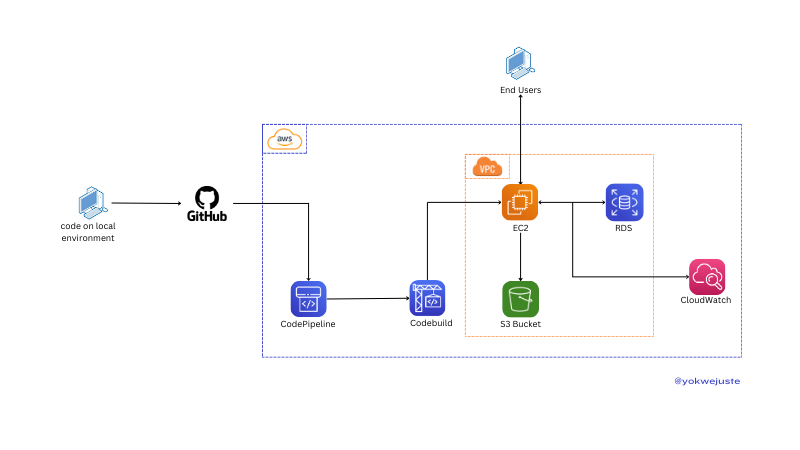

Deployment using AWS EC2 instances

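Using a similar process, omitting version control and ECR, we push our code to GitHub, which triggers CodePipeline; CodePipeline uses CodeBuild to build Docker images that are stored in ECR for versioning. The EC2 instance pulls these images to deploy the application inside a VPC, making it accessible to end users. The application talks to RDS for data storage and to S3 for static files, and it is monitored by CloudWatch. Optionally, we can add SSL to this instance using a tool such as certbot.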

Below is a hypothetical price comparison between EC2 and App Runner, based on a typical usage scenario:

| Service | Component | Cost details | Example monthly cost (estimate) |
|---|---|---|---|
| EC2 | Instance usage | t2.micro (1 vCPU, 1 GB RAM) | $8.50 |
| | Storage | 30 GB general-purpose SSD | $3.00 |
| | Data transfer | 100 GB data transfer | $9.00 |
| | Total | | $20.50 |
| App Runner | Requests | 1 million requests | $5.00 |
| | Compute | 1 vCPU, 2 GB RAM, 30 hours per month | $15.00 |
| | Data transfer | 100 GB data transfer | $9.00 |
| | Total | | $29.00 |
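The totals in the table are plain sums of the per-component estimates. A small script like this one, filled with the same hypothetical figures, makes it easy to plug in numbers for your own region and usage.

```python
# Hypothetical monthly estimates (USD) taken from the price comparison table.
EC2_COSTS = {
    "instance (t2.micro)": 8.50,
    "storage (30 GB SSD)": 3.00,
    "data transfer (100 GB)": 9.00,
}
APP_RUNNER_COSTS = {
    "requests (1M)": 5.00,
    "compute (1 vCPU, 2 GB RAM, 30 h)": 15.00,
    "data transfer (100 GB)": 9.00,
}


def monthly_total(costs: dict) -> float:
    """Sum the per-component estimates, rounded to cents."""
    return round(sum(costs.values()), 2)


ec2_total = monthly_total(EC2_COSTS)
app_runner_total = monthly_total(APP_RUNNER_COSTS)
print(f"EC2:        ${ec2_total:.2f}")                       # EC2:        $20.50
print(f"App Runner: ${app_runner_total:.2f}")                # App Runner: $29.00
print(f"Difference: ${app_runner_total - ec2_total:.2f}")    # Difference: $8.50
```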

Let's quickly summarize how the two options compare in terms of management.

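| Factor | EC2 | App Runner |
|---|---|---|
| Setup | Requires manual setup | Fully managed service |
| Management overhead | High: requires OS updates, security patches, etc. | Low: infrastructure management is abstracted away |
| Configuration | Extensive control over instance configuration | Limited control, focused on simplicity |

| Factor | EC2 | App Runner |
|---|---|---|
| Scaling setup | Auto Scaling groups configured manually | Automatic scaling based on traffic |
| Scaling management | Requires configuration and monitoring | Managed by AWS, scales seamlessly |
| Flexibility | Fine-grained control over scaling policies | Simplified, less flexible |

| Factor | EC2 | App Runner |
|---|---|---|
| Deployment time | Slower: instance provisioning and configuration | Faster: managed deployments |
| Update process | May require downtime or rolling updates | Seamless updates |
| Automation | Requires setting up a deployment pipeline | Simplified, integrated deployment |

| Factor | EC2 | App Runner |
|---|---|---|
| Customization | Extensive: full control over the environment | Limited: managed environment |
| Control | High: choose specific instance types, storage, etc. | Lower: focused on ease of use |
| Flexibility | High: suited to specialized configurations | Simplified for standard web applications |

| Factor | EC2 | App Runner |
|---|---|---|
| Security controls | Fine-grained control over security configuration | Simplified security management |
| Management | Security groups and IAM configured manually | Managed by AWS, less granular control |
| Compliance | Extensive options for compliance settings | Simplified compliance management |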
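The "automatic scaling based on traffic" row can be made a little more concrete. App Runner scales on request concurrency; assuming its default auto scaling configuration (up to 100 concurrent requests per instance, between 1 and 25 instances), the sketch below estimates how many instances a given load would need. It is a simplified model under those assumed defaults, not App Runner's actual scaling logic, so check the auto scaling configuration attached to your own service. On EC2, you would define an equivalent policy yourself in an Auto Scaling group.

```python
import math


def estimate_instances(concurrent_requests: int,
                       max_concurrency: int = 100,
                       min_size: int = 1,
                       max_size: int = 25) -> int:
    """Rough instance count for a concurrency-based autoscaler (simplified model).

    The defaults are assumed to mirror App Runner's default auto scaling
    configuration; adjust them to match your service.
    """
    needed = math.ceil(concurrent_requests / max_concurrency)
    # Clamp to the configured minimum and maximum number of instances.
    return max(min_size, min(needed, max_size))


for load in (0, 250, 10_000):
    print(load, "->", estimate_instances(load))
# 0 -> 1
# 250 -> 3
# 10000 -> 25
```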

Since the comparison does not depend on the project setup itself, we will keep things simple: a basic Django application with Celery configured for AWS.

Run the commands below in order:

```bash
# Create the project directory
mkdir MySchedular && cd MySchedular

# Create an isolated environment for the project dependencies
python -m venv venv && source venv/bin/activate

# Install the dependencies
pip install django celery redis python-dotenv

# Create the project and the app
django-admin startproject my_schedular . && python manage.py startapp crons

# Add a few files to the project skeleton
touch my_schedular/celery.py crons/urls.py crons/tasks.py
```

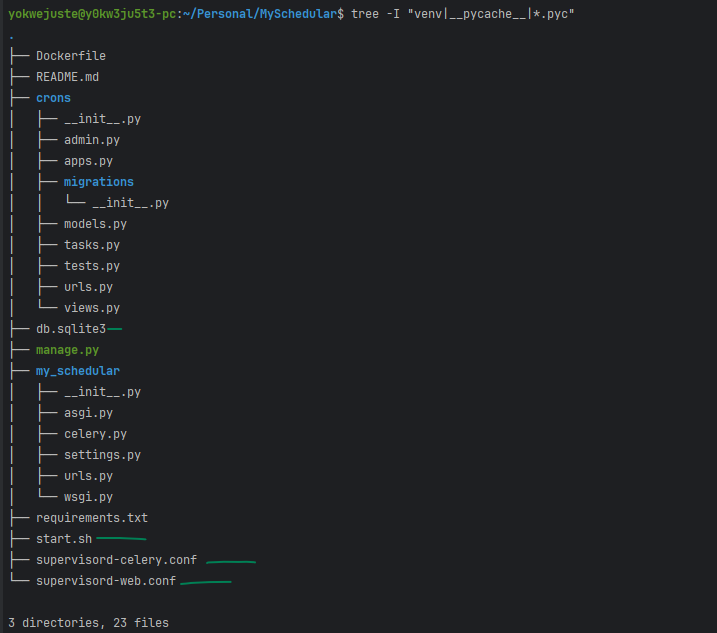

At this point, we can check the project skeleton with:

```bash
tree -I "venv|__pycache__" .
```

We should now have the following structure:

```
.
├── crons
│   ├── __init__.py
│   ├── admin.py
│   ├── apps.py
│   ├── migrations
│   │   └── __init__.py
│   ├── models.py
│   ├── tasks.py        (new)
│   ├── tests.py
│   ├── urls.py         (new)
│   └── views.py
├── manage.py
└── my_schedular
    ├── __init__.py
    ├── asgi.py
    ├── celery.py       (new)
    ├── settings.py
    ├── urls.py
    └── wsgi.py

3 directories, 16 files
```

We can now add a few lines of logic to our app and reach the next milestone of this project.

1- Set up Celery

```python
# my_schedular/celery.py
import os

from celery import Celery

# Point Celery at this project's Django settings module
os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'my_schedular.settings')

app = Celery('my_schedular')

# Read every setting prefixed with CELERY_ from Django's settings.py
app.config_from_object('django.conf:settings', namespace='CELERY')

# Discover tasks.py modules in all installed apps
app.autodiscover_tasks()


@app.task(bind=True)
def debug_task(self):
    print(f'Request: {self.request!r}')
```

2- Override the Celery settings to point to our broker

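```python
# my_schedular/settings.py
import os

# Also add 'crons' to the existing INSTALLED_APPS list so that
# app.autodiscover_tasks() can find crons/tasks.py.

CELERY_BROKER_URL = os.getenv('CELERY_BROKER_URL')
CELERY_RESULT_BACKEND = os.getenv('CELERY_RESULT_BACKEND')
```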

3- Update `__init__.py` so that the Celery app is loaded when Django starts:

```python
# my_schedular/__init__.py
# Import the Celery app when Django starts so that @shared_task uses it
from .celery import app as celery_app

__all__ = ('celery_app',)
```

4- Create the task

```python
# crons/tasks.py
import time

from celery import shared_task


@shared_task
def add(x, y):
    # Simulate a slow job so the asynchronous behavior is visible
    time.sleep(10)
    return x + y
```

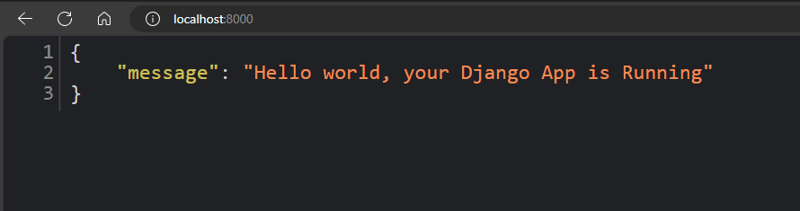

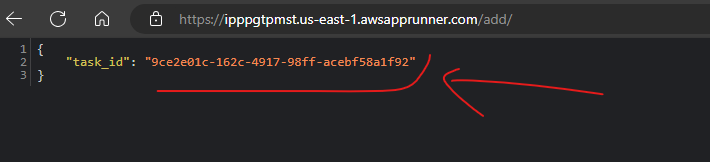

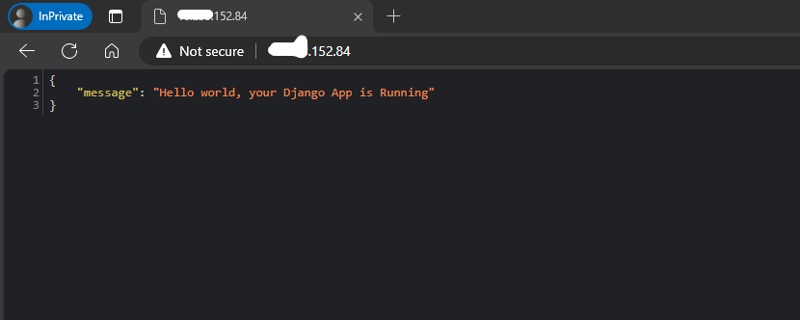

5- Now add the views: simple ones that return JSON responses.

# crons/views.py

from django.http import JsonResponse

from crons.tasks import add

def index(request):

return JsonResponse({"message": "Hello world, your Django App is Running"})

def add_view(request):

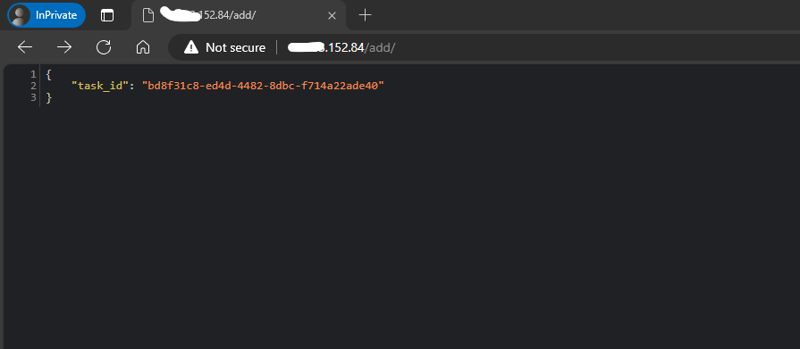

result = add.delay(4, 6)

return JsonResponse({'task_id': result.id})

6- A view is not reachable without a URL route, so let's add one:

```python
# crons/urls.py
from django.urls import path

from crons.views import add_view, index

urlpatterns = [
    path('', index, name='index'),
    path('add/', add_view, name='add'),
]
```

7- Include the app's URLs in the project-level `urls.py`:

```python
# my_schedular/urls.py
from django.contrib import admin
from django.urls import include, path

urlpatterns = [
    path('admin/', admin.site.urls),
    # Route strings must not start with '/'; '' mounts the app at the site root
    path('', include('crons.urls')),
]
```

Adding Environment Variables:

```
# .env
SECRET_KEY=
DEBUG=
CELERY_BROKER_URL=
CELERY_RESULT_BACKEND=
```
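`os.getenv` silently returns `None` when a key is missing or misspelled, and Celery may then quietly fall back to its default broker instead of your Redis instance. If you prefer to fail fast at startup, a small helper like this one could replace the `os.getenv` calls in `settings.py`. The name `require_env` is hypothetical; for the EC2 setup, where Redis runs on the same instance, a value such as `redis://localhost:6379/0` would be typical.

```python
import os
from typing import Mapping


def require_env(name: str, env: Mapping[str, str] = os.environ) -> str:
    """Return the value of `name`, raising a clear error if it is missing or empty."""
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# Example use in settings.py (replacing the os.getenv calls):
# CELERY_BROKER_URL = require_env("CELERY_BROKER_URL")
# CELERY_RESULT_BACKEND = require_env("CELERY_RESULT_BACKEND")
```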

After following all of these steps, we get this output:

Since we are shipping to AWS, we need to configure a few resources.

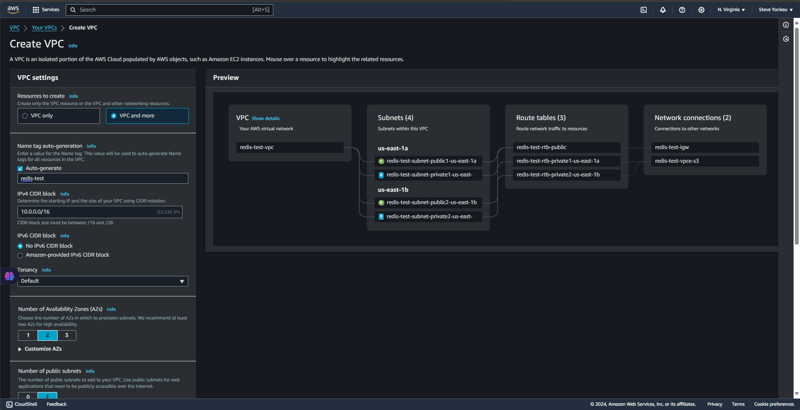

We create an isolated environment and network (a VPC) for secure access and communication between our resources.

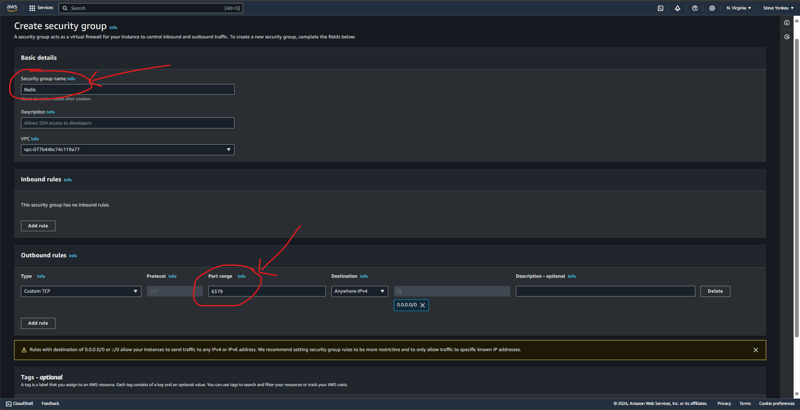

We create a security group in the VPC we just made and add inbound and outbound rules for TCP port 6379 (the Redis port).
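To confirm that the security group rules actually let traffic through, you can try opening a TCP connection to port 6379 from a machine inside the same VPC (ElastiCache endpoints are not reachable from the public internet). Below is a minimal standard-library sketch; `port_is_open` is a hypothetical helper, and the endpoint in the usage note is a placeholder.

```python
import socket


def port_is_open(host: str, port: int = 6379, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `port_is_open("<your-elasticache-endpoint>")` returning `False` usually points to a missing inbound rule or to a resource sitting in a different VPC.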

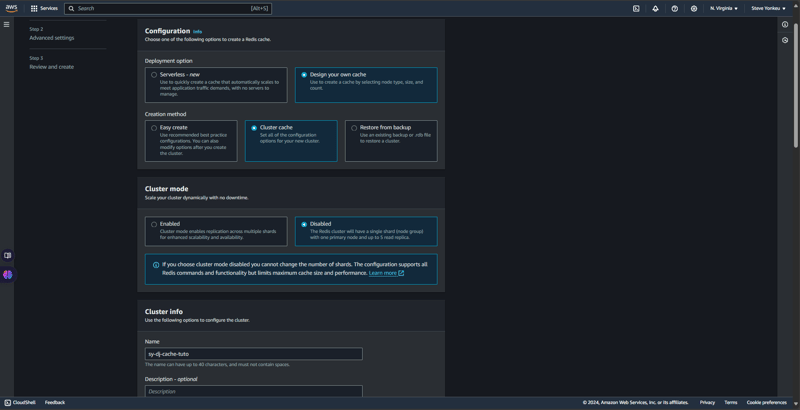

Amazon ElastiCache offers two engines for caching: Redis OSS and Memcached. Redis OSS offers advanced data structures and persistence features, while Memcached is simpler and focuses on high-speed caching of key-value pairs. Unlike Memcached, Redis also supports replication. Back to business: we'll use Redis.

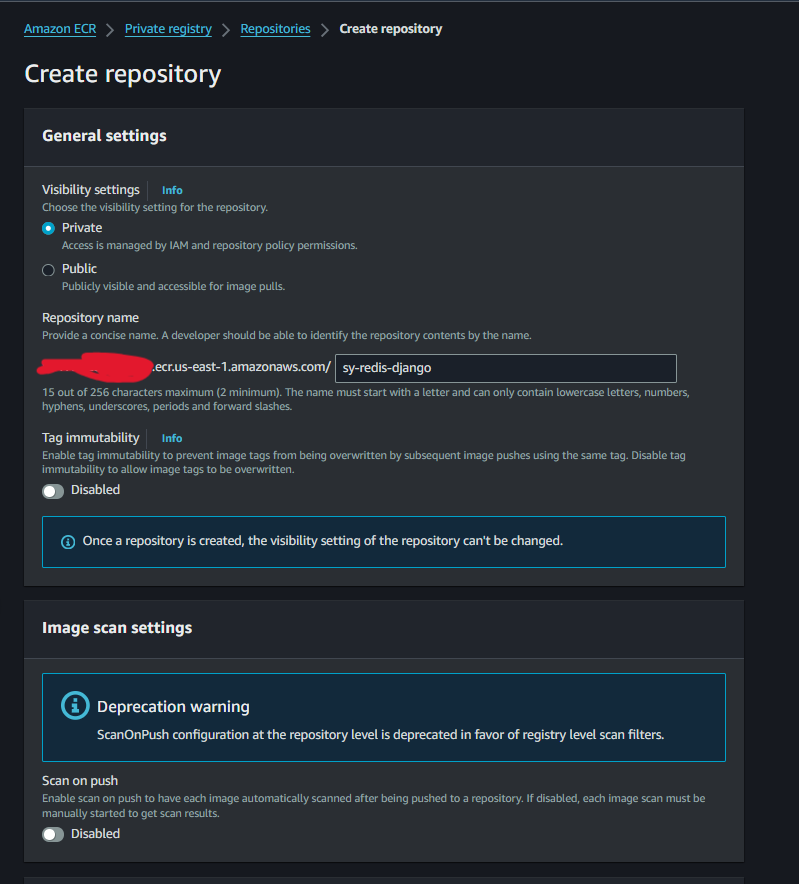

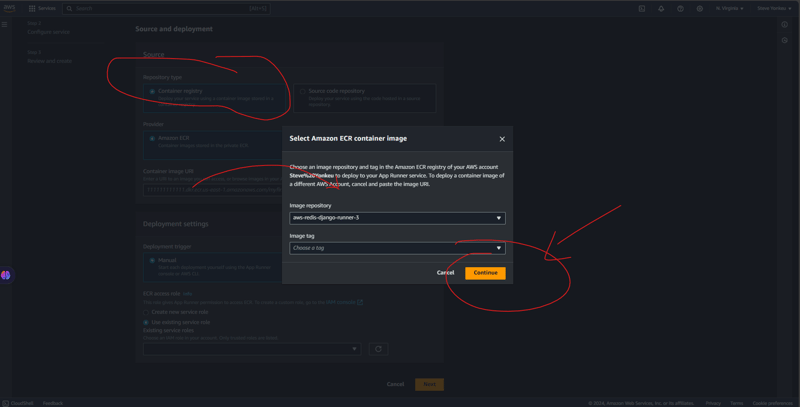

Creating the ECR image is simple and straightforward.

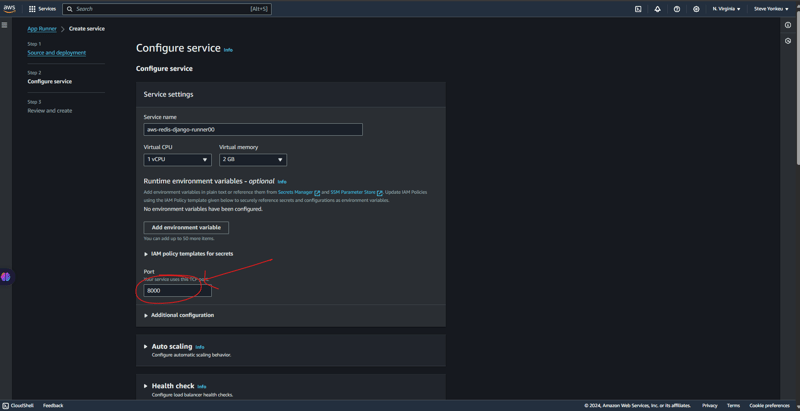

Follow the steps below to get your App Runner service running.

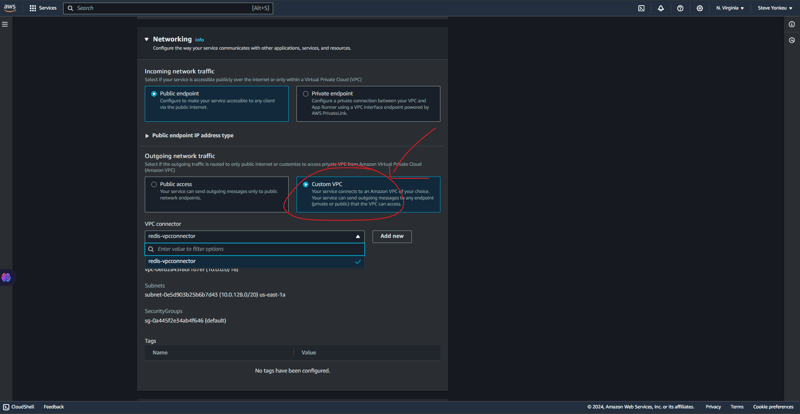

This step is more technical. A VPC is a secure network in which most of our resources live. Since an App Runner service does not run inside our VPC, we need to provide a secure way for it to communicate with those resources, which App Runner supports through a VPC connector.

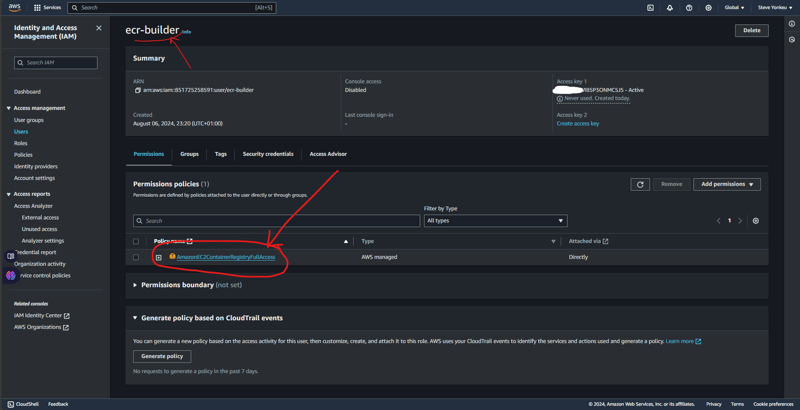

For this tutorial, our workflow needs authorization to access ECR, so we attach the AmazonEC2ContainerRegistryFullAccess permission policy, which allows it to push the image to our ECR repository.

When everything is done, we have this tree structure.

You can find the whole codebase for this tutorial on my GitHub.

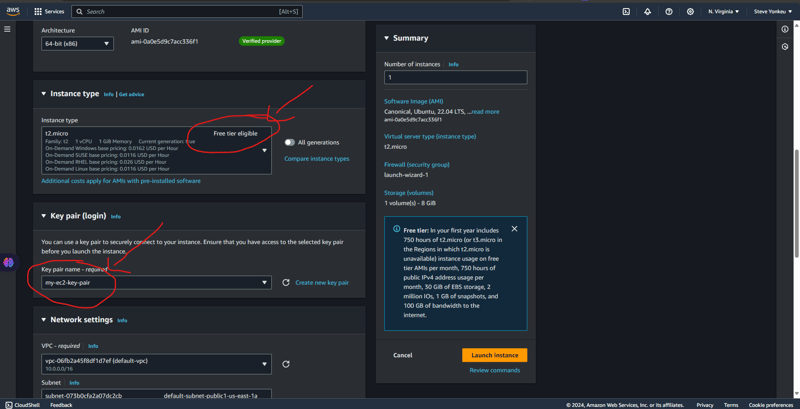

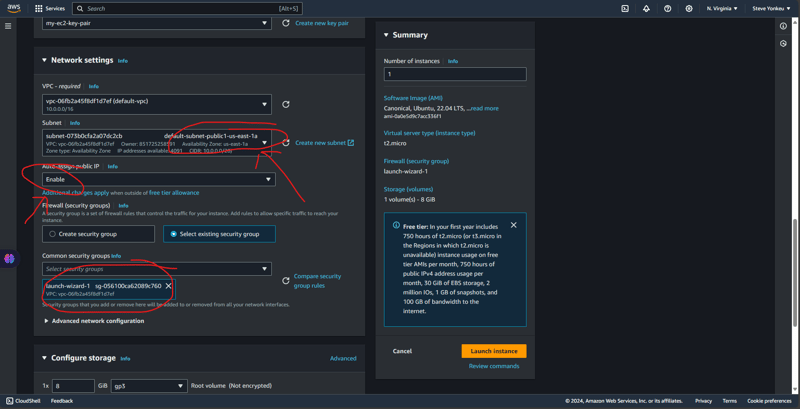

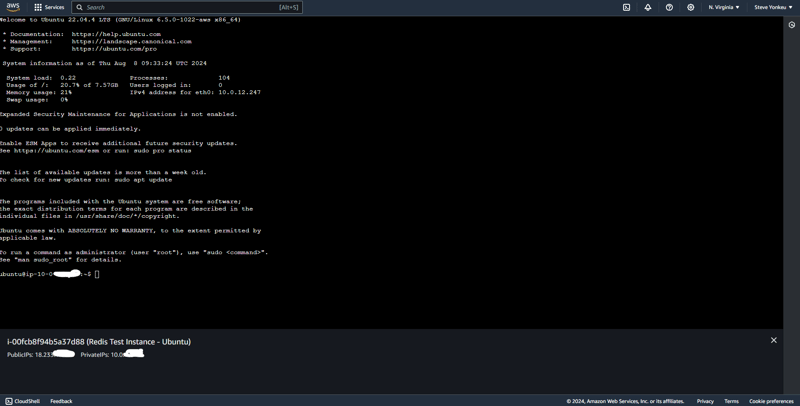

We will use one of the easiest EC2 instances to set up that also has a free tier: an Ubuntu EC2 instance. We reuse the same codebase as above.

![EC2 1](https://dev-to-uploads.s3.amazonaws.com/uploads/articles/rk8waijxkthu1ule91fn.png)

Alternatively, we can set up the security group separately.

Run this script to install necessary dependencies

#!/bin/bash

# Update the package list and upgrade existing packages

sudo apt-get update

sudo apt-get upgrade -y

# Install Python3, pip, and other essentials

sudo apt-get install -y python3-pip python3-dev libpq-dev nginx curl

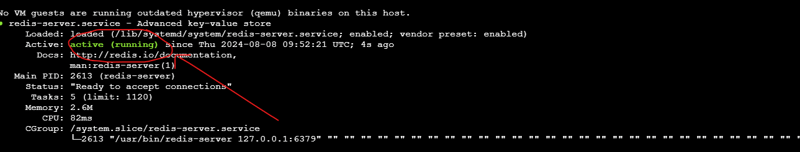

# Install Redis

sudo apt-get install -y redis-server

# Start and enable Redis

sudo systemctl start redis.service

sudo systemctl enable redis.service

# Install Supervisor

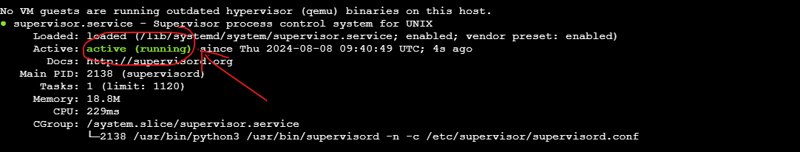

sudo apt-get install -y supervisor

# Install virtualenv

sudo pip3 install virtualenv

# Setup your Django project directory (adjust the path as needed)

cd ~/aws-django-redis

# Create a virtual environment

virtualenv venv

# Activate the virtual environment

source venv/bin/activate

# Install Gunicorn and other requirements

pip install gunicorn

pip install -r requirements.txt

# Create directories for logs if they don't already exist

sudo mkdir -p /var/log/aws-django-redis

sudo chown -R ubuntu:ubuntu /var/log/aws-django-redis

# Supervisor Configuration for Gunicorn

echo "[program:aws-django-redis]

command=$(pwd)/venv/bin/gunicorn --workers 3 --bind 0.0.0.0:8000 my_schedular.wsgi:application

directory=$(pwd)

autostart=true

autorestart=true

stderr_logfile=/var/log/aws-django-redis/gunicorn.err.log

stdout_logfile=/var/log/aws-django-redis/gunicorn.out.log

user=ubuntu

" | sudo tee /etc/supervisor/conf.d/aws-django-redis.conf

# Supervisor Configuration for Celery

echo "[program:celery]

command=$(pwd)/venv/bin/celery -A my_schedular worker --loglevel=info

directory=$(pwd)

autostart=true

autorestart=true

stderr_logfile=/var/log/aws-django-redis/celery.err.log

stdout_logfile=/var/log/aws-django-redis/celery.out.log

user=ubuntu

" | sudo tee /etc/supervisor/conf.d/celery.conf

# Reread and update Supervisor

sudo supervisorctl reread

sudo supervisorctl update

sudo supervisorctl restart all

# Set up Nginx to proxy to Gunicorn

echo "server {

listen 80;

server_name <your_vm_ip>;

location / {

proxy_pass http://127.0.01:8000;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto \$scheme;

}

error_log /var/log/nginx/aws-django-redis_error.log;

access_log /var/log/nginx/aws-django-redis_access.log;

}" | sudo tee /etc/nginx/sites-available/aws-django-redis

# Enable the Nginx site configuration

sudo ln -s /etc/nginx/sites-available/aws-django-redis /etc/nginx/sites-enabled/

sudo rm /etc/nginx/sites-enabled/default

# Test Nginx configuration and restart Nginx

sudo nginx -t

sudo systemctl restart nginx

This setup is available on GitHub on the dev branch; have a look and feel free to open a PR.
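Once either deployment is up, a quick smoke test can confirm that the index view answers with its JSON greeting. This is a minimal sketch using only Python's standard library; `check_app` is a hypothetical helper name, and the base URL is whatever public address your EC2 instance or App Runner service exposes.

```python
import json
import urllib.request


def check_app(base_url: str, timeout: float = 5.0) -> dict:
    """GET the index route and return its decoded JSON body.

    Raises urllib.error.URLError (HTTPError for error status codes) if the
    app is unreachable or unhealthy.
    """
    url = base_url.rstrip("/") + "/"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `check_app("http://<your_vm_ip>")["message"]` should return `"Hello world, your Django App is Running"` when Nginx, Gunicorn, and Django are wired up correctly.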

| Feature / Service | Self-Managed on EC2 (Free Tier) | Fully Managed AWS Services |
|---|---|---|
| EC2 Instance | t2.micro, free for 750 hrs/month | Not applicable |
| Application Hosting | Self-managed Django & Gunicorn | AWS App Runner (automatic scaling) |
| Database | Self-managed PostgreSQL | Amazon RDS (managed relational DB) |
| In-Memory Cache | Redis on the same EC2 | Amazon ElastiCache (Redis) |
| Task Queue | Celery with Redis | AWS managed queues (e.g., SQS) |
| Load Balancer | Nginx (self-setup) | AWS Load Balancer (integrated) |
| Static Files Storage | Served via Nginx | Amazon S3 (highly scalable storage) |
| Log Management | Manual setup (Supervisor, Nginx, Redis) | AWS CloudWatch (logs and monitoring) |
| Security | Manual configuration | AWS Security Groups, IAM roles |
| Scaling | Manual scaling | Automatic scaling |
| Maintenance | Manual updates and patches | Managed by AWS |
| Pricing | Minimal (mostly within the free tier) | Higher due to managed services |

Note: Prices are approximate and can vary based on region and specific AWS pricing changes. Always check the current AWS Pricing page to get the most accurate cost estimates for your specific requirements.

Nahhhhhhhhhh!!! Unfortunately, there is no summary for this one. Yes, go back up for a better understanding.

The learning path is long and might seem difficult, but learning one resource at a time and continuously building on that knowledge is how we reach our goals.

The above is the full content of "Deploying a Django Application: App Runner and EC2s with External Celery". For more, follow other related articles on PHP中文網!